Computer vision enables machines to interpret and understand visual information in the world. This encompasses a variety of tasks, such as image classification, object detection, and semantic segmentation. Innovations in this area have been driven by the development of advanced neural network architectures, particularly convolutional neural networks (CNNs) and, more recently, transformers. These models have demonstrated significant potential in processing visual data. Still, there remains a continuing need for improvements in their ability to balance computational efficiency with capturing both local and global visual contexts.

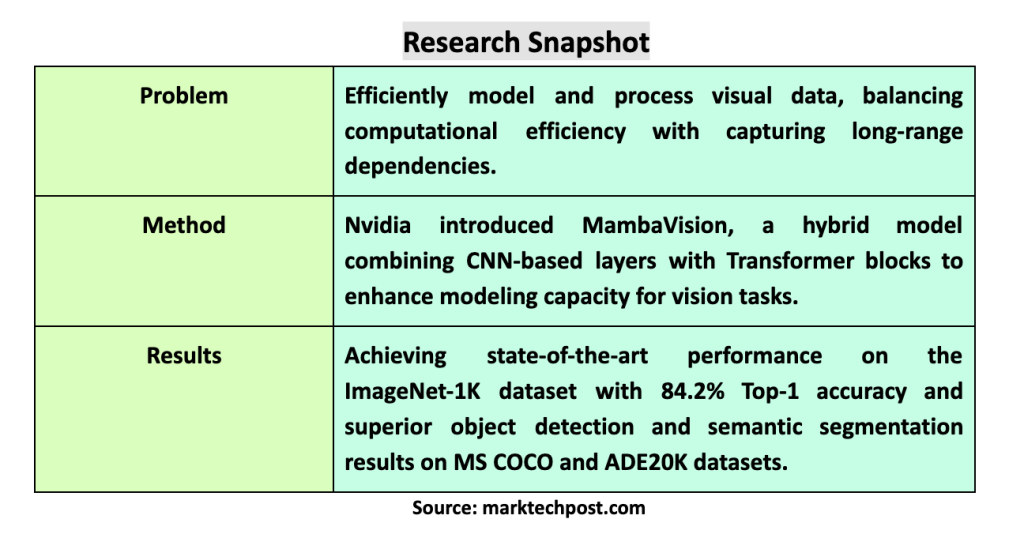

A central challenge in computer vision is the efficient modeling and processing of visual data, which requires understanding both local details and broader contextual information within images. Traditional models often struggle to achieve this balance. CNNs, while efficient at handling local spatial relationships, can miss broader contextual information. Transformers, on the other hand, leverage self-attention mechanisms to capture global context, but they can be computationally intensive because the cost of self-attention grows quadratically with sequence length. This trade-off between efficiency and context-capturing capability has significantly hampered progress in vision models.
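To make the quadratic cost concrete, here is a minimal sketch that counts how many token pairs full self-attention has to score as image resolution grows. The 16-pixel patch size and the helper names `num_tokens` and `attention_pairs` are illustrative assumptions, not details taken from the MambaVision paper.

```python
def num_tokens(height, width, patch):
    """Number of non-overlapping patch tokens for an image (assumed patching scheme)."""
    return (height // patch) * (width // patch)


def attention_pairs(n_tokens):
    """Full self-attention scores every token against every token: n * n pairs."""
    return n_tokens * n_tokens


# Assumed patch size of 16 pixels; the resolutions are arbitrary examples.
for side in (224, 448, 896):
    n = num_tokens(side, side, patch=16)
    print(f"{side}x{side}: {n} tokens, {attention_pairs(n)} attention pairs")
```

Doubling the image side quadruples the number of tokens and multiplies the number of attention pairs by sixteen, which is why applying global self-attention to high-resolution feature maps becomes expensive.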

Existing approaches primarily use CNNs for their effectiveness in handling local spatial relationships. However, these models can only partially capture the broader contextual information needed for more complex vision tasks. Transformers have been applied to vision tasks to address this problem, using self-attention mechanisms to improve understanding of the global context. Despite these advances, both CNNs and transformers have inherent limitations. CNNs can miss the broader context, while transformers are computationally expensive and difficult to train and deploy efficiently.

NVIDIA researchers have presented MambaVision, a new hybrid model that combines the strengths of the Mamba and Transformer architectures. The approach integrates CNN-based layers with Mamba and Transformer blocks to enhance modeling capabilities for vision applications. The MambaVision family includes several model configurations to meet different design criteria and application needs, providing a flexible tool for a range of vision tasks. The introduction of MambaVision represents a significant advancement in the development of hybrid models for machine vision.

MambaVision employs a hierarchical architecture divided into four stages. The initial stages use CNN layers for fast feature extraction, leveraging their efficiency in processing high-resolution features. Later stages incorporate MambaVision and Transformer blocks to capture both short- and long-range dependencies. This design allows the model to handle global context more efficiently than traditional approaches. The redesigned Mamba blocks, paired with self-attention blocks in the later layers, are central to this improvement, as they allow the model to process visual data with greater accuracy and throughput.
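The four-stage layout described above can be sketched as a simple plan of stage types and feature-map sizes. This is only an illustration: the 224-pixel input, the stem stride of 4, the 2x downsampling between stages, and the names `StagePlan` and `plan_stages` are assumptions typical of hierarchical backbones, not values confirmed by this article.

```python
from dataclasses import dataclass


@dataclass
class StagePlan:
    index: int       # stage number, 1 to 4
    kind: str        # "conv" for CNN stages, "hybrid" for Mamba + Transformer stages
    resolution: int  # side length of the square feature map entering the stage


def plan_stages(image_size=224, stem_stride=4):
    """Hypothetical four-stage layout; image size and strides are assumed."""
    plans = []
    side = image_size // stem_stride
    for index in range(1, 5):
        # Early stages are convolutional; later stages mix Mamba and attention blocks.
        kind = "conv" if index <= 2 else "hybrid"
        plans.append(StagePlan(index, kind, side))
        side //= 2  # assumed 2x downsampling between consecutive stages
    return plans


for stage in plan_stages():
    print(stage)
```

Under these assumptions, the convolutional stages operate on the largest feature maps, while the hybrid stages see far fewer spatial positions, which keeps the cost of global mixing manageable.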

MambaVision achieves state-of-the-art results on the ImageNet-1K dataset. For example, the MambaVision-B model achieves a Top-1 accuracy of 84.2%, outperforming other leading models such as ConvNeXt-B and Swin-B, which scored 83.8% and 83.5%, respectively. In addition to its high accuracy, MambaVision demonstrates superior image throughput, with the MambaVision-B model processing images significantly faster than its competitors. On downstream tasks such as object detection and semantic segmentation on the MS COCO and ADE20K datasets, MambaVision outperforms comparably sized backbones, demonstrating its versatility and efficiency. For example, the MambaVision models achieve a Box AP of 46.4 and a Mask AP of 41.8, higher than the scores of models such as ConvNeXt-T and Swin-T.
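For readers unfamiliar with the metric, Top-1 accuracy is the percentage of images for which the highest-scoring predicted class matches the true label. The following is a minimal sketch with made-up toy scores; the function name `top1_accuracy` and the data are illustrative and unrelated to the reported ImageNet-1K results.

```python
def top1_accuracy(scores, labels):
    """Percentage of samples whose highest-scoring class equals the true label."""
    correct = 0
    for row, label in zip(scores, labels):
        # Index of the largest score in this row is the predicted class.
        predicted = max(range(len(row)), key=row.__getitem__)
        correct += predicted == label
    return 100.0 * correct / len(labels)


# Toy example: four samples, two classes (values are invented for illustration).
toy_scores = [[0.1, 0.9], [0.8, 0.2], [0.3, 0.7], [0.6, 0.4]]
toy_labels = [1, 0, 0, 0]
print(top1_accuracy(toy_scores, toy_labels))
```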

A comprehensive ablation study supports these findings and demonstrates the effectiveness of MambaVision’s design choices. The researchers improved accuracy and image throughput by redesigning the Mamba block to make it more suitable for vision tasks. The study explored various integration patterns of the Mamba and Transformer blocks and revealed that incorporating self-attention blocks in the final layers significantly improves the model’s ability to capture global context and long-range spatial dependencies. This design results in richer feature representations and better performance on a range of vision tasks.
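To illustrate what an "integration pattern" means here, the sketch below generates a few possible orderings of Mamba and self-attention blocks within one stage. The pattern names, the even split between block types, and the function `layer_pattern` are hypothetical; the article only states that placing self-attention in the final layers worked best.

```python
def layer_pattern(depth, pattern):
    """Return an ordered list of block types for one stage (hypothetical patterns)."""
    half = depth // 2
    if pattern == "attention_last":
        # Mamba blocks first, self-attention in the final layers.
        return ["mamba"] * (depth - half) + ["attention"] * half
    if pattern == "attention_first":
        return ["attention"] * half + ["mamba"] * (depth - half)
    if pattern == "alternating":
        return ["mamba" if i % 2 == 0 else "attention" for i in range(depth)]
    raise ValueError(f"unknown pattern: {pattern}")


for name in ("attention_last", "attention_first", "alternating"):
    print(name, layer_pattern(4, name))
```

An ablation along these lines would train one model per pattern and compare the resulting accuracy, keeping everything else fixed.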

In conclusion, MambaVision represents a significant advancement in vision modeling by combining CNN layers with Mamba and Transformer blocks in a single hybrid architecture. This approach addresses the limitations of existing models by improving the understanding of both local and global context, leading to superior performance on diverse vision tasks. The results of this study indicate a promising direction for future developments in computer vision and could set a new standard for hybrid vision models.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary engineer and entrepreneur, Asif is committed to harnessing the potential of AI for social good. His most recent initiative is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has over 2 million monthly views, illustrating its popularity among the public.
