This is a guest post co-written with Tamir Rubinsky and Aviad Aranias of Nielsen Sports.

Nielsen Sports shapes the world's media and content as a global leader in insights, data, and audience analytics. Through our understanding of people and their behaviors across all channels and platforms, we provide our clients with independent, actionable intelligence so they can connect and engage with their audiences, now and in the future.

At Nielsen Sports, our mission is to provide our clients (brands and rights holders) with the ability to measure the return on investment (ROI) and effectiveness of a sports sponsorship advertising campaign across all channels, including TV, Internet, social networks and even newspapers, and provide accurate guidance at local, national and international levels.

In this post, we describe how Nielsen Sports modernized a system that runs thousands of different machine learning (ML) models in production by using Amazon SageMaker multi-model endpoints (MMEs), reducing operational and financial costs by 75%.

Challenges with channel video segmentation

Our technology is based on artificial intelligence (AI), specifically computer vision (CV), which allows us to track brand exposure and identify its location accurately. For example, we identify whether the brand is on a banner or on a shirt. Additionally, we identify the location of the brand on the item, such as the top corner of a sign or the sleeve. The following figure shows an example of our labeling system.

To understand our scale and cost challenges, let's look at some representative numbers. Every month, we identify more than 120 million brand impressions across different channels and the system must support the identification of more than 100,000 brands and variations of different brands. We've built one of the world's largest brand impression databases with over 6 billion data points.

Our media evaluation process includes several steps, as illustrated in the following figure:

- First, we record thousands of channels around the world using an international recording system.

- We transmit the content in combination with the broadcast schedule (Electronic Programming Guide) to the next stage, which is the segmentation and separation between the broadcasts of the game itself and other content or advertisements.

- We perform media monitoring, where we add additional metadata to each segment, such as league scores, relevant teams, and players.

- We perform an exposure analysis of brands' visibility and then combine audience information to calculate campaign ratings.

- We deliver the information to the client through a dashboard or analyst reports. Analysts can access the raw data directly or through our data warehouse.

Because we operate at a scale of over a thousand channels and tens of thousands of hours of video per year, we must have a scalable automation system for the analysis process. Our solution automatically segments the broadcast and knows how to isolate relevant video clips from the rest of the content.

We do this using dedicated algorithms and models developed by us to analyze specific channel characteristics.

In total, we run thousands of different models in production to support this mission. This is costly, adds operational overhead, and is slow and error-prone. It took months to bring models with a new architecture to production.

This is where we wanted to innovate and redesign our system.

Cost-effective scaling for CV models using SageMaker MME

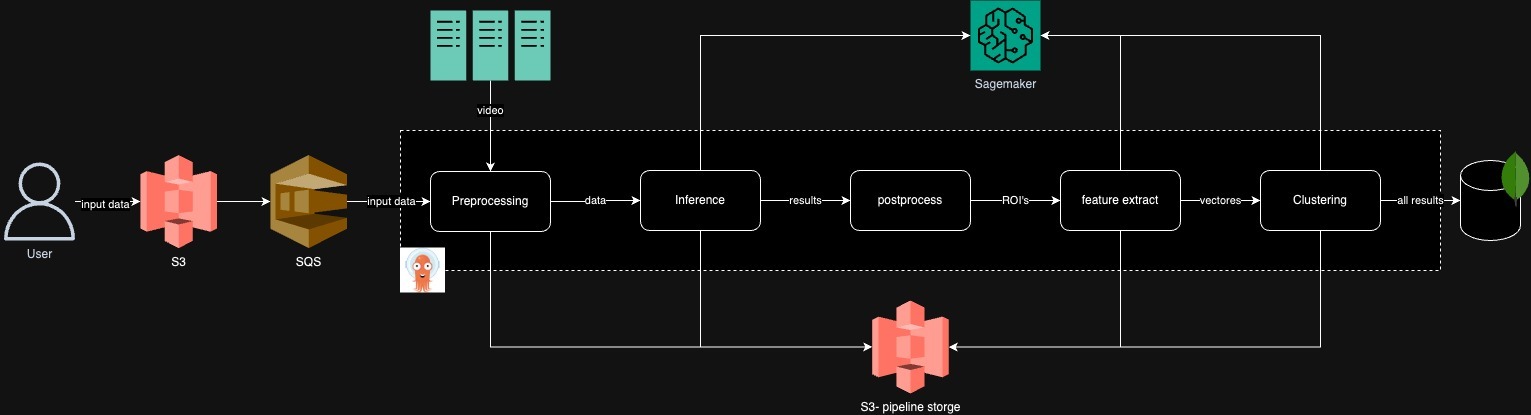

Our legacy video segmentation system was difficult to test, change, and maintain. Some of the challenges include working with an old machine learning framework, interdependencies between components, and a difficult-to-optimize workflow. This is because we relied on RabbitMQ for the pipeline, which was a stateful solution. To debug a component, such as feature extraction, we had to test the entire process.

The following diagram illustrates our legacy architecture.

As part of our analysis, we identified performance bottlenecks, such as running a single model on one machine, which showed low GPU utilization of 30% to 40%. We also discovered inefficient pipeline runs and scheduling algorithms for the models.

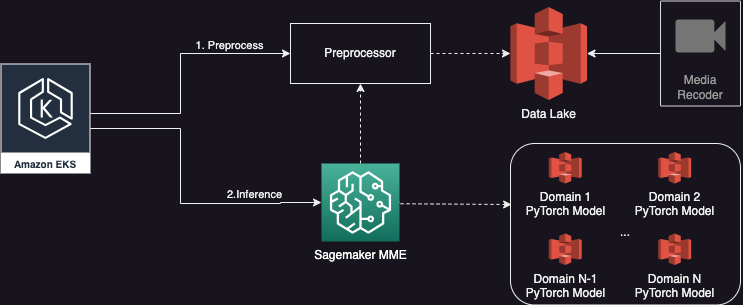

Therefore, we decided to build a new multi-tenant architecture based on SageMaker, which would implement performance optimization improvements, support dynamic batch sizes, and run multiple models simultaneously.
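To illustrate how one multi-model endpoint can serve several models, the following Python sketch sends the same batch to multiple models hosted behind a single endpoint by setting `TargetModel` on each `invoke_endpoint` call. It is a minimal sketch, not our production code: the function name, endpoint name, model artifact names, and JSON payload layout are all hypothetical.

```python
import json
from concurrent.futures import ThreadPoolExecutor


def invoke_models(runtime_client, endpoint_name, model_artifacts, batch):
    """Send one batch to several models hosted on the same multi-model endpoint.

    runtime_client is expected to behave like boto3.client("sagemaker-runtime").
    The {"instances": ...} payload layout is an assumption for illustration.
    """
    body = json.dumps({"instances": batch})

    def call(artifact):
        response = runtime_client.invoke_endpoint(
            EndpointName=endpoint_name,
            TargetModel=artifact,  # selects which model on the endpoint serves the request
            ContentType="application/json",
            Body=body,
        )
        return artifact, json.loads(response["Body"].read())

    # Issue the requests concurrently so the models can run in parallel.
    workers = max(1, len(model_artifacts))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(call, model_artifacts))
```

With boto3 installed and AWS credentials configured, `runtime_client` would be created with `boto3.client("sagemaker-runtime")`, and each entry in `model_artifacts` would be the relative path of a model archive under the endpoint's model location in Amazon S3.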

Each workflow run targets a group of videos. Each video lasts between 30 and 90 minutes and each group has more than five models to run.

Let's look at an example: a video can be 60 minutes long and consist of 3,600 images, and each image needs to be inferred by three different ML models during the first stage. With SageMaker MME, we can run batches of 12 images in parallel and the entire batch completes in less than 2 seconds. On a normal day we have over 20 groups of videos and on a busy weekend day we can have over 100 groups of videos.
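The numbers in this example can be checked with a few lines of Python. The sketch below only restates the arithmetic (3,600 images, batches of 12, three first-stage models). The helper names are hypothetical, and it makes no assumption about how requests are scheduled across the endpoint.

```python
import math


def chunks(items, batch_size):
    """Yield consecutive batches of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def batches_needed(num_images, batch_size, num_models):
    """Number of batch requests needed to run every image through every model."""
    return math.ceil(num_images / batch_size) * num_models


images = list(range(3600))              # stand-ins for the 3,600 images of a 60-minute video
per_model = len(list(chunks(images, 12)))
total = batches_needed(len(images), 12, 3)
print(per_model, total)
```

For one 60-minute video, this gives 300 batches per model and 900 batch requests across the three first-stage models.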

The following diagram shows our new simplified architecture using a SageMaker MME.

Results

With the new architecture, we achieved many of the desired results and some unseen advantages over the previous architecture:

- Faster execution time – By increasing the batch size (12 videos in parallel) and running multiple models simultaneously (five models in parallel), we reduced the overall runtime of our pipeline by 33%, from 1 hour to 40 minutes.

- Improved infrastructure – With SageMaker, we upgraded our existing infrastructure and are now using newer AWS instances with newer GPUs, such as g5.xlarge. One of the biggest benefits of the change is the immediate performance improvement through the use of TorchScript and CUDA optimizations.

- Optimized use of infrastructure – With a single endpoint that can host multiple models, we reduce both the number of endpoints and the number of machines we need to maintain, and we increase the utilization of each machine and its GPU. For a specific task with five videos, we now use only five g5 instances, which gives us a 75% cost benefit over the previous solution. For a typical daytime workload, we use a single endpoint with a single g5.xlarge instance at over 80% GPU utilization. In comparison, the previous solution had less than 40% utilization.

- Greater agility and productivity – Using SageMaker allowed us to spend less time migrating models and more time improving our core models and algorithms. This has increased the productivity of our engineering and data science teams. We can now research and deploy a new ML model in less than 7 days, instead of the month it took previously. This is a 75% improvement in speed and planning.

- Better quality and trust – With SageMaker's A/B testing capabilities, we can roll out our models incrementally and roll back safely. The faster life cycle to production also increased the accuracy and results of our ML models.
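As an illustration of the incremental rollout mentioned in the last item, SageMaker endpoints can split traffic between production variants according to their relative weights. The following Python sketch builds a `ProductionVariants` list that could be passed to the `create_endpoint_config` API. The helper, the variant names, the model names, and the 10% share are hypothetical and do not describe our actual configuration.

```python
def ab_variants(current_model, candidate_model, candidate_share,
                instance_type="ml.g5.xlarge"):
    """Build two production variants whose weights split traffic for an A/B test.

    candidate_share is the fraction of requests (0.0 to 1.0) sent to the candidate.
    """
    if not 0.0 <= candidate_share <= 1.0:
        raise ValueError("candidate_share must be between 0.0 and 1.0")

    def variant(name, model_name, weight):
        return {
            "VariantName": name,
            "ModelName": model_name,
            "InstanceType": instance_type,
            "InitialInstanceCount": 1,
            "InitialVariantWeight": weight,  # weights are relative to each other
        }

    return [
        variant("current", current_model, 1.0 - candidate_share),
        variant("candidate", candidate_model, candidate_share),
    ]


# Hypothetical model names: send 10% of traffic to the new model.
variants = ab_variants("segmentation-v1", "segmentation-v2", 0.1)
```

Increasing the candidate's weight shifts more traffic to it, and setting the weight back to 0 (for example, with `update_endpoint_weights_and_capacities`) is one way to roll back without deleting the variant.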

The following figure shows our GPU utilization with the previous architecture (30–40% GPU utilization).

The following figure shows our GPU utilization with the new simplified architecture (90% GPU utilization).

Conclusion

In this post, we shared how Nielsen Sports modernized a system running thousands of different models in production by using SageMaker MMEs, reducing its operational and financial costs by 75%.

About the authors

Eitan Sela is a Solutions Architect specializing in generative AI and machine learning at Amazon Web Services. He works with AWS customers to provide guidance and technical support, helping them build and operate generative AI and machine learning solutions on AWS. In his free time, Eitan likes to run and read the latest articles on machine learning.

Gal Goldman is a Senior Software Engineer and Senior Business Solutions Architect at AWS with a passion for cutting-edge solutions. He specializes in distributed machine learning services and solutions and has developed many of them. Gal is also focused on helping AWS customers accelerate and overcome their engineering and generative AI challenges.

Tal Panchek is a Senior Business Development Manager for artificial intelligence and machine learning at Amazon Web Services. As a BD Specialist, she is responsible for increasing the adoption, utilization, and revenue of AWS services. She brings together customer and industry needs and partners with AWS product teams to innovate, develop, and deliver AWS solutions.

Tamir Rubinsky leads Global Research and Development Engineering at Nielsen Sports, bringing extensive experience in creating innovative products and managing high-performance teams. His work has transformed sports sponsorship media evaluation through innovative solutions powered by artificial intelligence.

Aviad Aranias is an MLOps team leader and sports analytics architect at Nielsen who specializes in building complex pipelines to analyze video of sporting events across numerous channels. He excels at building and deploying deep learning models to handle large-scale data efficiently. In his free time he likes to bake delicious Neapolitan pizzas.
