Multimodal machine learning is a cutting-edge field of research that combines multiple types of data, such as text, images, and audio, to build more complete and accurate models. By integrating these modalities, researchers aim to improve a model's ability to understand and reason about complex tasks. Integration lets a model leverage the strengths of each modality, improving performance in applications ranging from image recognition and natural language processing (NLP) to video analytics.

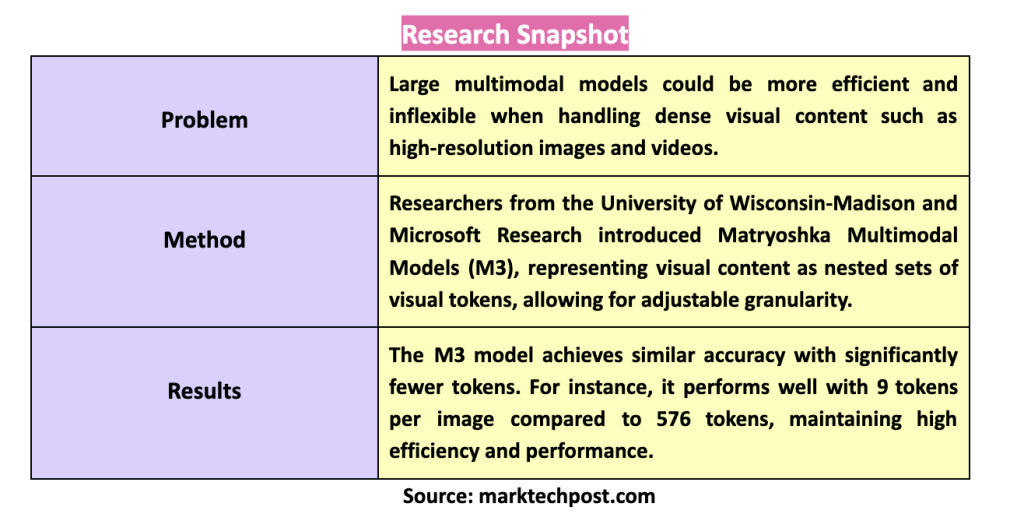

A key problem in multimodal machine learning is the inefficiency and inflexibility of large multimodal models (LMMs) when handling high-resolution images and videos. Conventional LMMs such as LLaVA represent every image with a fixed number of visual tokens, which often produces an excessive number of tokens for dense visual content. This raises computational costs and can degrade performance by flooding the model with more information than it needs. There is therefore a pressing need for methods that adjust the number of tokens to the complexity of the visual input.


Existing solutions to this problem, such as token pruning and merging, try to reduce the number of visual tokens fed into the language model. However, these methods typically produce a fixed-length output for each image, leaving no way to trade off information density against efficiency. They cannot adapt to different levels of visual complexity, which matters in applications such as video analysis, where visual content can vary significantly from frame to frame.

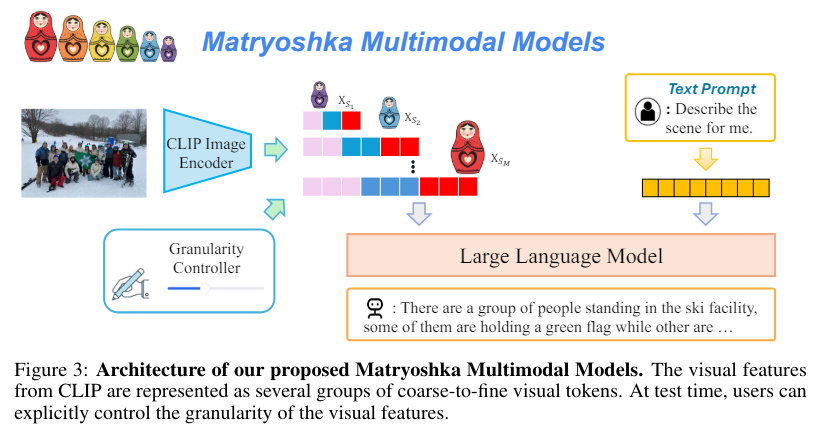

Researchers from the University of Wisconsin-Madison and Microsoft Research have presented Matryoshka Multimodal Models (M3). Inspired by Matryoshka (nesting) dolls, M3 represents visual content as nested sets of visual tokens that capture information at multiple granularities. This gives explicit control over visual granularity at inference time, so the number of tokens can be matched to the anticipated complexity of the content: an image with dense details can be represented with more tokens, while a simpler image can use fewer.

The M3 model achieves this by encoding images into multiple sets of visual tokens at increasing levels of granularity, from coarse to fine. During training, the model learns to derive coarser tokens from finer ones, so that visual information is captured efficiently at every level. Specifically, the model uses five scales of 1, 9, 36, 144, and 576 tokens, each providing a progressively finer representation of the visual content. This hierarchical structure preserves spatial information while letting the level of detail adapt to the requirements of the task.
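To make the nesting concrete, here is a minimal sketch of how the five scales could be produced from one grid of visual tokens. It assumes the 576 finest tokens form a 24x24 spatial grid (as with the CLIP ViT-L/14 encoder at 336-pixel resolution used in LLaVA-1.5, where 336 / 14 = 24 patches per side) and that coarser scales come from 2D average pooling over that grid. The sketch uses fixed pooling rather than any learned operation, and the function names `avg_pool_grid` and `matryoshka_tokens` are hypothetical, not taken from the M3 code release.

```python
from typing import Dict, List

Vector = List[float]
Grid = List[List[Vector]]  # side x side grid of D-dimensional token vectors


def avg_pool_grid(grid: Grid, out_side: int) -> Grid:
    """Average-pool a square token grid down to out_side x out_side.

    Assumes the input side length is divisible by out_side.
    """
    side = len(grid)
    assert side % out_side == 0, "grid side must be divisible by out_side"
    k = side // out_side  # pooling window size and stride
    dim = len(grid[0][0])
    pooled: Grid = []
    for i in range(out_side):
        row: List[Vector] = []
        for j in range(out_side):
            # Sum the k x k block of fine tokens, then divide to get the mean.
            acc = [0.0] * dim
            for di in range(k):
                for dj in range(k):
                    vec = grid[i * k + di][j * k + dj]
                    for d in range(dim):
                        acc[d] += vec[d]
            row.append([v / (k * k) for v in acc])
        pooled.append(row)
    return pooled


def matryoshka_tokens(grid: Grid, sides=(24, 12, 6, 3, 1)) -> Dict[int, List[Vector]]:
    """Return {num_tokens: flattened token list} for each nested scale."""
    scales: Dict[int, List[Vector]] = {}
    for s in sides:
        pooled = avg_pool_grid(grid, s)
        scales[s * s] = [tok for row in pooled for tok in row]
    return scales


# Usage: a toy 24x24 grid of 2-dimensional "tokens" holding their own coordinates.
grid = [[[float(i), float(j)] for j in range(24)] for i in range(24)]
scales = matryoshka_tokens(grid)
print({n: len(toks) for n, toks in scales.items()})
```

Because each coarse token is the mean of a square block of fine tokens, the scales stay spatially aligned, and the single-token scale is simply the average of all 576 tokens.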

Performance evaluations of the M3 model demonstrate its advantages. On COCO-style benchmarks, the model matched the accuracy obtained with all 576 tokens while using only about 9 tokens per image. This is a substantial gain in efficiency without a meaningful loss of accuracy. The M3 model also performed well on other benchmarks, maintaining high performance even with a drastically reduced number of tokens. For example, with 9 tokens its accuracy was comparable to that of Qwen-VL-Chat using 256 tokens, and in some cases M3 reached similar performance with only a single token.
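The efficiency gain in the COCO result can be checked with quick arithmetic. The sketch below compares 576 and 9 visual tokens; the 100-token text prompt is a hypothetical value, and treating attention cost as proportional to the square of the total sequence length is a simplification that ignores MLP layers and other per-token costs. The helper name `token_savings` is hypothetical.

```python
def token_savings(full_tokens: int, reduced_tokens: int, text_tokens: int) -> dict:
    """Back-of-envelope comparison of two visual-token budgets.

    Assumes self-attention cost grows with the square of the total sequence
    length (visual + text tokens) and ignores all other costs.
    """
    visual_ratio = full_tokens / reduced_tokens
    full_seq = full_tokens + text_tokens
    reduced_seq = reduced_tokens + text_tokens
    attention_ratio = full_seq ** 2 / reduced_seq ** 2
    return {
        "visual_ratio": visual_ratio,
        "full_seq": full_seq,
        "reduced_seq": reduced_seq,
        "attention_ratio": attention_ratio,
    }


# Usage: 576 vs. 9 visual tokens with a hypothetical 100-token prompt.
stats = token_savings(576, 9, text_tokens=100)
print(stats)
```

Under these assumptions the visual token count drops 64-fold, the sequence shrinks from 676 to 109 tokens, and attention cost falls by roughly 38x; the text prompt dilutes the gain, which is why the overall speedup is smaller than the raw token ratio.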

By allowing flexible control over the number of visual tokens, the model can adapt to different computational and memory constraints at deployment. This flexibility is particularly valuable in real-world applications where resources may be limited. The M3 approach also provides a framework for evaluating the visual complexity of datasets, helping researchers understand the granularity needed for various tasks. For example, natural-scene benchmarks such as COCO can be handled with around 9 tokens, while dense visual perception tasks such as document comprehension or OCR require more, from 144 to 576 tokens.
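One way to act on this guidance at inference time is a small selector that picks a token scale from a task type and a token budget. The sketch below is illustrative only: the `MIN_TOKENS` table is a hypothetical mapping loosely based on the figures above (about 9 tokens for natural scenes, 144 to 576 for documents and OCR), and `choose_scale` is not part of any published M3 interface.

```python
from typing import Optional

# Nested M3 scales from coarse to fine (token counts stated in the article).
SCALES = (1, 9, 36, 144, 576)

# Hypothetical per-task minimum scales, loosely following the article's
# guidance. These numbers are illustrative, not taken from the paper's code.
MIN_TOKENS = {
    "natural_scene": 9,
    "document": 144,
    "ocr": 576,
}


def choose_scale(task: str, max_tokens: int) -> Optional[int]:
    """Return the smallest nested scale that meets the task's minimum and
    fits within max_tokens, or None if no scale satisfies both."""
    # Unknown task types fall back to the finest scale as a conservative default.
    required = MIN_TOKENS.get(task, SCALES[-1])
    for s in SCALES:
        if required <= s <= max_tokens:
            return s
    return None


# Usage
print(choose_scale("natural_scene", 576))  # smallest adequate scale
print(choose_scale("document", 100))       # budget too tight for this task
```

Picking the smallest qualifying scale keeps compute to a minimum; a caller that prefers accuracy could instead take the largest scale that fits the budget, since every scale comes from the same nested representation.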

In conclusion, Matryoshka Multimodal Models (M3) address the inefficiencies of current LMMs with a flexible, adaptive method for representing visual content, laying the foundation for more efficient multimodal systems. Because the number of visual tokens can be adjusted to content complexity, the model strikes a better balance between performance and computational cost. This approach improves the understanding and reasoning capabilities of multimodal models and opens new possibilities for their use in diverse and resource-constrained environments.

Sana Hassan, a consulting intern at Marktechpost and a dual-degree student at IIT Madras, is passionate about applying technology and artificial intelligence to address real-world challenges. With a strong interest in solving practical problems, she brings a fresh perspective to the intersection of AI and real-life solutions.
