Amazon Interactive Video Service (Amazon IVS) is a managed live streaming solution designed for quick and straightforward setup. It lets you build interactive video experiences and handles interactive video content from ingestion to delivery.

With the increased usage of live streaming, effective content moderation becomes even more crucial. User-generated content (UGC) presents complex challenges for safety. Many companies rely on human moderators to monitor video streams, which is time-consuming, error-prone, and doesn’t scale as the business grows. An automated moderation solution that supports a human in the loop (HITL) is increasingly needed.

Amazon Rekognition Content Moderation, a capability of Amazon Rekognition, automates and streamlines image and video moderation workflows without requiring machine learning (ML) experience. In this post, we explain the common practice of live stream visual moderation with a solution that uses the Amazon Rekognition Image API to moderate live streams. You can deploy this solution to your AWS account using the AWS Cloud Development Kit (AWS CDK) package available in our GitHub repo.

Moderate live stream visual content

The most common approach for UGC live stream visual moderation involves sampling images from the stream and using image moderation to receive near-real-time results. Live stream platforms can use flexible rules to moderate visual content. For instance, platforms with younger audiences might have strict rules about adult content and certain products, whereas others might focus on hate symbols. These platforms establish different rules to match their policies effectively. A hybrid process that combines human and automatic review is a common design approach. Certain streams will be stopped automatically, but human moderators will also assess whether a stream violates platform policies and should be deactivated.

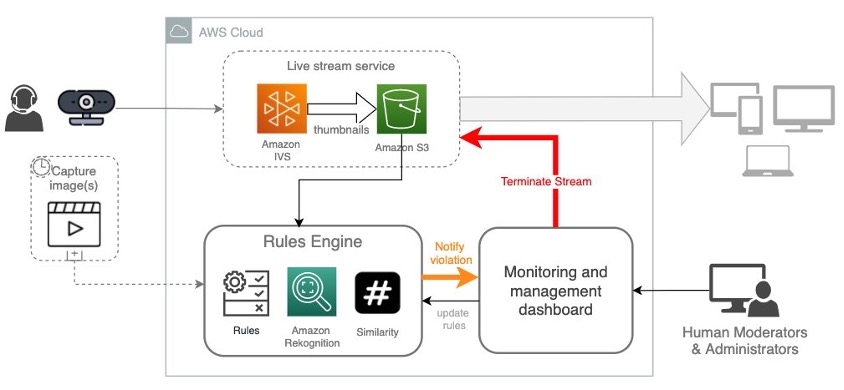

The following diagram illustrates the conceptual workflow of a near-real-time moderation system, designed with loose coupling to the live stream system.

The workflow contains the following steps:

- The live stream service (or the client app) samples image frames from video streams based on a specific interval.

- A rules engine evaluates moderation guidelines, determining the frequency of stream sampling and the applicable moderation categories, all within predefined policies. This process involves the utilization of both ML and non-ML algorithms.

- The rules engine alerts human moderators upon detecting violations in the video streams.

- Human moderators assess the result and, if the stream violates platform policy, deactivate the live stream.

Moderating UGC live streams is distinct from classic video moderation in media. It caters to diverse regulations. How frequently images are sampled from video frames for moderation is typically determined by the platform’s Trust & Safety policy and the service-level agreement (SLA). For instance, if a live stream platform aims to stop channels within 3 minutes for policy violations, a practical approach is to sample every 1–2 minutes, allowing time for human moderators to verify and take action. Some platforms require flexible moderation frequency control. For instance, highly reputable streamers may need less moderation, whereas new ones require closer attention. This also enables cost-optimization by reducing sampling frequency.
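The arithmetic behind the 3-minute example can be sketched as follows. This is a minimal illustration, not part of the solution: the function name is hypothetical, and the 60-second human review time is an assumption chosen for the example. The worst case assumed here is that a violation begins right after a frame is sampled, so it waits a full interval before the next sample, followed by processing and review.

```python
def max_sampling_interval(sla_seconds, review_seconds, processing_seconds=0):
    """Largest sampling interval (in seconds) that still meets the SLA in the worst case.

    Worst case: the violation starts immediately after a sample is taken, so
    total delay = sampling interval + processing time + human review time.
    """
    budget = sla_seconds - review_seconds - processing_seconds
    if budget <= 0:
        raise ValueError("The SLA leaves no time for sampling")
    return budget


# 3-minute SLA, an assumed 60 s for human review, an assumed 2 s for automated processing
print(max_sampling_interval(sla_seconds=180, review_seconds=60, processing_seconds=2))
```

Under these assumptions the interval must stay below about 2 minutes, which is consistent with sampling every 1–2 minutes.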

Cost is an important consideration in any live stream moderation solution. As UGC live stream platforms rapidly expand, moderating concurrent streams at a high frequency can raise cost concerns. The solution presented in this post is designed to optimize cost by letting you define moderation rules that customize the sample frequency and ignore similar image frames, among other techniques.

Recording Amazon IVS stream content to Amazon S3

Amazon IVS offers native solutions for recording stream content to an Amazon Simple Storage Service (Amazon S3) bucket and generating thumbnails—image frames from a video stream. It generates thumbnails every 60 seconds by default and provides users the option to customize the image quality and frequency. Using the AWS Management Console, you can create a recording configuration and link it to an Amazon IVS channel. When a recording configuration is associated with a channel, the channel’s live streams are automatically recorded to the specified S3 bucket.
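The same setup can also be scripted. The following is a minimal sketch using the Boto3 `ivs` client's `create_recording_configuration` call; the helper names, the configuration name, and the bucket name are hypothetical, and the recording configuration still has to be attached to a channel afterward. The client is passed in (or created lazily) so the request can be inspected without AWS credentials.

```python
def build_recording_config_request(name, bucket_name, interval_seconds=60):
    """Build keyword arguments for the IVS CreateRecordingConfiguration call."""
    return {
        "name": name,
        "destinationConfiguration": {"s3": {"bucketName": bucket_name}},
        "thumbnailConfiguration": {
            "recordingMode": "INTERVAL",                # generate thumbnails periodically
            "targetIntervalSeconds": interval_seconds,  # 60 seconds is the default
        },
    }


def create_recording_config(name, bucket_name, interval_seconds=60, client=None):
    """Create the recording configuration and return its ARN."""
    if client is None:
        import boto3  # imported lazily so the request builder works without boto3
        client = boto3.client("ivs")
    request = build_recording_config_request(name, bucket_name, interval_seconds)
    response = client.create_recording_configuration(**request)
    return response["recordingConfiguration"]["arn"]


# Hypothetical names, for illustration only
print(build_recording_config_request("moderation-recording", "my-ivs-thumbnails", 30))
```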

There are no Amazon IVS charges for using the auto-record to Amazon S3 feature or for writing to Amazon S3. There are charges for Amazon S3 storage, Amazon S3 API calls that Amazon IVS makes on behalf of the customer, and serving the stored video to viewers. For details about Amazon IVS costs, refer to Costs (Low-Latency Streaming).

Amazon Rekognition Moderation APIs

In this solution, we use the Amazon Rekognition DetectModerationLabels API to moderate Amazon IVS thumbnails in near-real time. Amazon Rekognition Content Moderation provides pre-trained APIs to analyze a wide range of inappropriate or offensive content, such as violence, nudity, hate symbols, and more. For a comprehensive list of Amazon Rekognition Content Moderation taxonomies, refer to Moderating content.

The following code snippet demonstrates how to call the Amazon Rekognition DetectModerationLabels API to moderate images within an AWS Lambda function using the Python Boto3 library:
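A minimal sketch of such a call is shown here. It assumes the Lambda function is invoked by an S3 "object created" notification and that a 50% minimum confidence is acceptable; the function names and the threshold are illustrative and not taken from the sample repository.

```python
from urllib.parse import unquote_plus


def moderate_image(bucket, key, min_confidence=50.0, client=None):
    """Run Amazon Rekognition image moderation on an object stored in S3."""
    if client is None:
        import boto3  # imported lazily; a stub client can be injected for local testing
        client = boto3.client("rekognition")
    response = client.detect_moderation_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,  # labels below this confidence are not returned
    )
    return response["ModerationLabels"]


def lambda_handler(event, context):
    # Assumption: the event is an S3 notification containing one record.
    record = event["Records"][0]["s3"]
    bucket = record["bucket"]["name"]
    key = unquote_plus(record["object"]["key"])  # object keys arrive URL-encoded
    labels = moderate_image(bucket, key)
    return {"bucket": bucket, "key": key, "labels": labels}
```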

The following is an example response from the Amazon Rekognition Image Moderation API:
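As an illustration of the general shape of a response, the sketch below uses a hand-written dictionary. The values (confidence scores and model version) are made up for the example and are not output captured from the service; the `summarize` helper is hypothetical.

```python
# Hand-written illustration of the response shape; values are NOT real service output.
example_response = {
    "ModerationLabels": [
        {"Name": "Alcohol", "ParentName": "", "Confidence": 97.5},
        {"Name": "Alcoholic Beverages", "ParentName": "Alcohol", "Confidence": 97.5},
    ],
    "ModerationModelVersion": "7.0",
}


def summarize(response):
    """Return (name, parent, confidence) tuples; parent is None for top-level labels."""
    return [
        (label["Name"], label["ParentName"] or None, label["Confidence"])
        for label in response["ModerationLabels"]
    ]


for name, parent, confidence in summarize(example_response):
    print(f"{name} (parent: {parent}) confidence={confidence}")
```

Each label carries its own confidence score and the name of its parent category, so rules can target either a broad category or a specific subcategory.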

For additional examples of the Amazon Rekognition Image Moderation API, refer to our Content Moderation Image Lab.

Solution overview

This solution integrates with Amazon IVS by reading thumbnail images from an S3 bucket and sending images to the Amazon Rekognition Image Moderation API. It provides choices for stopping the stream automatically and human-in-the-loop review. You can configure rules for the system to automatically halt streams based on conditions. It also includes a light human review portal, empowering moderators to monitor streams, manage violation alerts, and stop streams when necessary.

In this section, we briefly introduce the system architecture. For more detailed information, refer to the GitHub repo.

The following screen recording shows the moderator UI, which lets moderators monitor active streams with moderation warnings and take actions such as stopping the stream or dismissing warnings.

Users can customize moderation rules by controlling the video stream sample frequency per channel, configuring Amazon Rekognition moderation categories with confidence thresholds, and enabling similarity checks, which improve performance and reduce cost by avoiding the processing of redundant images.

The following screen recording displays the UI for managing a global configuration.

The solution uses a microservices architecture, which consists of two key components loosely coupled with Amazon IVS.

Rules engine

The rules engine forms the backbone of the live stream moderation system. It is a live processing service that enables near-real-time moderation. It uses Amazon Rekognition to moderate images, validates results against customizable rules, employs image hashing algorithms to recognize and exclude similar images, and can halt streams automatically or alert the human review subsystem upon rule violations. The service integrates with Amazon IVS through Amazon S3-based image reading and facilitates API invocation via Amazon API Gateway.
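The idea behind hash-based similarity checks can be sketched as follows. The post does not state which hashing algorithm the solution uses, so this example substitutes a simple average hash for illustration. It also assumes each frame has already been decoded and downscaled to a small grayscale grid (a list of rows of brightness values); the function names and the distance threshold are hypothetical.

```python
def average_hash(pixels):
    """Return a bit string: '1' where a pixel is brighter than the frame's mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if value > mean else "0" for value in flat)


def hamming_distance(hash_a, hash_b):
    """Count the positions at which two equal-length hashes differ."""
    if len(hash_a) != len(hash_b):
        raise ValueError("Hashes must have the same length")
    return sum(a != b for a, b in zip(hash_a, hash_b))


def is_similar(pixels_a, pixels_b, max_distance=1):
    """Treat two frames as similar if their hashes differ in at most max_distance bits."""
    return hamming_distance(average_hash(pixels_a), average_hash(pixels_b)) <= max_distance


# Tiny 4x4 grayscale frames for illustration
frame_a = [[10, 10, 200, 200]] * 4                   # dark left half, bright right half
frame_b = [[12, 11, 198, 201]] * 4                   # same scene with slight noise
frame_c = [[200] * 4, [200] * 4, [10] * 4, [10] * 4] # different scene

print(is_similar(frame_a, frame_b))  # near-duplicate frame, can be skipped
print(is_similar(frame_a, frame_c))  # different frame, should be moderated
```

Because small brightness changes leave the hash unchanged, near-duplicate frames can be skipped before any moderation call is made.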

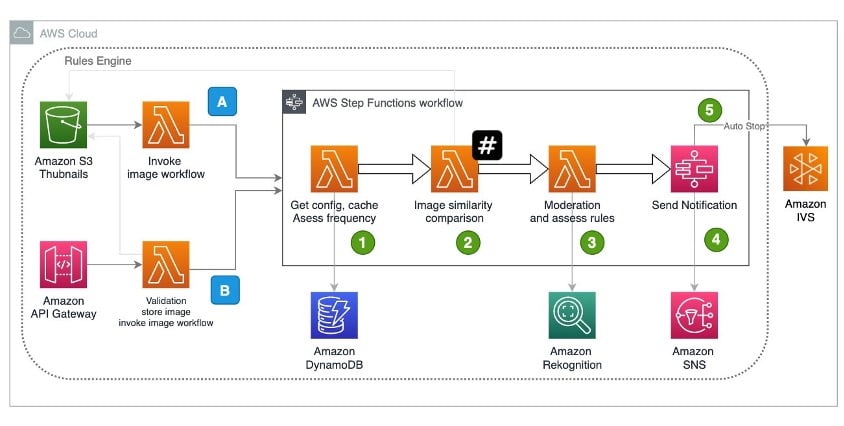

The following architecture diagram illustrates the near-real-time moderation workflow.

There are two methods to trigger the rules engine processing workflow:

- S3 file trigger – When a new image is added to the S3 bucket, the workflow starts. This is the recommended way for Amazon IVS integration.

- REST API call – You can make a RESTful API call to API Gateway with the image bytes in the request body. The API stores the image in an S3 bucket, triggering near-real-time processing. This approach is fitting for images captured by the client side of the live stream app and transmitted over the internet.

The image processing workflow, managed by AWS Step Functions, involves several steps:

- Check the sample frequency rule. Processing halts if the previous sample time is too recent.

- If enabled in the config, perform a similarity check using image hash algorithms. The process skips the image if it’s similar to the previous one received for the same channel.

- Use the Amazon Rekognition Image Moderation API to assess the image against configured rules, applying a confidence threshold and ignoring unnecessary categories.

- If the moderation result violates any rules, send notifications to an Amazon Simple Notification Service (Amazon SNS) topic, alerting downstream systems with moderation warnings.

- If the auto stop moderation rule is violated, the Amazon IVS stream will be stopped automatically.
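The decision logic in the steps above can be sketched in plain Python. This is an illustrative simplification, not the Step Functions definition from the solution: the `ChannelRule` fields, the decision names, and the `detect_labels` callable (standing in for the Amazon Rekognition call) are all hypothetical. The moderation call is passed as a callable so that it runs only when the first two checks pass.

```python
from dataclasses import dataclass, field


@dataclass
class ChannelRule:
    sample_interval_seconds: float = 60.0
    # category name -> minimum confidence that counts as a violation
    thresholds: dict = field(default_factory=dict)
    # categories that stop the stream automatically when violated
    auto_stop_categories: frozenset = frozenset()


def evaluate_frame(rule, now, last_sample_time, detect_labels, is_similar_to_previous=False):
    """Return (decision, violations) for one sampled frame."""
    # Step 1: sample frequency rule
    if last_sample_time is not None and now - last_sample_time < rule.sample_interval_seconds:
        return "skip_too_recent", []
    # Step 2: optional similarity check
    if is_similar_to_previous:
        return "skip_similar", []
    # Step 3: moderate the image, ignoring categories that have no configured threshold
    violations = [
        label for label in detect_labels()
        if label["Name"] in rule.thresholds
        and label["Confidence"] >= rule.thresholds[label["Name"]]
    ]
    if not violations:
        return "pass", []
    # Steps 4 and 5: every violation triggers a notification; some also stop the stream
    if any(label["Name"] in rule.auto_stop_categories for label in violations):
        return "auto_stop", violations
    return "alert", violations


rule = ChannelRule(thresholds={"Alcohol": 80.0, "Violence": 70.0},
                   auto_stop_categories=frozenset({"Violence"}))
labels = [{"Name": "Alcohol", "Confidence": 90.0}, {"Name": "Gambling", "Confidence": 99.0}]
print(evaluate_frame(rule, now=200.0, last_sample_time=100.0, detect_labels=lambda: labels))
```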

The design manages rules through a Step Functions state machine, providing a drag-and-drop GUI for flexible workflow definition. You can extend the rules engine by incorporating additional Step Functions workflows.

Monitoring and management dashboard

The monitoring and management dashboard is a web application with a UI that lets human moderators monitor Amazon IVS live streams. It provides near-real-time moderation alerts, allowing moderators to stop streams or dismiss warnings. The web portal also empowers administrators to manage moderation rules for the rules engine. It supports two types of configurations:

- Channel rules – You can define rules for specific channels.

- Global rules – These rules apply to all or a subset of Amazon IVS channels that lack specific configurations. You can define a regular expression to apply the global rule to Amazon IVS channel names matching a pattern. For example: .* applies to all channels. /^test-/ applies to channels with names starting with test-.
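How a channel-specific rule might take precedence over pattern-based global rules can be sketched as follows. The lookup order (channel rule first, then the first matching global pattern) and all names and values are assumptions for illustration; the patterns are written in Python regular expression syntax, without the surrounding slashes.

```python
import re


def resolve_rule(channel_name, channel_rules, global_rules):
    """Return the rule for a channel: its own rule if defined, else the first matching global rule."""
    if channel_name in channel_rules:
        return channel_rules[channel_name]
    for pattern, rule in global_rules:
        if re.search(pattern, channel_name):
            return rule
    return None


# Hypothetical configuration
channel_rules = {"vip-channel": {"sample_interval_seconds": 120}}
global_rules = [
    (r"^test-", {"sample_interval_seconds": 10}),  # channels whose names start with test-
    (r".*", {"sample_interval_seconds": 60}),      # every other channel
]

for name in ("vip-channel", "test-stream-1", "music-live"):
    print(name, resolve_rule(name, channel_rules, global_rules))
```

Listing the more specific pattern before the catch-all matters in this sketch, because the first match wins.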

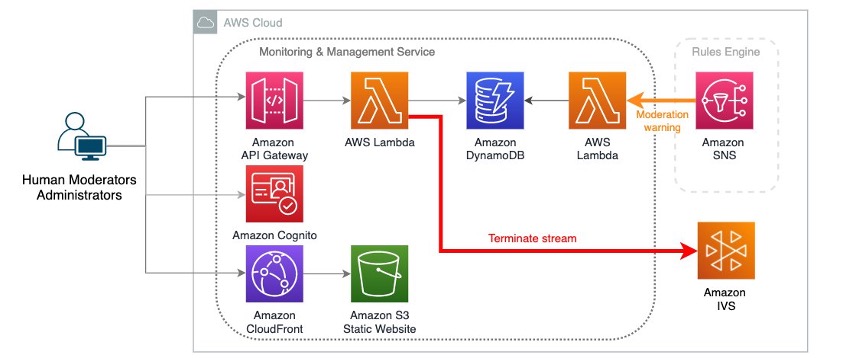

The system is a serverless web app, featuring a static React front end hosted on Amazon S3 with Amazon CloudFront for caching. Authentication is handled by Amazon Cognito. Data is served through API Gateway and Lambda, with state storage in Amazon DynamoDB. The following diagram illustrates this architecture.

The monitoring dashboard is a lightweight demo app that provides essential features for moderators. To enhance functionality, you can extend the implementation to support multiple moderators with a management system and reduce latency by implementing a push mechanism using WebSockets.

Moderation latency

The solution is designed for near-real-time moderation, with latency measured across two separate subsystems:

- Rules engine workflow – The rules engine workflow, from receiving an image to sending a notification via Amazon SNS, completes within 2 seconds on average. A Step Functions state machine processes each image as it arrives. The Amazon Rekognition Image Moderation API responds in under 500 milliseconds for images averaging less than 1 MB. (These figures come from tests conducted with the sample app and meet near-real-time requirements.) In Amazon IVS, you can select different thumbnail resolutions to adjust the image size.

- Monitoring web portal – The monitoring web portal subscribes to the rules engine’s SNS topic. It records warnings in a DynamoDB table, while the website UI fetches the latest warnings every 10 seconds. This design showcases a lightweight demonstration of the moderator’s view. To further reduce latency, consider implementing a WebSocket to instantly push warnings to the UI upon their arrival via Amazon SNS.

Extend the solution

This post focuses on live stream visual content moderation. However, the solution is intentionally flexible, capable of accommodating complex business rules and extensible to support other media types, including moderating chat messages and audio in live streams. You can enhance the rules engine by introducing new Step Functions state machine workflows with upstream dispatching logic. We’ll delve deeper into live stream text and audio moderation using AWS AI services in upcoming posts.

Summary

In this post, we provided an overview of a sample solution that showcases how to moderate Amazon IVS live stream videos using Amazon Rekognition. You can experience the sample app by following the instructions in the GitHub repo and deploying it to your AWS account using the included AWS CDK package.

Learn more about content moderation on AWS. Take the first step towards streamlining your content moderation operations with AWS.

About the Authors

Lana Zhang is a Senior Solutions Architect on the AWS WWSO AI Services team, specializing in AI and ML for Content Moderation, Computer Vision, Natural Language Processing, and Generative AI. With her expertise, she is dedicated to promoting AWS AI/ML solutions and assisting customers in transforming their business solutions across diverse industries, including social media, gaming, e-commerce, media, and advertising & marketing.

Tony Vu is a Senior Partner Engineer at Twitch. He specializes in assessing partner technology for integration with Amazon Interactive Video Service (IVS), aiming to develop and deliver comprehensive joint solutions to our IVS customers.