AI has had a significant impact on healthcare, particularly in disease diagnosis and treatment planning. One area gaining attention is the development of medical large vision-language models (Med-LVLMs), which combine visual and textual data to build advanced diagnostic tools. These models have shown great potential for analyzing complex medical images, offering interactive and intelligent responses that can help doctors make clinical decisions. However, as promising as these tools are, they face critical challenges that limit their widespread adoption in healthcare.

A major problem faced by Med-LVLMs is their tendency to produce inaccurate or “hallucinated” medical information. These factual hallucinations can seriously affect patient outcomes if a model generates a misdiagnosis or misinterprets a medical image. The primary causes are the scarcity of large, high-quality labeled medical datasets and the distribution gap between the data used to train these models and the data encountered in real-world clinical settings. This mismatch between training and deployment data creates significant reliability concerns, making it difficult to rely on these models in critical medical scenarios. Furthermore, current solutions, such as fine-tuning and retrieval-augmented generation (RAG), have limitations, especially when applied across diverse medical domains such as radiology, pathology, and ophthalmology.

Existing methods for improving Med-LVLMs mainly follow two approaches: fine-tuning and RAG. Fine-tuning adjusts model parameters on smaller, more specialized datasets to improve accuracy, but the limited availability of high-quality labeled data hampers this approach. Additionally, fine-tuned models often perform poorly when applied to new, unseen data. In contrast, RAG lets a model retrieve external knowledge at inference time, supplying references that can help improve factual accuracy. However, this technique has its own shortcomings. Current RAG-based systems often struggle to generalize across different medical domains, which limits their reliability and can cause misalignment between the retrieved information and the actual medical problem being addressed.
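To make the retrieval step concrete, here is a minimal Python sketch of generic RAG retrieval, not MMed-RAG's implementation. It assumes the query image and each reference report have already been embedded as vectors (the toy 3-dimensional vectors and report texts are made up for illustration), ranks reports by cosine similarity, and prepends the top-k to the question. The helper names `retrieve` and `build_prompt` are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 0.0 if na == 0.0 or nb == 0.0 else dot / (na * nb)


def retrieve(query: Vector, corpus: List[Tuple[str, Vector]], k: int = 2) -> List[Tuple[str, float]]:
    """Return the k (text, score) pairs most similar to the query embedding."""
    scored = [(text, cosine(query, vec)) for text, vec in corpus]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_prompt(question: str, contexts: List[Tuple[str, float]]) -> str:
    """Prepend the retrieved reference texts to the user's question."""
    refs = "\n".join(f"- {text}" for text, _ in contexts)
    return f"Reference reports:\n{refs}\n\nQuestion: {question}"


# Toy corpus with made-up 3-d embeddings (illustration only).
corpus = [
    ("Report A: no acute cardiopulmonary abnormality.", [0.9, 0.1, 0.0]),
    ("Report B: right lower lobe consolidation.", [0.1, 0.9, 0.1]),
    ("Report C: mild cardiomegaly.", [0.8, 0.2, 0.1]),
]
top = retrieve([1.0, 0.0, 0.0], corpus, k=2)
prompt = build_prompt("Is the heart enlarged?", top)
print(prompt)
```

A production system would use learned image and text encoders and a vector index instead of a linear scan; the scan just keeps the sketch dependency-free.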

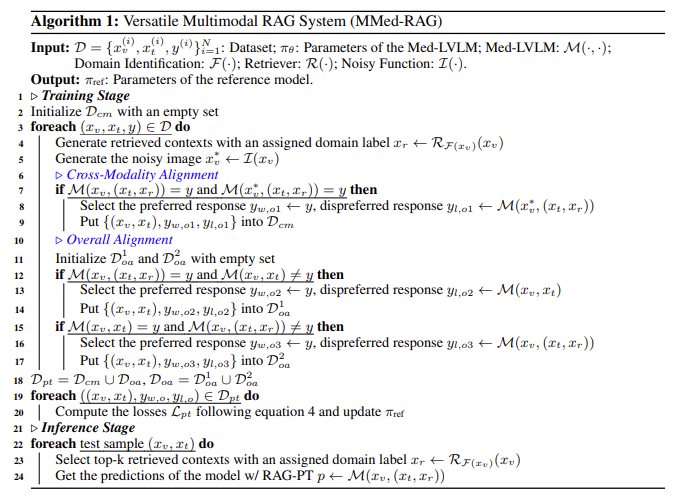

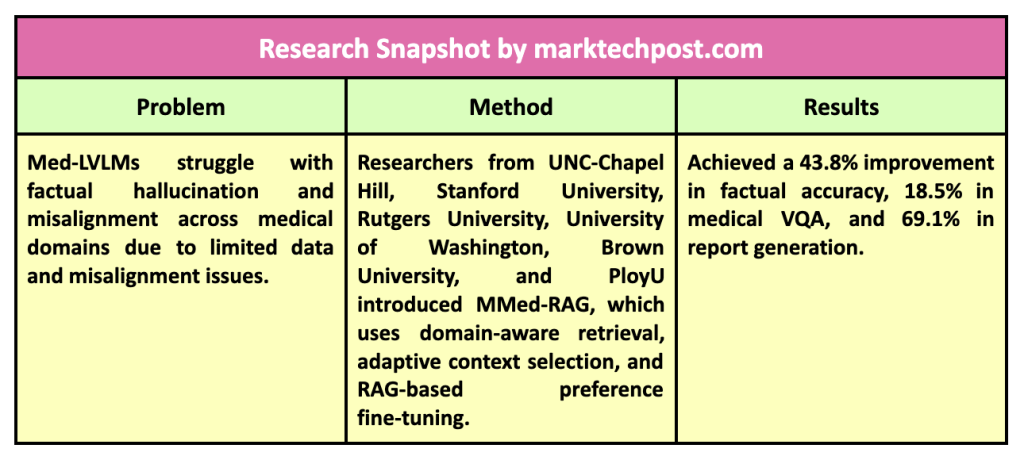

Researchers from UNC-Chapel Hill, Stanford University, Rutgers University, the University of Washington, Brown University, and PolyU introduced MMed-RAG, a versatile multimodal retrieval-augmented generation system designed specifically for medical vision-language models. MMed-RAG aims to significantly improve the factual accuracy of Med-LVLMs by implementing a domain-aware retrieval mechanism. This mechanism handles various types of medical images, such as radiology, ophthalmology, and pathology images, and ensures that the retriever used is appropriate for the specific medical domain. The researchers also developed an adaptive context selection method that adjusts the number of contexts retrieved during inference, so that the model uses only high-quality, relevant information. This adaptive selection helps avoid the errors that arise when a model retrieves too much or too little data.
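The domain-aware idea can be sketched as a two-step lookup: first decide which medical domain an image belongs to, then query only that domain's retriever. This is an illustration under stated assumptions, not the authors' code: the nearest-centroid classifier, the `DomainIndex` and `DomainAwareRetriever` classes, and the toy embeddings are all hypothetical stand-ins for the learned components a real system would use.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 0.0 if na == 0.0 or nb == 0.0 else dot / (na * nb)


@dataclass
class DomainIndex:
    """Hypothetical per-domain store of (reference text, embedding) pairs."""
    entries: List[Tuple[str, Vector]] = field(default_factory=list)

    def search(self, query: Vector, k: int) -> List[Tuple[str, float]]:
        scored = [(text, cosine(query, vec)) for text, vec in self.entries]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


def nearest_centroid(centroids: Dict[str, Vector]) -> Callable[[Vector], str]:
    """Hypothetical domain classifier: pick the domain whose centroid is most similar."""
    def classify(x: Vector) -> str:
        return max(centroids, key=lambda d: cosine(x, centroids[d]))
    return classify


@dataclass
class DomainAwareRetriever:
    classify: Callable[[Vector], str]
    indexes: Dict[str, DomainIndex]

    def retrieve(self, image_embedding: Vector, k: int = 3) -> Tuple[str, List[Tuple[str, float]]]:
        # Step 1: identify the image's medical domain.
        domain = self.classify(image_embedding)
        if domain not in self.indexes:
            raise KeyError(f"no retriever registered for domain {domain!r}")
        # Step 2: search only that domain's reference store.
        return domain, self.indexes[domain].search(image_embedding, k)


# Toy setup (made-up embeddings and reports).
centroids = {
    "radiology": [1.0, 0.0, 0.0],
    "pathology": [0.0, 1.0, 0.0],
    "ophthalmology": [0.0, 0.0, 1.0],
}
indexes = {
    "radiology": DomainIndex([("Chest X-ray: lungs are clear.", [0.9, 0.1, 0.0])]),
    "pathology": DomainIndex([("H&E slide: invasive ductal carcinoma.", [0.1, 0.9, 0.0])]),
    "ophthalmology": DomainIndex([("Fundus photo: mild diabetic retinopathy.", [0.0, 0.1, 0.9])]),
}
retriever = DomainAwareRetriever(nearest_centroid(centroids), indexes)
domain, hits = retriever.retrieve([0.05, 0.95, 0.1], k=1)
print(domain, hits)
```

Routing before retrieval means a pathology slide can never pull in a radiology report, which is the kind of cross-domain mismatch the domain-aware mechanism is meant to prevent.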

The MMed-RAG system is based on three key components:

- The domain-aware retrieval mechanism ensures that the model retrieves domain-specific information that closely aligns with the input medical image. For example, radiology images are paired with radiology information, while pathology images are paired with information retrieved from pathology-specific databases.

- The adaptive context selection method improves the quality of the retrieved information by using similarity scores to filter out irrelevant or low-quality data. This dynamic approach ensures that the model considers only the most relevant contexts, reducing the risk of factual hallucinations.

- The RAG-based preference tuning optimizes cross-modality alignment, ensuring that the retrieved information and the visual input are correctly aligned with the ground truth, thus improving the overall reliability of the model.
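The adaptive context selection step can be illustrated with a simple truncation rule. The description above says only that similarity scores are used to filter out irrelevant or low-quality contexts; the specific rule here (an absolute score floor plus stopping at the first sharp drop between consecutive scores), its threshold values, and the name `adaptive_select` are assumptions chosen for illustration.

```python
from typing import List, Tuple


def adaptive_select(
    candidates: List[Tuple[str, float]],
    min_score: float = 0.5,
    max_drop_ratio: float = 1.5,
    k_max: int = 10,
) -> List[Tuple[str, float]]:
    """Keep a variable number of retrieved contexts (hypothetical rule).

    Sort by similarity, stop at the first score below min_score, and also stop
    at the first sharp drop, i.e. when the previous kept score is more than
    max_drop_ratio times the current one.
    """
    ranked = sorted(candidates, key=lambda pair: pair[1], reverse=True)[:k_max]
    selected: List[Tuple[str, float]] = []
    for text, score in ranked:
        if score < min_score:
            break  # scores are sorted, so everything after is lower too
        if selected and selected[-1][1] > max_drop_ratio * score:
            break  # sharp drop: the remaining contexts look much less relevant
        selected.append((text, score))
    return selected


# Toy similarity scores (made up for illustration).
steady = adaptive_select(
    [("ctx1", 0.92), ("ctx2", 0.88), ("ctx3", 0.85), ("ctx4", 0.41), ("ctx5", 0.39)]
)
peaked = adaptive_select([("a", 0.95), ("b", 0.60), ("c", 0.58)])
print([t for t, _ in steady], [t for t, _ in peaked])
```

With these toy scores, the first list keeps three contexts because the fourth falls below the floor, while the second keeps only one because the score falls sharply after the top hit. The number of contexts therefore varies per query instead of being a fixed k.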

MMed-RAG was tested on five medical datasets spanning radiology, pathology, and ophthalmology, with outstanding results. The system achieved a 43.8% improvement in factual accuracy compared to previous Med-LVLMs, highlighting its ability to improve diagnostic reliability. On medical visual question answering (VQA) tasks, MMed-RAG improved accuracy by 18.5%, and on medical report generation it achieved a notable improvement of 69.1%. These results demonstrate the system's effectiveness on both closed-ended and open-ended tasks, where the retrieved information is essential for obtaining accurate answers. Additionally, the preference tuning technique used by MMed-RAG addresses misalignment between modalities, a common problem in other Med-LVLMs, which struggle to balance visual input against retrieved textual information.
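The preference-tuning objective is not spelled out here. As an assumption, the sketch below uses the standard Direct Preference Optimization (DPO) loss on a single preference pair, where the "chosen" response would be the answer consistent with the ground truth and the "rejected" response a misaligned one (for example, an answer that copies retrieved text while ignoring the image). Inputs are summed log-probabilities of each response under the model being tuned and under a frozen reference model; `beta` and all numbers are illustrative.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x)) = -softplus(-x)."""
    return -(max(-x, 0.0) + math.log1p(math.exp(-abs(x))))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair.

    Each argument is the summed log-probability of a full response under the
    policy being tuned or under the frozen reference model.
    """
    # How much more the policy prefers the chosen response than the reference does.
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    return -log_sigmoid(beta * margin)


# Illustrative numbers only.
untuned = dpo_loss(-10.0, -12.0, -10.0, -12.0)  # policy matches reference: loss is log 2
improved = dpo_loss(-8.0, -12.0, -10.0, -12.0)  # chosen answer became more likely
print(untuned, improved)
```

Minimizing this loss pushes the model to raise the likelihood of ground-truth-consistent answers relative to misaligned ones, while the reference-model terms keep it from drifting too far from its starting behavior.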

Key findings from this research include:

- MMed-RAG achieved a 43.8% increase in factual accuracy across five medical datasets.

- The system improved medical VQA accuracy by 18.5% and medical report generation by 69.1%.

- The domain-aware retrieval mechanism ensures that medical images are matched with the correct context, improving diagnostic accuracy.

- Adaptive context selection helps reduce the retrieval of irrelevant data, which increases the reliability of the model output.

- RAG-based preference tuning effectively addresses misalignment between visual inputs and retrieved information, improving overall model performance.

In conclusion, MMed-RAG significantly advances medical vision-language models by addressing key challenges related to factual accuracy and model alignment. By incorporating domain-aware retrieval, adaptive context selection, and RAG-based preference tuning, the system improves the factual reliability of Med-LVLMs and their generalization across multiple medical domains. It has shown substantial improvements in diagnostic accuracy and in the quality of generated medical reports. These advances position MMed-RAG as a crucial step toward making AI-assisted medical diagnosis more reliable and trustworthy.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. Asif's most recent endeavor is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly visits, illustrating its popularity among the public.
