Diffusion policies in imitation learning (IL) can generate diverse agent behaviors, but as models grow in size and capacity, their computational demands increase, leading to slower training and inference. This poses a challenge for real-time applications, especially in environments with limited computing power, such as mobile robots. These policies need many parameters and many denoising steps, which makes them poorly suited to such scenarios. Although these models can be scaled up with larger amounts of data, their high computational cost remains a significant limitation.

Current methods in robotics, such as Transformer-based diffusion models, are used for tasks such as imitation learning, offline reinforcement learning, and robot design. These models are built on convolutional neural networks (CNNs) or transformers, with conditioning techniques such as FiLM. While capable of generating multimodal behavior, they are computationally expensive because of their large parameter counts and many denoising steps, which slows down training and inference and makes them impractical for real-time applications. In addition, Mixture-of-Experts (MoE) models face problems such as expert collapse and inefficient use of capacity. Even with load-balancing solutions, these models struggle to optimize the router and the experts jointly, resulting in suboptimal performance.
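To make the expert-collapse problem concrete, here is a minimal sketch of one widely used load-balancing auxiliary loss (the form popularized by Switch Transformer). It is shown for illustration only and is not claimed to be the loss used in the work discussed here. The function name `load_balance_loss` and the toy probabilities are assumptions.

```python
def load_balance_loss(router_probs):
    """Auxiliary loss N * sum_i(f_i * P_i) for top-1 routing.

    router_probs: one list of expert probabilities per token.
    f_i is the fraction of tokens dispatched to expert i,
    P_i is the mean router probability assigned to expert i.
    """
    num_tokens = len(router_probs)
    num_experts = len(router_probs[0])
    dispatch = [0.0] * num_experts
    mean_prob = [0.0] * num_experts
    for probs in router_probs:
        top = max(range(num_experts), key=lambda i: probs[i])
        dispatch[top] += 1 / num_tokens
        for i, p in enumerate(probs):
            mean_prob[i] += p / num_tokens
    return num_experts * sum(f * p for f, p in zip(dispatch, mean_prob))


# Toy data (assumed): four tokens, four experts.
balanced = [
    [0.7, 0.1, 0.1, 0.1],
    [0.1, 0.7, 0.1, 0.1],
    [0.1, 0.1, 0.7, 0.1],
    [0.1, 0.1, 0.1, 0.7],
]
collapsed = [[0.97, 0.01, 0.01, 0.01]] * 4  # every token picks expert 0

balanced_loss = load_balance_loss(balanced)
collapsed_loss = load_balance_loss(collapsed)
print(balanced_loss, collapsed_loss)
```

The loss is lowest when tokens are spread evenly across experts and grows as routing collapses onto a single expert, which is why it is typically added to the training objective with a small weight.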

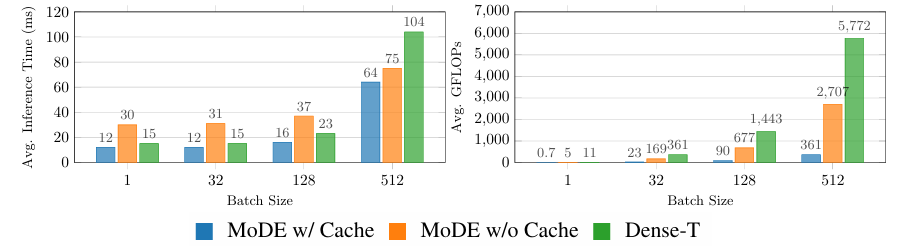

To address the limitations of current methods, researchers from the Karlsruhe Institute of Technology and MIT introduced MoDE, a Mixture-of-Experts (MoE) diffusion policy designed for tasks such as imitation learning and robot design. MoDE improves efficiency by using noise-conditioned routing and a self-attention mechanism for more effective denoising across noise levels. Unlike traditional methods that rely on a complex denoising process, MoDE computes and combines only the necessary experts at each noise level, reducing latency and computational cost. This architecture enables faster and more efficient inference while maintaining performance, achieving significant computational savings by using only a subset of the model parameters during each forward pass.
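The idea of routing by noise level can be sketched as follows. This is a toy illustration under stated assumptions, not the authors' implementation: the sinusoidal `noise_embedding`, the random `router_weights`, the scaling "experts", and `top_k=2` are all hypothetical stand-ins.

```python
import math
import random


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def noise_embedding(sigma, dim=4):
    """Hypothetical sinusoidal embedding of a scalar noise level (sigma > 0)."""
    log_sigma = math.log(sigma)
    return [
        math.sin(log_sigma * (i + 1)) if i % 2 == 0 else math.cos(log_sigma * (i + 1))
        for i in range(dim)
    ]


def route(sigma, router_weights, top_k=2):
    """Pick the top_k experts from the noise level alone; return (index, gate) pairs."""
    emb = noise_embedding(sigma, dim=len(router_weights[0]))
    logits = [sum(w * e for w, e in zip(row, emb)) for row in router_weights]
    probs = softmax(logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_layer(token, sigma, router_weights, experts, top_k=2):
    """Evaluate only the selected experts and mix their outputs by gate weight."""
    out = [0.0] * len(token)
    for idx, gate in route(sigma, router_weights, top_k):
        expert_out = experts[idx](token)
        out = [o + gate * y for o, y in zip(out, expert_out)]
    return out


rng = random.Random(0)
NUM_EXPERTS, EMB_DIM = 4, 4
router_weights = [[rng.uniform(-1, 1) for _ in range(EMB_DIM)] for _ in range(NUM_EXPERTS)]
# Toy experts (assumed): each one scales the token by a different constant.
experts = [lambda x, s=s: [s * v for v in x] for s in (0.5, 1.0, 1.5, 2.0)]

selection = route(0.5, router_weights)
output = moe_layer([1.0, -2.0, 3.0], 0.5, router_weights, experts)
print(selection, output)
```

Because the router sees only the noise level and not the token content, every token at a given denoising step is sent to the same experts, and the unselected experts are never evaluated.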

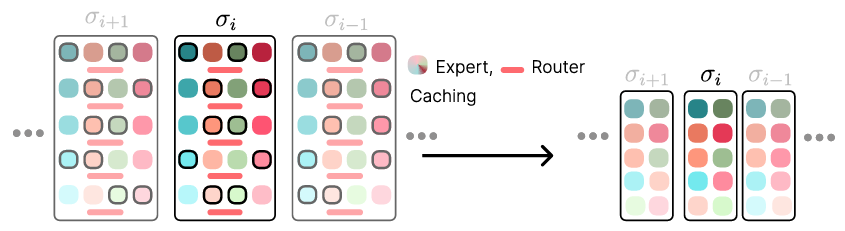

The MoDE framework employs a noise-conditioned approach in which the routing of tokens to experts is determined by the noise level at each denoising step. It uses a frozen CLIP language encoder for language conditioning and FiLM-conditioned ResNets for image encoding. The model incorporates a sequence of transformer blocks, each responsible for different denoising phases. By introducing noise-aware positional embeddings and expert caching, MoDE ensures that only the necessary experts are used, reducing computational overhead. The researchers performed extensive analyses of MoDE's components, which provide useful insights for designing efficient and scalable transformer architectures for diffusion policies. Pre-training on diverse multi-robot datasets allows MoDE to outperform existing generalist policies.
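Expert caching follows from the routing depending only on the noise level: if the sampler's noise schedule is fixed before inference, the expert selection for every step can be computed once and reused. The sketch below illustrates that idea only. The stand-in `route` function, the geometric schedule, and the values `sigma_max=80.0`, `sigma_min=0.001`, and 10 steps are assumptions, not details from the paper.

```python
import math

router_calls = 0  # counts how often the (stand-in) router is evaluated


def route(sigma, num_experts=4, top_k=2):
    """Hypothetical router: deterministic scores computed from the noise level only."""
    global router_calls
    router_calls += 1
    scores = [math.sin((i + 1) * math.log(sigma)) for i in range(num_experts)]
    order = sorted(range(num_experts), key=lambda i: scores[i], reverse=True)
    return tuple(order[:top_k])


def geometric_schedule(sigma_max, sigma_min, steps):
    """Assumed noise schedule: geometrically spaced levels from sigma_max to sigma_min."""
    ratio = (sigma_min / sigma_max) ** (1 / (steps - 1))
    return [sigma_max * ratio ** i for i in range(steps)]


# Precompute the expert selection once per noise level, before any rollout.
schedule = geometric_schedule(sigma_max=80.0, sigma_min=0.001, steps=10)
cache = {sigma: route(sigma) for sigma in schedule}

# At inference time, every rollout and every token reuses the cached selection.
lookups = 0
for _rollout in range(5):
    for sigma in schedule:
        selected_experts = cache[sigma]  # no router evaluation here
        lookups += 1

print(router_calls, lookups)
```

In this toy setup the router runs once per noise level, while the cached table serves all subsequent lookups, so the routing cost no longer scales with the number of tokens or rollouts.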

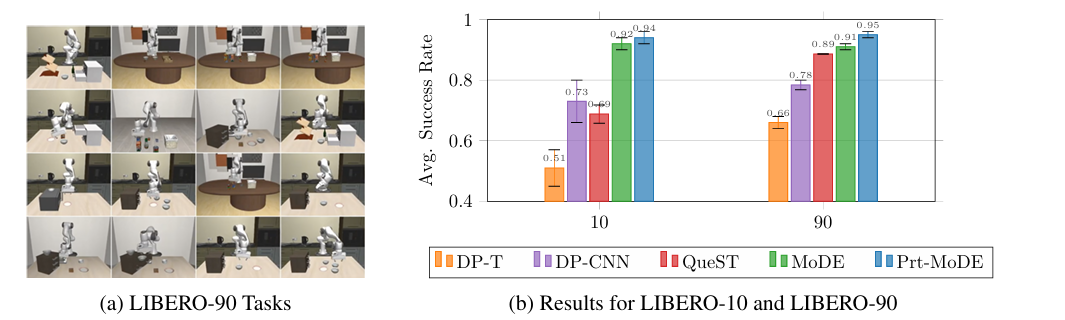

The researchers conducted experiments to evaluate MoDE on several key questions, including its performance compared to other diffusion transformer policies and architectures, the effect of large-scale pre-training on its performance, efficiency, and speed, and the effectiveness of token routing strategies in different environments. The experiments compared MoDE with previous diffusion transformer architectures, ensuring fairness by using a similar number of active parameters, and tested it on short-horizon and long-horizon tasks. MoDE achieved the highest performance on benchmarks such as LIBERO-90, surpassing other models such as Diffusion Transformer and QueST. Pre-training improved MoDE's performance further, demonstrating its ability to learn long-horizon tasks and its efficient use of compute. MoDE also showed superior performance on the CALVIN Language-Skills Benchmark, surpassing models such as RoboFlamingo and GR-1 while remaining more computationally efficient. MoDE outperformed all baselines on zero-shot generalization tasks, demonstrating strong generalization abilities.

In conclusion, the proposed framework improves performance and efficiency by combining a mixture of experts, a transformer, and a noise-conditioned routing strategy. The model outperformed previous diffusion policies while using fewer active parameters and lower computational cost. This framework can therefore serve as a basis for improving model scalability in future research. Future studies may also explore applying MoDE in other domains, since it has so far been able to scale while maintaining high performance on machine learning tasks.

Check out the Paper and the Model on Hugging Face. All credit for this research goes to the researchers of this project.


Divyesh is a Consulting Intern at Marktechpost. He is pursuing a BTech in Agricultural and Food Engineering from the Indian Institute of Technology Kharagpur. He is a data science and machine learning enthusiast who wants to apply these technologies to agriculture and solve its challenges.
