NVIDIA has introduced Mistral-NeMo-Minitron 8Ba highly sophisticated Large Language Model (LLM). This model continues its work in developing cutting-edge ai technologies. It stands out for its impressive performance in multiple benchmark tests, making it one of the most advanced open-access models in its size class.

The Mistral-NeMo-Minitron 8B was created by using width pruning derived from the larger Mistral NeMo 12B model. This process reduces the model size by selectively pruning the less important parts of the network, such as neurons and attention cores. A retraining phase is then performed using a technique known as knowledge distillation. The result is a smaller, more efficient model that retains much of the performance of the original, larger model.

The process of pruning and distilling models

Model pruning is a technique that allows ai models to be reduced in size and made more efficient by removing less critical components. There are two main types of pruning: depth-wise pruning, which reduces the number of layers in the model, and width-wise pruning, which reduces the number of neurons, attention heads, and embedding channels within each layer. In the case of Mistral-NeMo-Minitron 8B, width-wise pruning was chosen to achieve the optimal balance between size and performance.

After pruning, the model undergoes a lightweight retraining process using knowledge distillation. This technique transfers knowledge from the original, larger teacher model to the smaller, pruned student model. The goal is to create a faster, less resource-intensive model while maintaining high accuracy. In the case of Mistral-NeMo-Minitron 8B, this process involved retraining on a dataset of 380 billion tokens, which is significantly smaller than the dataset used to train the original Mistral NeMo 12B model from scratch.

Performance and benchmarking

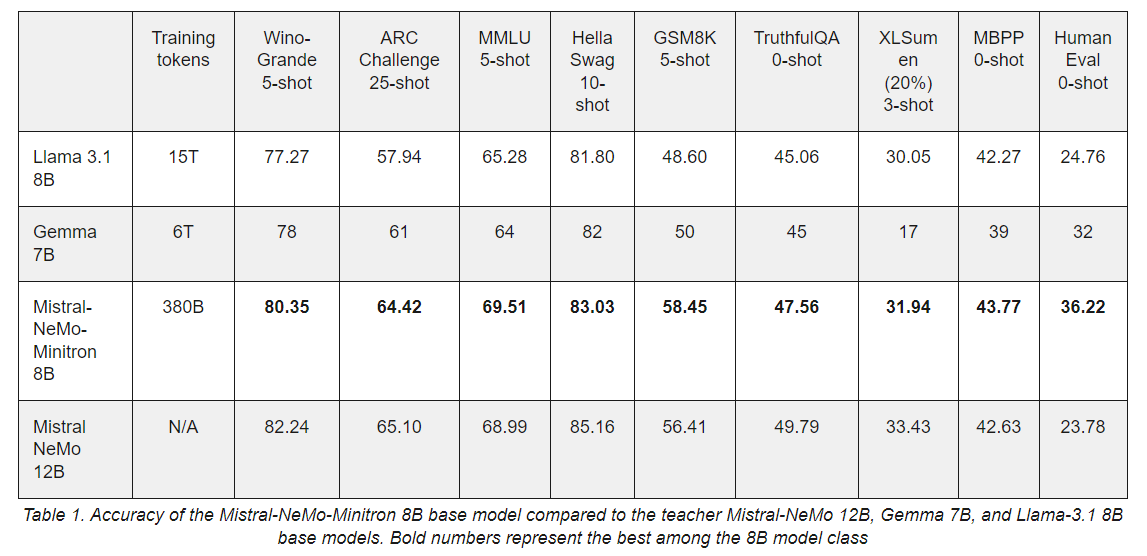

The Mistral-NeMo-Minitron 8B’s performance is a testament to the success of this pruning and distillation approach. The model consistently outperforms other models in its size class on several popular benchmarks. For example, a 5-shot WinoGrande test scored 80.35, beating the Llama 3.1 8B and Gemma 7B. Similarly, it scored 69.51 in the 5-shot MMLU test and 83.03 in the 10-shot HellaSwag test, making it one of the most accurate models in its category.

Comparing the Mistral-NeMo-Minitron 8B to other models such as the Mistral NeMo 12B, Llama 3.1 8B and Gemma 7B highlights its superior performance in several key areas. This success is attributed to the strategic pruning of the Mistral NeMo 12B model and the subsequent light retraining phase. The Mistral-NeMo-Minitron 8B model demonstrates the effectiveness of structured weight pruning and knowledge distillation to produce high-performing compact models.

Technical details and architecture

The Mistral-NeMo-Minitron 8B model architecture is built on a transformer decoder for autoregressive language modeling. It features a model embedding size of 4096, 32 attention heads, and an intermediate MLP dimension of 11,520, distributed across 40 layers. This design also incorporates advanced techniques such as Grouped-Query Attention (GQA) and Rotary Position Embeddings (RoPE), contributing to robust performance across a variety of tasks.

The model was trained on a diverse dataset of multilingual English text and code spanning legal, mathematical, scientific, and financial domains. This large and varied dataset ensures that the model is suitable for a variety of applications. The training process included input of question-answer and alignment data to further improve the model’s performance.

Future directions and ethical considerations

The launch of Mistral-NeMo-Minitron 8B is just the beginning of NVIDIA’s efforts to develop smaller, more efficient models through pruning and distillation. The company plans to continue refining this technique to create even smaller models with high accuracy and efficiency. These models will be integrated into the NVIDIA NeMo framework for generative ai, providing developers with powerful tools for a variety of natural language processing tasks.

However, it is important to note the limitations and ethical considerations of the Mistral-NeMo-Minitron 8B model. Like many large language models, it was trained on data that may contain toxic language and social biases. As a result, there is a risk that the model could amplify these biases or produce inappropriate responses. NVIDIA emphasizes the importance of responsible ai development and encourages users to consider these factors when deploying the model in real-world applications.

Conclusion

NVIDIA introduced the Mistral-NeMo-Minitron 8B using width pruning and knowledge distillation. This model rivals and often outperforms other models in its size class. As NVIDIA continues to refine and expand its ai capabilities, the Mistral-NeMo-Minitron 8B sets a new standard for efficiency and performance in natural language processing.

Take a look at the Model card and Details. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on twitter.com/Marktechpost”>twitter and join our Telegram Channel and LinkedIn GrAbove!. If you like our work, you will love our fact sheet..

Don't forget to join our Over 49,000 ML subscribers on Reddit

Find upcoming ai webinars here

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary engineer and entrepreneur, Asif is committed to harnessing the potential of ai for social good. His most recent initiative is the launch of an ai media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has over 2 million monthly views, illustrating its popularity among the public.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>

NEWSLETTER

NEWSLETTER