Minish Lab recently introduced Model2Vec, a tool designed to distill smaller and faster models from any Sentence Transformer. With this release, Minish Lab aims to give researchers and developers a highly efficient alternative for Natural Language Processing (NLP) tasks. Model2Vec distills compact models quickly while keeping performance competitive, positioning it as a strong option among embedding models.

Model2Vec Overview

Model2Vec is a distillation tool that creates small, fast, and efficient models for a variety of natural language processing tasks. Unlike traditional approaches, which typically require large amounts of data and training time, Model2Vec works without any training data, which keeps the process simple and fast.

Model2Vec has two modes:

Production: Works similarly to a Sentence Transformer, using a subword tokenizer to encode all word fragments. It is fast to create and compact (about 30 MB), although it may perform worse on certain tasks.

Vocabulary: Works like GloVe or standard word2vec vectors, but offers improved performance. These models are slightly larger, depending on the vocabulary size, but are still fast and are ideal for situations where extra RAM is available but speed is needed.

Model2Vec works by passing a vocabulary through a Sentence Transformer model, reducing the dimensionality of the resulting embeddings with principal component analysis (PCA), and applying Zipf weighting to improve performance. The result is a small, static model that performs well on a variety of tasks, making it a good fit for settings with limited computing resources.
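The three steps described above can be illustrated with a toy sketch. This is not Minish Lab's implementation: the function name `distill_sketch` is hypothetical, random vectors stand in for real Sentence Transformer outputs, the rows are assumed to be sorted from most to least frequent token, and the `log(1 + rank)` weighting is only one plausible Zipf-style scheme. It needs NumPy.

```python
import numpy as np

def distill_sketch(token_embeddings, pca_dims):
    """Toy pipeline: PCA on token embeddings, then rank-based weighting.

    token_embeddings: (vocab_size, dim) array whose rows are assumed to be
    ordered from the most frequent token to the least frequent one.
    """
    # Step 1 (assumed done by the caller): one embedding per vocabulary
    # entry, produced by running each token through a Sentence Transformer.

    # Step 2: PCA via SVD on the mean-centered embeddings.
    centered = token_embeddings - token_embeddings.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    reduced = centered @ vt[:pca_dims].T

    # Step 3: Zipf-style weighting (assumed form). Frequent tokens have a
    # low rank and get a small weight; rare tokens get a larger one.
    ranks = np.arange(1, reduced.shape[0] + 1)
    weights = np.log(1 + ranks)
    return reduced * weights[:, None]

rng = np.random.default_rng(0)
fake_embeddings = rng.normal(size=(1000, 64))  # stand-in for transformer outputs
static = distill_sketch(fake_embeddings, pca_dims=16)
print(static.shape)  # (1000, 16)
```

The output is a plain lookup table with one row per vocabulary entry, which is what makes the distilled model "static": no transformer has to run at inference time.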

Distillation and model inference

The distillation process with Model2Vec is remarkably fast. According to the release, a model can be distilled in as little as 30 seconds on a 2024 MacBook using the MPS backend. No additional training data is required, a significant departure from traditional machine learning workflows that rely on large datasets. Distillation turns a Sentence Transformer model into a much smaller Model2Vec model, reducing its size by roughly 15x, from 120 million parameters to just 7.5 million. The resulting model takes up about 30 MB on disk, making it well suited to deployment in resource-constrained environments.
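The parameter counts and the on-disk size quoted above are consistent with each other if the weights are stored as 32-bit floats. That storage format is an assumption here, and the helper `size_mb` is just for illustration. A quick back-of-the-envelope check:

```python
BYTES_PER_FLOAT32 = 4  # assumption: weights stored as 32-bit floats

def size_mb(num_params, bytes_per_param=BYTES_PER_FLOAT32):
    """Approximate on-disk size in megabytes (1 MB = 1,000,000 bytes)."""
    return num_params * bytes_per_param / 1_000_000

original_params = 120_000_000   # figure quoted in the article
distilled_params = 7_500_000    # figure quoted in the article

reduction = original_params / distilled_params
print(f"reduction factor: {reduction:.0f}x")                 # reduction factor: 16x
print(f"distilled size: {size_mb(distilled_params):.0f} MB")  # distilled size: 30 MB
print(f"original size: {size_mb(original_params):.0f} MB")    # original size: 480 MB
```

The exact ratio of the two quoted parameter counts is 16, so the "15x" figure is best read as a rounded approximation.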

Once distilled, the model can be used for inference tasks such as text classification, clustering, or building Retrieval-Augmented Generation (RAG) systems. Inference with Model2Vec models is significantly faster than with the Sentence Transformers they are distilled from: according to the release, they can run up to 500 times faster on CPU than their larger counterparts, offering a greener and highly efficient alternative for natural language processing tasks.
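To give a feel for why a static model is cheap at inference time, here is a minimal sketch of embedding lookup followed by mean pooling and cosine-similarity retrieval. The vocabulary, the 3-dimensional vectors, and the whitespace tokenizer are all made up for illustration; a real Model2Vec model uses its own tokenizer and far larger tables, and its pooling details may differ.

```python
import math

# Hypothetical static token vectors (toy values, 3 dimensions).
TOKEN_VECTORS = {
    "cats": [0.9, 0.1, 0.0],
    "dogs": [0.8, 0.2, 0.0],
    "purr": [0.7, 0.0, 0.1],
    "stocks": [0.0, 0.1, 0.9],
    "fell": [0.1, 0.0, 0.8],
}

def embed(text):
    """Look up each known token and average the vectors (mean pooling)."""
    vectors = [TOKEN_VECTORS[t] for t in text.lower().split() if t in TOKEN_VECTORS]
    if not vectors:
        return [0.0, 0.0, 0.0]
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def cosine(a, b):
    """Cosine similarity, returning 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

docs = ["cats purr", "stocks fell"]
query = "dogs"
best = max(docs, key=lambda d: cosine(embed(query), embed(d)))
print(best)  # cats purr
```

The whole computation is a handful of table lookups and additions per sentence, with no attention layers involved, which is where the CPU speedup comes from.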

Main features and benefits

One of the most notable features of Model2Vec is its versatility. The tool works with any Sentence Transformer model, meaning that users can bring their own models and vocabulary. This flexibility allows users to create domain-specific models, such as biomedical or multilingual models, simply by supplying the relevant vocabulary. Model2Vec is also tightly integrated with the Hugging Face Hub, making it easy for users to upload and share models directly on the platform.

Another advantage of Model2Vec is its ability to handle multilingual tasks. Whether an English, French, or multilingual model is needed, Model2Vec can adapt to these requirements, further expanding its applicability across languages and domains.

Ease of evaluation is also a significant benefit. Model2Vec models are designed to work out of the box on benchmark tasks such as the Massive Text Embedding Benchmark (MTEB), allowing users to measure the performance of their distilled models quickly.

Performance and evaluation

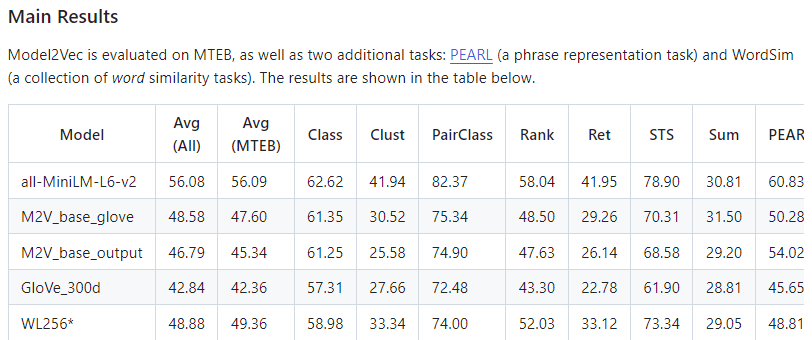

Model2Vec has undergone rigorous testing and evaluation, and has achieved impressive results. Model2Vec models outperformed traditional static embedding models such as GloVe and Word2Vec in benchmark evaluations. For example, the M2V_base_glove model, based on the GloVe vocabulary, demonstrated better performance on a variety of tasks than the original GloVe embeddings.

Model2Vec models have been shown to be competitive with state-of-the-art models such as all-MiniLM-L6-v2, while being significantly smaller and faster. The speed advantage is particularly notable: Model2Vec models offer classification performance comparable to larger models at a fraction of the computational cost. This balance between speed and performance makes Model2Vec an excellent choice for developers looking to optimize both model size and efficiency.

Use cases and applications

The release of Model2Vec opens up a wide range of possible applications. Its small size and fast inference times make it particularly suitable for deployment on edge devices, where computational resources are limited. The ability to distill models without training data makes it a valuable tool for researchers and developers working in data-sparse environments. In enterprise settings, Model2Vec can be used for tasks such as sentiment analysis, document classification, and information retrieval. Its compatibility with the Hugging Face Hub makes it a natural choice for organizations already using Hugging Face models in their workflows.

Conclusion

Model2Vec represents a significant advancement in the field of natural language processing, offering a powerful and efficient solution. By enabling the distillation of small and fast models without the need for training data, Minish Lab has created a tool that can democratize access to natural language processing technology. Model2Vec provides a versatile and scalable solution for a variety of language-related tasks, whether for academic research, enterprise applications, or deployment in resource-constrained environments.

Take a look at the HF Page and GitHub. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary engineer and entrepreneur, Asif is committed to harnessing the potential of AI for social good. His most recent initiative is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has over 2 million monthly views, illustrating its popularity among the public.
