Amazon SageMaker Data Wrangler provides a visual interface to streamline and accelerate data preparation for machine learning (ML), which is often the most tedious and time-consuming task in ML projects. Amazon SageMaker Canvas is a low-code, no-code visual interface for building and deploying ML models without writing code. Based on customer feedback, we have brought SageMaker Data Wrangler's advanced ML-specific data preparation capabilities into SageMaker Canvas, giving users a comprehensive no-code workspace for preparing data and for building and deploying ML models.

By abstracting away much of the complexity of the ML workflow, SageMaker Canvas lets you prepare data and then build or use a model to generate highly accurate business insights without writing code. Data preparation in SageMaker Canvas also offers many improvements, such as up to 10x faster page loads, a natural language interface for data preparation, the ability to see the size and shape of your data at every step, and improved replace and reorder transformations for iterating on a data flow. Finally, you can build a model with a single click in the same interface, or create a SageMaker Canvas dataset to fine-tune foundation models (FMs).

This post shows how to migrate existing SageMaker Data Wrangler flows (the instructions created when you build data transformations) from SageMaker Studio Classic to SageMaker Canvas. We provide an example of copying the flow files from SageMaker Studio Classic to Amazon Simple Storage Service (Amazon S3) as an intermediate step before importing them into SageMaker Canvas.

Solution Overview

The high-level steps are as follows:

- Open a terminal in SageMaker Studio Classic and copy the flow files to Amazon S3.

- Import the flow files into SageMaker Canvas from Amazon S3.

Prerequisites

In this example, we use a folder called data-wrangler-classic-flows as a staging folder for migrating flow files to Amazon S3. You don't need to create a migration folder; in this example, the folder was created using the file browser in SageMaker Studio Classic. After you create the folder, move the relevant SageMaker Data Wrangler flow files into it. In the following screenshot, the three flow files to be migrated have been moved to the data-wrangler-classic-flows folder, as seen in the left pane. One of these files, titanic.flow, is open and visible in the right pane.
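If your flow files are spread across many folders, gathering them by hand can be tedious. The following Python sketch shows one way to consolidate them, assuming it runs from a SageMaker Studio Classic terminal where your home directory is the root of the file browser. The function name consolidate_flows is hypothetical, and unlike the manual step described above it copies rather than moves the files, so the originals stay in place.

```python
import shutil
from pathlib import Path


def consolidate_flows(source_root: Path, staging_dir: Path) -> list[Path]:
    """Copy every *.flow file found under source_root into staging_dir.

    Hypothetical helper: files already inside staging_dir are skipped, and a
    name clash gets a numeric suffix instead of overwriting an earlier copy.
    """
    staging = staging_dir.resolve()
    staging.mkdir(parents=True, exist_ok=True)
    staged: list[Path] = []
    # sorted() materializes the search first, so new copies are not revisited.
    for flow in sorted(source_root.resolve().rglob("*.flow")):
        if not flow.is_file() or staging in flow.parents:
            continue  # skip directories and files already staged
        target = staging / flow.name
        suffix = 1
        while target.exists():
            target = staging / f"{flow.stem}-{suffix}{flow.suffix}"
            suffix += 1
        shutil.copy2(flow, target)  # copy, not move: originals stay put
        staged.append(target)
    return staged
```

For example, `consolidate_flows(Path.home(), Path.home() / "data-wrangler-classic-flows")` would stage every flow file under your home directory. Two flows with the same file name are kept side by side, with a numeric suffix added to the later one.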

Copying flow files to Amazon S3

To copy the flow files to amazon S3, complete the following steps:

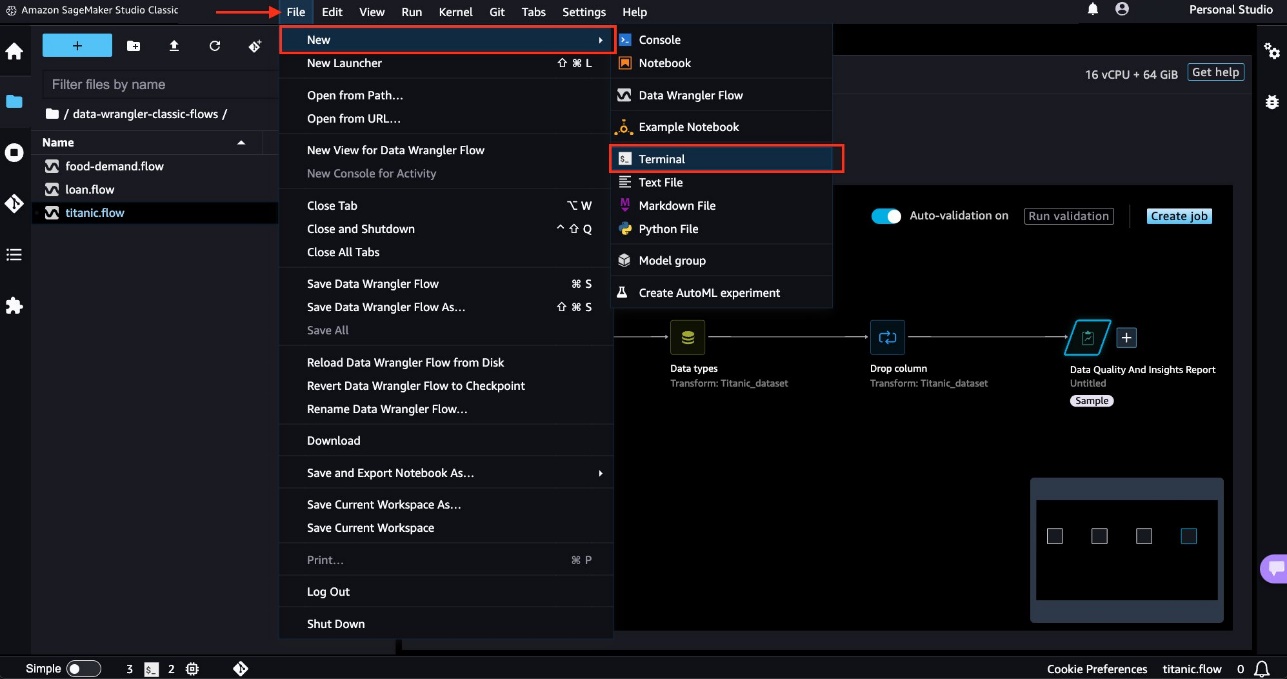

- To open a new terminal in SageMaker Studio Classic, on the File menu, choose Terminal.

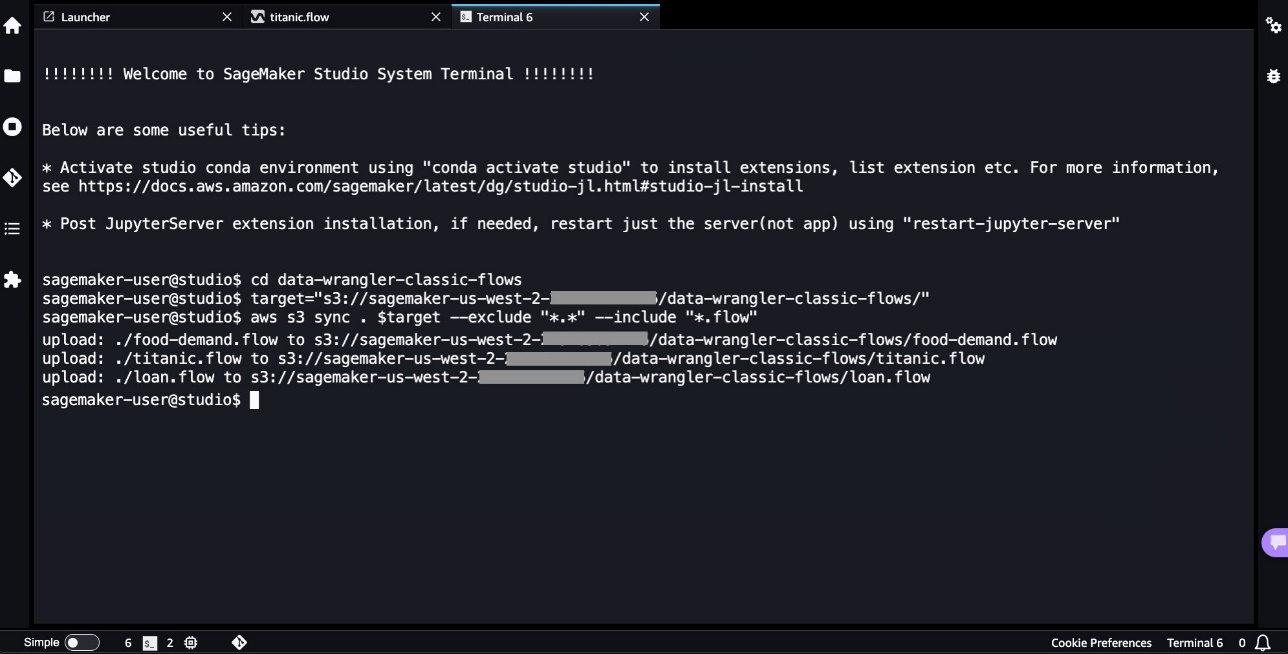

- With the terminal open, change to your staging folder (cd data-wrangler-classic-flows) and use the AWS CLI aws s3 sync command to copy your flow files to the Amazon S3 location of your choice, replacing NNNNNNNNNNNN in the location with your AWS account number.

The following screenshot shows an example of the Amazon S3 sync process. You receive a confirmation after all files have been uploaded. You can adjust these commands to match your own Amazon S3 location and staging folder. If you didn't create a staging folder, skip the directory change (cd) when you open the terminal; all flow files in the entire SageMaker Studio Classic file system are then copied to Amazon S3, regardless of their source folder.

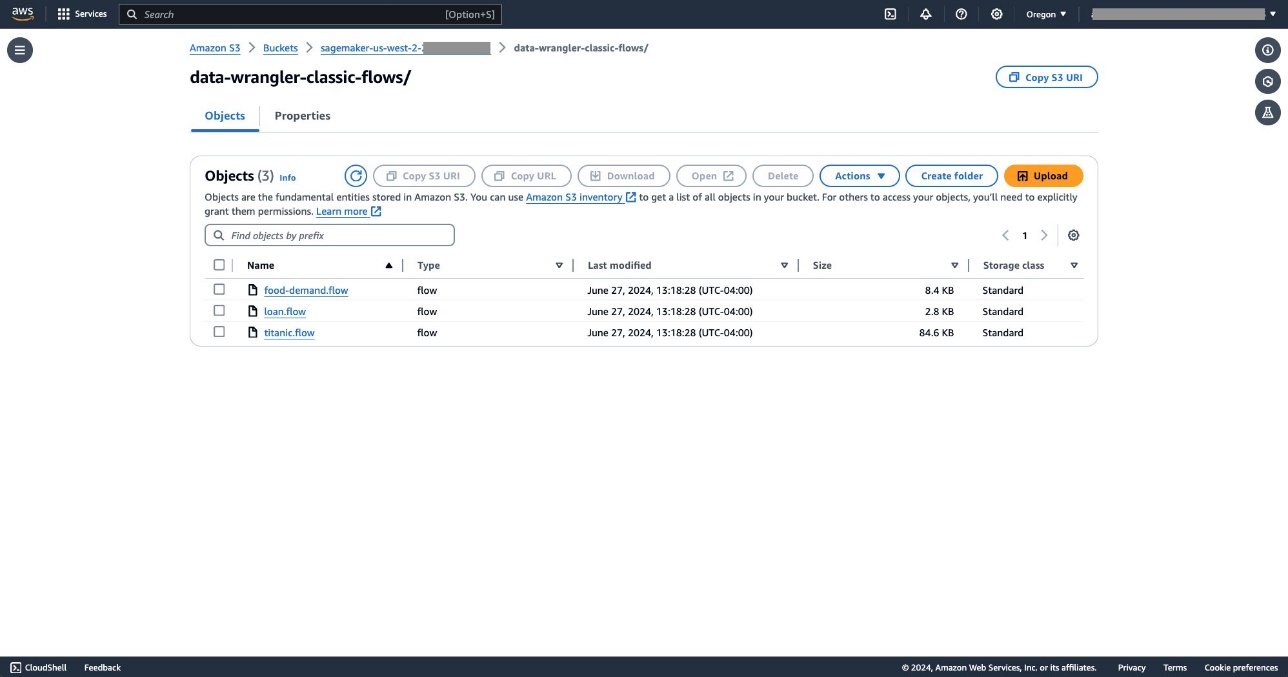
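If you prefer Python to the AWS CLI, the following sketch performs roughly the same copy with boto3: it uploads every .flow file under a local folder to an S3 prefix, keeping relative subfolder paths in the object keys much as aws s3 sync does. It assumes boto3 is installed and that your Studio Classic execution role can write to the bucket; the function names are hypothetical. Unlike sync, it re-uploads every file on each run instead of skipping unchanged ones.

```python
from pathlib import Path


def plan_flow_uploads(local_root: Path, prefix: str) -> list[tuple[Path, str]]:
    """Map each *.flow file under local_root to an S3 key under prefix.

    Relative subfolder paths are kept in the key, similar to aws s3 sync.
    """
    prefix = prefix.strip("/")
    plan: list[tuple[Path, str]] = []
    for flow in sorted(local_root.rglob("*.flow")):
        if not flow.is_file():
            continue
        rel = flow.relative_to(local_root).as_posix()
        plan.append((flow, f"{prefix}/{rel}" if prefix else rel))
    return plan


def upload_flows(local_root: Path, bucket: str, prefix: str) -> list[str]:
    """Upload the planned files with boto3 and return the S3 keys written."""
    import boto3  # imported here so the planning step works without boto3

    s3 = boto3.client("s3")
    keys = []
    for path, key in plan_flow_uploads(local_root, prefix):
        s3.upload_file(str(path), bucket, key)  # re-uploads on every run
        keys.append(key)
    return keys
```

A call such as `upload_flows(Path("data-wrangler-classic-flows"), "amzn-s3-demo-bucket", "data-wrangler-classic-flows")` would place titanic.flow at data-wrangler-classic-flows/titanic.flow; the bucket name here is a placeholder. Splitting out plan_flow_uploads lets you review the key mapping before anything is written.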

After uploading the files to Amazon S3, you can use the Amazon S3 console to confirm that they were copied. In the following screenshot, we see the three original flow files, now in an S3 bucket.
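As an alternative to the console check, a short script can confirm that no flow file was missed. This sketch, assuming boto3 and read access to the bucket, lists the object keys under your prefix and reports any local .flow file that has no matching key. The helper names are hypothetical, and the check covers existence only, not file contents.

```python
from pathlib import Path


def list_s3_keys(bucket: str, prefix: str) -> list[str]:
    """Return every object key under prefix, following pagination."""
    import boto3  # needed only for the live listing

    paginator = boto3.client("s3").get_paginator("list_objects_v2")
    keys: list[str] = []
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        keys.extend(obj["Key"] for obj in page.get("Contents", []))
    return keys


def missing_flows(local_root: Path, s3_keys: list[str], prefix: str) -> list[str]:
    """Return relative paths of local *.flow files that have no S3 copy."""
    prefix = prefix.strip("/")
    remote = set(s3_keys)
    missing: list[str] = []
    for flow in sorted(local_root.rglob("*.flow")):
        if not flow.is_file():
            continue
        rel = flow.relative_to(local_root).as_posix()
        if (f"{prefix}/{rel}" if prefix else rel) not in remote:
            missing.append(rel)
    return missing
```

If `missing_flows(Path("data-wrangler-classic-flows"), list_s3_keys("amzn-s3-demo-bucket", "data-wrangler-classic-flows/"), "data-wrangler-classic-flows")` returns an empty list, every local flow file has a copy under the prefix (the bucket name is a placeholder).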

Importing Data Wrangler flow files into SageMaker Canvas

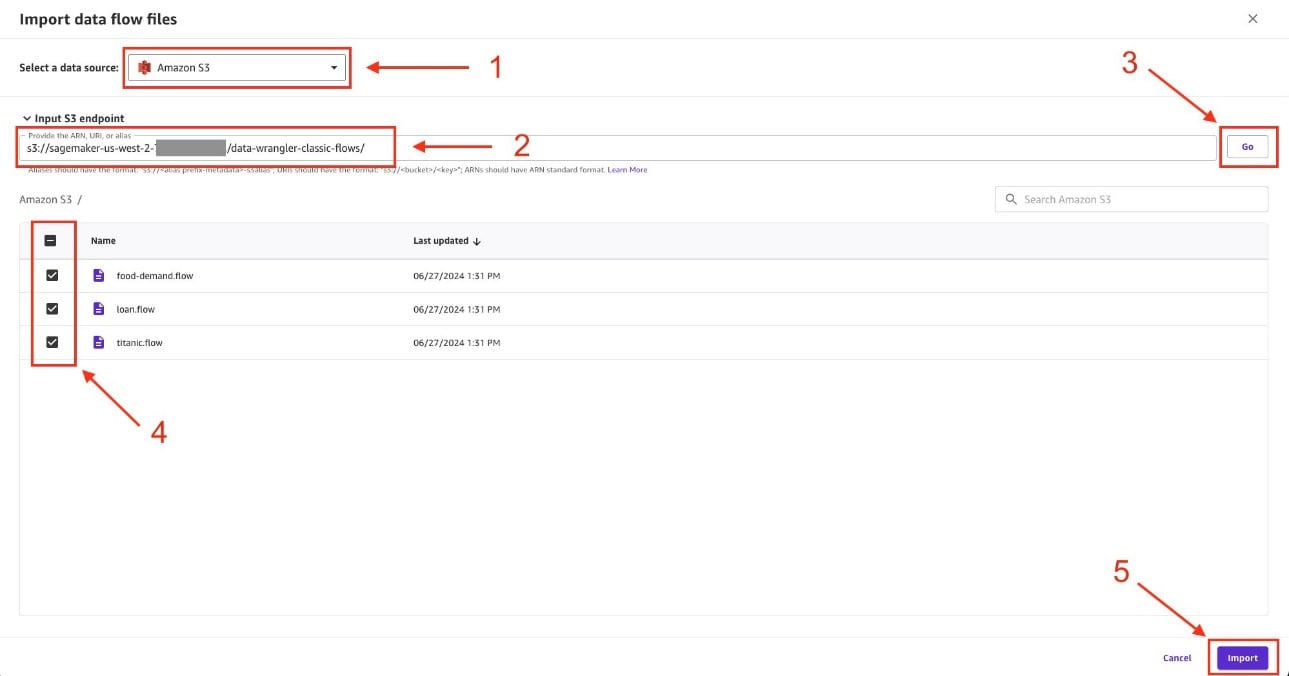

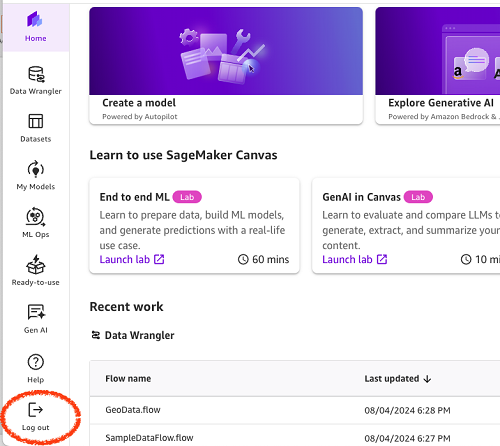

To import flow files into SageMaker Canvas, complete the following steps:

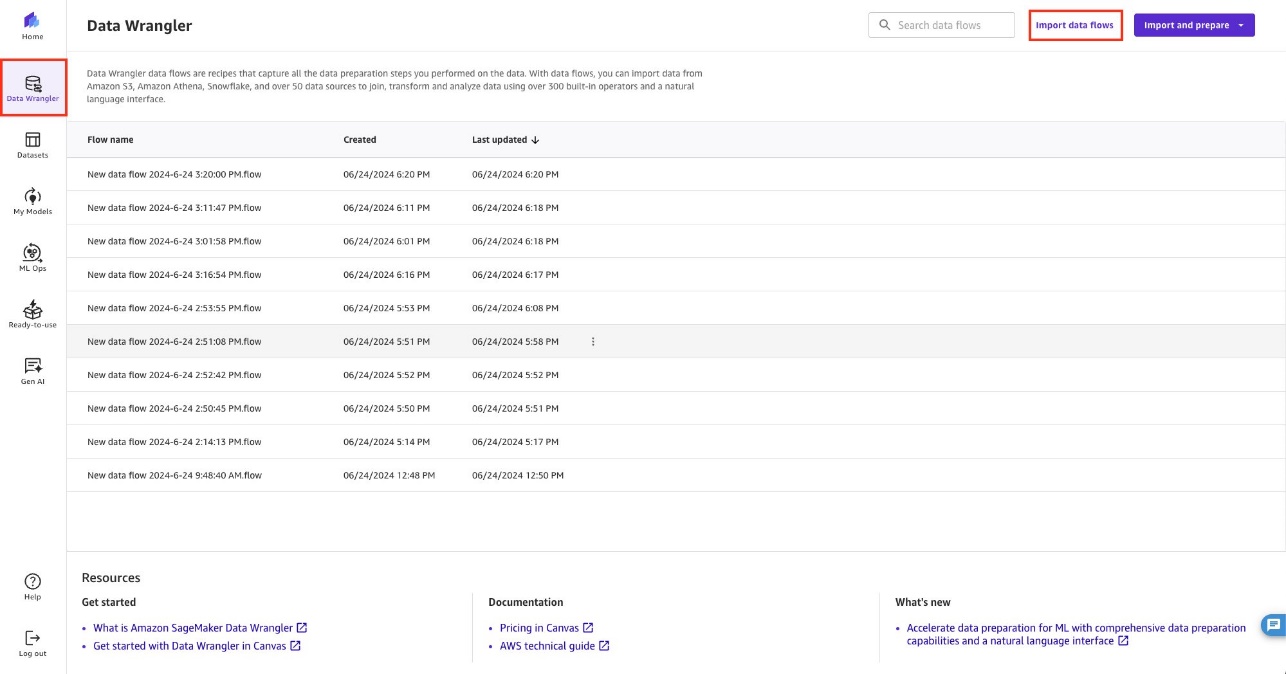

- In SageMaker Canvas, choose Data Wrangler in the navigation pane.

- Choose Import data flows.

- For Select a data source, choose Amazon S3.

- For S3 input endpoint, enter the Amazon S3 location you previously used to copy the files from SageMaker Studio Classic to Amazon S3, and then choose Go. You can also navigate to the Amazon S3 location using the browser below.

- Select the flow files you want to import, and then choose Import.

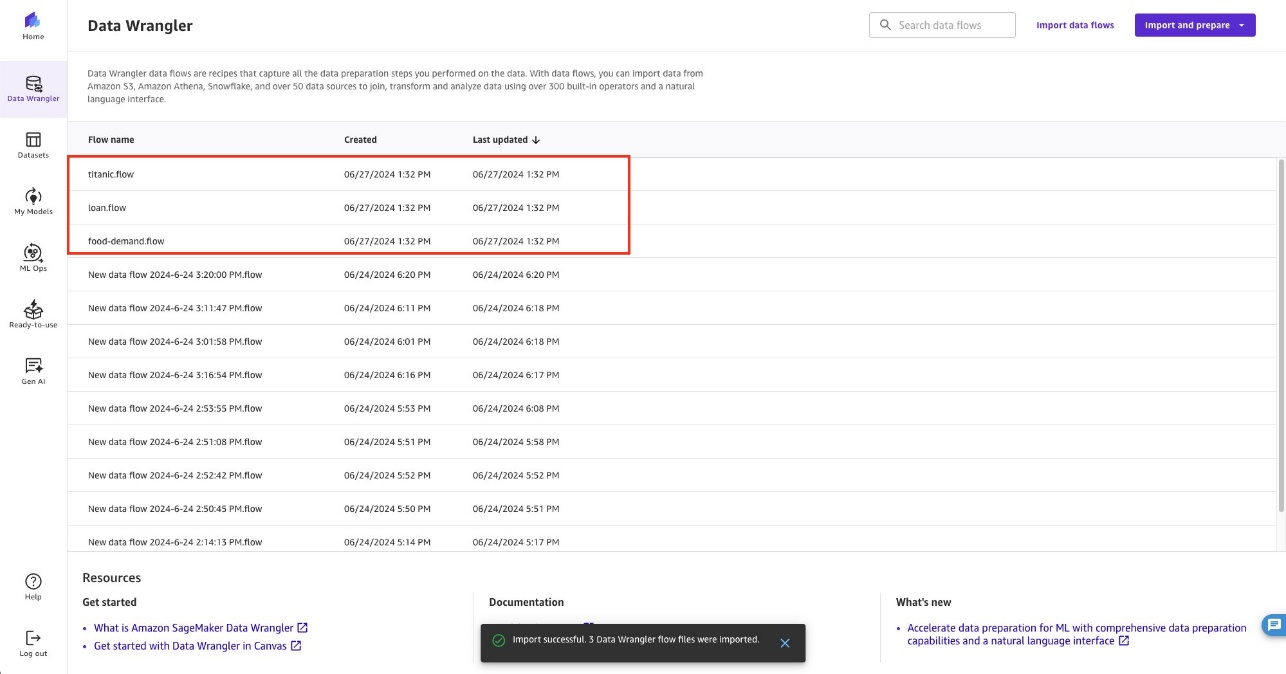

After you import the files, the SageMaker Data Wrangler page will refresh to display the newly imported files, as shown in the following screenshot.

Use SageMaker Canvas for data transformation with SageMaker Data Wrangler

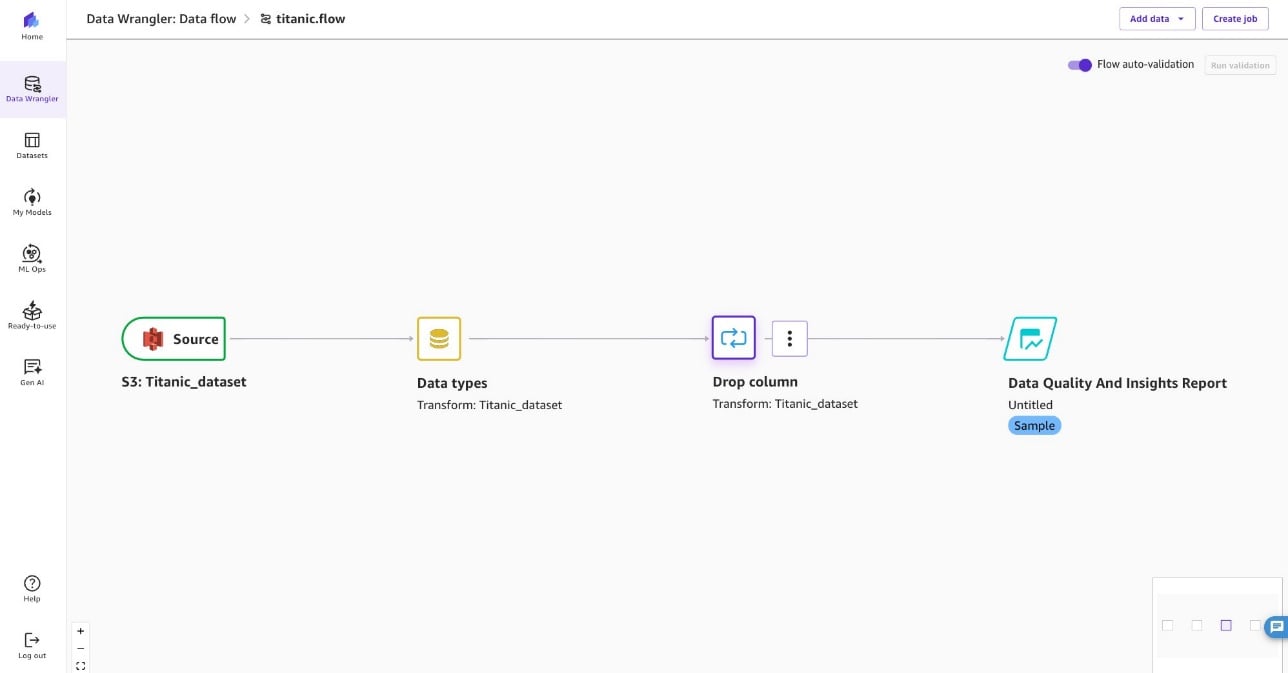

Choose one of the flows (for this example, we chose titanic.flow) to open it and start transforming data with SageMaker Data Wrangler.

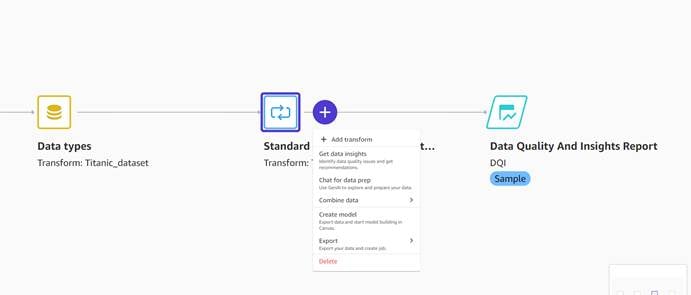

You can now add analysis and transformations to your dataflow using a visual interface (Accelerate data preparation for ML in amazon SageMaker Canvas) or a natural language interface (Use natural language to explore and prepare data with a new amazon SageMaker Canvas capability).

When you are satisfied with the data, choose the plus sign and then choose Create model, or choose Export to export the dataset for building and using ML models.

Alternative migration method

This post has shown how to use Amazon S3 to migrate SageMaker Data Wrangler flow files from a SageMaker Studio Classic environment. The documentation section Phase 3: (Optional) Migrate data from Studio Classic to Studio describes a second method that uses your local computer to transfer the flow files. You can also download individual flow files from the SageMaker Studio Classic file browser to your local computer and then import them manually into SageMaker Canvas. Choose the method that best suits your needs and use case.

Clean up

When you are done, shut down any SageMaker Data Wrangler apps running in SageMaker Studio Classic. To reduce costs, you can also delete the flow files from the SageMaker Studio Classic file browser, which is backed by an Amazon Elastic File System (Amazon EFS) volume. You can also delete the intermediate files in Amazon S3: once the flow files are imported into SageMaker Canvas, the copies in Amazon S3 are no longer needed.
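If you prefer to script the Amazon S3 cleanup, the following boto3 sketch deletes only the .flow objects under a prefix. It sends the keys in batches because the S3 DeleteObjects API accepts at most 1,000 keys per request. The function names are hypothetical; check the prefix carefully before running it, because deletions in a bucket without versioning can't be undone.

```python
def batch_keys(keys: list[str], size: int = 1000) -> list[list[str]]:
    """Split keys into chunks of at most size (DeleteObjects allows 1,000)."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [keys[i:i + size] for i in range(0, len(keys), size)]


def delete_flow_copies(bucket: str, prefix: str) -> int:
    """Delete the intermediate *.flow objects under prefix; return the count."""
    import boto3  # needed only for the live deletion

    s3 = boto3.client("s3")
    paginator = s3.get_paginator("list_objects_v2")
    # Collect keys first so deleting does not interfere with pagination.
    keys = [
        obj["Key"]
        for page in paginator.paginate(Bucket=bucket, Prefix=prefix)
        for obj in page.get("Contents", [])
        if obj["Key"].endswith(".flow")
    ]
    for chunk in batch_keys(keys):
        response = s3.delete_objects(
            Bucket=bucket,
            Delete={"Objects": [{"Key": k} for k in chunk], "Quiet": True},
        )
        # Quiet mode still reports failed keys under "Errors".
        if response.get("Errors"):
            raise RuntimeError(f"Some deletes failed: {response['Errors']}")
    return len(keys)
```

Filtering on the .flow suffix keeps the sketch from removing unrelated objects that happen to share the prefix.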

You can log out of SageMaker Canvas when you're done and then log back in when you're ready to use it again.

Conclusion

Migrating your existing SageMaker Data Wrangler flows to SageMaker Canvas is a straightforward process that lets you reuse the advanced data preparation steps you've already developed while taking advantage of the low-code, no-code, end-to-end ML workflow in SageMaker Canvas. By following the steps outlined in this post, you can move your data transformation artifacts to the SageMaker Canvas environment, streamlining your ML projects and enabling business analysts and non-technical users to build and deploy models more efficiently.

Start exploring SageMaker Canvas today and experience the power of a unified platform for data preparation, model building, and deployment!

About the authors

Charles Laughlin is a Principal AI Specialist at Amazon Web Services (AWS). He holds an MS in Supply Chain Management and a PhD in Data Science. He works on the Amazon SageMaker service team, where he brings research and customer feedback to inform the service roadmap. In his role, he collaborates daily with a variety of AWS customers to help them transform their businesses with cutting-edge AWS technologies and thought leadership.

Dan Sinnreich is a Senior Product Manager at Amazon SageMaker, focused on expanding no-code and low-code services. He is dedicated to making machine learning and generative AI more accessible and applying them to solve complex problems. Outside of work, he can be found playing hockey, scuba diving, and reading science fiction.

Huong Nguyen is a Senior Product Manager at AWS. He leads ML data preparation for SageMaker Canvas and SageMaker Data Wrangler, and has 15 years of experience building customer-centric, data-driven products.

Davide Gallitelli is a Solutions Architect specializing in AI/ML in the EMEA region. He is based in Brussels and works closely with customers across the Benelux. He has been a developer since he was very young, starting to code at the age of 7. He started learning AI/ML in his final years of university and has loved it ever since.
