In the ever-evolving field of computational linguistics, the search for models that can seamlessly generate human-like text has led researchers to explore techniques beyond traditional frameworks. One of the most promising recent avenues is diffusion models, which have already proven successful in the visual and audio domains and are now being explored for natural language generation (NLG). These models open up new possibilities for producing text that is not only contextually relevant and coherent but also varied and adaptable to different styles and tones, something many earlier methods struggled to achieve efficiently.

Earlier text generation methods often struggled to produce content that could adapt to varying requirements without extensive retraining or manual intervention. This challenge was particularly pronounced in applications that demand great versatility, such as generating dynamic website content or building custom dialogue systems, where context and style can change rapidly.

Diffusion models have emerged as a promising option in this landscape, valued for their ability to iteratively refine outputs toward high-quality solutions. However, applying them to NLG has not been straightforward, mainly because language is discrete. This discreteness complicates the diffusion process, which relies on gradual, continuous transformations, making it less natural for text than for images or audio.
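
To make the discreteness problem concrete, here is a minimal sketch of one common workaround used by continuous text diffusion models in general (not necessarily TRmyc's exact setup): map tokens to continuous embeddings, corrupt them with Gaussian noise, and "round" noisy vectors back to the nearest token. The four-word vocabulary, the 2-D embeddings, and the cosine noise schedule are illustrative assumptions.

```python
import math
import random

# Hypothetical toy vocabulary with hand-picked 2-D embeddings.
# Real models learn high-dimensional embeddings jointly with the denoiser.
EMBEDDINGS = {
    "the": (1.0, 0.0),
    "cat": (0.0, 1.0),
    "sat": (-1.0, 0.0),
    "mat": (0.0, -1.0),
}


def alpha_bar(t, T):
    """Fraction of signal kept at step t under an assumed cosine schedule (1 at t=0, ~0 at t=T)."""
    return math.cos(0.5 * math.pi * t / T) ** 2


def add_noise(vec, t, T, rng):
    """Forward process: x_t = sqrt(a) * x_0 + sqrt(1 - a) * eps, with eps ~ N(0, 1) per dimension."""
    a = alpha_bar(t, T)
    return tuple(math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in vec)


def round_to_token(vec):
    """'Rounding' step: map a continuous vector back to the closest token embedding."""
    return min(
        EMBEDDINGS,
        key=lambda w: sum((a - b) ** 2 for a, b in zip(vec, EMBEDDINGS[w])),
    )


if __name__ == "__main__":
    rng = random.Random(0)
    T = 100
    sentence = ["the", "cat", "sat"]
    for t in (0, 50, 100):
        noisy = [add_noise(EMBEDDINGS[w], t, T, rng) for w in sentence]
        # At t=0 the tokens round back exactly; at t=T they are essentially pure noise.
        print(t, [round_to_token(v) for v in noisy])
```

The rounding step is where the mismatch between continuous noise and discrete tokens shows up: small perturbations snap back to the right word, while heavy noise lands on arbitrary neighbors.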

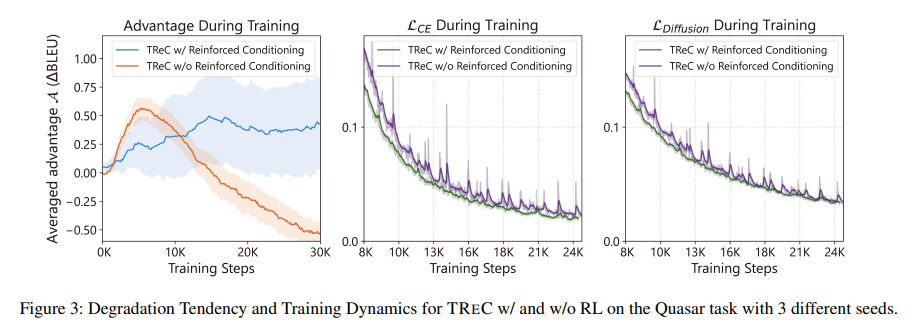

Researchers from Peking University and Microsoft Corporation presented TRmyc (Text Reinforced Conditioning), a novel text diffusion model designed to close this gap. It targets the specific challenges posed by the discrete nature of text, with the goal of exploiting the iterative refinement capability of diffusion models to improve text generation. TRmyc introduces reinforced conditioning, a strategy designed to counter the degradation of self-conditioning observed during training. Self-conditioning feeds the model's previous estimate of the clean text back in as an extra input at each denoising step; when it degrades, the model fails to fully exploit the iterative refinement potential of diffusion, relies too heavily on the quality of the early steps, and is therefore less effective.
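
The sketch below illustrates generic self-conditioning, the mechanism that reinforced conditioning is meant to protect; it is not TRmyc's reinforced variant. At each denoising step the model receives its previous estimate of the clean sequence, and during training that input is, with some probability, a first-pass estimate rather than zeros. The cosine schedule, the deterministic DDIM-style update, the probability `p=0.5`, and `toy_denoiser` are all assumptions made for illustration.

```python
import math
import random
from typing import Callable, List

Vector = List[float]
# A denoiser maps (noisy x_t, step t, previous x0 estimate) -> new x0 estimate.
Denoiser = Callable[[Vector, int, Vector], Vector]


def alpha_bar(t: int, T: int) -> float:
    """Assumed cosine noise schedule: 1.0 at t=0, close to 0 at t=T."""
    return math.cos(0.5 * math.pi * t / T) ** 2


def self_cond_input(denoise: Denoiser, x_t: Vector, t: int,
                    rng: random.Random, p: float = 0.5) -> Vector:
    """Training-time trick: with probability p, use a first-pass x0 estimate
    (computed with a zero self-conditioning input) as the conditioning signal;
    otherwise use zeros. A real framework would stop gradients through it."""
    zeros = [0.0] * len(x_t)
    if rng.random() < p:
        return denoise(x_t, t, zeros)
    return zeros


def sample_with_self_conditioning(denoise: Denoiser, dim: int, T: int,
                                  seed: int = 0) -> Vector:
    """Deterministic DDIM-style sampler that feeds each x0 estimate into the next step."""
    rng = random.Random(seed)
    x_t = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # start from pure noise
    x0_prev = [0.0] * dim                            # no estimate yet
    for t in range(T, 0, -1):
        x0_hat = denoise(x_t, t, x0_prev)
        a_t, a_prev = alpha_bar(t, T), alpha_bar(t - 1, T)
        # Noise implied by x_t and the current clean estimate.
        eps = [(xt - math.sqrt(a_t) * x0) / math.sqrt(1.0 - a_t)
               for xt, x0 in zip(x_t, x0_hat)]
        # Move to step t-1 along the same noise direction.
        x_t = [math.sqrt(a_prev) * x0 + math.sqrt(1.0 - a_prev) * e
               for x0, e in zip(x0_hat, eps)]
        x0_prev = x0_hat  # self-conditioning: reuse the estimate at the next step
    return x_t


# Hypothetical stand-in for a trained network: it already "knows" the clean
# target and moves halfway toward it from its previous estimate.
TARGET = [1.0, -2.0, 0.5]


def toy_denoiser(x_t: Vector, t: int, x0_prev: Vector) -> Vector:
    return [0.5 * p + 0.5 * g for p, g in zip(x0_prev, TARGET)]


if __name__ == "__main__":
    result = sample_with_self_conditioning(toy_denoiser, dim=3, T=50)
    print([round(v, 6) for v in result])  # [1.0, -2.0, 0.5]
```

The toy denoiser ignores `x_t` entirely, so the result converges to `TARGET` only through the self-conditioning feedback loop; a trained model would combine both inputs.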

TRmyc also employs Time-Aware Variance Scaling, which more closely aligns the training and sampling processes. This alignment is crucial for consistent output quality, because it ensures that the refinement performed during sampling reflects the conditions under which the model was trained. By addressing these two critical issues, TRmyc significantly improves the model's ability to produce high-quality, contextually relevant text sequences.

TRmyc was evaluated on a range of NLG tasks, including machine translation, paraphrasing, and question generation. The results are impressive: TRmyc not only holds its own against autoregressive and non-autoregressive baselines but also outperforms them in several cases. This performance underlines TRmyc's ability to harness the potential of diffusion processes for text generation, yielding significant improvements in the quality and contextual relevance of the generated text.

What sets TRmyc apart is both its methodology and the tangible results it achieves. In machine translation, TRmyc delivered more accurate and nuanced translations than those produced by established models. In paraphrasing and question generation, TRmyc's outputs are diverse and contextually appropriate, showing a level of adaptability and coherence that marks a significant advance in NLG.

In conclusion, the development of TRmyc is a notable step in the search for models capable of generating human-like text. By addressing two intrinsic challenges of text diffusion models, namely the degradation of self-conditioning during training and the misalignment between training and sampling, TRmyc sets a new standard for diffusion-based text generation and opens new avenues for research and application in computational linguistics. Its success across several NLG tasks illustrates the model's robustness and versatility and points toward a future in which machine-generated text, adapted to a variety of styles, tones, and contexts, may become hard to distinguish from human writing. The work reflects the ingenuity and forward thinking of the researchers behind TRmyc, which offers a glimpse of where AI-driven language generation may be heading.

Check out the Paper. All credit for this research goes to the researchers of this project.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.