Large language models (LLMs) have demonstrated unprecedented proficiency in generating and understanding language, paving the way for advances in reasoning, mathematics, physics, and other fields. But LLM training is very expensive. For example, PaLM needs 6,144 TPUv4 chips to train a 540B model, while pre-training GPT-3 175B requires several thousand petaflop/s-days of compute. This highlights the need to reduce LLM training costs, particularly for scaling the next generation of highly capable models. One of the most promising ways to cut costs is low-precision training, which offers faster processing, lower memory usage, and lower communication overhead. Most current training systems, such as Megatron-LM, MetaSeq, and Colossal-AI, train LLMs by default using FP32 full precision or FP16/BF16 mixed precision.

However, full precision is not required to reach full accuracy in large models. With the arrival of the Nvidia H100 GPU, FP8 is emerging as the next-generation data type for low-precision representation. Compared with existing 16-bit and 32-bit floating-point mixed-precision training, FP8 can theoretically deliver a 2x speedup, 50% to 75% memory savings, and 50% to 75% communication savings. These prospects are very encouraging for scaling next-generation foundation models. Unfortunately, support for FP8 training is currently scarce. Nvidia Transformer Engine (TE) is the only usable framework; however, it applies FP8 only to GEMM computation and keeps master weights and gradients in higher precision, such as FP16 or FP32. As a result, the end-to-end speedup, memory savings, and communication savings are relatively small, leaving the full potential of FP8 untapped.
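The 50% to 75% figures follow directly from storage width: an FP8 value takes one byte, against two for FP16/BF16 and four for FP32. The short Python sketch below works through that arithmetic. The helper names are illustrative, and the calculation counts only raw tensor storage, ignoring scaling factors and any tensors a real system keeps in higher precision.

```python
# Bytes needed to store one value in each floating-point format.
BYTES_PER_VALUE = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}


def storage_gib(num_values, dtype):
    """Raw storage in GiB for `num_values` values of the given dtype."""
    return num_values * BYTES_PER_VALUE[dtype] / 2**30


def saving_fraction(dtype_from, dtype_to):
    """Fraction of storage (or bytes sent) saved by switching formats."""
    return 1 - BYTES_PER_VALUE[dtype_to] / BYTES_PER_VALUE[dtype_from]


if __name__ == "__main__":
    params = 175_000_000_000  # a GPT-175B-sized weight tensor count
    for dtype in ("fp32", "bf16", "fp8"):
        print(f"{dtype}: {storage_gib(params, dtype):.1f} GiB for weights")
    print(f"fp16 -> fp8 saving: {saving_fraction('fp16', 'fp8'):.0%}")
    print(f"fp32 -> fp8 saving: {saving_fraction('fp32', 'fp8'):.0%}")
```

The same ratios apply to communication volume, since the number of bytes sent per value shrinks by the same factor.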

To address this, researchers at Microsoft Azure and Microsoft Research introduce a highly efficient FP8 mixed-precision framework for LLM training. The main idea is to use low-precision FP8 for computation, storage, and communication throughout large-model training, which significantly reduces system demands compared with previous frameworks. More precisely, they design three optimization levels that use FP8 to streamline mixed-precision and distributed training. The three levels incrementally bring in the optimizer, distributed parallel training, and 8-bit collective communication. A higher optimization level means that more of the LLM training process uses FP8. In addition, their system offers FP8 low-bit parallelism, including tensor, pipeline, and sequence parallelism. This enables large-scale training, such as GPT-175B trained on thousands of GPUs, opening the door to next-generation low-precision parallel training.

Training LLMs with FP8 is not trivial. The difficulties stem from problems such as data underflow or overflow, as well as quantization errors caused by the lower precision and narrower dynamic range of FP8 data formats. Over the course of training, these problems lead to numerical instabilities and irreversible divergence. To address them, the researchers propose two techniques: precision decoupling, which isolates the effect of data precision on parameters such as weights, gradients, and optimizer states, and automatic scaling, which avoids information loss. Precision decoupling assigns reduced precision to components that are not precision-sensitive. Automatic scaling keeps gradient values within the representable range of the FP8 data format by dynamically adjusting tensor scaling factors, which prevents underflow and overflow during all-reduce communication.
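As a rough illustration of dynamic scaling-factor adjustment, the Python sketch below shrinks a scaling factor when too many scaled values would saturate FP8 and grows it slowly otherwise, which lifts small values away from underflow. This is a simplified sketch, not the authors' implementation: the function names, the saturation threshold, and the growth rate are assumptions chosen for illustration. The only fixed fact used is that 448 is the largest finite magnitude in the FP8 E4M3 format.

```python
FP8_E4M3_MAX = 448.0  # largest finite magnitude representable in FP8 E4M3


def saturation_ratio(values, scale, fp8_max=FP8_E4M3_MAX):
    """Fraction of scaled values that would hit or exceed the FP8 maximum."""
    if not values:
        return 0.0
    hits = sum(1 for v in values if abs(v * scale) >= fp8_max)
    return hits / len(values)


def update_scale(scale, values, threshold=1e-5, growth=2 ** (1 / 1000)):
    """Return the scaling factor to use for the next step.

    `threshold` and `growth` are illustrative assumptions.
    - Too many saturated values: halve the scale to avoid overflow.
    - Otherwise: grow the scale slightly to reduce underflow risk.
    """
    if saturation_ratio(values, scale) > threshold:
        return scale / 2.0
    return scale * growth


if __name__ == "__main__":
    grads = [1000.0, 0.5, -0.25]  # toy gradient values
    scale = 1.0
    for step in range(4):
        scale = update_scale(scale, grads)
        print(f"step {step}: scale = {scale:.6f}")
```

In a real training loop the scaled tensor would be cast to FP8 before communication and divided by the same factor afterwards; that cast is omitted here because it depends on hardware or library support.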

To validate the approach, they apply the proposed FP8 low-precision framework to the training of GPT-style models, covering both pre-training and supervised fine-tuning. Compared with the widely used BF16 mixed-precision training approach, the experiments show significant improvements, including a 27% to 42% decrease in real memory usage and a notable 63% to 65% decrease in weight-gradient communication overhead. On both pre-training and downstream tasks, models trained with FP8 perform on par with those trained in higher-precision BF16, without any changes to hyperparameters such as learning rate and weight decay. Notably, when training the GPT-175B model on the H100 GPU platform, their FP8 mixed-precision framework uses 21% less memory and 17% less training time than TE.

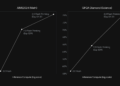

Figure 1: A comparison of the largest model sizes achievable on a cluster of Nvidia H100 GPUs with 80GB of memory, using the proposed FP8 mixed-precision training method versus the more widely used BF16 method.

More significantly, as seen in Fig. 1, the cost savings from low-precision FP8 grow further as model size increases. To better align pre-trained LLMs with end tasks and user preferences, they also apply FP8 mixed precision to instruction tuning and reinforcement learning from human feedback (RLHF). In particular, they fine-tune pre-trained models on publicly available, user-shared instruction-following data. Models tuned with their FP8 mixed precision perform comparably to those tuned with half-precision BF16 on the AlpacaEval and MT-Bench benchmarks, while training 27% faster. Furthermore, FP8 mixed precision shows great promise in RLHF, a procedure that requires loading multiple models during training.

Using FP8 during training, the popular RLHF framework AlpacaFarm can achieve a 46% reduction in memory for model weights and a 62% reduction in memory for optimizer states. This further demonstrates the flexibility and adaptability of their FP8 low-precision training architecture. Their contributions toward FP8 low-precision training for the next generation of LLMs are as follows.

• A new FP8 mixed-precision training framework. It is easy to use and gradually unlocks 8-bit weights, gradients, optimizer, and distributed training in an add-on fashion. The 8-bit framework can replace existing 16/32-bit mixed-precision counterparts without changes to hyperparameters or training recipes. They also provide a PyTorch implementation that enables 8-bit low-precision training with just a few lines of code.

• A new family of GPT-style models trained with FP8. They demonstrate the proposed FP8 scheme across model sizes from 7B to 175B parameters, applying it to GPT pre-training and fine-tuning. They add FP8 support to popular parallel computing paradigms (tensor, pipeline, and sequence parallelism), allowing FP8 to be used to train massive foundation models. The first FP8 GPT training codebase, built on the Megatron-LM implementation, is publicly available. They expect their FP8 framework to set a new standard for low-precision training systems targeting the next generation of large foundation models.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.


Aneesh Tickoo is a consulting intern at MarktechPost. She is currently pursuing her bachelor’s degree in Data Science and Artificial Intelligence at the Indian Institute of Technology (IIT), Bhilai. She spends most of her time working on projects aimed at harnessing the power of machine learning. Her research interest is image processing, and she is passionate about building solutions around it. She loves connecting with people and collaborating on interesting projects.

