Historically, language model development has operated on the premise that larger models perform better. Breaking with this established belief, researchers from Microsoft Research's Machine Learning Foundations team introduced Phi-2, a language model with 2.7 billion parameters. The model runs counter to the conventional scaling expectations that have long guided the field, challenging the widely held notion that size is the sole determinant of a model's language processing capabilities.

This research addresses the prevailing assumption that superior performance requires larger models. The researchers present Phi-2 as a departure from that norm. The article highlights the distinctive attributes of Phi-2 and the methods used in its development. Unlike conventional approaches, Phi-2 relies on carefully curated, high-quality training data and leverages knowledge transfer from smaller models, challenging established assumptions about language model scale.

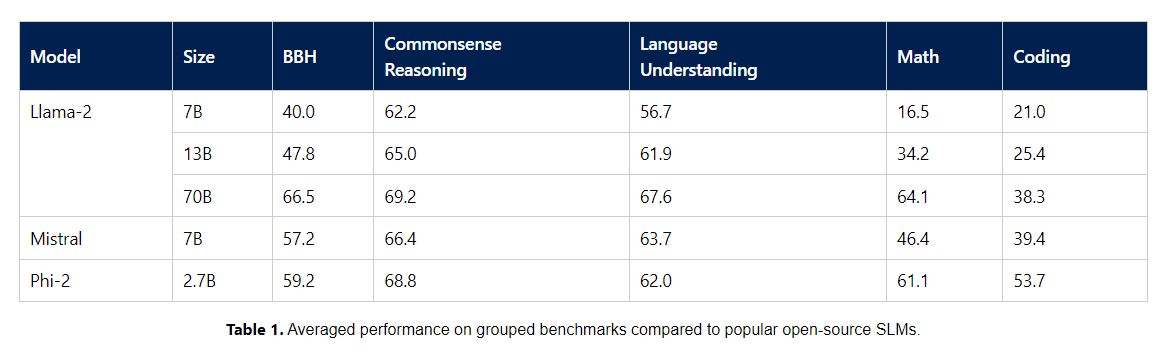

Phi-2's methodology rests on two fundamental ideas. First, the researchers emphasize the central role of training data quality, using carefully designed “textbook-quality” data to instill reasoning, knowledge, and common sense in the model. Second, they apply techniques for scaled knowledge transfer, starting from the 1.3-billion-parameter Phi-1.5. The article also covers the architecture of Phi-2, a Transformer-based model with a next-word prediction objective, trained on web and synthetic datasets. Despite its modest size, Phi-2 outperforms larger models on various benchmarks, underscoring its efficiency and capabilities.
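To make the next-word prediction objective concrete, here is a minimal sketch in plain Python. It is not Phi-2's training code: the function names `softmax` and `next_token_loss`, the toy vocabulary size, and the hand-written score vectors are all assumptions made for illustration. It only shows the general idea that, at each position, the model's scores over the vocabulary are compared against the token that actually comes next.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_loss(logits_per_position, token_ids):
    """Average cross-entropy of predicting token t+1 from position t.

    logits_per_position[t] is a hypothetical model's score vector over the
    vocabulary after reading token_ids[0..t]; the target at position t is
    token_ids[t + 1].
    """
    targets = token_ids[1:]
    losses = []
    for logits, target in zip(logits_per_position, targets):
        probs = softmax(logits)
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)


# Toy sequence over a 4-token vocabulary (values are illustrative only).
tokens = [0, 1, 2]

# A model with no preference among tokens scores them all equally.
uninformed = [[0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0]]

# A model that puts most of its score on the correct next token.
informed = [[0.0, 5.0, 0.0, 0.0], [0.0, 0.0, 5.0, 0.0]]

loss_uninformed = next_token_loss(uninformed, tokens)
loss_informed = next_token_loss(informed, tokens)
```

In this toy setup the uninformed scores give a loss equal to the natural log of the vocabulary size, and the informed scores give a lower loss. Training a model on this objective means adjusting its parameters so that the loss falls across the training data.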

In conclusion, the Microsoft Research team presents Phi-2 as a transformative force in the development of language models. The model challenges the long-held industry belief that model capabilities are intrinsically linked to size. This shift encourages new perspectives and avenues of research, emphasizing the efficiency that can be achieved without strictly adhering to conventional scaling laws. Phi-2's combination of high-quality training data and innovative scaling techniques marks a significant advance in natural language processing, promising new possibilities and safer language models for the future.

Madhur Garg is a consulting intern at MarktechPost. He is currently pursuing his Bachelor's degree in Civil and Environmental Engineering at the Indian Institute of Technology (IIT), Patna. He has a strong passion for machine learning and enjoys exploring the latest advances in technology and their practical applications. With a keen interest in artificial intelligence and its various applications, Madhur is determined to contribute to the field of data science and harness its potential impact across industries.
