Large multimodal models (LMMs) have the potential to revolutionize the way machines interact with human languages and visual information, offering more intuitive and natural ways for machines to understand our world. The central challenge in multimodal learning is accurately interpreting and synthesizing information from textual and visual inputs. This is complex because each modality has different properties that must be understood and then integrated into a cohesive representation.

Current research focuses on autoregressive LLMs for vision-language learning and on how to exploit LLMs effectively by treating visual cues as conditional information. This work also includes fine-tuning LMMs on visual instruction tuning data to improve their zero-shot capabilities. Small-scale LLMs have been developed to reduce computing overhead, and current models such as Phi-2, TinyLlama, and StableLM-2 achieve impressive performance while maintaining reasonable computing budgets.

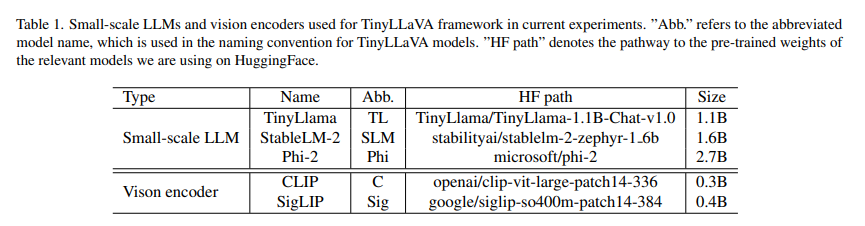

Researchers from Beihang University and Tsinghua University in China have introduced TinyLLaVA, a novel framework that uses small-scale LLMs for multimodal tasks. The framework comprises a vision encoder, a small-scale LLM decoder, an intermediate connector, and custom training pipelines. TinyLLaVA aims to achieve high performance in multimodal learning while minimizing computational demands.
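To make the data flow between these components concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not TinyLLaVA's actual code: the function names, the toy dimensions, and the single linear layer used as the connector are all hypothetical, and the "vision encoder" is a random projection standing in for a model such as CLIP or SigLIP. The point is only that visual features are projected into the LLM's embedding space and then placed in the same sequence as the text embeddings.

```python
import random

def linear(vec, weight):
    """Multiply a vector of length d_in by a d_in x d_out weight matrix."""
    d_out = len(weight[0])
    return [sum(v * row[j] for v, row in zip(vec, weight)) for j in range(d_out)]

def init_weight(d_in, d_out, rng):
    """Small random weights; a real model would load pretrained values."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(d_out)] for _ in range(d_in)]

def toy_vision_encoder(image_patches, weight):
    """Hypothetical stand-in for a vision encoder: one feature vector per patch."""
    return [linear(patch, weight) for patch in image_patches]

def connector(patch_features, weight):
    """Project visual features into the LLM embedding space (assumed linear here)."""
    return [linear(feature, weight) for feature in patch_features]

def build_llm_input(visual_tokens, text_embeddings):
    """Prepend the visual tokens to the text embeddings as one input sequence."""
    return visual_tokens + text_embeddings

rng = random.Random(0)
PATCH_DIM, VISION_DIM, LLM_DIM = 6, 4, 8  # toy sizes, chosen for illustration

patches = [[rng.random() for _ in range(PATCH_DIM)] for _ in range(3)]
text_embeddings = [[rng.random() for _ in range(LLM_DIM)] for _ in range(5)]

encoder_weight = init_weight(PATCH_DIM, VISION_DIM, rng)
connector_weight = init_weight(VISION_DIM, LLM_DIM, rng)

visual_tokens = connector(toy_vision_encoder(patches, encoder_weight), connector_weight)
sequence = build_llm_input(visual_tokens, text_embeddings)

print(len(sequence), len(sequence[0]))  # 8 8
```

The small LLM decoder would then consume `sequence` autoregressively; that step is omitted here because it does not change how the three components connect.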

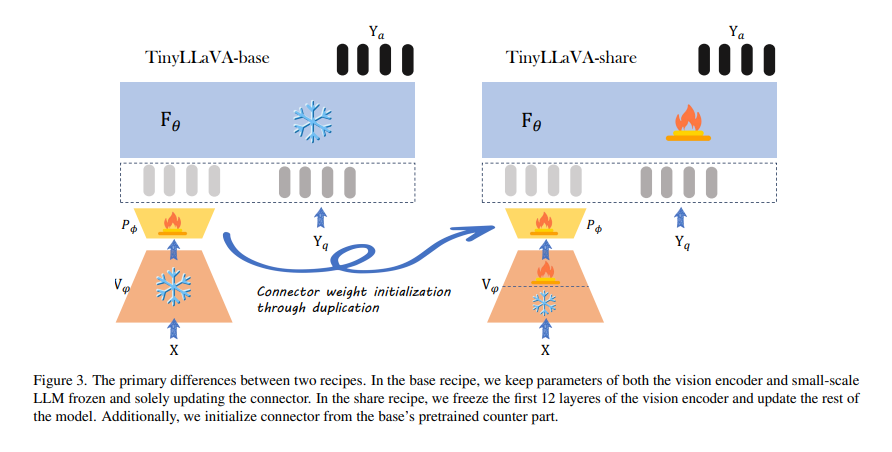

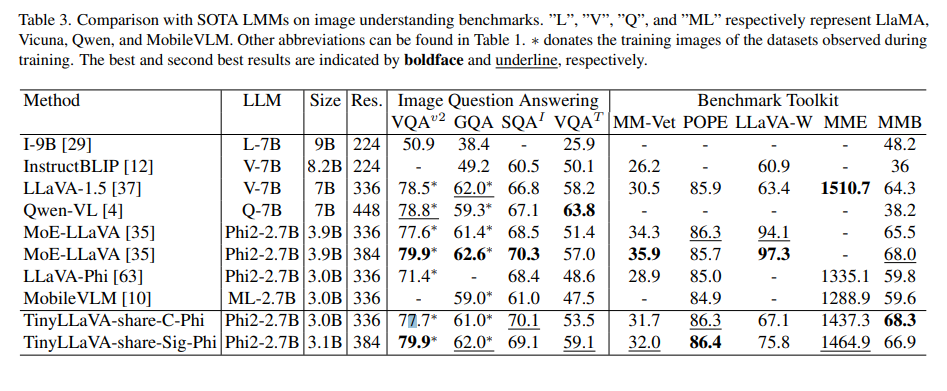

The framework trains a family of small-scale LMMs, with the best model, TinyLLaVA-3.1B, outperforming existing 7B models such as LLaVA-1.5 and Qwen-VL. It combines vision encoders such as CLIP-Large and SigLIP with small-scale LLMs for better performance. The training data consists of two different datasets, LLaVA-1.5 and ShareGPT4V, which are used to study the impact of data quality on LMM performance. The framework also allows a subset of the LLM and vision encoder parameters to be made learnable during the supervised fine-tuning stage. Finally, it provides a unified analysis of how model selection, training recipes, and data contribute to small-scale LMM performance.
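The idea of partially learnable parameters can be sketched as a rule that decides, per parameter, whether it is updated during fine-tuning. The snippet below is a hypothetical illustration: the parameter names, the layer counts, and the cutoff `vision_unfreeze_from` are assumptions made for the example and are not taken from the TinyLLaVA code or paper. In a framework such as PyTorch, flags like these would typically be applied by setting each parameter's `requires_grad` attribute.

```python
def layer_index(name):
    """Extract the layer number from a name like 'vision.layers.3.weight'."""
    return int(name.split(".")[2])

def trainable_flags(param_names, vision_unfreeze_from, tune_llm=True):
    """Map each parameter name to True (trainable) or False (frozen)."""
    flags = {}
    for name in param_names:
        if name.startswith("connector."):
            flags[name] = True  # the connector is always trained in this sketch
        elif name.startswith("vision."):
            # Freeze early vision layers, tune the later ones (assumed cutoff).
            flags[name] = layer_index(name) >= vision_unfreeze_from
        elif name.startswith("llm."):
            flags[name] = tune_llm
        else:
            flags[name] = False  # unknown parameters stay frozen
    return flags

# Hypothetical parameter names for a tiny model.
names = (
    [f"vision.layers.{i}.weight" for i in range(4)]
    + ["connector.weight"]
    + [f"llm.layers.{i}.weight" for i in range(2)]
)

flags = trainable_flags(names, vision_unfreeze_from=2)
trainable = [name for name, is_on in flags.items() if is_on]
print(trainable)
```

With `vision_unfreeze_from=2`, the first two vision layers stay frozen while the later vision layers, the connector, and the LLM layers are tuned. Passing `tune_llm=False` would freeze the LLM as well.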

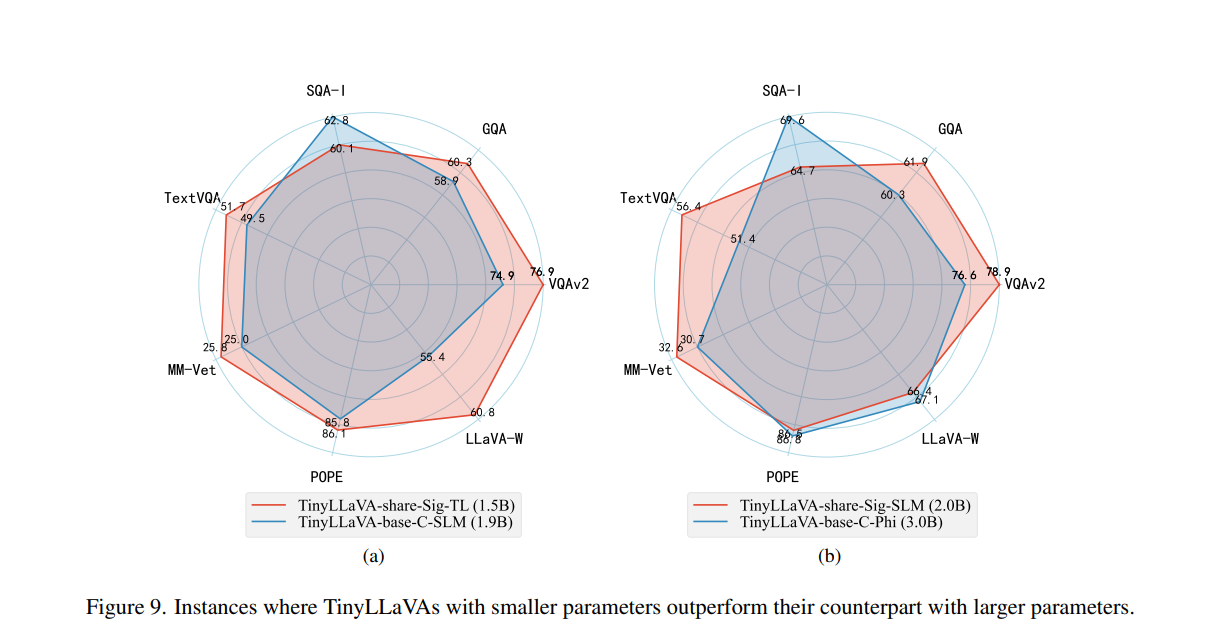

The experiments revealed important findings: model variants employing larger LLMs and the SigLIP vision encoder demonstrated superior performance. The shared recipe, which includes vision encoder tuning, improved the effectiveness of all model variants. Among the notable results, the TinyLLaVA-share-Sig-Phi variant, with 3.1 billion parameters, outperformed the larger 7-billion-parameter LLaVA-1.5 model across comprehensive benchmarks, showing the potential of smaller LMMs when optimized with proper data and training methodologies.

In conclusion, TinyLLaVA represents an important step forward in multimodal learning. By leveraging small-scale LLMs, the framework offers a more accessible and efficient approach to integrating language and visual information. This development improves our understanding of multimodal systems and opens new possibilities for their application in real-world scenarios. The success of TinyLLaVA underlines the importance of innovative solutions to enhance the capabilities of artificial intelligence.


Nikhil is an internal consultant at Marktechpost. He is pursuing an integrated double degree in Materials at the Indian Institute of Technology Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.
