Atmospheric science and meteorology have recently made progress in modeling local weather and climate phenomena by capturing the fine-scale dynamics crucial for accurate forecasting and planning. Small-scale atmospheric physics, including the intricate details of storm patterns, temperature gradients, and localized events, requires high-resolution data to be represented accurately. These finer details matter in applications ranging from daily weather forecasts to regional planning for disaster resilience. Emerging machine learning techniques have made it possible to generate high-resolution simulations from lower-resolution data, improving both the prediction of such details and regional atmospheric models.

A major challenge in this area is the large gap between the resolution of the available large-scale inputs and the higher resolution needed to capture fine atmospheric details. Large-scale weather data often come at coarse resolutions that miss the finer nuances needed for localized predictions. The contrast between large-scale deterministic dynamics, such as broad temperature changes, and smaller, stochastic features, such as thunderstorms or localized precipitation, complicates modeling further. In addition, the limited availability of observational data restricts what existing models can learn and often leads to overfitting when they attempt to represent complex atmospheric behavior.

Existing approaches to these challenges include flow matching and conditional diffusion models, which have produced impressive fine detail in image processing tasks. However, these methods fall short in atmospheric modeling, where spatial alignment and multiscale dynamics are particularly complex. Earlier work relied on residual learning: one model first predicts the deterministic component, and a second model then super-resolves the residual details that capture small-scale dynamics. This two-stage approach, while valuable, risks overfitting, especially with limited data, and needs a mechanism for balancing the deterministic and stochastic parts of the data. As a result, many existing models struggle to balance these components, particularly when the large-scale inputs are misaligned with the fine-scale targets.

To overcome these limitations, a research team from NVIDIA and Imperial College London introduced a novel approach called Stochastic Flow Matching (SFM). SFM is designed for the particular demands of atmospheric data, such as spatial misalignment and the complex multiscale physics inherent in meteorological fields. It first encodes the coarse input into a latent base distribution that lies close to the target fine-scale data, which improves alignment before flow matching is applied. Flow matching then generates realistic small-scale features by transporting samples from this encoded distribution to the target distribution. According to the authors, this design lets SFM maintain high fidelity while mitigating overfitting, making it more robust than existing diffusion models.
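
The paragraph above combines two ideas: a base distribution centred on an encoded estimate of the fine field, and flow matching that transports samples from that base to the target. The Python sketch below illustrates both under simplifying assumptions of our own: fields are flat lists of floats, the hypothetical `upsample_nearest` function stands in for SFM's learned encoder, the base distribution is a Gaussian with a fixed scale `sigma`, and the path between base and target is the straight-line interpolation commonly used in flow matching. It is not the authors' implementation.

```python
import random


def upsample_nearest(coarse, factor):
    """Hypothetical stand-in for SFM's learned encoder: repeat each coarse value."""
    return [value for value in coarse for _ in range(factor)]


def flow_matching_pair(coarse, fine, encoder, sigma, rng):
    """Build one training example for a velocity network (illustrative sketch).

    The base sample x0 is centred on the encoder output rather than on pure noise,
    so the flow only has to add the residual small-scale detail.
    """
    mean = encoder(coarse)                                # deterministic estimate
    x0 = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]  # sample from the base
    t = rng.random()                                      # time in [0, 1)
    xt = [(1 - t) * a + t * b for a, b in zip(x0, fine)]  # straight-line path
    v_target = [b - a for a, b in zip(x0, fine)]          # regression target
    return t, xt, v_target


def euler_sample(x0, velocity, steps=10):
    """Transport a base sample to t = 1 by integrating dx/dt = velocity(x, t)."""
    x = list(x0)
    dt = 1.0 / steps
    for k in range(steps):
        v = velocity(x, k * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x
```

In training, a neural network (not shown) would be regressed onto `v_target` at `(xt, t)`; at inference, `euler_sample` would integrate that learned field starting from a base sample. Because the base is already centred near the target, the transport path the network has to learn is short.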

The SFM methodology comprises an encoder that translates coarse-resolution data into a latent distribution that approximates the fine-scale target data. This step captures the deterministic patterns, which serve as a basis for adding small-scale stochastic details through flow matching. To handle uncertainty and reduce overfitting, SFM incorporates adaptive noise scaling, a mechanism that adjusts the amount of noise according to the encoder's prediction errors. Using maximum likelihood estimation, SFM balances deterministic and stochastic influences, refining the model's ability to generate accurate fine-scale details. This lets the model adapt to variability within the data and avoid over-relying on the deterministic estimate, which could otherwise introduce errors.
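
The article does not spell out the exact noise rule, so the sketch below makes one simple assumption: the encoder's residual (fine field minus encoder output) is modelled as independent Gaussian noise with one scale per variable, whose maximum likelihood estimate is the root-mean-square residual. The helper names and channel names are hypothetical. Under this assumption, a channel the encoder predicts poorly receives a wider base distribution, leaving more room for the stochastic flow-matching step.

```python
import math


def gaussian_nll(residuals, sigma):
    """Average negative log-likelihood of residuals under N(0, sigma^2)."""
    n = len(residuals)
    sq = sum(r * r for r in residuals)
    return 0.5 * math.log(2 * math.pi * sigma ** 2) + sq / (2 * sigma ** 2 * n)


def mle_sigma(fine, encoded):
    """Closed-form maximizer of the Gaussian likelihood: the RMS encoder residual."""
    residuals = [f - e for f, e in zip(fine, encoded)]
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))


def per_channel_sigma(fine_by_channel, encoded_by_channel, floor=1e-6):
    """Estimate one noise scale per variable; the floor avoids a degenerate zero."""
    return {
        name: max(mle_sigma(fine_by_channel[name], encoded_by_channel[name]), floor)
        for name in fine_by_channel
    }
```

A single scalar per channel is the simplest choice; a spatially varying scale would follow the same likelihood argument with one estimate per grid point or region.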

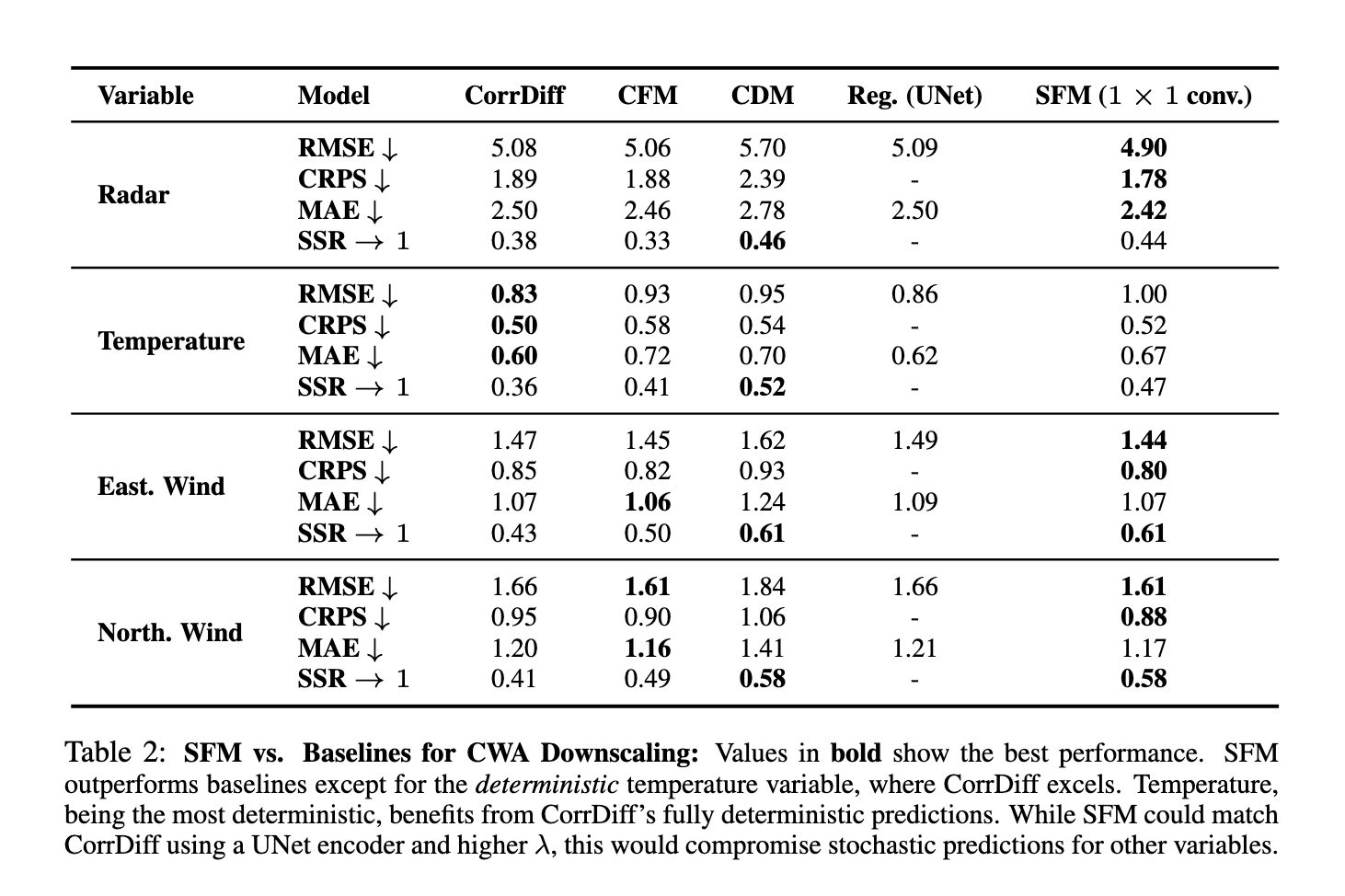

The research team conducted extensive experiments on synthetic and real-world datasets, including a meteorological dataset from Taiwan's Central Weather Administration (CWA). The results showed a significant improvement of SFM over conventional methods. For example, on the Taiwan dataset, which involves super-resolving coarse meteorological variables from 25 km to 2 km scales, SFM achieved better results on multiple metrics, including root mean square error (RMSE), continuous ranked probability score (CRPS), and spread-skill ratio (SSR). For radar reflectivity, a channel that is absent from the inputs and must be generated entirely, SFM outperformed the baselines by a notable margin, with improved spectral fidelity and accurate capture of high-frequency details. SFM also kept RMSE lower than the baselines, while its SSR values close to 1.0 indicated that it was better calibrated, striking a good balance between ensemble spread and accuracy.
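
For readers unfamiliar with these three metrics, the sketch below computes them for an ensemble of generated fields against one observed field. It follows common textbook definitions rather than the paper's evaluation code: RMSE of the ensemble mean, the standard ensemble estimator of CRPS, and SSR as the root mean ensemble variance divided by that RMSE. Some studies add a finite-ensemble correction factor to SSR, which is omitted here. An SSR near 1 means the ensemble spread matches the actual error, so the ensemble is neither over- nor under-confident.

```python
import math


def ensemble_mean(ensemble):
    """Pointwise mean over members; `ensemble` is a list of equal-length fields."""
    m = len(ensemble)
    return [sum(values) / m for values in zip(*ensemble)]


def rmse(ensemble, obs):
    """Root mean square error of the ensemble mean against the observed field."""
    mean = ensemble_mean(ensemble)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(mean, obs)) / len(obs))


def crps(ensemble, obs):
    """Ensemble CRPS: E|X - y| - 0.5 * E|X - X'|, averaged over grid points."""
    m = len(ensemble)
    total = 0.0
    for j, y in enumerate(obs):
        xs = [member[j] for member in ensemble]
        skill_term = sum(abs(x - y) for x in xs) / m
        spread_term = sum(abs(a - b) for a in xs for b in xs) / (2 * m * m)
        total += skill_term - spread_term
    return total / len(obs)


def spread_skill_ratio(ensemble, obs):
    """Root mean ensemble variance divided by the RMSE of the ensemble mean."""
    m = len(ensemble)
    if m < 2:
        raise ValueError("spread needs at least two ensemble members")
    mean = ensemble_mean(ensemble)
    variance_sum = 0.0
    for j, mu in enumerate(mean):
        variance_sum += sum((member[j] - mu) ** 2 for member in ensemble) / (m - 1)
    spread = math.sqrt(variance_sum / len(obs))
    return spread / rmse(ensemble, obs)
```

Lower RMSE and CRPS are better, while SSR is judged by its distance from 1: values well below 1 signal an overconfident ensemble, values well above 1 an overdispersed one.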

Spectral analysis further illustrated SFM's advantage: its spectra closely matched the ground truth for several climate variables. While other models, such as conditional diffusion and flow matching baselines, struggled to achieve high fidelity, SFM consistently produced accurate representations of small-scale dynamics. For example, SFM effectively reconstructed high-frequency radar reflectivity (absent from the input variables), illustrating its ability to generate new, physically consistent data channels. Moreover, SFM achieved these results without compromising calibration, producing a well-calibrated ensemble that supports probabilistic forecasting in uncertain atmospheric conditions.
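
Spectral comparisons of this kind check whether generated fields carry the right amount of variance at each spatial scale; blurry outputs lose power at high wavenumbers. The sketch below is a one-dimensional simplification of our own (published comparisons typically use radially averaged 2D spectra), computed with a direct discrete Fourier transform for clarity rather than speed. The helper `high_frequency_power_ratio` is hypothetical: a value near 1 means the prediction reproduces the ground truth's small-scale power, and a value near 0 means that detail is missing.

```python
import cmath
import math


def power_spectrum(field):
    """Power at wavenumbers k = 0..n//2 via a direct O(n^2) DFT of a 1D field."""
    n = len(field)
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(field))
        spectrum.append(abs(coeff) ** 2 / n)
    return spectrum


def high_frequency_power_ratio(predicted, truth, k_min):
    """Share of the truth's power at wavenumbers >= k_min that the prediction carries."""
    pred_power = sum(power_spectrum(predicted)[k_min:])
    true_power = sum(power_spectrum(truth)[k_min:])
    return pred_power / true_power
```

For real gridded fields, an FFT routine and radial binning of 2D wavenumbers would replace the direct transform, but the comparison logic stays the same.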

Through its framework, SFM addresses the persistent problem of reconciling low- and high-resolution data in atmospheric modeling, striking a careful balance between deterministic and stochastic elements. By providing high-fidelity downscaling, SFM opens up new possibilities for advanced weather simulations, supporting improved climate resilience and localized weather predictions. The method marks a significant advance in atmospheric science and sets a new benchmark in accuracy for high-resolution weather data, especially where conventional models are limited by data scarcity and resolution misalignment.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter. Don't forget to join our 55k+ ML SubReddit.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.