Recent developments in artificial intelligence (AI) have been truly remarkable, with rapid advances in deep learning and other machine learning techniques leading to advances in a wide range of applications. One of the mentioned applications refers to the estimation of the pose of an object.

Object pose estimation is a field of computer vision that aims to determine the location and orientation of objects in an image or video sequence. It is a crucial task for many applications, such as augmented reality, robotics, and autonomous driving. Object position estimation can be performed using a variety of techniques, including 2D keypoint detection and 3D reconstruction. The ultimate goal of object pose estimation is to provide a rich representation of the objects in the scene, including their position and orientation, shape, size, and texture.

Object pose estimation is crucial for immersive human-object interactions in augmented reality (AR). The AR scenario demands the estimation of the pose of arbitrary household objects in our daily life. However, most of the existing methods are based on high-fidelity CAD models of objects or require the training of a separate network for each category of objects. The instance or category specific nature of these methods limits their applicability in real world applications.

Recent techniques have been investigated to overcome these problems and limitations.

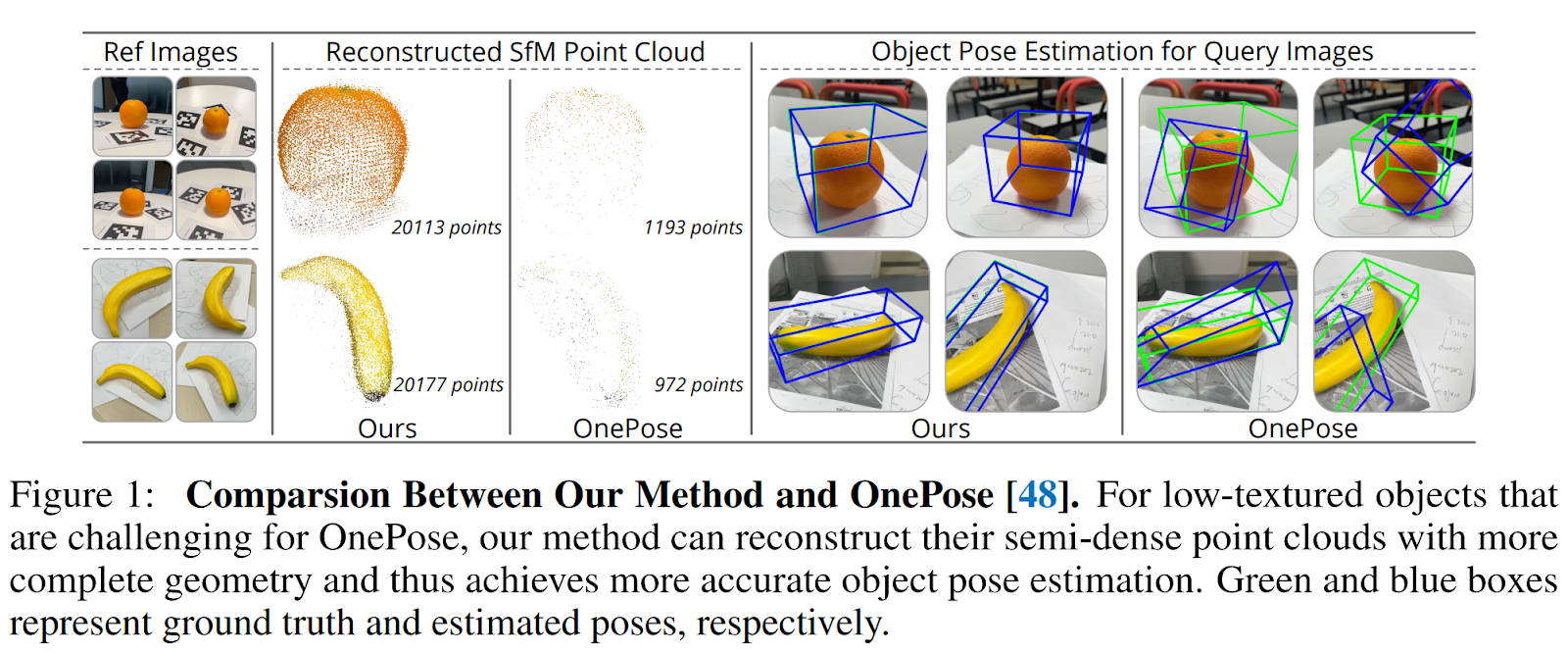

OnePose aims to simplify the process of estimating object poses for AR applications by eliminating the need for CAD models and category-specific training. Instead, it only requires a video sequence with annotated object poses. OnePose uses a feature matching approach that reconstructs point clouds of sparse objects, establishes 2D-3D correspondences between key points, and estimates the object’s pose. However, this method has problems with low-texture objects, as full point clouds are difficult to reconstruct with a keypoint-based structure from motion (SfM), leading to pose estimation failures. .

Based on the challenges mentioned above, OnePose++ has been developed. Its architecture is presented in the following figure.

OnePose++ leverages a feature-matching pipeline without keypoints on top of OnePose to handle low-texture objects. First, it reconstructs the correct semi-dense object point cloud from reference photographs. It then resolves the object pose for the test images by mapping 2D-3D from coarse to fine shape.

An adapted version of the LoFTR method is exploited to achieve feature matching. It is a semi-dense technique without keypoints that works exceptionally well for matching image pairs and identifying correspondences in regions with little texture. It uses the regular grid centers in the image on the left as key points and finds precise sub-pixel matches in the image on the right through a coarse-to-fine process. However, the two-view dependent nature of LoFTR leads to inconsistent key points and incomplete feature clues. As a result, the feature match method without keypoints cannot be used directly in OnePose for object pose estimation.

To take advantage of both methods, a novel system has been developed to adapt the keypoint-free matching technique for single shot object pose estimation. The authors propose a sparse-to-dense 2D-3D matching network that efficiently establishes accurate 2D-3D correspondences for pose estimation, taking full advantage of architecture’s keypoint-less design. More specifically, to better adapt LoFTR to SfM, they designed a coarse-to-fine scheme for accurate and complete reconstruction of semi-dense objects. The LoFTR coarse-to-fine structure is then disassembled and integrated into the reconstruction pipeline. Furthermore, self-attention and cross-attention are used to model the long-range dependencies required for robust 2D-3D comparison and pose estimation of complex real-world objects, which typically contain repetitive patterns or low-texture regions.

The following figure offers a comparison between the proposed approach and OnePose.

This was the brief for OnePose++, a novel one-shot object pose estimation framework without AI keypoints without CAD models.

If you are interested or would like more information on this framework, you can find a link to the document and the project page.

review the Paper, Github, and Project. All credit for this research goes to the researchers of this project. Also, don’t forget to join our 13k+ ML SubReddit, discord channel, and electronic newsletterwhere we share the latest AI research news, exciting AI projects, and more.

Daniele Lorenzi received his M.Sc. in ICT for Internet and Multimedia Engineering in 2021 from the University of Padua, Italy. He is a Ph.D. candidate at the Institute of Information Technology (ITEC) at the Alpen-Adria-Universität (AAU) Klagenfurt. He currently works at the Christian Doppler ATHENA Laboratory and his research interests include adaptive video streaming, immersive media, machine learning and QoS / QoE evaluation.

NEWSLETTER

NEWSLETTER

Read our latest AI newsletter

Read our latest AI newsletter