Predicting outcomes from a multitude of parameters has traditionally relied on specific, narrowly focused regression methods. While effective within their scope, these specialized approaches often fall short when faced with the complexity and diversity inherent in real-world experiments. The challenge therefore lies not simply in prediction but in creating a tool versatile enough to work across a wide spectrum of tasks, each with its own parameters and results, without having to be tailored to each one.

Regression tools have been developed to address this predictive task, using statistical techniques and neural networks to estimate results from input parameters. These tools, including Gaussian processes, tree-based methods, and neural networks, have shown promise in their respective fields. However, they run into limitations when generalizing across diverse experiments or adapting to scenarios that call for multi-task learning, and they often need extensive feature engineering or elaborate normalization to work effectively.

OmniPred is a framework developed collaboratively by researchers at Google DeepMind, Carnegie Mellon University, and Google. It reconceptualizes the role of language models, turning them into universal end-to-end regressors. Its key idea is to represent mathematical parameters and values as text, which lets a single model predict metrics across a variety of experimental designs. Trained on data from Google Vizier's large dataset, OmniPred performs accurate numerical regression and, according to the researchers, significantly outperforms traditional regression models in both versatility and accuracy.

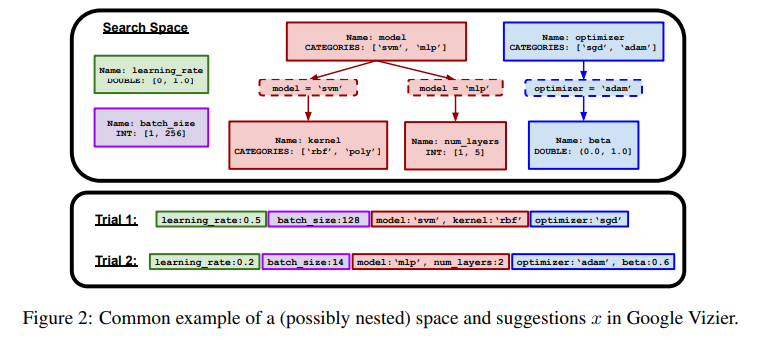

The core of OmniPred is a simple yet scalable metric prediction framework that eschews constraint-dependent representations in favor of generalizable textual inputs. Because parameters and values are passed as text rather than as fixed-size feature vectors, the same model can handle experimental design data from tasks with very different input spaces. Multi-task learning strengthens the framework further: training on many tasks at once, through textual and token-based representations, allows it to surpass conventional regression models.
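To make the idea of textual and token-based representations concrete, here is a minimal sketch of how parameters might be flattened into a string and how a numeric metric might be split into sign, digit, and exponent tokens. The `key:value` layout, the token shapes such as `<E-2>`, and the function names `serialize_params`, `float_to_tokens`, and `tokens_to_float` are all illustrative assumptions, not OmniPred's actual format or API.

```python
def serialize_params(params: dict) -> str:
    """Flatten a parameter dict into a 'key:value' string (hypothetical format)."""
    # Sorting keys keeps the text stable regardless of insertion order.
    return ",".join(f"{key}:{params[key]}" for key in sorted(params))


def float_to_tokens(y: float, digits: int = 4) -> list:
    """Encode y as a sign token, `digits` mantissa tokens, and an exponent token."""
    if y == 0:
        return ["<+>"] + ["<0>"] * digits + ["<E0>"]
    sign = "<+>" if y > 0 else "<->"
    # Scientific notation, e.g. 72.5 -> '7.250e+01' when digits == 4.
    mantissa, exp = f"{abs(y):.{digits - 1}e}".split("e")
    digit_chars = mantissa.replace(".", "")
    # Shift the exponent so the value equals int(digit_chars) * 10**exponent.
    exponent = int(exp) - (digits - 1)
    return [sign] + [f"<{d}>" for d in digit_chars] + [f"<E{exponent}>"]


def tokens_to_float(tokens: list) -> float:
    """Invert float_to_tokens (up to the rounding applied during encoding)."""
    sign = 1.0 if tokens[0] == "<+>" else -1.0
    mantissa = "".join(t[1:-1] for t in tokens[1:-1])  # strip '<' and '>'
    exponent = tokens[-1][2:-1]                        # strip '<E' and '>'
    return sign * float(f"{mantissa}e{exponent}")


if __name__ == "__main__":
    x_text = serialize_params({"lr": 0.001, "layers": 3})
    y_tokens = float_to_tokens(72.5)
    print(x_text)
    print(y_tokens)
    print(tokens_to_float(y_tokens))
```

Because both sides are plain text or tokens, tasks with different parameter names and counts can share one model without a common feature vector or per-task rescaling of the target, which is the property the paragraph above describes.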

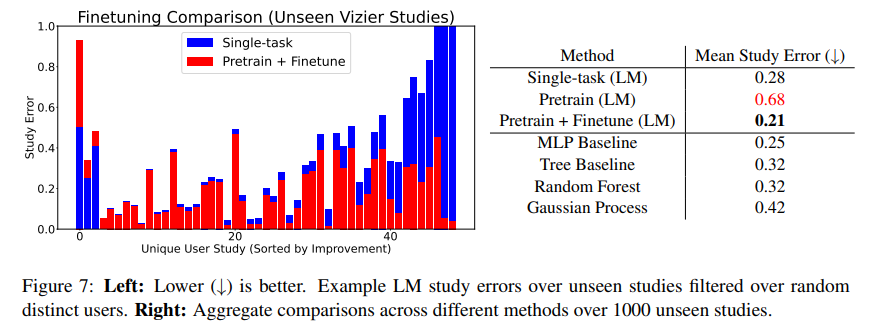

The framework's ability to process textual representations, together with its scalability, sets a new standard for metric prediction. In experiments on the Google Vizier dataset, OmniPred showed significant improvement over baseline models, highlighting both the advantage of multi-task learning and the potential of fine-tuning to improve accuracy on unseen tasks.

Taken together, these findings show the potential of integrating language models into experimental design. OmniPred offers:

- A revolutionary approach to regression that leverages the nuanced capabilities of language models for universal metric prediction.

- Demonstrated superiority over traditional regression models, with significant improvements in accuracy and adaptability across various tasks.

- The ability to transcend the limitations of fixed input representations, offering a flexible and scalable solution for experimental design.

- A framework encompassing multi-task learning, showing the benefits of transfer learning even in the face of unseen tasks, further augmented by the potential for localized fine-tuning.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.


Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.
