The rapid development of large language models (LLMs) has transformed natural language processing (NLP). Proprietary models such as GPT-4 and Claude 3 have set high standards for performance, but they often come with drawbacks such as high costs, limited accessibility, and opaque methodologies. Meanwhile, many so-called open-source models fail to fully embody the ideals of openness: they withhold key elements such as training data and fine-tuning processes, and often apply restrictive licenses. These practices hinder innovation, reduce reproducibility, and complicate adoption across industries. Addressing these barriers is crucial to fostering trust, collaboration, and progress in the AI ecosystem.

Introducing Moxin LLM 7B

Researchers from Northeastern University, Harvard University, Cornell University, Tulane University, University of Washington, Roboraction.ai, Futurewei Technologies, and AIBAO LLC have released Moxin LLM 7B to address these challenges, guided by the principles of transparency and inclusivity. Developed under the Model Openness Framework (MOF), it provides full access to its pre-training code, datasets, configurations, and intermediate checkpoints. This fully open-source model is available in two versions, Base and Chat, and achieves the MOF's highest classification, "open science." With a 32k-token context size and features like Grouped Query Attention (GQA) and Sliding Window Attention (SWA), Moxin LLM 7B offers a robust yet affordable option for coding and NLP applications. It is a valuable tool for researchers, developers, and companies looking for flexible, high-performance solutions.

Technical innovations and key benefits

Moxin LLM 7B builds on the Mistral architecture, extending it to a 36-block design. It uses GQA to improve memory efficiency and SWA to process long sequences effectively. A rolling buffer cache further optimizes memory usage, making the model well suited to extended contexts in real-world applications.
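To make these three mechanisms more concrete, here is a minimal, framework-free Python sketch. It is not taken from the Moxin codebase: the function and class names are hypothetical, and the window size and head counts used in the usage note are illustrative assumptions rather than confirmed Moxin settings. It shows which positions a sliding-window causal mask allows, how a rolling buffer cache overwrites its oldest entry once the window is full, and how the number of key/value heads drives cache size under GQA.

```python
from dataclasses import dataclass, field


def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Causal (j <= i) and limited to the most recent `window` positions.
    """
    return [
        [0 <= i - j < window for j in range(seq_len)]
        for i in range(seq_len)
    ]


@dataclass
class RollingBufferCache:
    """Fixed-size cache: the entry for position p lives in slot p % window."""

    window: int
    slots: list = field(default_factory=list)
    next_pos: int = 0

    def append(self, kv) -> None:
        if len(self.slots) < self.window:
            # Still filling the buffer for the first time.
            self.slots.append(kv)
        else:
            # Buffer is full: overwrite the oldest entry in place.
            self.slots[self.next_pos % self.window] = kv
        self.next_pos += 1

    def contents(self) -> list:
        """Return cached entries ordered from oldest to newest."""
        if len(self.slots) < self.window:
            return list(self.slots)
        start = self.next_pos % self.window
        return self.slots[start:] + self.slots[:start]


def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   cached_tokens: int, bytes_per_value: int = 2) -> int:
    """Approximate KV-cache size; the leading 2 counts keys plus values."""
    return 2 * layers * kv_heads * head_dim * cached_tokens * bytes_per_value
```

As a usage example under assumed values, `kv_cache_bytes(36, 8, 128, 4096)` versus `kv_cache_bytes(36, 32, 128, 4096)` compares 8 shared key/value heads against 32 full heads for a 36-block model: the GQA variant needs one quarter of the cache. Because the rolling buffer never holds more than `window` entries, that cache size stays constant no matter how long the generated sequence becomes.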

The model's training process relies on carefully selected data sources, including SlimPajama and DCLM-BASELINE for text, and The Stack for code. Leveraging Colossal-AI's advanced parallelization techniques, the model was trained on over 2 trillion tokens across three phases, each of which progressively increased the context length and refined specific capabilities.

These design choices yield several key benefits. First, the open-source nature of Moxin LLM 7B allows for customization and adaptability across various domains. Second, its strong performance in few-shot and zero-shot evaluations demonstrates its ability to handle complex reasoning, coding, and multitask challenges. Finally, the model's balance between computational efficiency and output quality makes it practical for both research and real-world use cases.

Performance information

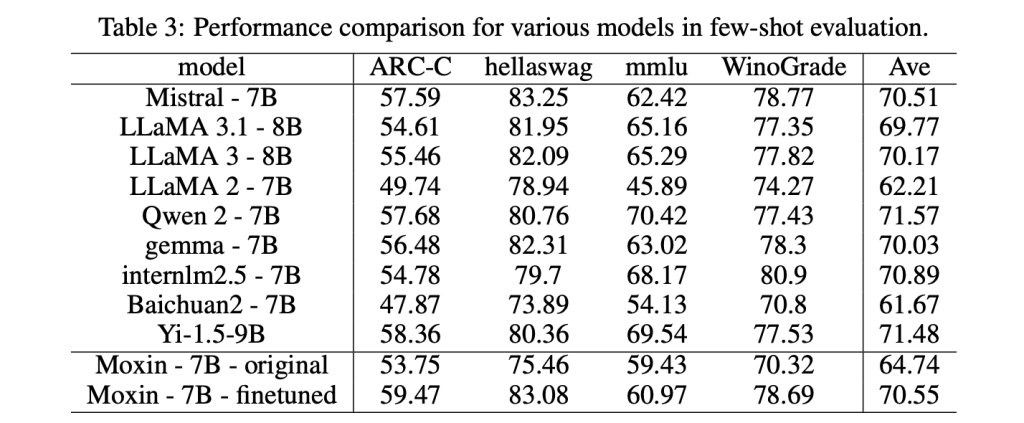

Moxin LLM 7B has been rigorously evaluated against comparable models. In zero-shot settings, it outperforms alternatives like LLaMA 2-7B and Gemma-7B on benchmarks including the AI2 Reasoning Challenge, HellaSwag, and PIQA. For example, the fine-tuned version achieves 82.24% on PIQA, a significant improvement over existing state-of-the-art models.

The model's few-shot evaluation results further underscore its strengths, particularly in tasks that require advanced reasoning and domain-specific knowledge. Evaluations using MT-Bench highlight Moxin Chat 7B's capabilities as an interactive assistant, with competitive scores that often rival those of larger proprietary models.

Conclusion

Moxin LLM 7B stands out as a significant contribution to the open-source LLM landscape. By fully adopting the principles of the Model Openness Framework, it addresses critical issues of transparency, reproducibility, and accessibility that often challenge other models. With its technical sophistication, robust performance, and commitment to openness, Moxin LLM 7B offers a compelling alternative to proprietary solutions. As the role of AI continues to grow across industries, models like Moxin LLM 7B lay the foundation for a more collaborative, inclusive, and innovative future in natural language processing and beyond.

Check out the Paper, GitHub page, Base model, and Chat model. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable to a wide audience. The platform has more than 2 million monthly visits, which illustrates its popularity among the public.
