As social beings, humans communicate and express themselves on a daily basis through their behavior and accessories. With the expansion of social life to the online realm through social networks and games, virtual representations of users, often called avatars, have become increasingly important for social presence. The consequence is a growing demand for digital clothing and accessories.

Among these accessories, glasses are one of the most common, worn by billions of people around the world. Modeling glasses in isolation, however, is not enough to achieve realism: not only their shape must be taken into account, but also their interaction with the face. Glasses and faces are not rigid and deform each other at the points of contact, so their shapes cannot be determined independently. Their appearance is also affected by global light transport: shadows and interreflections can appear and change the observed radiance. A computational approach is therefore needed to model these interactions and achieve photorealism.

Traditional methods use powerful 2D generative models to synthesize different glasses in the image domain. While these methods can create photorealistic images, the absence of 3D information causes visual and temporal inconsistencies in the results.

Recently, neural rendering approaches have been investigated to achieve photorealistic, 3D-consistent rendering of human heads and of general objects.

Although these approaches can be extended to model faces and eyeglasses together, the interactions between the objects are not considered, leading to implausible compositions.

Unsupervised learning can also be used to generate composite 3D models from a collection of images. However, the lack of prior structure on faces or glasses leads to suboptimal fidelity.

In addition, none of these approaches is relightable, which means that the generated glasses will look inconsistent under new lighting conditions.

To overcome these problems, a new AI model called the Morphable Eyeglass and Avatar Network (MEGANE) has been developed.

An overview of the strategy is shown in the following figure.

Unlike existing approaches, MEGANE is morphable and relightable, and it models the shape and appearance of eyeglass frames as well as their interaction with faces. A hybrid representation combines surface geometry with a volumetric representation to offer both shape customization and rendering efficiency.

This hybrid representation makes the structure easily deformable to fit different head shapes and offers direct correspondences across glasses. Furthermore, the model is paired with a high-fidelity generative model of the human head. In this way, the glasses models can specialize in deformations and appearance changes.
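To give a feel for the volumetric half of such a hybrid representation, here is a minimal sketch of front-to-back alpha compositing along a single camera ray. It is a generic illustration, not the authors' renderer: the function name `composite_ray`, the `(rgb, alpha)` sample format, and the early-exit threshold are all assumptions made for this example.

```python
def composite_ray(samples, min_transmittance=1e-4):
    """Front-to-back alpha compositing along one ray (illustrative only).

    samples: list of (rgb, alpha) pairs ordered from near to far, where
             rgb is a 3-tuple in [0, 1] and alpha is the opacity in [0, 1].
    Returns (rgb, accumulated_alpha).
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light that still reaches the camera
    for rgb, alpha in samples:
        weight = transmittance * alpha  # contribution of this sample
        for c in range(3):
            color[c] += weight * rgb[c]
        transmittance *= 1.0 - alpha
        if transmittance < min_transmittance:
            break  # everything behind this point is effectively hidden
    return tuple(color), 1.0 - transmittance


# Hypothetical example: a half-transparent red tint in front of an opaque blue surface.
rgb, alpha = composite_ray([((1.0, 0.0, 0.0), 0.5), ((0.0, 0.0, 1.0), 1.0)])
print(rgb, alpha)
```

Because each sample is weighted by the transmittance accumulated in front of it, semi-transparent material such as a tinted lens naturally blends with whatever lies behind it.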

The authors propose glasses-conditioned deformation and appearance networks for the morphable face model to incorporate the interaction effects caused by wearing glasses.

In addition, MEGANE includes an analytical lens model, which adds photorealistic reflections and refractions to the lenses.
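The article does not describe the lens model in detail, so the following is only a sketch of the textbook optics that an analytical treatment of reflection and refraction usually builds on: Snell's law for the refracted direction and Schlick's approximation for the fraction of light that is reflected. The function names and the refractive index of 1.5 for the lens are assumptions for illustration, not values from the paper.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def reflect(incident, normal):
    """Mirror a unit direction about a unit surface normal."""
    d = dot(incident, normal)
    return tuple(i - 2.0 * d * n for i, n in zip(incident, normal))


def refract(incident, normal, n1, n2):
    """Refract a unit direction with Snell's law.

    incident points toward the surface, normal points against it.
    Returns None when total internal reflection occurs.
    """
    eta = n1 / n2
    cos_i = -dot(incident, normal)
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None  # total internal reflection
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * i + (eta * cos_i - cos_t) * n
                 for i, n in zip(incident, normal))


def schlick_reflectance(cos_i, n1, n2):
    """Schlick's approximation of the Fresnel reflectance."""
    r0 = ((n1 - n2) / (n1 + n2)) ** 2
    return r0 + (1.0 - r0) * (1.0 - cos_i) ** 5


# Hypothetical example: a ray hitting an air-to-lens interface at 45 degrees.
s = math.sqrt(0.5)
incident, normal = (s, 0.0, -s), (0.0, 0.0, 1.0)
print(refract(incident, normal, 1.0, 1.5))
print(reflect(incident, normal))
print(schlick_reflectance(s, 1.0, 1.5))
```

A renderer built on these pieces would blend the reflected and refracted contributions using the reflectance as the mixing weight, which is what makes lenses look more mirror-like at grazing angles.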

To render glasses and faces together under novel illumination, the authors incorporate a physically inspired neural relighting technique into their generative model, which infers the output radiance given the viewing and lighting conditions. Based on this relighting technique, the properties of different materials, including translucent plastic and metal, can be emulated within a single model.

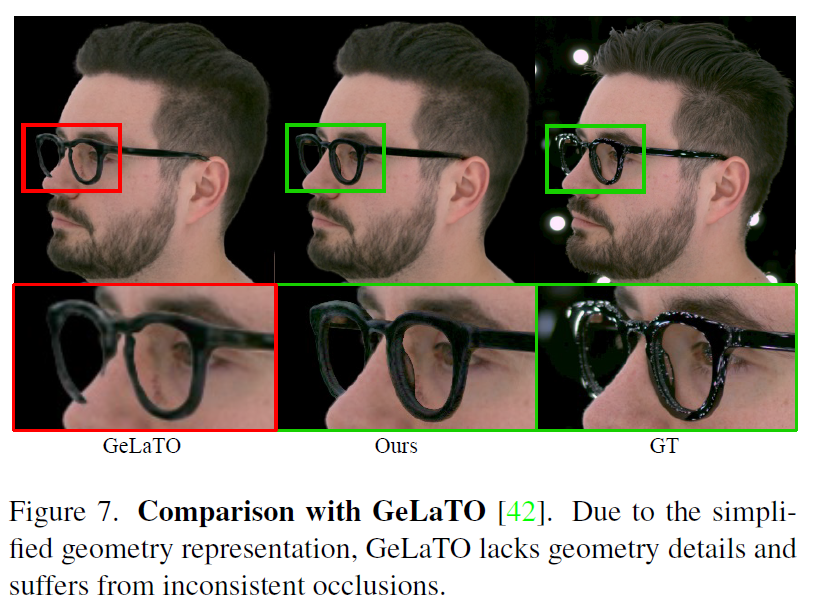

Results compared with the state-of-the-art approach GeLaTO are reported below.

Looking closely at the comparison, and according to the authors, GeLaTO lacks geometric detail and generates incorrect occlusion boundaries where the face and the glasses interact. MEGANE, on the other hand, achieves detailed and realistic results.

This was a summary of a new AI framework for 3D-aware combination with generative neural radiance fields (NeRFs).

If you are interested or would like more information on this framework, check out the paper and the project page. All credit for this research goes to the researchers of this project.

Daniele Lorenzi received his M.Sc. in ICT for Internet and Multimedia Engineering in 2021 from the University of Padua, Italy. He is a Ph.D. candidate at the Institute of Information Technology (ITEC) at the Alpen-Adria-Universität (AAU) Klagenfurt. He currently works at the Christian Doppler ATHENA Laboratory and his research interests include adaptive video streaming, immersive media, machine learning and QoS / QoE evaluation.
