One of the most interesting developments in this field is the investigation of state space models (SSMs) as an alternative to the widely used Transformer networks. These SSMs, which rely on gating, convolutions, and input-dependent token selection, aim to avoid the cost of multi-head attention in Transformers, which grows quadratically with sequence length. Despite their promising performance, the in-context learning (ICL) capabilities of SSMs have not yet been fully explored, especially in comparison to their Transformer counterparts.
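To make the cost gap concrete, here is a rough back-of-the-envelope sketch. It only counts query-key score computations for one attention head and state updates for a recurrence; the function names are hypothetical, and the counts ignore hidden size, constant factors, and hardware effects.

```python
def attention_score_count(seq_len: int, causal: bool = True) -> int:
    """Number of query-key dot products one attention head computes."""
    if causal:
        # Token t attends to tokens 0..t, so it needs t + 1 scores.
        return seq_len * (seq_len + 1) // 2
    return seq_len * seq_len


def recurrent_update_count(seq_len: int) -> int:
    """Number of state updates a linear recurrence performs: one per token."""
    return seq_len


# Doubling the sequence length roughly quadruples the attention count,
# while the recurrence count only doubles.
for n in (1_000, 2_000, 4_000):
    print(n, attention_score_count(n), recurrent_update_count(n))
```

This is only an operation count, not a benchmark; real runtimes depend heavily on implementation details.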

The crux of this research lies in improving the ICL capabilities of AI models, that is, their ability to learn new tasks from a few examples without any parameter updates. This capability is essential for developing more versatile and efficient AI systems. However, current models, especially those based on Transformer architectures, face scalability challenges and high computational demands. These limitations motivate the search for alternative models that can achieve similar or superior ICL performance without the associated computational burden.
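As an illustration of what an ICL task looks like, the sketch below builds a single prompt: a handful of input-output examples from a hidden function, followed by a query whose output the model must predict without any weight updates. The choice of a linear function, the Gaussian sampling, and the name `make_icl_prompt` are assumptions made for illustration, not details taken from the research described here.

```python
import random


def make_icl_prompt(num_examples: int, dim: int, seed: int = 0):
    """Build one in-context prompt: (x, f(x)) pairs plus a held-out query."""
    rng = random.Random(seed)
    # Hidden task parameters. The model never sees these directly
    # (assumption: the task is a random linear function).
    weights = [rng.gauss(0.0, 1.0) for _ in range(dim)]

    def f(x):
        return sum(w * xi for w, xi in zip(weights, x))

    examples = []
    for _ in range(num_examples):
        x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        examples.append((x, f(x)))

    query = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    return examples, query, f(query)


examples, query, target = make_icl_prompt(num_examples=5, dim=3)
print(len(examples), "in-context examples, query dimension", len(query))
```

A model with good ICL ability would read the five pairs, infer the hidden function from them, and output a value close to `target` for the query.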

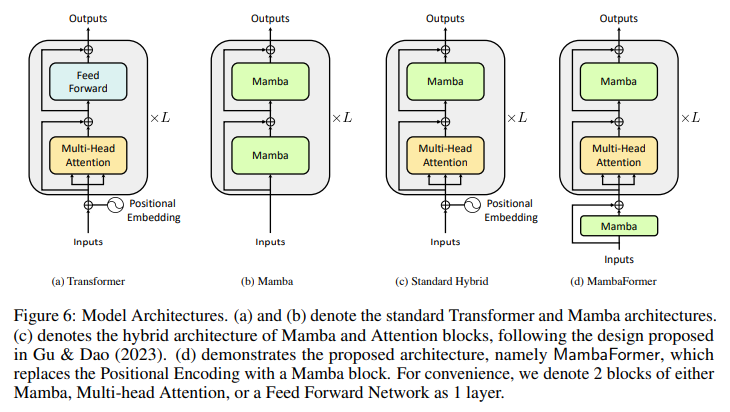

MambaFormer is proposed by researchers from KRAFTON, Seoul National University, the University of Wisconsin-Madison, and the University of Michigan. This hybrid model combines Mamba SSM blocks with attention blocks from Transformer models, producing an architecture designed to succeed on tasks where either component fails on its own. By eliminating the need for positional encodings and integrating the strengths of SSMs and Transformers, MambaFormer offers a promising new direction for improving ICL capabilities in language models.
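The toy sketch below gives one intuition for how a recurrent block placed before attention can stand in for positional encodings. It is not the MambaFormer architecture: `recurrent_mix` is a fixed-decay linear recurrence standing in for a Mamba block (no gating or input-dependent selection), the attention uses identity projections, and the block ordering is an assumption made for illustration.

```python
import math


def recurrent_mix(xs, decay=0.5):
    """Toy SSM stand-in: h_t = decay * h_{t-1} + x_t, applied per channel."""
    state = [0.0] * len(xs[0])
    out = []
    for x in xs:
        state = [decay * s + xi for s, xi in zip(state, x)]
        out.append(list(state))
    return out


def causal_attention(xs):
    """Single-head causal self-attention, identity projections, no positions."""
    dim = len(xs[0])
    out = []
    for t, q in enumerate(xs):
        # Scores against the current and all earlier tokens only.
        scores = [sum(a * b for a, b in zip(q, xs[s])) / math.sqrt(dim)
                  for s in range(t + 1)]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]  # stable softmax
        total = sum(weights)
        out.append([sum(w * xs[s][j] for s, w in enumerate(weights)) / total
                    for j in range(dim)])
    return out


def hybrid_layer(xs):
    """Recurrence first, then attention with a residual connection."""
    mixed = recurrent_mix(xs)
    attended = causal_attention(mixed)
    return [[m + a for m, a in zip(mi, ai)] for mi, ai in zip(mixed, attended)]


tokens = [[1.0, 0.0]] * 3  # three identical tokens
print(causal_attention(tokens))  # attention alone cannot tell positions apart
print(hybrid_layer(tokens))      # the recurrence makes each position distinct
```

With identical input tokens, attention alone returns the same vector at every position, while the hybrid layer returns a different vector at each position because the recurrent state already encodes how many tokens came before.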

By focusing on a diverse set of ICL tasks, the researchers evaluated and compared the performance of SSMs, Transformer models, and the proposed hybrid model. This evaluation revealed that while SSMs and Transformers each have strengths, each also has limitations that hinder performance on certain ICL tasks. MambaFormer's hybrid architecture was designed to address these shortcomings, leveraging the combined strengths of its constituent models to achieve strong performance across a broad spectrum of tasks.

On tasks where standalone SSMs or Transformer models struggled, such as sparse parity learning and complex retrieval tasks, MambaFormer performed well. This result highlights the versatility and efficiency of the model and underlines the potential of hybrid architectures to overcome the limitations of existing AI models. MambaFormer's ability to handle a wide range of ICL tasks without the need for positional encodings marks an important step forward in the development of more adaptive and efficient AI systems.
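For readers unfamiliar with sparse parity learning, the sketch below generates data for it under the standard definition: each input is a vector of random bits, and the label is the parity of a small secret subset of coordinates. The ±1 encoding, the function name, and the specific sizes are illustrative assumptions, not the experimental setup of the research.

```python
import random


def make_sparse_parity_data(num_samples, dim, support, seed=0):
    """Sample (x, y) pairs where y is the parity of x on the secret `support`."""
    rng = random.Random(seed)
    data = []
    for _ in range(num_samples):
        x = [rng.choice((-1, 1)) for _ in range(dim)]
        # With a +1/-1 encoding, parity is the product of the chosen bits.
        y = 1
        for i in support:
            y *= x[i]
        data.append((x, y))
    return data


# 10-bit inputs, but only coordinates 1 and 4 determine the label.
for x, y in make_sparse_parity_data(num_samples=4, dim=10, support=[1, 4]):
    print(x, "->", y)
```

The task is hard because the learner must discover which few coordinates matter; every other bit is pure noise with respect to the label.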

As we reflect on the contributions of this research, several key insights emerge:

- The development of MambaFormer illustrates the potential of hybrid models to advance the field of in-context learning. By combining the strengths of SSM and Transformer models, MambaFormer addresses the limitations of each, offering a versatile and powerful new tool for AI research.

- The performance of MambaFormer on various ICL tasks shows the efficiency and adaptability of the model. This underscores the importance of innovative architectural designs in creating AI systems.

- The success of MambaFormer opens new avenues for research, particularly in exploring how hybrid architectures can be further optimized for in-context learning. The findings also suggest that these models could benefit other areas of AI beyond language modeling.

In conclusion, the research on MambaFormer highlights the largely unexplored potential of hybrid models in AI and sets a new benchmark for in-context learning. As AI continues to evolve, exploring models like MambaFormer will be important for overcoming the challenges facing current technologies and unlocking new possibilities for the future of artificial intelligence.

All credit for this research goes to the researchers of this project.

Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and I want to create new products that make a difference.
