Artificial intelligence models have recently become very powerful, driven by the growing size of the datasets used for training and by the computational power available to run them.

Larger models with more resources generally achieve higher accuracy than smaller architectures. Dataset size matters in a similar way: small datasets hurt neural network performance because the sample size is small relative to the variability of the data, or because the classes are unevenly represented.

However, while capabilities and accuracy increase, these models are typically restricted to a few specific tasks (for example, content generation, image inpainting, image outpainting, or frame interpolation).

To overcome this limitation, a novel framework called MAsked Generative Video Transformer (MAGVIT), which supports ten different generation tasks, has been proposed.

As reported by the authors, MAGVIT was developed to address frame prediction (FP), frame interpolation (FI), central outpainting (OPC), vertical outpainting (OPV), horizontal outpainting (OPH), dynamic outpainting (OPD), central inpainting (IPC), dynamic inpainting (IPD), class-conditional generation (CG), and class-conditional frame prediction (CFP).

An overview of the architecture pipeline is presented in the following figure.

In a nutshell, the idea behind the proposed framework is to train a transformer-based model to recover a corrupted input. Here, the corruption is modeled as masked tokens, which correspond to parts of the input frames.

Specifically, MAGVIT models a video as a sequence of visual tokens in latent space and learns to predict masked tokens with BERT (Bidirectional Encoder Representations from Transformers), a transformer-based machine learning approach originally designed for natural language processing (NLP).
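BERT-style masked token modeling can be illustrated in a few lines: a random subset of the token sequence is replaced by a special mask token, and the model is trained to predict the original tokens only at those positions. The sketch below is a minimal illustration assuming integer token ids; `MASK_ID`, `bert_style_mask`, and the fixed mask ratio are hypothetical choices, and MAGVIT's actual masking scheme is more elaborate.

```python
import random

MASK_ID = -1  # hypothetical id standing in for the special [MASK] token


def bert_style_mask(tokens, mask_ratio, seed=0):
    """Replace a random fraction of tokens with MASK_ID.

    Returns the corrupted sequence and the sorted positions the model must predict.
    """
    rng = random.Random(seed)
    num_masked = max(1, round(len(tokens) * mask_ratio))
    positions = sorted(rng.sample(range(len(tokens)), num_masked))
    corrupted = list(tokens)
    for p in positions:
        corrupted[p] = MASK_ID
    return corrupted, positions


tokens = [12, 7, 7, 3, 40, 12, 5, 9]
corrupted, targets = bert_style_mask(tokens, mask_ratio=0.5)
# A training loss would be computed only at `targets`, where the label is tokens[p].
```

Restricting the loss to masked positions is what forces the model to infer missing content from the visible context rather than copying its input.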

There are two main modules in the proposed framework.

First, a 3D vector-quantized (VQ) encoder produces vector embeddings (tokens) by quantizing and flattening the video into a sequence of discrete tokens.
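At its core, vector quantization is a nearest-neighbour lookup into a codebook: each latent vector is replaced by the index of the closest codebook entry, which turns continuous features into discrete tokens. The plain-Python sketch below assumes the encoder has already produced a T × H × W grid of latent vectors; the codebook values and helper names are hypothetical, and a real 3D-VQ model learns its codebook during training and operates on tensors.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def flatten_grid(grid):
    """Flatten a T x H x W grid of latent vectors in (t, h, w) raster order."""
    return [vec for frame in grid for row in frame for vec in row]


def quantize(latents, codebook):
    """Replace each latent vector with the index of its nearest codebook entry."""
    return [
        min(range(len(codebook)), key=lambda k: squared_distance(vec, codebook[k]))
        for vec in latents
    ]


# Hypothetical 3-entry codebook of 2-D embeddings (a trained model learns these).
codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
# Toy encoder output for a clip with T=2 frames and H=1, W=2 latent positions.
grid = [
    [[[0.1, -0.2], [0.9, 1.1]]],
    [[[0.2, 0.8], [1.0, 0.9]]],
]
token_ids = quantize(flatten_grid(grid), codebook)
print(token_ids)  # [0, 1, 2, 1]
```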

2D and 3D convolutional layers are combined with 2D and 3D upsampling and downsampling layers to capture spatial and temporal dependencies efficiently.

Downsampling is performed by the encoder, while upsampling is performed by the decoder, whose goal is to reconstruct the video from the sequence of tokens provided by the encoder.
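A quick way to see why downsampling matters is to count the tokens the encoder emits for a clip. The sketch below assumes hypothetical downsampling factors of 4 in time and 8 in space and a 16-frame 128 × 128 input; these are illustrative values chosen here, not figures taken from this article. The decoder reverses the same factors when upsampling.

```python
def token_grid_shape(frames, height, width, temporal_factor, spatial_factor):
    """Return the (T', H', W') latent grid after downsampling by the given factors."""
    for size, factor in ((frames, temporal_factor), (height, spatial_factor), (width, spatial_factor)):
        if size % factor != 0:
            raise ValueError(f"size {size} is not divisible by factor {factor}")
    return frames // temporal_factor, height // spatial_factor, width // spatial_factor


def upsampled_shape(grid_shape, temporal_factor, spatial_factor):
    """Invert token_grid_shape: the decoder upsamples back to pixel resolution."""
    t, h, w = grid_shape
    return t * temporal_factor, h * spatial_factor, w * spatial_factor


shape = token_grid_shape(16, 128, 128, temporal_factor=4, spatial_factor=8)
num_tokens = shape[0] * shape[1] * shape[2]
print(shape, num_tokens)  # (4, 16, 16) 1024
```

Under these assumptions, halving the spatial factor from 8 to 4 would produce a 4 × 32 × 32 grid, quadrupling the sequence length the transformer has to model.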

Second, a masked token modeling (MTM) scheme is used for multi-task video generation.

Unlike conventional MTM for image/video synthesis, the authors propose an embedding method that models the video condition using a multivariate mask.

The multivariate masking scheme makes it easier to learn video generation tasks under different conditions.

Conditions can be a spatial region for inpainting/outpainting or a few frames for frame prediction/interpolation.
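The different conditions can be pictured as masks over the T × H × W token grid: positions marked as given keep their tokens, and all other positions are replaced by a mask token for the model to fill in. The sketch below is a simplified binary version under stated assumptions: the task names, `MASK_ID`, and the `border` width are hypothetical, and it does not reproduce the multivariate mask embedding proposed by the authors, which distinguishes more than "given" and "to be generated".

```python
MASK_ID = -1  # hypothetical id for the [MASK] token


def condition_mask(task, t, h, w, num_cond_frames=1, border=1):
    """Return a nested T x H x W list where True marks a conditioning (given) token.

    Hypothetical task names:
      "frame_prediction":    the first `num_cond_frames` frames are given.
      "frame_interpolation": the first and last frames are given.
      "central_outpainting": a central region is given; the border is generated.
      "central_inpainting":  everything except a central region is given.
    """
    def given(ti, hi, wi):
        in_center = border <= hi < h - border and border <= wi < w - border
        if task == "frame_prediction":
            return ti < num_cond_frames
        if task == "frame_interpolation":
            return ti == 0 or ti == t - 1
        if task == "central_outpainting":
            return in_center
        if task == "central_inpainting":
            return not in_center
        raise ValueError(f"unknown task: {task}")

    return [[[given(ti, hi, wi) for wi in range(w)] for hi in range(h)] for ti in range(t)]


def apply_condition(tokens, mask):
    """Keep conditioning tokens and replace every other position with MASK_ID."""
    return [
        [[tok if keep else MASK_ID for tok, keep in zip(tok_row, mask_row)]
         for tok_row, mask_row in zip(tok_frame, mask_frame)]
        for tok_frame, mask_frame in zip(tokens, mask)
    ]


# Toy 3 x 4 x 4 token grid whose ids encode their (t, h, w) position.
tokens = [[[100 * ti + 10 * hi + wi for wi in range(4)] for hi in range(4)] for ti in range(3)]
mask = condition_mask("central_inpainting", 3, 4, 4)
conditioned = apply_condition(tokens, mask)
print(conditioned[0][1])  # [10, -1, -1, 13]
```

With this representation, switching tasks only means switching masks, which is the intuition behind training one model for many conditional generation tasks.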

The output video is generated from the masked conditioning tokens and refined iteratively, with each step building on the predictions made in the previous one.
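One common way to implement this kind of iterative refinement is MaskGIT-style non-autoregressive decoding: at each step the model predicts every masked token, the most confident predictions are committed, and the rest stay masked for the next step, following a schedule that shrinks to zero. The sketch below assumes that technique with a cosine schedule; the article does not specify MAGVIT's exact schedule or step count, and `predict` is a hypothetical stand-in for the transformer.

```python
import math

MASK_ID = -1  # hypothetical id for the [MASK] token


def cosine_schedule(step, total_steps):
    """Fraction of the generated tokens that stay masked after `step` (1-based)."""
    return math.cos(math.pi / 2 * step / total_steps)


def iterative_decode(tokens, predict, total_steps=4):
    """Fill MASK_ID positions over `total_steps` rounds, committing the most confident guesses.

    `predict(tokens)` is a hypothetical model call returning a (token_id, confidence)
    pair for every position.
    """
    tokens = list(tokens)
    num_to_generate = sum(tok == MASK_ID for tok in tokens)
    for step in range(1, total_steps + 1):
        masked = [i for i, tok in enumerate(tokens) if tok == MASK_ID]
        if not masked:
            break
        predictions = predict(tokens)
        # Number of tokens that should remain masked after this step.
        keep_masked = math.floor(num_to_generate * cosine_schedule(step, total_steps))
        keep_masked = min(keep_masked, len(masked) - 1)  # always commit at least one
        # Commit the most confident predictions; the remaining positions stay masked.
        ranked = sorted(masked, key=lambda i: predictions[i][1], reverse=True)
        for i in ranked[: len(masked) - keep_masked]:
            tokens[i] = predictions[i][0]
    return tokens


def toy_predict(tokens):
    # Hypothetical model: guesses 2 * i, with higher confidence for earlier positions.
    return [(2 * i, 1.0 / (1 + i)) for i in range(len(tokens))]


start = [5, MASK_ID, MASK_ID, 8, MASK_ID, MASK_ID]
print(iterative_decode(start, toy_predict, total_steps=3))  # [5, 2, 4, 8, 8, 10]
```

Because the number of model calls is fixed by `total_steps` rather than by the number of tokens, this style of decoding needs far fewer sequential passes than token-by-token autoregressive generation.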

Based on the reported experiments, the authors state that the proposed architecture achieves the best published FVD (Fréchet Video Distance) on three video generation benchmarks.
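For reference, FVD is the Fréchet distance between two Gaussians fitted to features of real and generated videos; in its standard formulation the features come from a pretrained I3D video network. With feature means $\mu_r, \mu_g$ and covariances $\Sigma_r, \Sigma_g$ for the real and generated sets, it reads:

$$
\mathrm{FVD} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)
$$

Lower values mean the generated videos are statistically closer to the real ones, so "best FVD" means the lowest score.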

In addition, according to the reported results, MAGVIT's inference is two orders of magnitude faster than that of diffusion models and 60 times faster than that of autoregressive models.

Finally, a single MAGVIT model supports ten diverse generation tasks and generalizes across videos from different visual domains.

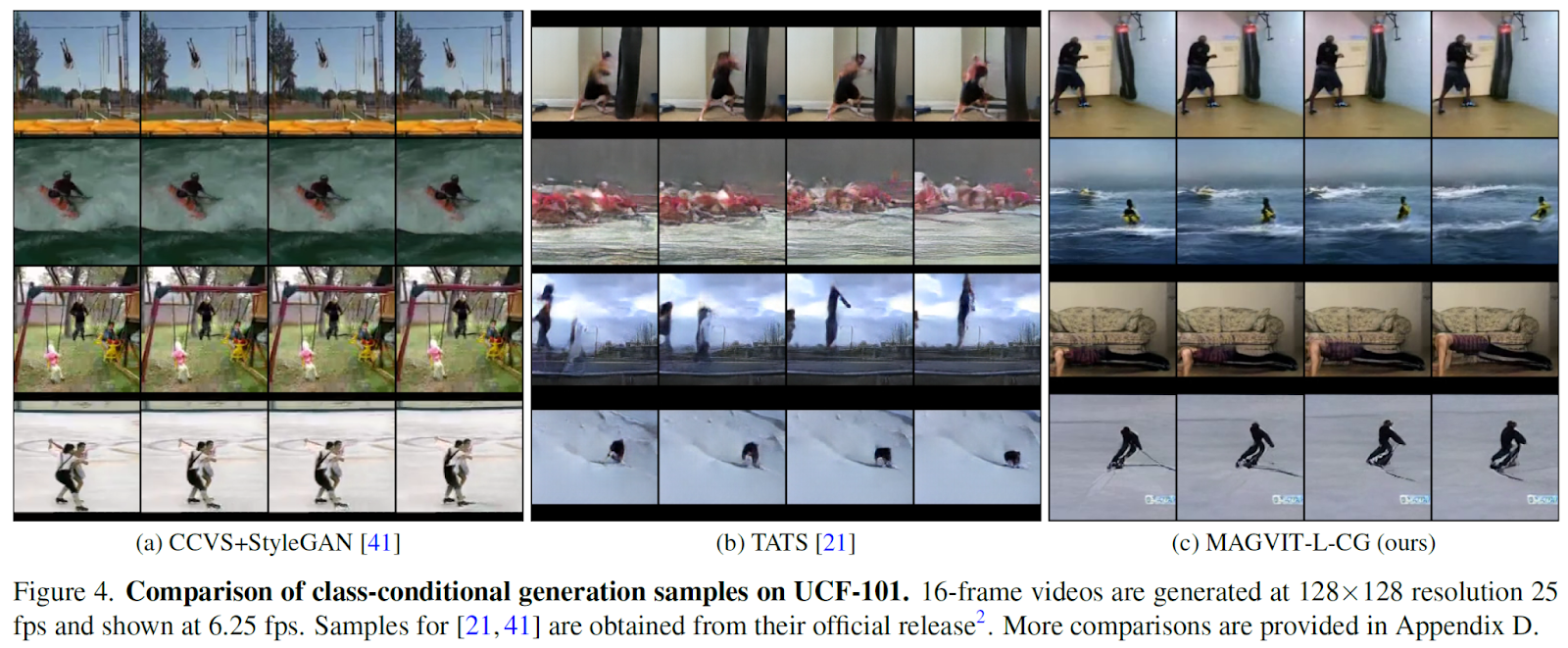

The figure below reports some class-conditional generation samples compared with state-of-the-art approaches. Results for the other tasks can be found in the paper.

This was a brief summary of MAGVIT, a novel AI framework that tackles various video generation tasks with a single model. If you are interested, you can find more information at the following links.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.

Daniele Lorenzi received his M.Sc. in ICT for Internet and Multimedia Engineering in 2021 from the University of Padua, Italy. He is a Ph.D. candidate at the Institute of Information Technology (ITEC) at the Alpen-Adria-Universität (AAU) Klagenfurt. He currently works at the Christian Doppler ATHENA Laboratory and his research interests include adaptive video streaming, immersive media, machine learning and QoS / QoE evaluation.
