Video editing is an essential artificial intelligence (AI) process critical to creating visual content. Video editing involves manipulating, rearranging, and enhancing video footage to produce a final product with the desired characteristics. This process can be time consuming and labor intensive, but AI advancements have made video editing easier and faster.

The use of AI in video editing has revolutionized the way we create and analyze video content. With the help of advanced algorithms and machine learning models, video editors and researchers can now achieve previously unattainable results.

A popular AI technique for video editing is based on GAN inversion, which involves projecting a real image onto the latent space of a pretrained GAN to get a latent code. In this way, the input image can be reconstructed by feeding the latent code into the previously trained GAN. By changing the latent code, many creative semantic editing effects for images can be achieved.

However, these approaches often lack identity preservation or semantically accurate reconstructions.

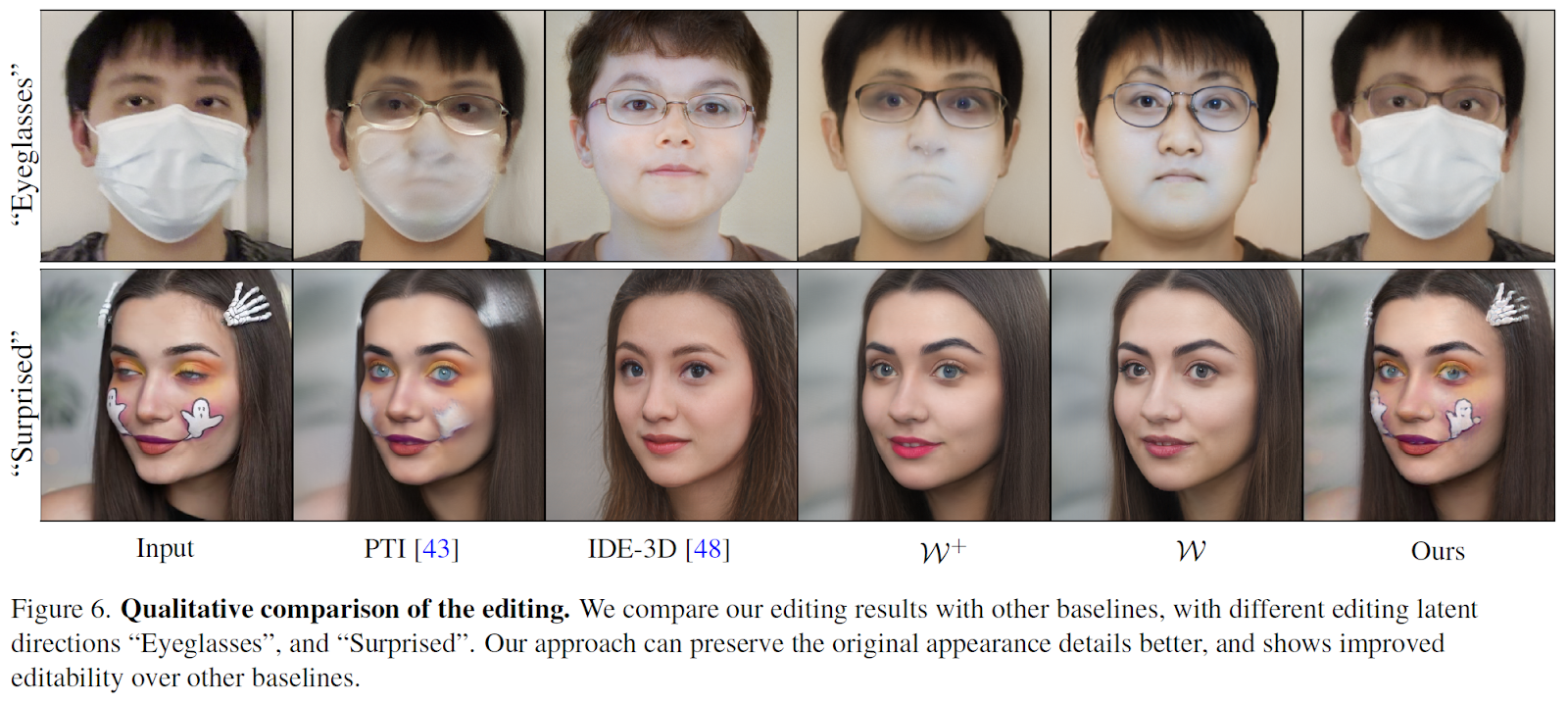

For example, GAN inversion techniques such as IDE-3D or PTI cannot handle out-of-distribution (OOD) elements, which refer to unusual data distributions, such as object occlusions in face frames. IDE-3D can produce a faithful edit but does not preserve the identity of the input face. PTI provides better identity preservation, but semantic accuracy suffers.

To obtain both identity preservation and faithful reconstruction, a GAN-based video editing and inversion framework called In-N-Out has been proposed.

In this work, the authors rely on composite volume rendering to generate multiple radiation fields during rendering.

An overview of the architecture is available below.

The central idea is to decompose the 3D representation of the video with the OOD object into an inside distribution part and an outside distribution part and composite them together to reconstruct the video in a composite volumetric representation form. In the two-dimensional case, it would be like pasting one image (representing an occlusion object, like a ball) onto another (in this case, a face).

The authors exploit EG3D as the backbone of the 3D-compatible GAN and take advantage of its three-plane rendering to model this composite rendering pipeline. For the distribution element (ie, the natural face), the pixels are projected into the latent space of EG3D. For the out-of-range part, the authors use an additional triplane to represent it. Subsequently, these two radiation fields are combined in a composite volumetric representation to reconstruct the input. During the editing stage, the distribution part, i.e. the latent code, is independent of the OOD part and is edited separately. Also, the reconstructed pixels related to the masked OOD part are not considered in the process.

According to the authors, this proposed approach brings three main advantages. First, by compositing the input distribution and the output distribution together, the model achieves a higher fidelity reconstruction. Second, by editing only the part in distribution, editability is maintained. Third, by leveraging 3D-compatible GANs, input face video can be rendered from novel viewpoints.

Below is a comparison of the mentioned method and other state-of-the-art approaches.

This was the brief for In-N-Out, a novel AI framework for facial video inversion and editing with volumetric decomposition.

review the Paper and Project. All credit for this research goes to the researchers of this project. Also, don’t forget to join our 15k+ ML SubReddit, discord channeland electronic newsletterwhere we share the latest AI research news, exciting AI projects, and more.

Daniele Lorenzi received his M.Sc. in ICT for Internet and Multimedia Engineering in 2021 from the University of Padua, Italy. He is a Ph.D. candidate at the Institute of Information Technology (ITEC) at the Alpen-Adria-Universität (AAU) Klagenfurt. He currently works at the Christian Doppler ATHENA Laboratory and his research interests include adaptive video streaming, immersive media, machine learning and QoS / QoE evaluation.