Large language models (LLMs) have left an indelible mark on the artificial intelligence community. Models like GPT, T5, and PaLM are becoming increasingly popular. These models mimic humans by learning to read, summarize, and generate text. Their recent impact on AI has contributed to a wide range of industries, including healthcare, finance, education, and entertainment.

Aligning large language models with human values and intentions has been a constant challenge in the field of generative AI, specifically in terms of making them helpful, ethical, and reliable. With the immense popularity of GPT-based ChatGPT, this problem has come into the spotlight. Current AI systems rely heavily on supervised fine-tuning with human instructions and annotations, and on Reinforcement Learning from Human Feedback (RLHF), to align models with human preferences. However, this approach requires extensive human supervision, which is expensive and potentially problematic: human-provided annotations raise concerns about quality, reliability, diversity, and undesirable biases.

To address these issues and minimize LLMs’ reliance on intensive human annotations, a team of researchers proposed an approach called SELF-ALIGN. SELF-ALIGN is designed to align LLM-based AI agents with human values using virtually no human annotations. It uses a small set of human-defined principles or rules to guide the behavior of AI agents when generating responses to user queries.

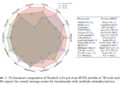

The researchers applied the SELF-ALIGN approach to the LLaMA-65b base language model. The resulting AI assistant, called Dromedary, achieves significant performance improvements over existing AI systems, including Text-Davinci-003 and Alpaca, while using fewer than 300 lines of human annotations. The Dromedary code, LoRA weights, and synthetic training data have been open-sourced to encourage further research into aligning LLM-based AI agents with improved supervision efficiency, reduced biases, and improved controllability.

The approach consists of four stages:

1. Topic-Guided Red-Teaming Self-Instruct: This stage uses the self-instruct mechanism to generate synthetic instructions from 175 seed prompts and an additional 20 topic-specific prompts. The purpose of these instructions is to provide a wide range of contexts and scenarios for the AI system to learn from.

2. Principle-Driven Self-Alignment: At this stage, a small set of 16 human-written principles in English is provided, describing the desirable qualities of the responses produced by the system. These principles serve as guidelines for generating helpful, ethical, and trustworthy responses. The approach uses in-context learning (ICL) with a few demonstrations to illustrate how the AI system adheres to the rules when formulating responses in different cases.

3. Principle Engraving: At this stage, the original LLM is fine-tuned on the self-aligned responses that it generated via prompting. During fine-tuning, the principles and demonstrations are pruned from the input. The refined LLM can then directly generate answers that align well with the principles, without being shown them explicitly.

4. Verbose Cloning: The final stage uses context distillation to improve the system’s ability to produce more complete and elaborate responses, so that the model generates detailed and comprehensive answers by default.
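
The way stages 2 through 4 fit together can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the two principles, the single demonstration, the verbose hint, and every function name here are hypothetical placeholders, and `generate` stands in for any call to a language model.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical placeholders: the article describes 16 principles and several
# demonstrations; two short principles and one demo are enough for a sketch.
PRINCIPLES = [
    "1 (ethical): Refuse to help with harmful or illegal requests.",
    "2 (informative): Give accurate, relevant information.",
]
DEMONSTRATIONS = [
    ("Who wrote Hamlet?", "William Shakespeare wrote Hamlet."),
]
VERBOSE_HINT = "Answer in a detailed, comprehensive manner."  # assumed wording


def bare_prompt(query: str) -> str:
    """The prompt the fine-tuned model will see: no principles, no demos."""
    return f"User: {query}\nAssistant:"


def build_alignment_prompt(query: str) -> str:
    """Stage 2: principles + demonstrations + the new query (in-context learning)."""
    lines = ["# Principles", *PRINCIPLES, "", "# Examples"]
    for question, answer in DEMONSTRATIONS:
        lines += [f"User: {question}", f"Assistant: {answer}", ""]
    lines.append(bare_prompt(query))
    return "\n".join(lines)


@dataclass
class TrainingExample:
    prompt: str
    completion: str


def make_finetuning_example(query: str, generate: Callable[[str], str]) -> TrainingExample:
    """Stage 3: generate with the full prompt, but store only the bare query.

    Fine-tuning on such pairs is what lets the model follow the principles
    without being shown them at inference time.
    """
    response = generate(build_alignment_prompt(query))
    return TrainingExample(prompt=bare_prompt(query), completion=response)


def make_verbose_example(query: str, generate: Callable[[str], str]) -> TrainingExample:
    """Stage 4: context distillation. The hint shapes the response, then is dropped."""
    response = generate(f"{VERBOSE_HINT}\n{bare_prompt(query)}")
    return TrainingExample(prompt=bare_prompt(query), completion=response)


def toy_model(prompt: str) -> str:
    """Toy stand-in for an LLM call; it only reports how much context it received."""
    return f"(response conditioned on {len(prompt.splitlines())} prompt lines)"


if __name__ == "__main__":
    example = make_finetuning_example("What is LoRA?", toy_model)
    print(example.prompt)
    print(example.completion)
```

In both helper functions the stored prompt is the bare query, while the response was produced with extra context. That asymmetry is the core idea the stages share: the guidance lives in the generation prompt and is then removed from the training data.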

In conclusion, Dromedary, the bootstrapped LLM, shows promise for aligning language models with minimal human supervision.


Tanya Malhotra is a final-year student at the University of Petroleum and Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.

She is a data science enthusiast with strong analytical and critical thinking skills, along with a keen interest in acquiring new skills, leading groups, and managing work in an organized manner.