Object detection and image segmentation are crucial tasks in machine vision and artificial intelligence. They are critical in numerous applications, such as autonomous vehicles, medical imaging, and security systems.

Object detection involves the detection of object instances within an image or video stream. It consists of identifying the class of the object and its location within the image. The goal is to produce a bounding box around the object, which can then be used for further analysis or to track the object over time in a video stream. Object detection algorithms can be divided into two categories: one-stage and two-stage. One-stage methods are faster but less accurate, while two-stage methods are slower but more accurate.

On the other hand, image segmentation involves dividing an image into multiple segments or regions, where each segment corresponds to a different object or part of an object. The goal is to tag each pixel in the image with a semantic class, such as “person”, “car”, “sky”, etc. Image segmentation algorithms can be divided into two categories: semantic segmentation and instance segmentation. Semantic segmentation involves tagging each pixel with a class label, while instance segmentation refers to the detection and segmentation of individual objects within an image.

Both object detection and image segmentation algorithms have advanced significantly in recent years, mainly due to deep learning approaches. Due to their ability to learn hierarchical representations of image input, Convolutional Neural Networks (CNNs) have become the preferred choice for these problems. However, training these models requires specialized annotations, such as object boxes, masks, and localized points, which are challenging and time-consuming. Without taking overhead costs into account, manual annotation of 164,000 images into the COCO dataset with masks for only 80 classes required more than 28,000 hours.

Using a novel architecture called Cut-and-LEaRn (CutLER), the authors attempt to address these issues by studying unsupervised object detection and instance segmentation models that can be trained without human labels. The method consists of three simple architecture and data independent mechanisms. The pipeline for the proposed architecture is shown below.

The authors of CutLER present for the first time MaskCut, a tool capable of automatically generating several initial approximate masks for each image based on the features calculated by a self-monitored and pretrained ViT vision transformer. MaskCut has been developed to address the limitations of current masking tools such as Normalized Cuts (NCut). In fact, NCut applications are restricted to detecting a single object in an image, which can be very limiting. For this reason, MaskCut extends it to discover multiple objects per image by iteratively applying NCut to a masked similarity matrix.

Second, the authors implement a simple loss reduction strategy to train detectors using these thick masks, which are resistant to objects that MaskCut did not detect. Despite having been trained with these crude masks, detectors can refine the reality of the terrain and produce masks (and boxes) that are more accurate. Therefore, several rounds of self-training on the models’ predictions can allow the model to evolve from focusing on local pixel similarities to considering the overall geometry of the object, resulting in more accurate segmentation masks.

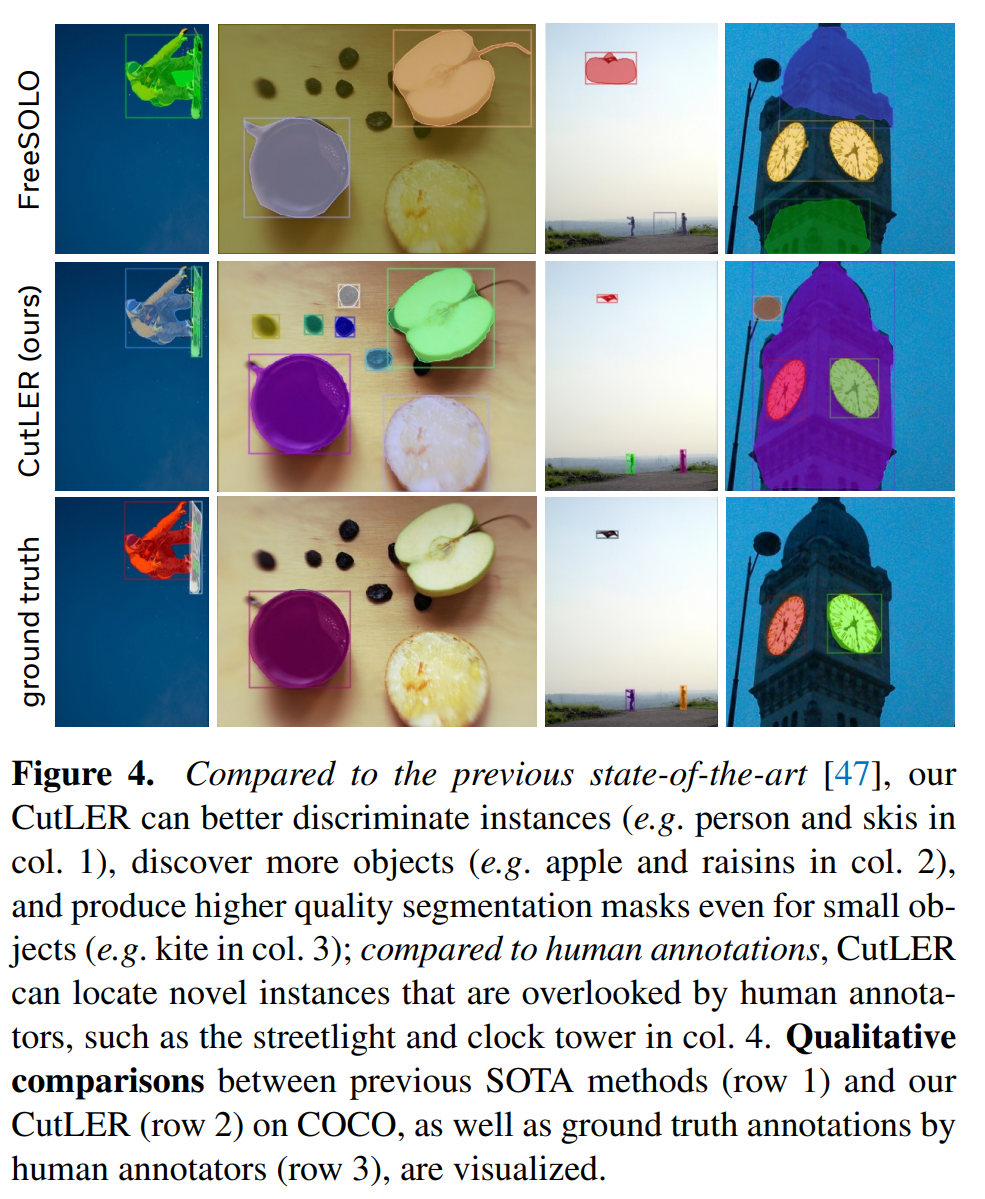

The following figure offers a comparison between the proposed framework and more advanced approaches.

This was the brief for CutLER, a new AI tool for accurate and consistent object detection and image segmentation.

If you are interested or would like more information on this framework, you can find a link to the document and the project page.

review the Paper, Githuband Project. All credit for this research goes to the researchers of this project. Also, don’t forget to join our 13k+ ML SubReddit, discord channel, and electronic newsletterwhere we share the latest AI research news, exciting AI projects, and more.

Daniele Lorenzi received his M.Sc. in ICT for Internet and Multimedia Engineering in 2021 from the University of Padua, Italy. He is a Ph.D. candidate at the Institute of Information Technology (ITEC) at the Alpen-Adria-Universität (AAU) Klagenfurt. He currently works at the Christian Doppler ATHENA Laboratory and his research interests include adaptive video streaming, immersive media, machine learning and QoS / QoE evaluation.