Large language models (LLMs) sometimes learn things we do not want them to know. Finding ways to remove or adjust this knowledge is important for keeping AI systems accurate and under control. However, editing or “unlearning” specific knowledge in these models is very difficult. Common methods often affect unrelated information or the model's general knowledge, which can degrade its overall capabilities. In addition, the changes they make do not always last.

In recent work, researchers have used methods such as causal tracing to locate the components most responsible for a model's output, while faster techniques such as attribution patching help identify important components more quickly. Editing and unlearning methods attempt to remove or change specific information in a model to keep it safe and fair, yet models can still learn or display unwanted information. Current editing and unlearning methods often impair other capabilities of the model and lack robustness: slight variations in the prompt can still elicit the original knowledge. Even with safety measures in place, models can produce harmful responses to certain prompts, which shows how difficult it remains to fully control their behavior.
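To make the idea of locating components concrete, here is a minimal sketch of activation patching, the operation at the core of causal tracing. It is not the researchers' code: the tiny network, its weights, and the names `hidden`, `output`, and `patching_effects` are all hypothetical and chosen only for illustration. The sketch runs the model on a clean and a corrupted input, copies one hidden activation at a time from the clean run into the corrupted run, and records how much of the clean output each patch restores.

```python
import math

# Hypothetical toy network with hand-picked weights (illustration only).
W1 = [[2.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # 3 hidden units, 2 inputs
W2 = [1.5, 0.1, 0.2]                        # hidden units -> scalar output


def hidden(x):
    """Hidden activations for input vector x."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in W1]


def output(h):
    """Scalar output computed from hidden activations h."""
    return sum(w * hi for w, hi in zip(W2, h))


def patching_effects(clean_x, corrupt_x):
    """Fraction of the clean-vs-corrupted output gap restored by patching
    each hidden unit's clean activation into the corrupted run.
    Assumes the clean and corrupted outputs differ."""
    h_clean = hidden(clean_x)
    h_corrupt = hidden(corrupt_x)
    y_clean = output(h_clean)
    y_corrupt = output(h_corrupt)
    effects = []
    for i in range(len(h_corrupt)):
        patched = list(h_corrupt)
        patched[i] = h_clean[i]  # restore a single component
        effects.append((output(patched) - y_corrupt) / (y_clean - y_corrupt))
    return effects


effects = patching_effects(clean_x=[1.0, 0.0], corrupt_x=[0.0, 0.0])
most_important = max(range(len(effects)), key=lambda i: effects[i])
print(effects, most_important)
```

In this toy setup the first hidden unit restores most of the output, so it would be flagged as the important component. In a real LLM the same loop runs over layers, attention heads, or MLP blocks, which is why cheaper approximations such as attribution patching are attractive.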

A team of researchers from the University of Maryland, the Georgia Institute of Technology, the University of Bristol, and Google DeepMind proposes mechanistic unlearning, a new method that uses mechanistic interpretability to locate and edit the specific model components associated with fact retrieval mechanisms. This approach aims to make edits more robust and reduce unwanted side effects.

The study examines methods for removing information from AI models and finds that many fail when the prompt or output format changes. Applying gradient-based edits only to the components responsible for fact retrieval, in models such as Gemma-7B and Gemma-2-9B, proves more effective and efficient. This method reduces latent knowledge better than the alternatives, requires changes to only a small part of the model, and generalizes across diverse data. Targeting these components means that unwanted knowledge is effectively unlearned and resists relearning attempts. The researchers show that this approach leads to more robust edits across different input/output formats and leaves less latent knowledge behind than existing methods.
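The idea of a localized, gradient-based edit can be sketched in a few lines. This is a toy illustration under stated assumptions, not the method as implemented in the paper: the "model" is a plain weighted sum, and `predict`, `localized_edit`, and the `editable` index list are hypothetical names. The point is only that gradient descent updates the parameters selected by localization and leaves everything else frozen.

```python
def predict(weights, x):
    """Hypothetical toy model: a weighted sum of the input features."""
    return sum(w * xi for w, xi in zip(weights, x))


def localized_edit(weights, x, target, editable, lr=0.1, steps=200):
    """Minimize (predict - target)^2 by gradient descent, updating only
    the parameter indices in `editable`; all other weights stay frozen."""
    w = list(weights)
    for _ in range(steps):
        error = predict(w, x) - target
        for i in editable:
            # The gradient of error^2 with respect to w[i] is 2 * error * x[i].
            w[i] -= lr * 2.0 * error * x[i]
    return w


original = [0.5, -1.0, 2.0, 0.3]
x = [1.0, 2.0, 0.5, 1.0]

# Assume localization identified parameters 0 and 2 as the relevant ones.
edited = localized_edit(original, x, target=3.0, editable=[0, 2])
print(edited, predict(edited, x))
```

After the edit, the model produces the new target while parameters 1 and 3 are unchanged. In a real model, the editable set would be the weights of the components identified by the localization step rather than a hand-picked list of indices.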

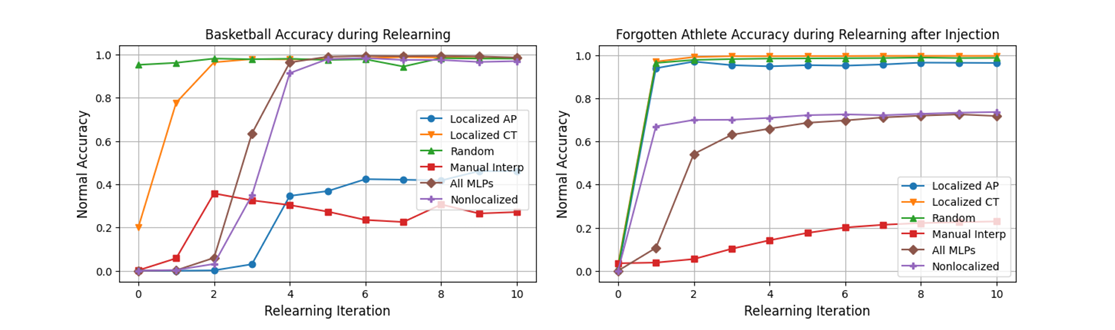

The researchers conducted experiments to test unlearning and editing methods on two datasets: Sports Facts and CounterFact. In the Sports Facts dataset, they worked to remove associations with basketball athletes and to change the sport of 16 athletes to golf. In the CounterFact dataset, they focused on swapping correct answers with incorrect ones for 16 facts. They used two main localization techniques: output tracing (which includes causal tracing and attribution patching) and fact lookup localization. The results showed that manual localization led to higher accuracy and robustness, especially on multiple-choice tests. The manual interpretability method was also robust against attempts to relearn the information. Furthermore, analysis of latent knowledge suggested that effective editing hinders the retrieval of the original information in the model's layers. Weight masking tests showed that optimization-based methods mainly change the parameters involved in extracting facts rather than those used to look facts up, which highlights the need to target the fact lookup process for greater robustness.

In conclusion, the paper presents a promising solution to the problem of robust knowledge unlearning in LLMs: it uses mechanistic interpretability to precisely target and edit specific model components, which improves both the effectiveness and the robustness of the unlearning process. The work also suggests unlearning and editing as a potential testbed for comparing interpretability methods, which could get around the inherent lack of ground truth in interpretability.

Check out the Paper. All credit for this research goes to the researchers of this project.

Divyesh is a Consulting Intern at Marktechpost. He is pursuing a BTech in Agricultural and Food Engineering at the Indian Institute of Technology Kharagpur. He is a data science and machine learning enthusiast who wants to apply these technologies to agriculture and solve its challenges.
