Image by freepik

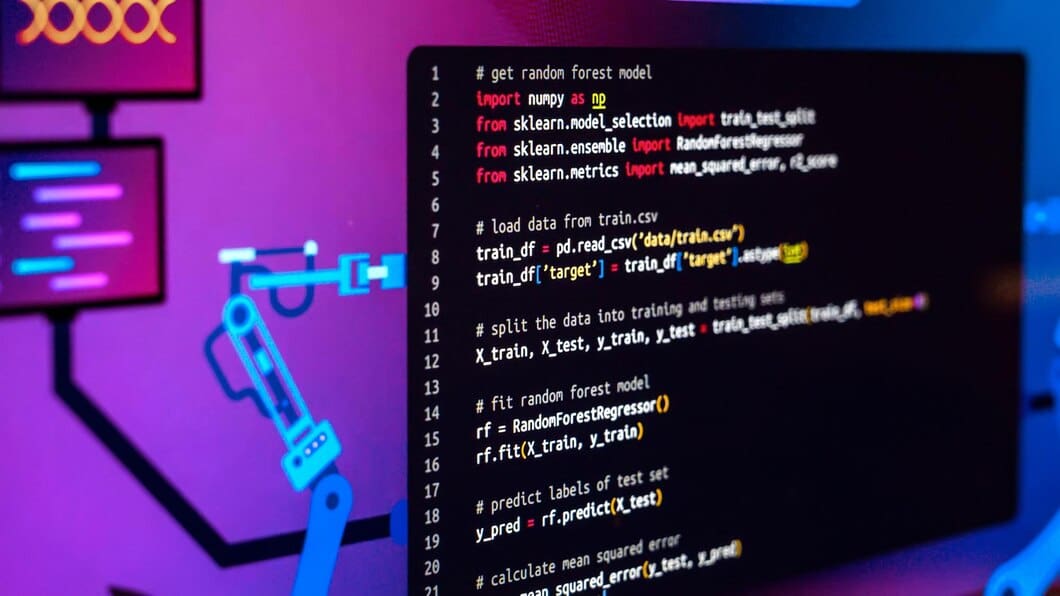

Python reigns supreme in the world of data science, yet many aspiring (and even veteran) data scientists only scratch the surface of its true capabilities. To truly master data analysis with Python, you must venture beyond the basics and use advanced techniques designed for efficient data manipulation, parallel processing, and leveraging specialized libraries.

The large, complex data sets and computationally intensive tasks you will face demand more than basic Python skills.

This article serves as a detailed guide aimed at improving your Python skills. We'll delve into techniques to speed up your code, use Python with large data sets, and convert models into web services. Throughout, we'll explore ways to handle complex data problems effectively.

Mastering advanced Python techniques for data science is essential in today's job market. Most companies look for data scientists who are comfortable with Python and with web frameworks such as Django and Flask.

These frameworks streamline the inclusion of key security features, especially in adjacent niches such as running PCI-compliant hosting, creating a SaaS product for digital payments, or even accepting payments on a website.

So what about practical steps? These are some of the techniques you can start mastering now:

Efficient data manipulation with Pandas

Efficient data manipulation with Pandas revolves around leveraging its powerful DataFrame and Series objects to manage and analyze data.

Pandas excels at tasks like filtering, grouping, and merging data sets, enabling complex data manipulation operations with minimal code. Its indexing functionality, including multi-level indexing, allows for fast data retrieval and splitting, making it ideal for working with large data sets.

Additionally, Pandas' integration with other data visualization and analysis libraries in the Python ecosystem, such as NumPy and Matplotlib, further enhances its ability for efficient data analysis.
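To make the filtering, merging, grouping, and multi-level indexing described above more concrete, here is a minimal sketch. The `orders` and `prices` tables and all of their column names are invented for illustration and are not taken from any real data set.

```python
import pandas as pd

# Hypothetical sales data, invented for illustration only.
orders = pd.DataFrame({
    "region": ["North", "North", "South", "South", "South"],
    "product": ["A", "B", "A", "A", "B"],
    "units": [10, 5, 7, 3, 8],
})
prices = pd.DataFrame({"product": ["A", "B"], "unit_price": [2.0, 4.0]})

# Filtering: keep only orders with at least 5 units.
large_orders = orders[orders["units"] >= 5]

# Merging: attach the unit price to each order, then compute revenue.
merged = large_orders.merge(prices, on="product", how="left")
merged["revenue"] = merged["units"] * merged["unit_price"]

# Grouping: total revenue per (region, product). The result is a Series
# with a two-level MultiIndex.
revenue = merged.groupby(["region", "product"])["revenue"].sum()

# Multi-level indexing: select a whole region, or one exact pair.
south = revenue.loc["South"]
south_b = revenue.loc[("South", "B")]

print(revenue)
```

Grouping by two columns is what produces the multi-level index here, so a single `.loc` call can retrieve either a whole region or one specific region and product combination.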

These functionalities make Pandas an indispensable tool in the data science toolkit. So although Python is an extremely common language, you shouldn't see this as a drawback. It's as versatile as it is ubiquitous, and mastering Python lets you do everything from statistical analysis, data cleaning, and visualization to more specialized work such as using VAPT (vulnerability assessment and penetration testing) tools and building natural language processing applications.

High performance computing with NumPy

NumPy significantly improves Python's ability for high-performance computing, especially through its support for large, multidimensional arrays and matrices. It achieves this by providing a full range of mathematical functions designed for efficient operations on these data structures.

One of the key features of NumPy is its implementation in C, which allows the rapid execution of complex mathematical calculations using vectorized operations. This results in a noticeable performance improvement compared to using native Python loops and data structures for similar tasks. For example, tasks such as matrix multiplication, which are common in many scientific calculations, can be performed quickly using functions like np.dot().

Data scientists can use NumPy's efficient array handling and powerful computational capabilities to achieve significant speedups in their Python code, making it viable for applications that require high levels of numerical computation.
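As a rough illustration of the difference between native Python loops and vectorized operations, the sketch below computes the same dot product both ways and times each one. The helper `dot_loop` and the array sizes are assumptions made for this example, and the timings you see will depend on your machine.

```python
import timeit

import numpy as np


def dot_loop(a, b):
    """Dot product written as a plain Python loop (hypothetical baseline)."""
    total = 0.0
    for x, y in zip(a, b):
        total += x * y
    return total


rng = np.random.default_rng(seed=0)
a = rng.random(100_000)
b = rng.random(100_000)

# Both approaches compute the same value; only the execution path differs.
loop_result = dot_loop(a, b)
vectorized_result = np.dot(a, b)

# np.dot also performs matrix multiplication on 2D arrays.
m = np.array([[1.0, 2.0], [3.0, 4.0]])
product = np.dot(m, np.eye(2))  # multiplying by the identity returns m

loop_time = timeit.timeit(lambda: dot_loop(a, b), number=5)
vec_time = timeit.timeit(lambda: np.dot(a, b), number=5)
print(f"loop: {loop_time:.4f}s  vectorized: {vec_time:.4f}s")
```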

Improving performance through multiprocessing

Improving performance through multiprocessing in Python involves using the 'multiprocessing' module to execute tasks in parallel on multiple CPU cores instead of sequentially on a single core.

This is particularly advantageous for CPU-bound tasks that require significant computational resources, as it allows the splitting and simultaneous execution of tasks, thus reducing the total execution time. Basic usage involves creating 'Process' objects and specifying the target function to execute in parallel.

Furthermore, the 'Pool' class can be used to manage multiple worker processes and distribute tasks between them, abstracting much of the manual process management. Inter-process communication mechanisms such as 'Queue' and 'Pipe' facilitate data exchange between processes, while synchronization primitives such as 'Lock' and 'Semaphore' ensure that processes do not interfere with each other when accessing shared resources.
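The two approaches described above can be sketched as follows: one version hands the work to a 'Pool', the other starts 'Process' objects by hand and collects results through a 'Queue'. The functions `cpu_heavy`, `worker`, `run_with_pool`, and `run_with_processes` are hypothetical names chosen for this example, and the task itself is a stand-in for real CPU-bound work.

```python
import multiprocessing as mp


def cpu_heavy(n):
    """Hypothetical CPU-bound task: sum of squares of the integers below n."""
    return sum(i * i for i in range(n))


def worker(n, queue):
    """Run the task in a child process and send the result back via a Queue."""
    queue.put((n, cpu_heavy(n)))


def run_with_pool(inputs, processes=2):
    """Distribute the inputs across a pool of worker processes."""
    with mp.Pool(processes=processes) as pool:
        return pool.map(cpu_heavy, inputs)


def run_with_processes(inputs):
    """Start one Process per input and collect results from a shared Queue."""
    queue = mp.Queue()
    procs = [mp.Process(target=worker, args=(n, queue)) for n in inputs]
    for p in procs:
        p.start()
    # Drain the queue before joining so no child blocks while sending data.
    results = dict(queue.get() for _ in procs)
    for p in procs:
        p.join()
    return [results[n] for n in inputs]


if __name__ == "__main__":
    inputs = [10, 100, 1000]
    print(run_with_pool(inputs))
    print(run_with_processes(inputs))
```

The `if __name__ == "__main__":` guard matters because, depending on the platform, child processes may re-import the main module when they start, and the guard keeps them from launching new processes of their own.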

To further improve code execution, techniques such as JIT compilation with libraries like Numba can significantly speed up Python code by dynamically compiling parts of the code at runtime.
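Here is a minimal sketch of what JIT compilation with Numba can look like, assuming Numba is installed. The function `pairwise_distance_sum` is a hypothetical example of a loop-heavy numerical routine; if Numba is missing, the sketch falls back to running the same function as plain Python, with no speedup.

```python
import numpy as np

try:
    from numba import njit  # compiles the decorated function on first call
except ImportError:
    # Fallback so the sketch still runs without Numba (no speedup in that case).
    def njit(func):
        return func


@njit
def pairwise_distance_sum(points):
    """Sum of Euclidean distances between all pairs of rows in a 2D array."""
    n, dim = points.shape
    total = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            sq = 0.0
            for k in range(dim):
                diff = points[i, k] - points[j, k]
                sq += diff * diff
            total += np.sqrt(sq)
    return total


# Three collinear points: the pairwise distances are 5, 10, and 5.
points = np.array([[0.0, 0.0], [3.0, 4.0], [6.0, 8.0]])
result = pairwise_distance_sum(points)
print(result)
```

The first call includes the compilation time, so any benefit shows up on repeated calls or on larger inputs.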

Leveraging specialized libraries for advanced data analysis

Using specific Python libraries for data analysis can significantly boost your work. For example, Pandas is perfect for organizing and manipulating data, while PyTorch offers advanced deep learning capabilities with GPU support.

On the other hand, Plotly and Seaborn can help make your data more understandable and engaging by creating visualizations. For more computationally demanding tasks, libraries such as LightGBM and XGBoost offer efficient implementations of gradient boosting algorithms that handle large data sets with high dimensionality.

Each of these libraries specializes in different aspects of data analysis and machine learning, making them valuable tools for any data scientist.

Data visualization in Python has advanced significantly and offers a wide range of techniques to display data in a meaningful and engaging way.

Advanced data visualization not only improves data interpretation, but also helps uncover underlying patterns, trends, and correlations that might not be evident using traditional methods.

Mastering what you can do with Python individually is a must, but having an overview of how you can use a Python platform to the fullest in a business environment will surely differentiate you from other data scientists.

Here are some advanced techniques to consider:

- Interactive visualizations. Libraries like Bokeh and Plotly allow you to create dynamic charts that users can interact with, such as zooming in on specific areas or hovering over data points to see more information. This interactivity can make complex data more accessible and understandable.

- Complex chart types. Beyond basic line and bar charts, Python supports advanced chart types such as heat maps, box plots, violin plots, and even more specialized diagrams such as raincloud plots. Each type of chart has a specific purpose and can help highlight different aspects of the data, from distributions and correlations to comparisons between groups.

- Customization with Matplotlib. Matplotlib offers extensive customization options, allowing precise control over the appearance of plots. Techniques such as adjusting plot parameters with the plt.getp and plt.setp functions, or manipulating the properties of plot components directly, allow you to create publication-quality figures that convey your data in the best way possible.

- Time series visualization. For temporal data, time series charts can effectively display values over time, helping to identify trends, patterns, or anomalies over different periods. Libraries like Seaborn make it easy to create and customize time series charts, improving time-based data analysis.
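As a small illustration of two of the techniques listed above, the sketch below draws a violin plot and then adjusts line properties with plt.setp and reads one back with plt.getp. The data is randomly generated for the example, and the group labels are placeholders.

```python
import io

import matplotlib

matplotlib.use("Agg")  # non-interactive backend, so no display is needed

import matplotlib.pyplot as plt
import numpy as np

# Synthetic data, generated only for illustration.
rng = np.random.default_rng(seed=42)
groups = [rng.normal(loc=mu, scale=1.0, size=200) for mu in (0.0, 1.0, 2.0)]

fig, (ax_violin, ax_line) = plt.subplots(1, 2, figsize=(10, 4))

# A violin plot shows the full distribution of each group, not just a summary.
ax_violin.violinplot(groups, showmedians=True)
ax_violin.set_xticks([1, 2, 3])
ax_violin.set_xticklabels(["A", "B", "C"])
ax_violin.set_title("Distribution per group")

# plt.setp sets properties on one or many artists at once.
x = np.linspace(0, 10, 100)
lines = ax_line.plot(x, np.sin(x), x, np.cos(x))
plt.setp(lines, linewidth=2.0, linestyle="--")
plt.setp(lines[0], color="tab:red")

# plt.getp reads a property back from an artist.
current_width = plt.getp(lines[0], "linewidth")

fig.tight_layout()

# Render to an in-memory buffer; passing a file name to savefig works too.
buffer = io.BytesIO()
fig.savefig(buffer, format="png", dpi=150)
```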

Multiprocessing in Python allows code to execute in parallel, making it ideal for CPU-intensive tasks that do not depend on I/O or user interaction.

Different solutions are suitable for different purposes, from creating simple line charts to complex interactive dashboards and everything in between. These are some of the popular ones:

- Infogram stands out with its easy-to-use interface and diverse template library, serving a wide range of industries, including media, marketing, education, and government. It offers a free basic account and various pricing plans for more advanced features.

- FusionCharts allows the creation of more than 100 different types of interactive charts and maps, designed for both web and mobile projects. It supports customization and offers various export options.

- Plotly offers a simple syntax and multiple interactivity options, suitable even for those without technical knowledge, thanks to its GUI. However, its community version has limitations such as public visualizations and a limited number of aesthetics.

- RAWGraphs is an open source framework that emphasizes code-free, drag-and-drop data visualization, making complex data visually easy for everyone to understand. It is particularly suitable for bridging the gap between spreadsheet applications and vector graphics editors.

- QlikView is preferred by well-established data scientists for analyzing large-scale data. It integrates with a wide range of data sources and is extremely fast in data analysis.

Mastering advanced Python techniques is crucial for data scientists to unleash the full potential of this powerful language. While basic Python skills are invaluable, mastering sophisticated data manipulation, performance optimization, and leveraging specialized libraries elevates your data analysis capabilities.

Continuous learning, accepting challenges, and staying up to date on the latest Python developments are keys to becoming a competent professional.

So, invest time in mastering advanced Python features so you can tackle complex data analysis tasks, drive innovation, and make data-driven decisions that drive real impact.

Nahla Davies is a software developer and technology writer. Before devoting herself full time to technical writing, she managed, among other interesting things, to work as a lead programmer at an Inc. 5000 experiential branding organization whose clients include Samsung, Time Warner, Netflix, and Sony.
