Image by editor | Ideogram and Canva

In a previous post, we covered the prompting framework, highlighting the role of the persona, context, tone, expected outcome, and so on in designing a comprehensive prompt.

However, even with the framework, challenges remain, such as data privacy and hallucinations. This article covers several prompting techniques and describes best practices for prompting the model to produce the most appropriate response.

Let's get started.

Types of prompting techniques

Image by author

1. Zero-Shot Versus Few-Shot Prompting

Zero-shot and few-shot prompting are fundamental techniques in the prompt engineering toolkit.

Zero-shot prompting is the simplest way to request a response from the model. Because the model is trained on massive datasets, it often responds well without additional examples or domain-specific knowledge.

Few-shot prompting involves providing a few examples that demonstrate specific nuances or highlight the complexities of the task. It is particularly useful for tasks that require domain-specific knowledge or additional context.

For example, if I show the model that “cheese” is “fromage” and “apple” is “pomme” in French, it can learn the task from this very limited number of examples.

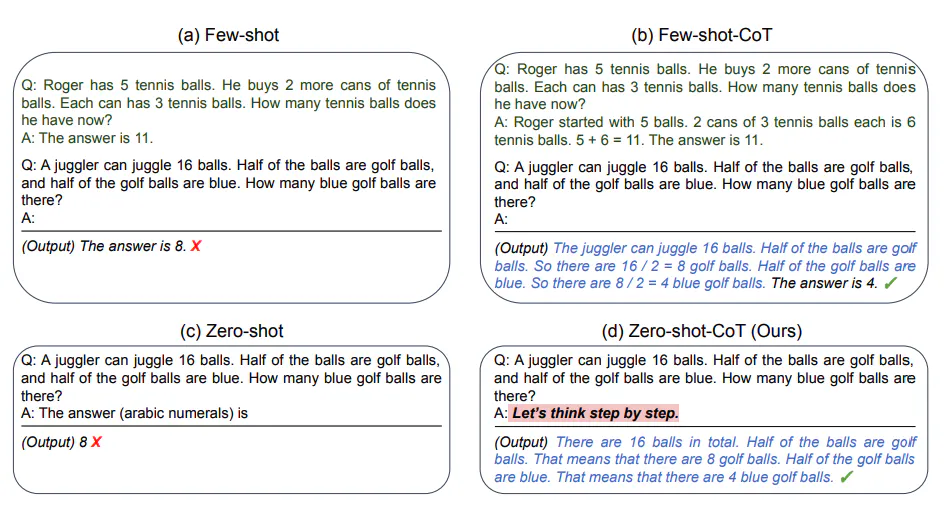

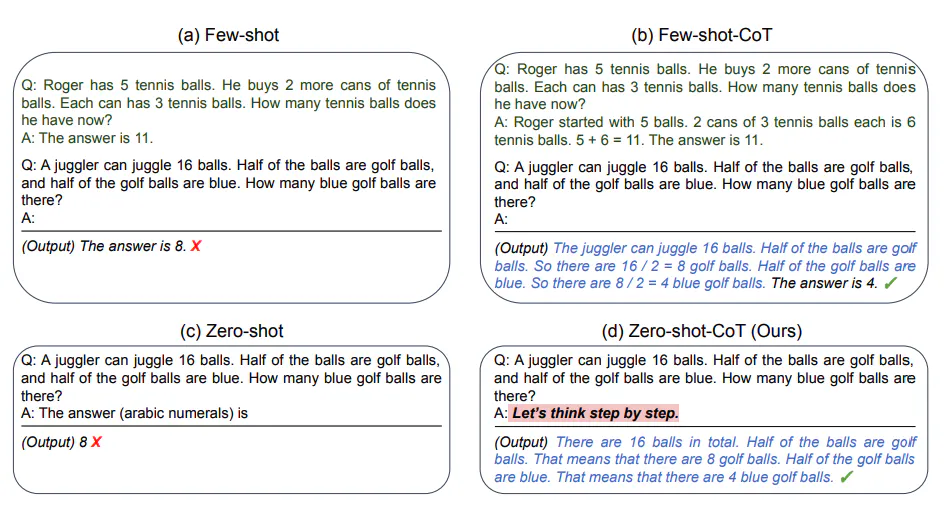
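To make the contrast concrete, here is a minimal Python sketch that builds both prompt styles as plain strings. It assumes the prompts will be sent to whichever model you use, and the API call is deliberately omitted. The function names and the `english -> french` line format are illustrative choices, not part of any particular library.

```python
def zero_shot_prompt(word: str) -> str:
    """Ask for the translation directly, with no worked examples."""
    return f"Translate the English word '{word}' into French."


def few_shot_prompt(examples: list[tuple[str, str]], word: str) -> str:
    """Show a few English -> French pairs, then leave the last one open."""
    lines = ["Translate English words into French."]
    for english, french in examples:
        lines.append(f"{english} -> {french}")
    # The unfinished final line invites the model to continue the pattern.
    lines.append(f"{word} ->")
    return "\n".join(lines)


examples = [("cheese", "fromage"), ("apple", "pomme")]
print(zero_shot_prompt("bread"))
print(few_shot_prompt(examples, "bread"))
```

The worked examples are the only difference. The few-shot version ends with an unfinished `bread ->` line, which nudges the model to follow the pattern it has just seen.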

2. Chain-of-Thought (CoT) Prompting

In our prompting framework, we asked the model to show its step-by-step approach to the answer in order to reduce the risk of hallucinations. Chain-of-thought prompting works in a similar way: it encourages the model to break a complex problem into steps, the way a human would reason. This approach is particularly effective for tasks that require multi-step reasoning or problem solving.

The key benefit of CoT prompting is that the step-by-step process makes the model show its work instead of jumping straight to an answer.

Image from Promptingguide.ai
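A CoT prompt can be as simple as adding a step-by-step instruction to the question. The Python sketch below also asks for a final `Answer:` line so that an application can extract the result. That output convention and the sample reply are assumptions made for illustration. The reply was written by hand and is not real model output.

```python
import re
from typing import Optional


def cot_prompt(question: str) -> str:
    """Append a step-by-step instruction and a fixed final-answer format."""
    return (
        f"{question}\n"
        "Let's think step by step. Write each reasoning step on its own line, "
        "then give the result on a final line starting with 'Answer:'."
    )


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if there is none."""
    matches = re.findall(r"^Answer:\s*(.+)$", response, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


# Hand-written example of a step-by-step reply, not real model output.
sample_reply = (
    "Step 1: The cafeteria started with 23 apples.\n"
    "Step 2: It used 20 for lunch, leaving 23 - 20 = 3.\n"
    "Step 3: It bought 6 more, so 3 + 6 = 9.\n"
    "Answer: 9"
)
print(cot_prompt("The cafeteria had 23 apples. If they used 20 to make lunch "
                 "and bought 6 more, how many apples do they have?"))
print(extract_answer(sample_reply))  # 9
```

Because the reasoning stays visible, you can check each step before you trust the final answer.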

3. Retrieval-Augmented Generation (RAG)

Retrieval-augmented generation combines the power of large language models with external knowledge retrieval. But why is external knowledge needed? Aren't these models trained on enough data to generate a meaningful response?

Even with massive training data, a model can still benefit from additional information drawn from specialized domains. RAG supplies that information and helps the model produce more accurate, contextually relevant answers. This reduces ambiguity and guesswork, which in turn mitigates hallucinations.

Consider legal or medical fields, where accurate and current information is critical. Experts in these fields often consult recent cases or specialized knowledge to make better-informed decisions. In the same way, RAG acts as the model's go-to expert by supplying specific, authoritative sources.
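The retrieve-then-generate flow can be sketched in a few lines of Python. This is a toy example. It assumes a small in-memory list of hypothetical policy documents and ranks them with a naive word-overlap score. Production RAG systems typically rank passages with embeddings and a vector store instead. The resulting prompt would then be sent to the model.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the query (toy scorer)."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Insert the retrieved passages into the prompt as numbered sources."""
    sources = retrieve(query, documents, k)
    context = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(sources, start=1))
    return (
        "Answer using only the sources below and cite them by number. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical internal knowledge base, invented for this example.
docs = [
    "Internal policy: customer refunds are processed within 14 days.",
    "Internal policy: employees must use two-factor authentication.",
    "The cafeteria menu changes every week.",
]
print(rag_prompt("How many days until customer refunds are processed?", docs))
```

The prompt tells the model to answer only from the numbered sources and to say when those sources are insufficient. This keeps each claim in the answer traceable to a retrieved passage.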

Be careful with data privacy

Despite the power of these techniques, prompt engineering faces several challenges, and data privacy is one of the most prominent.

People now know more about how models are trained and how they process data. As a result, users are increasingly concerned that the data in their prompts may be used to further fine-tune models and improve results. This fear is legitimate.

Ways of working are evolving rapidly. Organizations must adopt robust data governance frameworks to ensure the privacy and security of sensitive business data.

Best Practices for Effective Prompting

Speaking of evolving ways of working, here are some best practices for getting the most out of prompt engineering:

1. Fact Check

In one recent case, a model fabricated legal cases, which put the lawyers responsible in a bad light. As reported by Reuters, they admitted to making “a good faith mistake in failing to believe that a piece of technology could be making up cases out of whole cloth.”

This shows that the lawyers did not understand the tool they were using. You need to know not only what the model can do but also where its limits are.

So always verify information generated by AI models, especially for critical or sensitive tasks. Don't stop there: cross-reference it with reliable sources to ensure accuracy.

An example prompt for this situation could be: “Provide three key statistics about the adoption of AI in your industry of interest. For each statistic, include a reliable source that can be used to verify the information.”

![Risks of using AI-generated content](https://technicalterrence.com/wp-content/uploads/2024/10/1728313473_942_Master-rapid-engineering-in-2024.png)

Image 1 from the Guardian | Image 2 from Reuters

Or you can ask the model: “Summarize the latest developments in the AI landscape. For each major breakthrough, provide a reference to a relevant research article or a reputable technology news article.”
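If you request a fixed output format, a short script can flag claims that arrive without a source. This Python sketch assumes a hypothetical `Claim: ... | Source: ...` line format. It only checks that a source was supplied. It cannot confirm that the source exists or supports the claim, so manual verification is still required.

```python
def fact_check_prompt(topic: str, n: int = 3) -> str:
    """Request n statistics, each paired with a source, in a parseable format."""
    return (
        f"Provide {n} key statistics about {topic}. "
        "Put each on its own line in the form: Claim: <statistic> | Source: <reference>"
    )


def claims_missing_sources(response: str) -> list[str]:
    """Return claim lines that have no non-empty 'Source:' field."""
    missing = []
    for line in response.splitlines():
        line = line.strip()
        if not line.startswith("Claim:"):
            continue  # skip any commentary outside the requested format
        claim, separator, source = line.partition("| Source:")
        if not separator or not source.strip():
            missing.append(claim.strip())
    return missing


# Hand-written placeholder response, not real model output or real statistics.
reply = (
    "Claim: statistic A | Source: report X\n"
    "Claim: statistic B | Source:\n"
    "Claim: statistic C"
)
print(claims_missing_sources(reply))
```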

2. Think carefully

Before the model generates an answer, prompt it to think through the problem by considering various aspects of the task.

For example, you can ask the model: “Consider the ethical, technical, and economic implications before responding. Generate a response only when you have thought it through.”

3. User confirmation

To ensure that the model's response aligns with the user's intent, you can ask it to verify and confirm with you before proceeding to the next steps. In case of any ambiguity, you can nudge the model to ask clarifying questions to better understand the specific task.

For example, you might ask: “Describe a marketing strategy for an AI-based healthcare app. After each main point, pause and ask me to confirm or clarify before continuing.”

Or you can also ask: “If you need clarification on specific industries or regions to focus on, please ask before continuing with the analysis.”
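In an application, you can handle the clarification step by agreeing on a marker that the model uses when it needs input. This Python sketch assumes a hypothetical `QUESTION:` prefix. When the model's reply starts with that prefix, the app pauses and shows the question to the user instead of treating the reply as the final analysis.

```python
from typing import Optional

# Hypothetical convention: the model prefixes clarifying questions with "QUESTION:".
CLARIFY_INSTRUCTION = (
    "If anything about the task is ambiguous, such as which industries or regions "
    "to focus on, reply with a single line starting with 'QUESTION:' and wait for "
    "my answer before continuing the analysis."
)


def build_prompt(task: str) -> str:
    """Append the clarification instruction to the user's task."""
    return f"{task}\n\n{CLARIFY_INSTRUCTION}"


def clarifying_question(response: str) -> Optional[str]:
    """Return the model's question if its reply starts with the marker, else None."""
    stripped = response.strip()
    if not stripped:
        return None
    first_line = stripped.splitlines()[0]
    if first_line.startswith("QUESTION:"):
        return first_line[len("QUESTION:"):].strip()
    return None


reply = "QUESTION: Should the analysis focus on Europe or North America?"
question = clarifying_question(reply)
if question is not None:
    print(f"Model needs input: {question}")  # pause here and ask the user
```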

Conclusion

I hope you find these prompts and best practices helpful the next time you use AI. Prompting involves creativity and critical thinking, so let's get creative and start prompting.

Vidhi Chugh is an AI strategist and digital transformation leader working at the intersection of product, science, and engineering to build scalable machine learning systems. She is an award-winning innovation leader, author, and international speaker. Her mission is to democratize machine learning and break down the jargon so that everyone can be part of this transformation.
