Language models have become the cornerstone of modern NLP, enabling significant advances in applications such as text generation, machine translation, and question answering. Recent research has focused on scaling these models along two axes: the amount of training data and the number of parameters. The resulting scaling laws show that increasing both data and parameters yields substantial performance improvements. However, a new dimension of scale is now being explored: the size of the external datastore available at inference time. Unlike traditional parametric models, which rely solely on what they learned during training, retrieval-based language models can dynamically access a much larger knowledge base during inference, helping them generate more accurate and contextually relevant responses. Integrating vast datastores in this way opens up new possibilities for managing knowledge efficiently and improving the factual accuracy of LMs.

A major challenge in NLP is retaining and using vast knowledge without incurring significant computational costs. Traditional language models are typically trained on large, static datasets, and what they learn is encoded in the model parameters. Once trained, these models cannot integrate new information dynamically and require costly retraining to update their knowledge. This is particularly problematic for knowledge-intensive tasks, where models need to reference extensive external sources. The problem is compounded when these models must handle diverse domains, such as general web data, scientific articles, and code. The inability to adapt to new information, together with the computational burden of retraining, limits the effectiveness of these models. A new paradigm is therefore needed that lets language models dynamically access and use external knowledge.

Existing approaches to improving the capabilities of language models include retrieval-based mechanisms that rely on external datastores. These models, known as retrieval-in-context language models (RIC-LMs), can access additional context during inference by querying an external datastore. This contrasts with parametric models, which are limited to the knowledge stored in their parameters. Notable prior efforts have used Wikipedia-sized datastores of a few billion tokens. However, such datastores are usually domain-specific and do not cover all the information needed for complex downstream tasks. Furthermore, previous retrieval-based models have been limited in computational efficiency and feasibility, because large-scale datastores make it hard to maintain retrieval speed and accuracy. Although some models, such as RETRO, have used proprietary datastores, their results have not been fully replicable because the data is closed.
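To make the retrieval-in-context idea concrete, here is a minimal Python sketch. It assumes a toy bag-of-words cosine similarity in place of a real dense retriever, and the function names (`embed`, `retrieve`, `build_ric_prompt`) are hypothetical, not taken from the MassiveDS codebase. The point it illustrates is that the LM's parameters are untouched: retrieved passages are simply prepended to the prompt at inference time.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    # Toy sparse "embedding": lowercase word counts. A real RIC-LM setup would
    # use a dense neural encoder and a nearest-neighbor index instead (assumption).
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(count * b[tok] for tok, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, datastore: list[str], k: int = 2) -> list[str]:
    # Score every passage against the query and keep the k best (brute force).
    q = embed(query)
    ranked = sorted(datastore, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:k]

def build_ric_prompt(query: str, datastore: list[str], k: int = 2) -> str:
    # Retrieval-in-context: retrieved passages are prepended to the prompt;
    # the language model itself is never modified.
    context = "\n\n".join(retrieve(query, datastore, k))
    return f"{context}\n\nQuestion: {query}\nAnswer:"

# Hypothetical three-passage datastore for illustration.
datastore = [
    "Paris is the capital of France.",
    "The mitochondria is the powerhouse of the cell.",
    "France borders Spain and Italy.",
]
prompt = build_ric_prompt("What is the capital of France?", datastore, k=1)
print(prompt)
```

Growing the datastore in this setup only changes what `retrieve` can find; the model that consumes the prompt stays the same size.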

A research team from the University of Washington and the Allen Institute for AI built a new datastore called MassiveDS, comprising 1.4 trillion tokens. This open-source datastore is the largest and most diverse available for retrieval-based LMs. It spans eight domains, including books, scientific articles, Wikipedia, GitHub repositories, and mathematical texts. MassiveDS was designed specifically to support large-scale retrieval during inference, allowing language models to access and use far more information than earlier datastores offered. The researchers also implemented an efficient pipeline that reduces the computational overhead of studying datastore scaling. Instead of building a separate datastore for every configuration, the pipeline retrieves a subset of documents once and applies operations such as filtering and subsampling only to that subset, which makes the construction and use of large datastores computationally accessible and enables systematic evaluation of datastore scaling trends.
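The efficiency trick of operating only on retrieved subsets can be illustrated with a small simulation. This is a sketch under stated assumptions, not the MassiveDS implementation: each document is kept or dropped by a deterministic, seeded hash (a hypothetical stand-in for the paper's subsampling), retrieval scores are random numbers rather than real similarities, and all function names are hypothetical. Under these assumptions, subsampling the whole datastore and then taking the top-k gives the same result as retrieving a larger top-K pool once and subsampling only that pool, provided at least k pool documents survive.

```python
import hashlib
import random

def keep(doc_id: str, p: float, seed: int = 0) -> bool:
    # Deterministic Bernoulli(p) draw per document: the decision depends only on
    # (seed, doc_id), never on which other documents are in the datastore.
    digest = hashlib.sha256(f"{seed}:{doc_id}".encode()).digest()
    u = (int.from_bytes(digest[:8], "big") >> 11) / 2**53  # uniform in [0, 1)
    return u < p

def top_k(scored: list[tuple[str, float]], k: int) -> list[tuple[str, float]]:
    # Highest-scoring documents first.
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]

def subsample_then_retrieve(scored, p, k, seed=0):
    # Naive order: build a subsampled datastore (in practice, re-index it), then retrieve.
    subset = [(d, s) for d, s in scored if keep(d, p, seed)]
    return top_k(subset, k)

def retrieve_then_subsample(scored, p, k, pool_size, seed=0):
    # Efficient order: retrieve a large pool once from the full datastore (cacheable),
    # then apply the same subsampling decision to the pool only.
    pool = top_k(scored, pool_size)
    survivors = [(d, s) for d, s in pool if keep(d, p, seed)]
    if len(survivors) < k:
        raise ValueError("retrieval pool too small for this p; increase pool_size")
    return survivors[:k]  # pool is already sorted by score

# Simulated retrieval scores for one query over a hypothetical 1,000-document datastore.
rng = random.Random(0)
scores = [(f"doc{i}", rng.random()) for i in range(1000)]
for p in (0.1, 0.5, 1.0):
    naive = subsample_then_retrieve(scores, p, k=5)
    fast = retrieve_then_subsample(scores, p, k=5, pool_size=200)
    print(p, naive == fast)
```

The key design choice is the per-document hash: because each keep/drop decision is independent of the rest of the datastore, the two orders agree, so one cached retrieval pool can serve many datastore sizes `p`.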

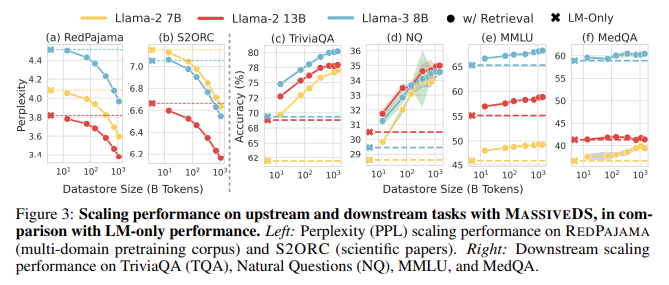

The research showed that MassiveDS significantly improves the performance of retrieval-based language models. For example, a smaller LM using this datastore outperformed a larger parametric LM on multiple downstream tasks. Specifically, models using MassiveDS achieved lower perplexity on general web and scientific data, indicating better language modeling. On knowledge-intensive question answering tasks such as TriviaQA and Natural Questions, LMs using MassiveDS consistently outperformed their larger counterparts; on TriviaQA, models with access to fewer than 100 billion MassiveDS tokens could outperform much larger language models that did not use an external datastore. These findings suggest that growing the datastore lets models perform better without increasing their parameter count, reducing overall training cost.
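Perplexity, the metric behind the language-modeling comparison above, is the exponential of the average per-token negative log-likelihood: PPL = exp(-(1/N) · Σ log p(x_i | x_<i)), where lower is better. Below is a minimal sketch, assuming natural-log token probabilities are already available from some LM; how the paper tokenizes and batches its evaluation data is not covered here, and the example numbers are hypothetical.

```python
import math

def perplexity(token_logprobs: list[float]) -> float:
    # token_logprobs[i] is assumed to be the natural-log probability the LM assigned
    # to token i given the preceding tokens (plus retrieved context, for a retrieval-based LM).
    if not token_logprobs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)

# Hypothetical numbers: a model that gives every token probability 0.25 has perplexity
# of approximately 4.0; one that gives every token probability 0.5 has approximately 2.0.
baseline = perplexity([math.log(0.25)] * 4)
with_retrieval = perplexity([math.log(0.5)] * 4)
print(baseline, with_retrieval)
```

A useful reading of the number: perplexity k means the model is, on average, as uncertain as if it were choosing uniformly among k tokens.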

The researchers attribute these improvements to MassiveDS's ability to provide high-quality, domain-specific information during inference. Even on reasoning-intensive tasks such as MMLU and MedQA, retrieval-based LMs using MassiveDS showed notable improvements over parametric models. Drawing on multiple data sources ensures that the datastore can provide relevant context for a wide range of queries, making language models more versatile across domains. The results also highlight the importance of data quality filters and improved retrieval methods, which further amplify the benefits of datastore scaling.
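As a hedged illustration of applying data quality filters to retrieved passages, the sketch below runs two hypothetical filters over an already-ranked retrieval list: exact deduplication after text normalization, and a crude length and alphabetic-ratio heuristic. The heuristics, thresholds, and function names are assumptions chosen for illustration; they are not the filters used in MassiveDS.

```python
import hashlib
import re

def normalize(text: str) -> str:
    # Lowercase and drop punctuation so trivially different copies hash alike.
    return " ".join(re.findall(r"\w+", text.lower()))

def passes_quality(text: str, min_words: int = 5, min_alpha_ratio: float = 0.6) -> bool:
    # Hypothetical heuristic: reject very short passages and mostly non-alphabetic ones.
    if len(text.split()) < min_words:
        return False
    alpha = sum(ch.isalpha() for ch in text)
    return alpha / len(text) >= min_alpha_ratio

def filter_retrieved(passages: list[str], k: int) -> list[str]:
    # Walk the ranked list, skipping exact duplicates and low-quality passages,
    # and stop once k passages survive; rank order is preserved.
    seen: set[str] = set()
    kept: list[str] = []
    for passage in passages:
        key = hashlib.md5(normalize(passage).encode()).hexdigest()
        if key in seen or not passes_quality(passage):
            continue
        seen.add(key)
        kept.append(passage)
        if len(kept) == k:
            break
    return kept

# Hypothetical ranked retrieval results for one query.
ranked = [
    "The Eiffel Tower is located in Paris, France.",
    "the eiffel tower is located in paris france",
    "$$$ ### 123 456 789 000",
    "The tower was completed in 1889 for the World's Fair.",
    "Too short.",
]
filtered = filter_retrieved(ranked, k=3)
print(filtered)
```

Because the filter preserves rank order and stops early, it can be applied to a retrieved pool only slightly larger than the number of passages that will be placed in the prompt.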

In conclusion, this study demonstrates that retrieval-based language models equipped with a large datastore such as MassiveDS can outperform traditional parametric models at lower computational cost. By drawing on a datastore of 1.4 trillion tokens, these models can dynamically access diverse, high-quality information, significantly improving their handling of knowledge-intensive tasks. This is a promising direction for future research, as it offers a scalable and efficient way to improve language model performance without increasing model size or training cost.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. Their most recent endeavor is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has more than 2 million monthly visits, which illustrates its popularity among readers.
