By Akshay Kumar & Vijendra Jain

To this day, a significant number of organizational processes rely on paper documents. Instances invoice processing, and customer onboarding processes in insurance companies. Advances in data science and data engineering have led to the development of Intelligent document processing (IDP) solutions. These solutions allow organizations to automate the extraction, analysis, and processing of paper documents using AI techniques minimizing manual intervention. Essentially, there are two major players in the optical character recognition (OCR) segment: Amazon’s API Textract and Google’s Vision API along with open-source API Tesseract.

According to a report by Forrester, 80% of an organization’s data is unstructured including PDFs, images, and more. Here is the true test of IDP solutions. Our hypothesis is that the accuracy of these OCR APIs might suffer due to various noises present in the scanned documents like blurs, watermarks, faded text, distortions, etc. This article attempts to measure the effectiveness of such noises on the performance of various APIs, In order to establish, “Is there a scope to make Intelligent Document Processing smarter?”

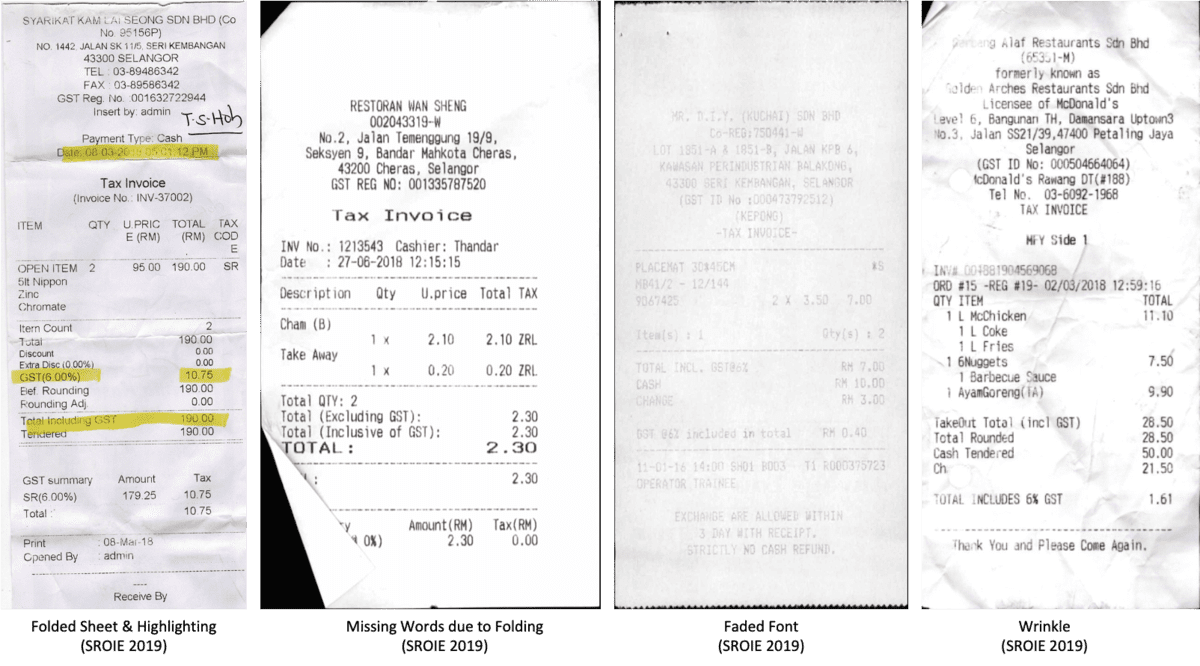

There are various types of noises in the documents which can lead to poor accuracy of OCR. These

noises can be divided into two categories:

Noises due to the document quality:

- Paper Distortion – Crumbled Paper, Wrinkled Paper, Torn Paper

- Stains – Coffee Stains, Liquid spill, ink spill

- Watermark, stamp

- Background Text

- Special Fonts

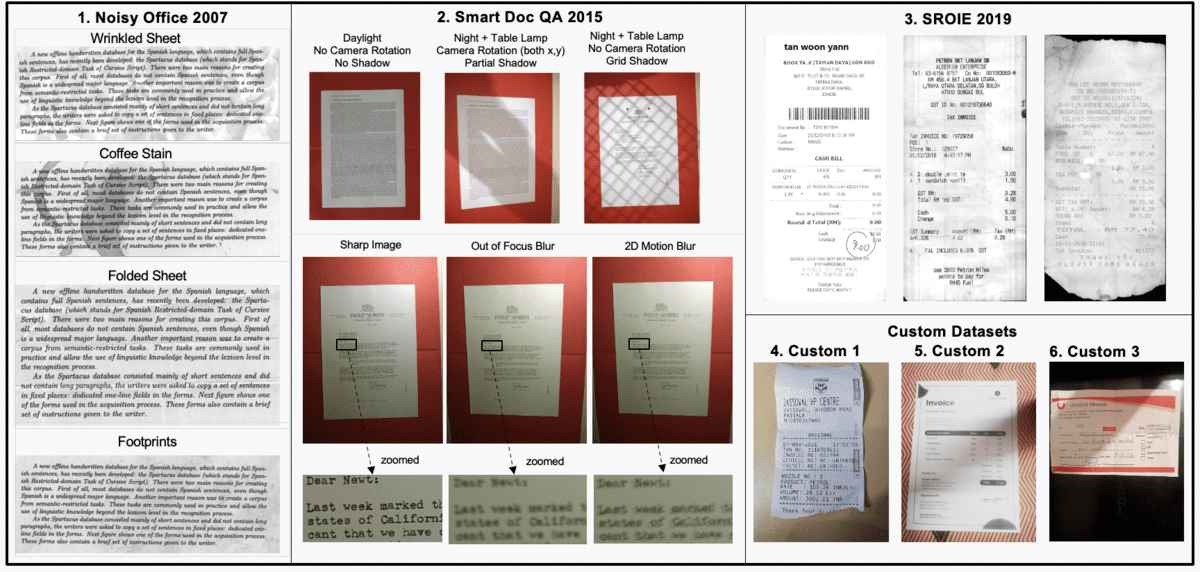

Fig 2.1 Noises due to the document quality

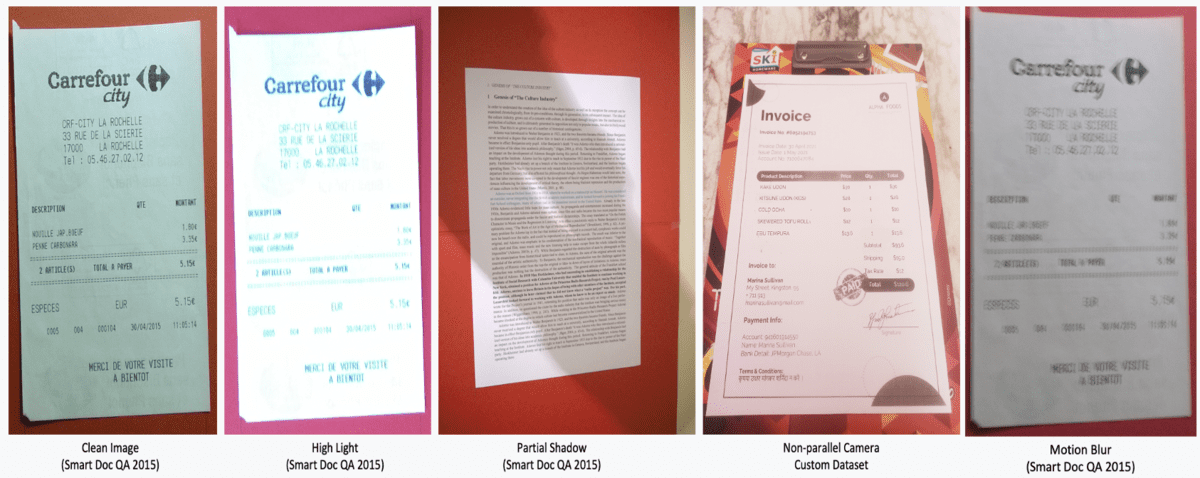

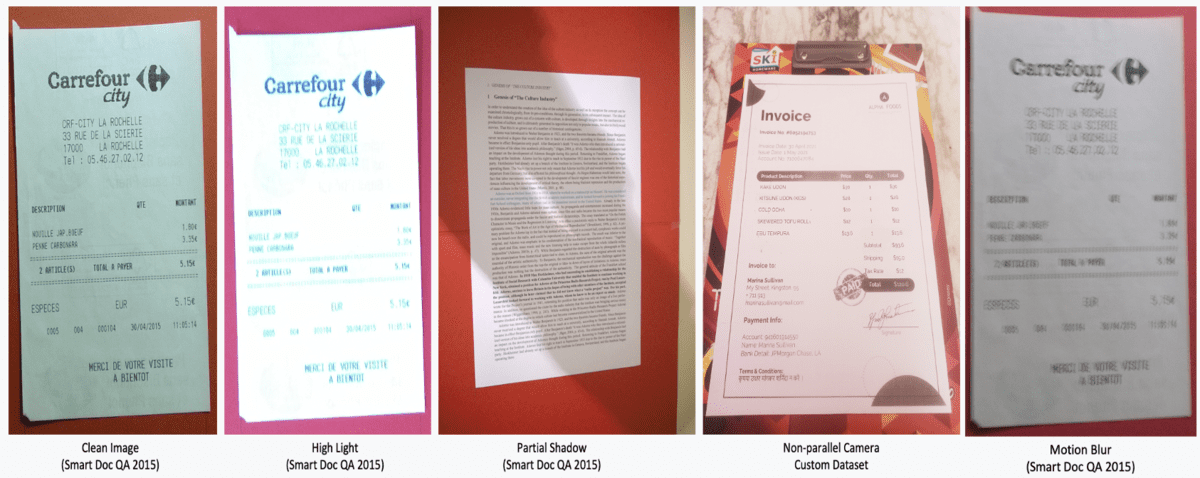

Noises due to image capturing process:

- Skewness – Warpage, Non-parallel camera

- Blur – Out of Focus Blur, Motion Blur

- Lighting Conditions – Low Light (Underexposed), High Light (Overexposed), Partial Shadow

Fig 2.2 Noises related to image capturing process

Because of the presence of these noises, images need pre-processing/ cleaning before being fed to an IDP/OCR pipeline. Some OCR engines have built-in pre-processing tools which can handle most of these noises. Our aim is to test the APIs with a variety of noises in order to determine the noises the OCR APIs can handle.

To measure the performance of an OCR engine, ground truth or actual text is compared with the OCR output or the text detected by the API. If the text detected by the API is exactly the same as the ground truth, that means accuracy is 100% for that document. But this is a very ideal case. In the real world, the detected text will differ from the ground truth because of the noises present in the document. This difference between ground truth and detected text is measured using various metrics.

The following table lists the metrics that we have considered to measure the performance of the APIs. Except for the first metric (Mean Confidence Score), the rest all the metrics compare the detected text with the ground truth.

| S. No. | Metric | Type | Brief Description |

| 1 | Mean Confidence Score | Given by API | Confidence score indicates the degree to which the OCR API is certain that it has recognized the text component correctly.

Mean confidence score is the average of all the word level confidence scores. |

| 2 | Character Error Rate (CER) | Error Rate | The CER compares the total number of characters (including spaces) in the ground truth, to the minimum number of insertions, deletions and substitution of characters that are required in the OCR output to obtain the ground truth result.

CER = (Substitutions + Insertions + Deletions) in OCR Output Number of Characters in Ground Truth |

| 3 | Word Error Rate (WER) | Error Rate | It is similar to CER but the only difference is that WER operates at word level instead of characters.

WER = (Substitutions + Insertions + Deletions) in OCR Output Number of Words in Ground Truth |

| 4 | Cosine Similarity | Similarity | If x is mathematical vector representation of the ground truth text and y is mathematical vector representation of the OCR output, the cosine similarity is defined as below:

Cos(x, y) = x . y / ||x|| * ||y|| |

| 5 | Jaccard Index | Similarity | If A is set of all the words from Ground Truth and B is set of all the words in OCR output, the Jaccard Index is defined as below: 𝐽= |𝐴∩𝐵”https://www.kdnuggets.com/”𝐴∪𝐵| |

Note that WER and CER are affected by the order of the text whereas Cosine Similarity, Jaccard Index and Mean Confidence Score are independent of the text order. Consider a case where an OCR API detects all the words correctly, but if the order of the detected words is different from the Ground Truth, then WER/CER will be very poor (high error i.e. poor performance) whereas Cosine Similarity will be very good (high similarity, i.e. good performance). Hence it is important to see all the metrics together to get a clear idea of the OCR API’s performance.

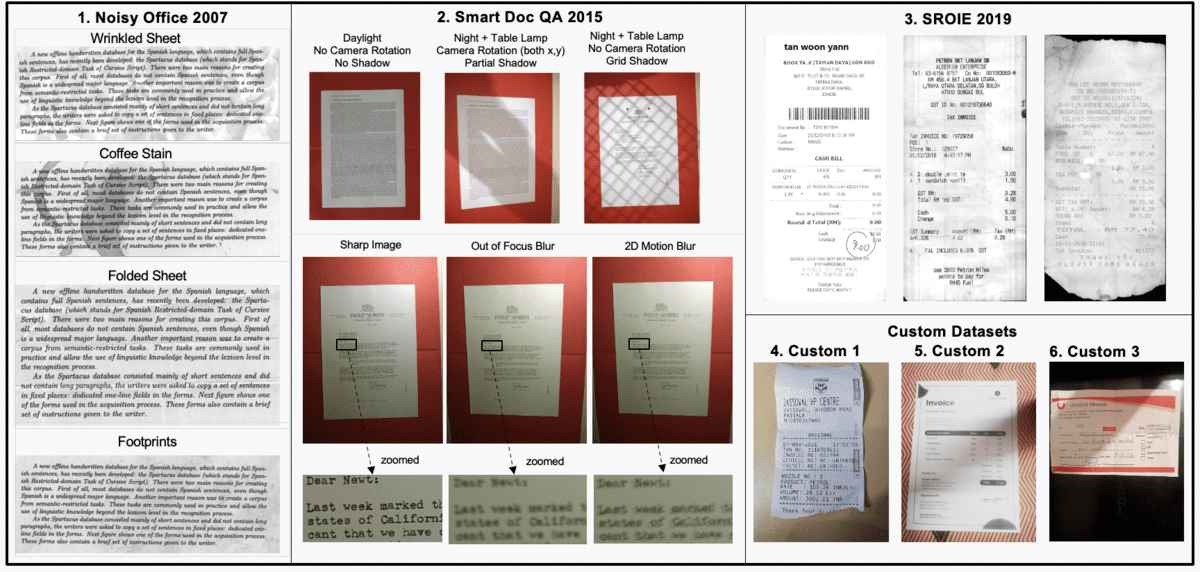

We have explored some standard datasets available in the literature and we also created some custom datasets using real-world invoices and dummy invoices. After exploring approximately 5900 documents including invoices, bills, receipts, text documents, and dummy invoices scanned under various noisy conditions. These noises include coffee stains, folding, wrinkles, small font sizes, skewness, blur, watermark, and more.

Fig 4.1 – Sample images from various datasets explored. Left box: Noisy Office; Middle Box: Smart Doc QA; Right Top Box: SROIE Dataset; Right Bottom Box: Custom Datasets.

As mentioned earlier, we tested three APIs Vision, Textract, and Tesseract on the mentioned datasets and calculated the performance metrics. We observed that Tesseract’s performance is significantly worse than Vision and Textract in almost all the cases, hence, in the result summary, Tesseract is excluded. Our result summary is divided into two parts, first based on the dataset and second based on the noise type.

Results Summary (Based on the Dataset)

We have classified the metrics into two sets, the first set includes error rates (WER & CER) and the second set includes similarity metrics (Cosine Similarity & Jaccard index). APIs are compared using means of these metrics for their respective sets. This is used to develop a rating system ranging from 1 to 10. Here a rating of 1 represents that the mean performance metric for that dataset is between 0-10% (Worst Performance), whereas a rating of 10 represents it is between 91-100% (Best Performance).

| Sl. No. | Data Set | Major Noise on which API performs poorly | API Performance Relative Rating

(1: Worst, 10: Best) |

||||

| Based on Error Rate | Based on Similarity Metrics | ||||||

| Vision | Textract | Vision | Textract | Vision | Textract | ||

| 1 | Noisy Office 2007 | Both APIs work well on all the noises of Noisy office dataset | 10 | 10 | 10 | 10 | |

| 2 | Smart Doc QA 2015 | Motion Blur, Out of Focus Blur, Invoice Type Documents | 7 | 9 | 9 | 8 | |

| 3 | SROIE 2019 | Dot Printer Font, Stamp | 6 | 9 | 10 | 10 | |

| 4 | Custom Dataset 1 | Blur and watermark | 6 | 9 | 10 | 9 | |

| 5 | Custom Dataset 2 (Alpha Foods) | Both APIs work good on all the noises | 5 | 7 | 10 | 9 | |

| 6 | Custom Dataset 3 | Without solid background (when there is a text present on both the sides) | 3 | 8 | 10 | 9 | |

An important point here is that Vision’s CER and WER error rates are generally higher than that of Textract. But the Cosine Similarity and Jaccard Index are similar for both the APIs. This is because of the order of the words or sorting method used by APIs. Our finding is that although both Vision and Textract are detecting texts with almost equal performance, but because of the different ordering in Vision’s output, its error rates are higher than that of Textract. Hence, Vision shows poor performance based on the error rate.

Results Summary (Based on Noise)

Here we provide a subjective evaluation of the API based on their observed performance. Right tick (✓) represents that the API can generally handle that particular noise and cross (X) represents that the API generally performs poorly with that particular noise. For example we observed that Textract cannot detect a vertical text in a document.

| S. No. | Noise / Variation | Google’s

Vision API |

Amazon’s

Textract API |

Observation |

| 1 | Light Variation

(Day Light, Night Light, Partial Shadow, Grid Shadow, Low Light) |

✓ | ✓ | Both Vision and Textract APIs can handle these kind of noises |

| 2 | Nonparallel camera (x, y, x-y) | ✓ | ✓ | |

| 3 | Uneven Surface | ✓ | ✓ | |

| 4 | 2x Zoom In | ✓ | ✓ | |

| 5 | Vertical Text | ✓ | X | Limitation of the Amazon API |

| 6 | Without solid background | X | X | Both Vision and Textract APIs tend to perform poor in these kind of noises |

| 7 | Watermark | X | X | |

| 8 | Blur (Out of Focus) | X | X | |

| 9 | Blur (Motion Blur) | X | X | |

| 10 | Dot Printer Font | X | X |

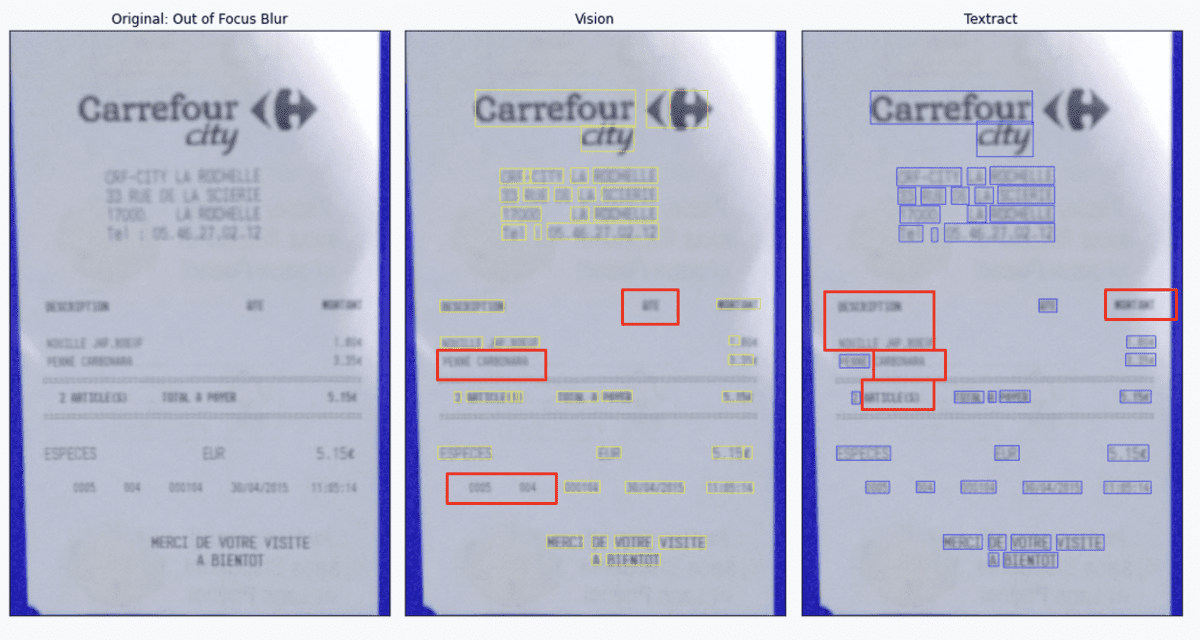

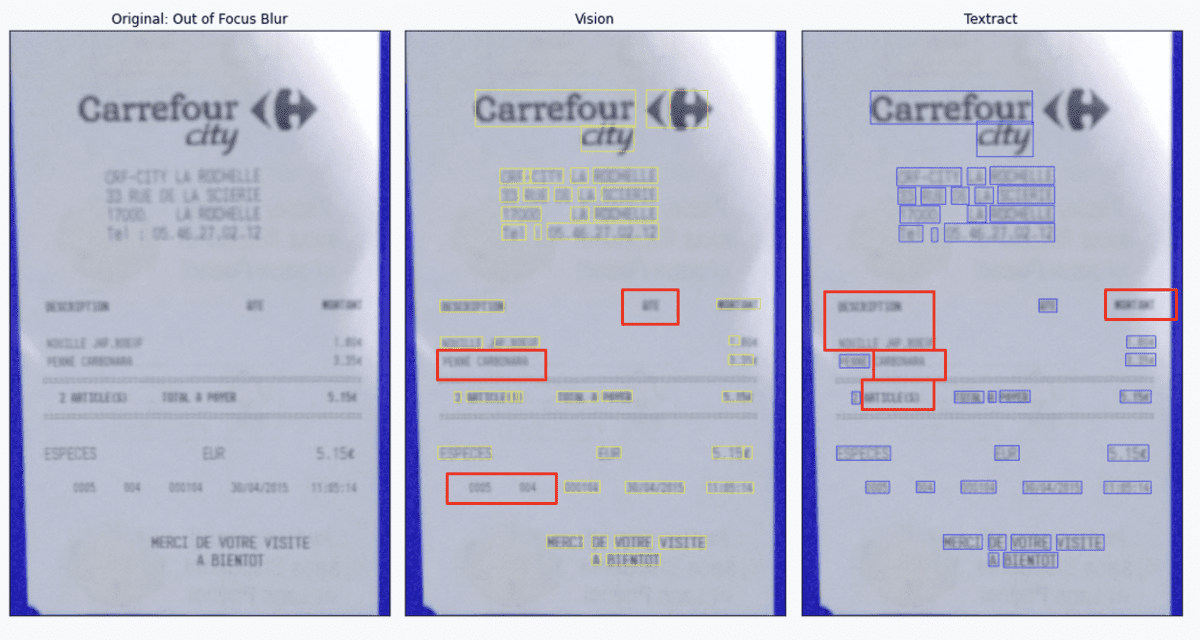

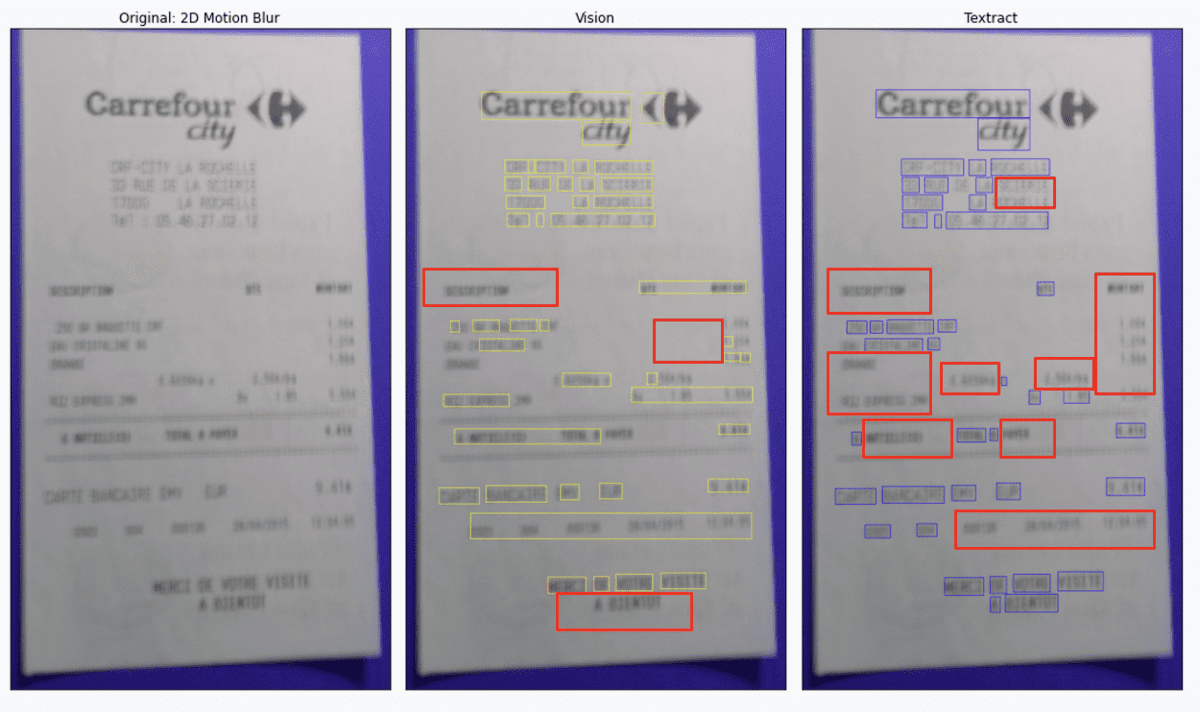

Some of the examples are given below:

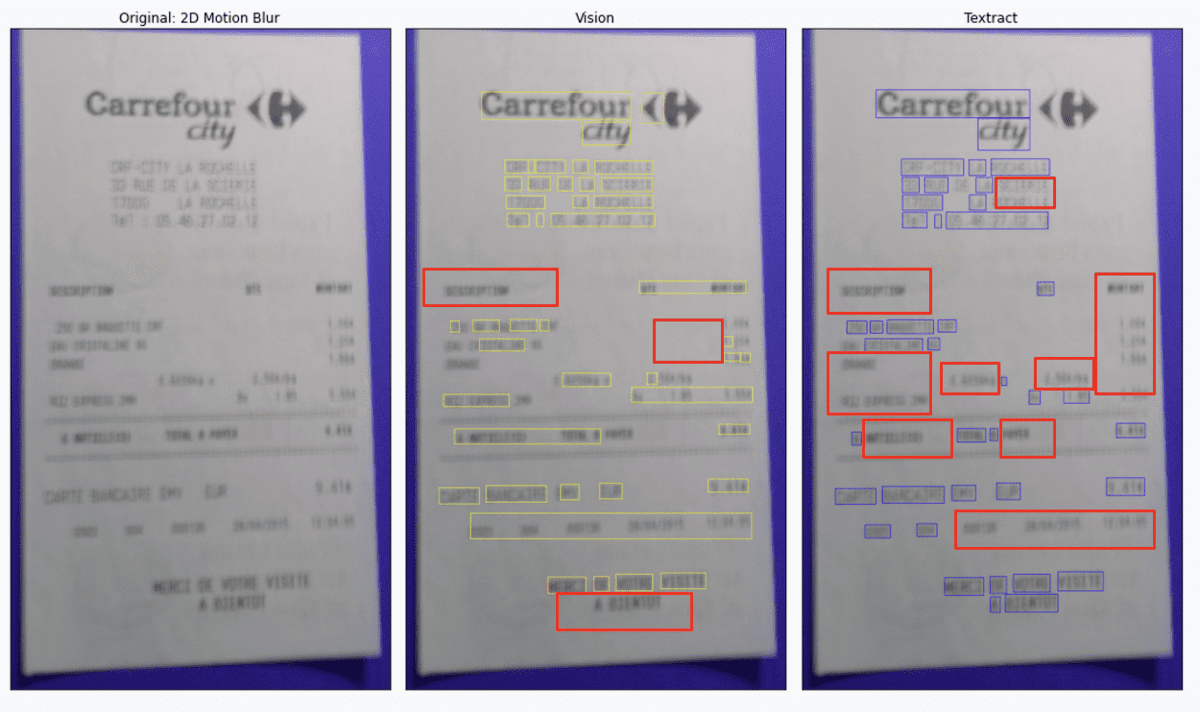

Fig 5.2 (a): SmartDocQA – Out of focus blur: Vision and Textract text output comparison. Left image is the input and middle one is Vision output where yellow boxes are word level bounding boxes and the right image is Textract output where blue boxes are word level bounding boxes. Red boxes indicate the words without bounding boxes, i.e. the words that have not been detected by the API.

Fig 5.2 (b): SmartDocQA – 2D Motion Blur: Vision and Textract text output comparison. Red boxes indicate the texts that are not recognized by the APIs.

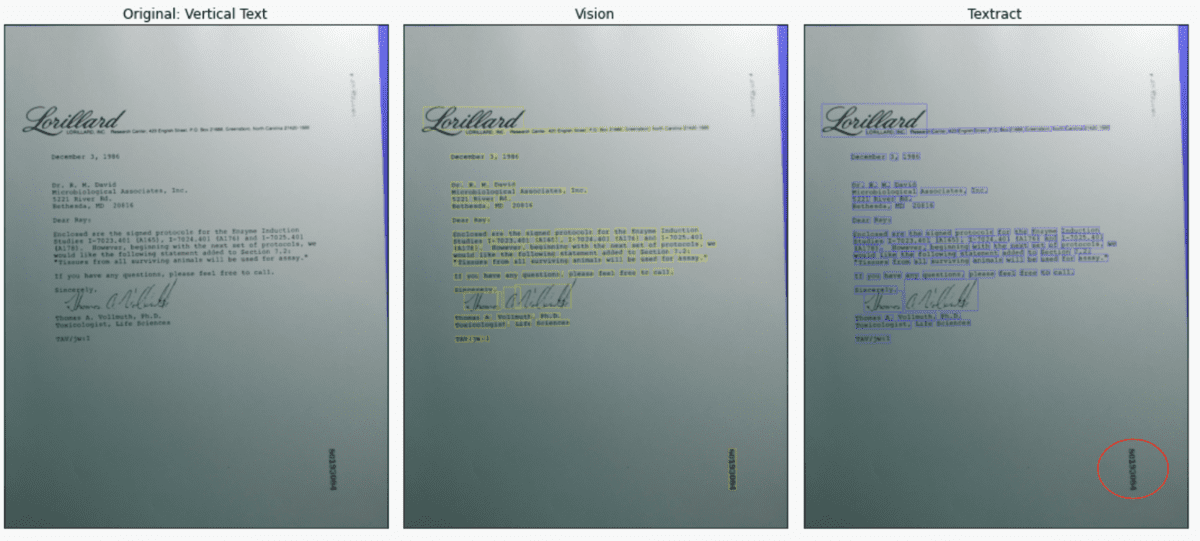

Fig 5.2 (c): SmartDocQA – Vertical text: Vision and Textract text output comparison. Red circle indicates that Textract API is not able to detect the vertical text in the image.

It is now established that some noises do affect API’s text recognition capabilities.

Hence we tried various methods to clean the images before feeding to the API and checked whether API performance improved or not. We have provided links in the reference section where these methods can be understood. Below is the summary of the observations:

| S. No. | Noise / Variation | Cleaning Method | Total Samples | Observation | |

| Vision | Textract | ||||

| 1 | Blur (Out of Focus) | Kernel Sharpening | 1 | Degraded | No Effect |

| Custom Pre-processing | 3 | 1: Improved

1: Slightly Improved 1: Slightly Degraded |

1: Improved

2: No Effect |

||

| 2 | Blur (2D Motion Blur) | Blurring (Average/Median) | 2 | 2: Improved | 1: Slightly Degraded

1: Improved |

| Kernel Sharpening | 2 | 1: No effect

1: Degraded |

1: Degraded

1: Improved |

||

| Custom Pre-processing | 1 | No effect | 1: No effect | ||

| 3 | Horizontal Motion Blur) | Blurring (Average/Median) | 2 | 1: Degraded

1: Improved |

1: Slightly Improved

1: Degraded |

| Custom Pre-processing | 1 | Slightly Improved | No Effect | ||

| 4 | Watermark | Morphological Filtering | 2 | 1: Improved

1: Degraded |

1: Improved

1: Degraded |

As seen from the table, these cleaning methods do not work on all the images and in fact sometimes the API performance degrades after applying these cleaning methods. Hence there is a need for a unified solution which can work on all kinds of noises.

After testing various datasets including Noisy Office, Smart Doc QA, SROIE and custom datasets to compare and evaluate the performance of Tesseract, Vision and Textract, we can conclude that the OCR output gets affected by the noises present in the documents. The inbuilt denoiser or pre-processor is not sufficient to handle most of the noises including motion blur, watermark etc. If the document images are denoised, the OCR output can improve significantly. The noises in the documents are diversified and we tried various non-model methods to clean the images. Different methods work for different kinds of noises. Currently, there is no unified option available which can handle all kinds of noises or at least major noises. Hence there is a scope to make Intelligent Document Processing smarter. There is a need for a unified (“one model fitting all”) solution which can denoise the document before inputting to the OCR API to improve the performance. In part 2 of this blog series we will explore denoising methods to enhance the API’s performance.

References

- F. Zamora-Martinez, S. España-Boquera and M. J. Castro-Bleda, Behaviour-based Clustering of Neural Networks applied to Document Enhancement, in: Computational and Ambient Intelligence, pages 144-151, Springer, 2007.

UCI Machine Learning Repository [https://archive.ics.uci.edu/ml/datasets/NoisyOffice] - Castro-Bleda, MJ.; España Boquera, S.; Pastor Pellicer, J.; Zamora Martínez, FJ. (2020). The NoisyOffice Database: A Corpus To Train Supervised Machine Learning Filters For Image Processing. The Computer Journal. 63(11):1658-1667. https://doi.org/10.1093/comjnl/bxz098

- Nibal Nayef, Muhammad Muzzamil Luqman, Sophea Prum, Sebastien Eskenazi, Joseph Chazalon, Jean-Marc Ogier: “SmartDoc-QA: A Dataset for Quality Assessment of Smartphone Captured Document Images – Single and Multiple Distortions”, Proceedings of the sixth international workshop on Camera Based Document Analysis and Recognition (CBDAR), 2015.

- Zheng Huang, Kai Chen, Jianhua He, Xiang Bai, Dimosthenis Karatzas, Shjian Lu, C. V. Jawahar , ICDAR2019 Competition on Scanned Receipt OCR and Information Extraction, (SROIE) 2021 [arXiv:2103.10213v1]

- https://cloud.google.com/vision

- https://aws.amazon.com/textract/

- https://docs.opencv.org/3.4/d4/d13/tutorial_py_filtering.html

- https://scikit-image.org/docs/stable/auto_examples/applications/plot_morphology.html

- https://pyimagesearch.com/2014/09/01/build-kick-ass-mobile-document-scanner-just-5-minutes/

Akshay Kumar is Principal Data Scientist at Sigmoid with 12 years of experience in data sciences and is an expert in marketing analytics, recommendation systems, time series forecasting, fraud risk modelling, image processing & NLP. He builds scalable data science based solutions & systems to solve tough business problems while keeping user experience at the centre.

Vijendra Jain is currently working with Sigmoid as an Associate Lead Data Scientist. With 7+ years of experience in Data Science, he has majorly worked in areas like in Marketing Mix Modelling, Image Classification and Segmentation, and Recommendation Systems.