Retrieval-augmented generation (RAG) methods improve the capabilities of large language models (LLMs) by incorporating external knowledge retrieved from vast corpora. This approach is particularly beneficial for answering open-domain questions, where detailed and accurate answers are crucial. By leveraging external information, RAG systems can overcome the limitations of relying solely on the parametric knowledge embedded in LLMs, making them more effective in handling complex queries.

A major challenge in RAG systems is the imbalance between the retriever and the reader. Traditional frameworks use short retrieval units, such as 100-word passages, so the retriever must search through a very large number of units to find the relevant ones. This design places a heavy burden on the retriever while leaving the reader with a comparatively simple task. The result is inefficiency, plus semantic incompleteness when documents are truncated into passages. This imbalance limits the overall performance of RAG systems and calls for a rethink of their design.
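
To make the truncation problem concrete, here is a minimal Python sketch that splits a document into fixed 100-word passages, the kind of short retrieval unit described above. The function name `split_into_passages` is hypothetical, and real pipelines differ in details such as overlap and sentence handling. The point is only that a sentence crossing a passage boundary gets cut in two.

```python
def split_into_passages(text: str, words_per_passage: int = 100) -> list[str]:
    """Split text into consecutive, non-overlapping passages of at most
    `words_per_passage` whitespace-separated words (no sentence awareness)."""
    words = text.split()
    return [
        " ".join(words[i : i + words_per_passage])
        for i in range(0, len(words), words_per_passage)
    ]


# Toy document: 97 filler words followed by one 7-word sentence.
doc = " ".join(["filler"] * 97 + "LongRAG groups related documents into long units".split())
passages = split_into_passages(doc)

print(len(passages))             # 2
print(passages[0].split()[-3:])  # ['LongRAG', 'groups', 'related']
print(passages[1])               # documents into long units
```

Neither passage contains the full sentence, so a retriever that scores passages independently never sees it intact.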

Current RAG methods include techniques such as Dense Passage Retrieval (DPR), which focuses on finding short, precise retrieval units in large corpora. These methods often retrieve many units and rely on complex re-ranking steps to achieve high accuracy. Although effective to some extent, they remain inefficient and can represent semantics incompletely because they depend on short retrieval units.

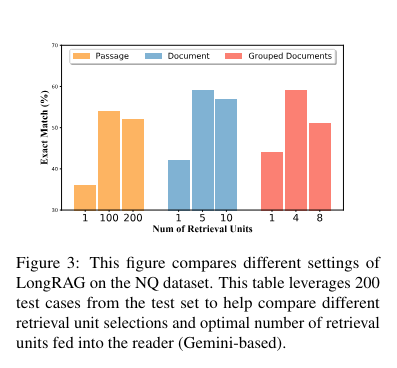

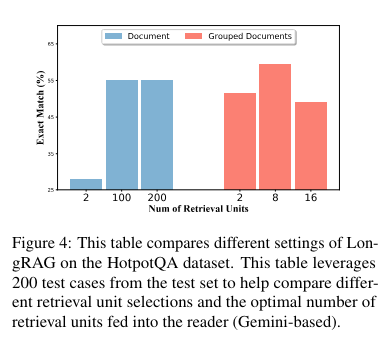

To address these challenges, a research team from the University of Waterloo introduced a framework called LongRAG. It consists of a “long retriever” and a “long reader”, designed to work with longer retrieval units of around 4K tokens each. By increasing the unit size, LongRAG reduces the number of retrieval units from 22 million to 600,000, which substantially eases the retriever's workload and improves retrieval scores. Because each unit carries more complete information, the retriever works with more coherent context, improving both the efficiency and the accuracy of the system.
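
Using the unit counts quoted above, the reduction in the number of candidates the retriever must score works out as follows:

$$
\frac{22{,}000{,}000\ \text{units}}{600{,}000\ \text{units}} \approx 36.7
$$

In other words, each query is matched against roughly 37 times fewer retrieval units.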

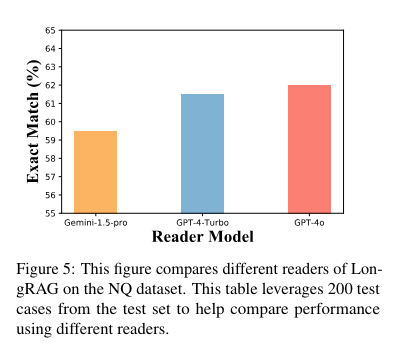

LongRAG groups related documents into long retrieval units, which the long retriever then searches to identify relevant information. To extract the final answer, the top 4 to 8 retrieved units are concatenated and fed into a long-context LLM such as Gemini-1.5-Pro or GPT-4o. This approach relies on the ability of long-context models to process large amounts of text in a single pass, so the reader extracts answers from complete rather than truncated context.
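
The grouping-and-concatenation step can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. Related documents are assumed to arrive already adjacent in the input list (how relatedness is determined is outside the scope of this sketch), tokens are approximated by whitespace-separated words, and `Document`, `group_into_long_units`, `build_reader_prompt`, and the prompt layout are all hypothetical. The call to the long-context LLM is omitted.

```python
from dataclasses import dataclass


@dataclass
class Document:
    title: str
    text: str


def approx_tokens(text: str) -> int:
    # Hypothetical stand-in for a real tokenizer: counts whitespace-separated words.
    return len(text.split())


def group_into_long_units(docs: list[Document], max_tokens: int = 4096) -> list[list[Document]]:
    """Greedily pack documents, in order, into units of at most `max_tokens`.

    Assumes related documents are already adjacent in `docs`. A single document
    longer than `max_tokens` becomes a unit of its own rather than being truncated.
    """
    units: list[list[Document]] = []
    current: list[Document] = []
    current_tokens = 0
    for doc in docs:
        n = approx_tokens(doc.text)
        if current and current_tokens + n > max_tokens:
            units.append(current)  # close the current unit before it overflows
            current, current_tokens = [], 0
        current.append(doc)
        current_tokens += n
    if current:
        units.append(current)
    return units


def build_reader_prompt(question: str, ranked_units: list[list[Document]], k: int = 4) -> str:
    """Concatenate the top-k ranked units into a single context for the long reader."""
    blocks = [
        f"Title: {doc.title}\n{doc.text}"
        for unit in ranked_units[:k]
        for doc in unit
    ]
    context = "\n\n".join(blocks)
    return f"{context}\n\nQuestion: {question}\nAnswer:"


docs = [
    Document("A", "alpha " * 3000),  # ~3000 tokens
    Document("B", "beta " * 1000),   # ~1000 tokens: fits with A (4000 <= 4096)
    Document("C", "gamma " * 500),   # ~500 tokens: would overflow, starts a new unit
]
units = group_into_long_units(docs)
print([[d.title for d in unit] for unit in units])  # [['A', 'B'], ['C']]

# `units` stands in for a retriever's ranked output here; no LLM is called.
prompt = build_reader_prompt("Which documents mention beta?", units, k=1)
```

Greedy packing keeps each unit under the budget without splitting any document, which mirrors the goal of avoiding truncation. In a real system, a proper tokenizer and an actual relatedness signal would replace the two simplifications.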

In more detail, the method uses one encoder to map the input question to a vector and a different encoder to map each retrieval unit to a vector. The similarity between the question vector and each unit vector is computed, and the most similar units are selected. Because the long retriever searches over far fewer units, the search space shrinks and retrieval precision improves. The retrieved units are then concatenated and passed to the long reader, which uses this context to generate the final answer. As a result, the reader works with a more complete set of information, which improves overall system performance.
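
The scoring step described above can be illustrated with a small dual-encoder sketch. Everything here is a simplifying assumption: a toy bag-of-words embedding stands in for the trained neural encoders, the two encoder wrappers share it only for brevity, and similarity is the inner product of normalized vectors, a common choice in DPR-style retrievers. The article does not name the encoders or the exact similarity function, and all function names are hypothetical.

```python
import heapq
import math
import re
from collections import Counter

Vector = dict[str, float]


def _embed(text: str) -> Vector:
    """Toy sparse bag-of-words embedding, L2-normalized.
    A stand-in for a trained neural encoder."""
    counts = Counter(re.findall(r"\w+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {tok: c / norm for tok, c in counts.items()} if norm else {}


def encode_question(question: str) -> Vector:
    # In a real dual-encoder retriever, this would be the trained question encoder.
    return _embed(question)


def encode_unit(unit_text: str) -> Vector:
    # ...and this would be a separate trained encoder for retrieval units.
    return _embed(unit_text)


def similarity(q: Vector, u: Vector) -> float:
    # Inner product; both vectors are L2-normalized, so this equals cosine similarity.
    return sum(w * u.get(tok, 0.0) for tok, w in q.items())


def retrieve(question: str, units: list[str], k: int = 4) -> list[int]:
    """Return indices of the k units most similar to the question, best first."""
    q = encode_question(question)
    unit_vecs = [encode_unit(u) for u in units]  # typically precomputed offline
    scores = [similarity(q, v) for v in unit_vecs]
    return heapq.nlargest(k, range(len(units)), key=scores.__getitem__)


units = [
    "LongRAG was developed at the University of Waterloo",
    "Kharagpur is a city in India",
    "Dense passage retrieval uses two encoders",
]
print(retrieve("Which university developed LongRAG?", units, k=1))  # [0]
```

Keeping the question and unit encoders separate lets unit vectors be computed once, offline, for the whole corpus; only the question is encoded at query time.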

LongRAG performs strongly. On the Natural Questions (NQ) dataset, it achieved an exact match (EM) score of 62.7%, a significant improvement over traditional methods. On the HotpotQA dataset, it achieved an EM score of 64.3%. These results are on par with state-of-the-art RAG models. The framework reduced the number of retrieval units by more than 30 times and improved answer retrieval by roughly 20 percentage points over traditional methods, reaching an answer recall@1 of 71% on NQ and 72% on HotpotQA.
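
The two metrics quoted above can be made concrete with a short sketch. Exact match here uses the SQuAD-style answer normalization commonly used for open-domain QA benchmarks such as NQ. Answer recall@k (the retrieval score quoted above) is simplified to "a gold answer appears as a whole-token span in one of the top-k retrieved units". The evaluation scripts behind the reported numbers may differ in details, and the function names are hypothetical.

```python
import re
import string

_PUNCT = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """SQuAD-style normalization: lowercase, strip punctuation, drop the
    articles a/an/the, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in _PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold_answers: list[str]) -> bool:
    """True if the normalized prediction equals any normalized gold answer."""
    pred = normalize_answer(prediction)
    return any(pred == normalize_answer(g) for g in gold_answers)


def answer_recall_at_k(ranked_units: list[str], gold_answers: list[str], k: int) -> bool:
    """True if any gold answer appears, as a whole-token span, in one of the top-k units."""
    # Pad with spaces so "ant" does not match inside "giant".
    top_k = [f" {normalize_answer(u)} " for u in ranked_units[:k]]
    golds = [f" {n} " for n in (normalize_answer(g) for g in gold_answers) if n]
    return any(g in u for u in top_k for g in golds)


print(exact_match("The University of Waterloo", ["University of Waterloo"]))  # True
print(exact_match("Waterloo", ["University of Waterloo"]))                    # False

ranked = ["Kharagpur is in India.", "LongRAG came from the University of Waterloo."]
print(answer_recall_at_k(ranked, ["University of Waterloo"], k=1))  # False
print(answer_recall_at_k(ranked, ["University of Waterloo"], k=2))  # True
```

A benchmark score such as 62.7% EM is the average of these per-question values over the evaluation set.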

Because LongRAG processes long retrieval units, it preserves the semantic integrity of documents, allowing for more accurate and complete answers. By reducing the load on the retriever and leveraging long-context LLMs, LongRAG offers a more balanced and efficient approach to retrieval-augmented generation. The University of Waterloo research provides useful insights for modernizing RAG system design and points to promising directions for future work in this field.

In conclusion, LongRAG is an important step toward addressing the inefficiencies and imbalances of traditional RAG systems. By using long retrieval units and the capabilities of long-context LLMs, it improves the accuracy and efficiency of open-domain question answering. The framework improves retrieval performance and lays a foundation for future developments in retrieval-augmented generation systems.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.
