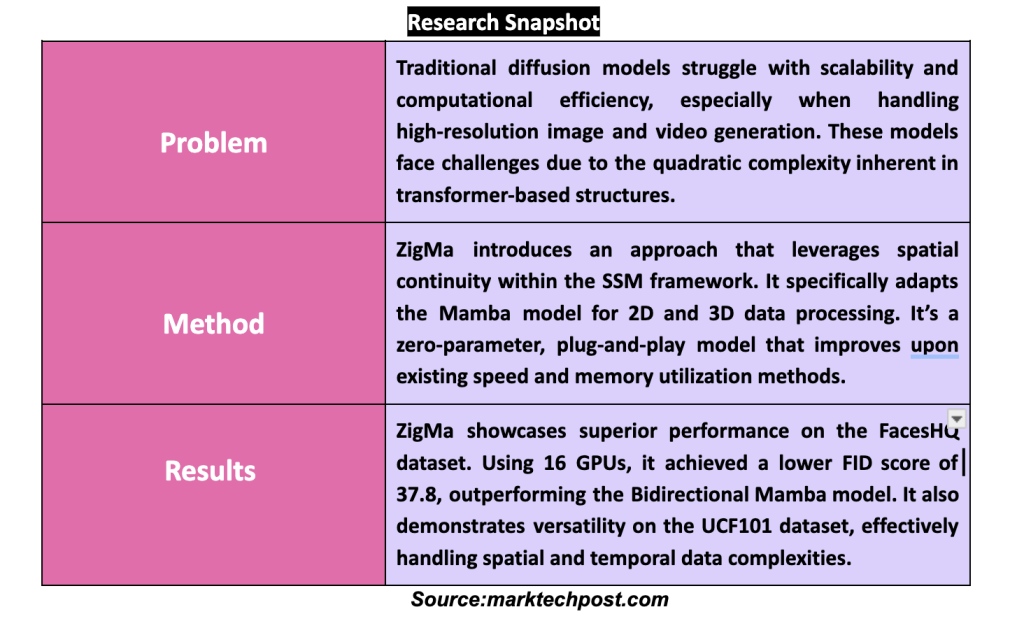

In the changing landscape of computational models for visual data processing, the search for models that balance efficiency with the ability to handle large-scale, high-resolution data is relentless. Although conventional diffusion models can generate stunning visual content, they struggle with scalability and computational efficiency, especially when applied to high-resolution image and video generation. The challenge stems from the quadratic complexity of self-attention in transformer-based architectures, a core building block of many recent diffusion models: the cost of attention grows with the square of the number of tokens.
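
To make the quadratic-versus-linear gap concrete, here is a small back-of-the-envelope sketch. It assumes, purely for illustration, square grids split into 2×2 patches, and compares the number of entries in a full self-attention matrix with the number of state updates a linear-time sequential scan would need. The helper names (`num_tokens`, `attention_scores`, `scan_steps`) are hypothetical and not taken from the study.

```python
def num_tokens(height: int, width: int, patch: int) -> int:
    """Tokens produced by splitting a height x width grid into patch x patch tiles."""
    return (height // patch) * (width // patch)


def attention_scores(n: int) -> int:
    """Entries in a full self-attention score matrix (every token attends to every token)."""
    return n * n


def scan_steps(n: int) -> int:
    """State updates in a linear-time recurrent scan (one per token)."""
    return n


# Hypothetical setting: square grids tokenized with 2x2 patches.
for side in (256, 512, 1024):
    n = num_tokens(side, side, patch=2)
    print(f"{side}x{side}: {n:,} tokens, "
          f"{attention_scores(n):,} attention scores, {scan_steps(n):,} scan steps")
```

Whatever the patch size, doubling the side length multiplies the token count by 4, the attention matrix by 16, and the scan cost only by 4.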

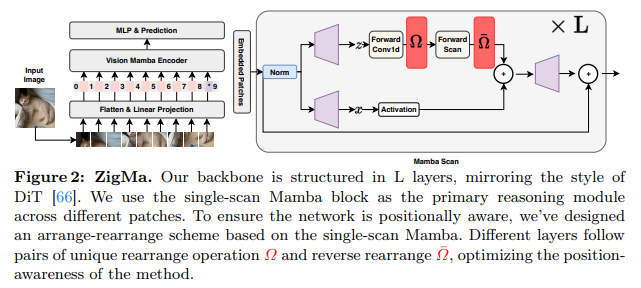

State-space models (SSMs) offer an alternative, and among them Mamba has emerged as an efficient architecture for modeling long sequences, with a cost that scales linearly in sequence length. Mamba's strength in 1D sequence modeling hinted at its potential to make diffusion models far more efficient. However, adapting it to 2D and 3D data, which image and video processing require, is far from straightforward. The crux is maintaining spatial continuity: tokens that are neighbors in the image should stay close to each other in the scanned sequence. This property is critical to the quality and coherence of the generated visual content, yet conventional approaches often overlook it.
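
The sketch below illustrates both halves of that argument under simplifying assumptions. `ssm_scan` is a toy scalar linear state-space recurrence (it omits Mamba's input-dependent, "selective" parameters and is not Mamba's actual implementation), showing that one pass over the sequence suffices. `raster_order` and `spatial_jumps` are hypothetical helpers showing how plain row-major flattening of a 2D grid breaks spatial continuity at every row boundary.

```python
from typing import List, Tuple


def ssm_scan(x: List[float], a: float, b: float, c: float) -> List[float]:
    """Toy scalar linear SSM: h_t = a*h_{t-1} + b*x_t, y_t = c*h_t.

    One pass over the input, so cost grows linearly with sequence length.
    Mamba's input-dependent ("selective") parameters are deliberately omitted.
    """
    h = 0.0
    ys = []
    for x_t in x:
        h = a * h + b * x_t  # carry the hidden state forward and mix in the new input
        ys.append(c * h)     # read the output from the hidden state
    return ys


def raster_order(height: int, width: int) -> List[Tuple[int, int]]:
    """Row-major flattening: every row is read left to right."""
    return [(r, c) for r in range(height) for c in range(width)]


def spatial_jumps(order: List[Tuple[int, int]]) -> int:
    """Count consecutive sequence positions whose grid cells are not 4-neighbours."""
    return sum(
        1
        for (r0, c0), (r1, c1) in zip(order, order[1:])
        if abs(r0 - r1) + abs(c0 - c1) != 1
    )


print(ssm_scan([1.0, 0.0, 0.0], a=0.5, b=1.0, c=2.0))
print(spatial_jumps(raster_order(4, 4)))
```

On a 4×4 grid the raster order has three discontinuities, one at the end of every row except the last: the scan jumps from the right edge of one row straight to the left edge of the next.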

The breakthrough came with Zigzag Mamba (ZigMa), introduced by researchers at LMU Munich: a diffusion model backbone that builds spatial continuity into the Mamba framework. Described in the study as a simple, plug-and-play, zero-parameter paradigm, the method preserves spatial relationships within visual data while improving speed and memory efficiency. ZigMa outperformed the baselines it was compared against on several benchmarks, showing better computational efficiency without compromising the fidelity of the generated content.
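
Why can such a scheme be "zero-parameter"? A minimal sketch, assuming a simple snake (boustrophedon) path: the zigzag scan is only a fixed permutation of token positions, applied before a 1D scan and undone afterwards, with no learned weights. The paper reportedly uses several zigzag paths (for example flipped or transposed variants) across layers; only one is shown here, and all function names are hypothetical.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def zigzag_order(height: int, width: int) -> List[Tuple[int, int]]:
    """Snake scan: even rows left to right, odd rows right to left."""
    order: List[Tuple[int, int]] = []
    for r in range(height):
        cols = range(width) if r % 2 == 0 else range(width - 1, -1, -1)
        order.extend((r, c) for c in cols)
    return order


def to_permutation(order: List[Tuple[int, int]], width: int) -> List[int]:
    """Express a scan order as indices into the row-major token sequence."""
    return [r * width + c for r, c in order]


def invert(perm: List[int]) -> List[int]:
    """Inverse permutation, used to put tokens back in row-major order after the scan."""
    inv = [0] * len(perm)
    for i, p in enumerate(perm):
        inv[p] = i
    return inv


def reorder(tokens: Sequence[T], perm: List[int]) -> List[T]:
    """Gather tokens in the order given by perm (no learned weights involved)."""
    return [tokens[p] for p in perm]


def spatial_jumps(order: List[Tuple[int, int]]) -> int:
    """Count consecutive sequence positions whose grid cells are not 4-neighbours."""
    return sum(
        1
        for (r0, c0), (r1, c1) in zip(order, order[1:])
        if abs(r0 - r1) + abs(c0 - c1) != 1
    )


tokens = list("abcdef")                     # a 2 x 3 grid stored row-major
perm = to_permutation(zigzag_order(2, 3), width=3)
scanned = reorder(tokens, perm)             # sequence fed to a 1D scan
restored = reorder(scanned, invert(perm))   # back to row-major for the next stage
print(scanned, restored == tokens, spatial_jumps(zigzag_order(4, 4)))
```

Unlike the raster order, the snake path never jumps: each row ends next to where the following row begins. Because the permutation is fixed and invertible, it can be wrapped around an existing scan block without adding parameters.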

The study evaluates ZigMa on several datasets, including FacesHQ 1024×1024 and MultiModal-CelebA-HQ, showing that it can handle both high-resolution images and video sequences. A highlight is its performance on FacesHQ, where, using 16 GPUs, it achieved a Fréchet Inception Distance (FID) of 37.8, compared with 51.1 for the bidirectional Mamba baseline (lower FID is better).
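
FID compares the mean and covariance of feature statistics from real and generated images using the Fréchet distance between two Gaussians, ||μ₁ − μ₂||² + Tr(Σ₁ + Σ₂ − 2(Σ₁Σ₂)^(1/2)). The sketch below is a simplified illustration that assumes diagonal covariances, so the matrix square root reduces to an element-wise one. Real FID uses full covariance matrices of Inception-v3 features and a matrix square root; the function name and the toy statistics are hypothetical.

```python
import math
from typing import Sequence


def frechet_distance_diag(
    mu1: Sequence[float], var1: Sequence[float],
    mu2: Sequence[float], var2: Sequence[float],
) -> float:
    """Fréchet distance between two Gaussians with DIAGONAL covariances.

    General form: ||mu1 - mu2||^2 + Tr(S1 + S2 - 2 (S1 S2)^(1/2)).
    With diagonal S1, S2 the matrix square root is taken element-wise.
    """
    mean_term = sum((a - b) ** 2 for a, b in zip(mu1, mu2))
    cov_term = sum(v1 + v2 - 2.0 * math.sqrt(v1 * v2) for v1, v2 in zip(var1, var2))
    return mean_term + cov_term


# Toy 2-D "feature statistics" (made up, not Inception features)
print(frechet_distance_diag([0.0, 0.0], [1.0, 1.0], [1.0, 0.0], [4.0, 1.0]))  # 2.0
```

Identical statistics give a distance of zero, so lower is better. By the same token, the reported drop from 51.1 to 37.8 is roughly 26% lower than the bidirectional Mamba score.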

ZigMa's versatility shows in its adaptability to various resolutions while maintaining high-quality visual results. This is particularly evident on the UCF101 dataset for video generation, where ZigMa, using a factorized 3D zigzag scheme, consistently outperformed the baseline models it was compared with, indicating stronger handling of combined spatial and temporal structure.
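
One plausible reading of "factorized 3D zigzag", sketched here as an assumption rather than the paper's exact design: instead of forcing a video of T frames into one long 3D path, the tokens are organized into two kinds of shorter 1D sequences. Spatial sequences scan each frame along a 2D zigzag, and temporal sequences follow each pixel position through time. How the two are interleaved across layers is not shown, and every name below is hypothetical.

```python
from typing import List, Tuple

Index = Tuple[int, int, int]  # (frame, row, col)


def zigzag_2d(height: int, width: int) -> List[Tuple[int, int]]:
    """Snake scan of one frame: even rows left to right, odd rows right to left."""
    return [
        (r, c)
        for r in range(height)
        for c in (range(width) if r % 2 == 0 else range(width - 1, -1, -1))
    ]


def spatial_sequences(frames: int, height: int, width: int) -> List[List[Index]]:
    """One sequence per frame, each following the 2D zigzag path."""
    path = zigzag_2d(height, width)
    return [[(t, r, c) for r, c in path] for t in range(frames)]


def temporal_sequences(frames: int, height: int, width: int) -> List[List[Index]]:
    """One sequence per spatial location, running through time."""
    return [
        [(t, r, c) for t in range(frames)]
        for r in range(height)
        for c in range(width)
    ]


spatial = spatial_sequences(frames=2, height=2, width=3)
temporal = temporal_sequences(frames=2, height=2, width=3)
print(len(spatial), len(spatial[0]), len(temporal), len(temporal[0]))
```

Each token appears exactly once in the spatial set and once in the temporal set, so both can be processed as batches of 1D sequences. The spatial pass keeps neighbouring pixels adjacent, and the temporal pass keeps consecutive frames of the same location adjacent.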

In conclusion, ZigMa emerges as a novel diffusion model that skillfully balances computational efficiency with the ability to generate high-quality visual content. Its unique approach to maintaining spatial continuity sets it apart and offers a scalable solution for generating high-resolution images and videos. With impressive performance metrics and versatility across multiple data sets, ZigMa advances the field of diffusion modeling and opens new avenues for research and application in visual data processing.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.

Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.