Introduction

In the field of language models, where efficiency and precision are paramount, Llama 3.1 Storm 8B stands out as a notable achievement. This fine-tuned version of Meta’s Llama 3.1 8B Instruct marks a significant step forward in conversational and function-calling capabilities within the 8B parameter model class. The approach rests on careful data curation, in which high-quality training samples were selected to maximize the model’s potential.

The tuning process did not stop there; it progressed through Spectrum-based targeted fine-tuning and culminated in strategic model merging. This article discusses the techniques that propelled Llama 3.1 Storm 8B past its predecessors, setting a new benchmark among small language models.

What is Llama-3.1-Storm-8B?

Llama-3.1-Storm-8B builds on the strengths of Llama-3.1-8B-Instruct by improving conversational and function-calling capabilities within the 8B parameter model class. It shows notable improvements across multiple benchmarks, including instruction following, knowledge-driven question answering (QA), reasoning, hallucination reduction, and function calling. These advancements benefit AI developers and enthusiasts working with limited computational resources.

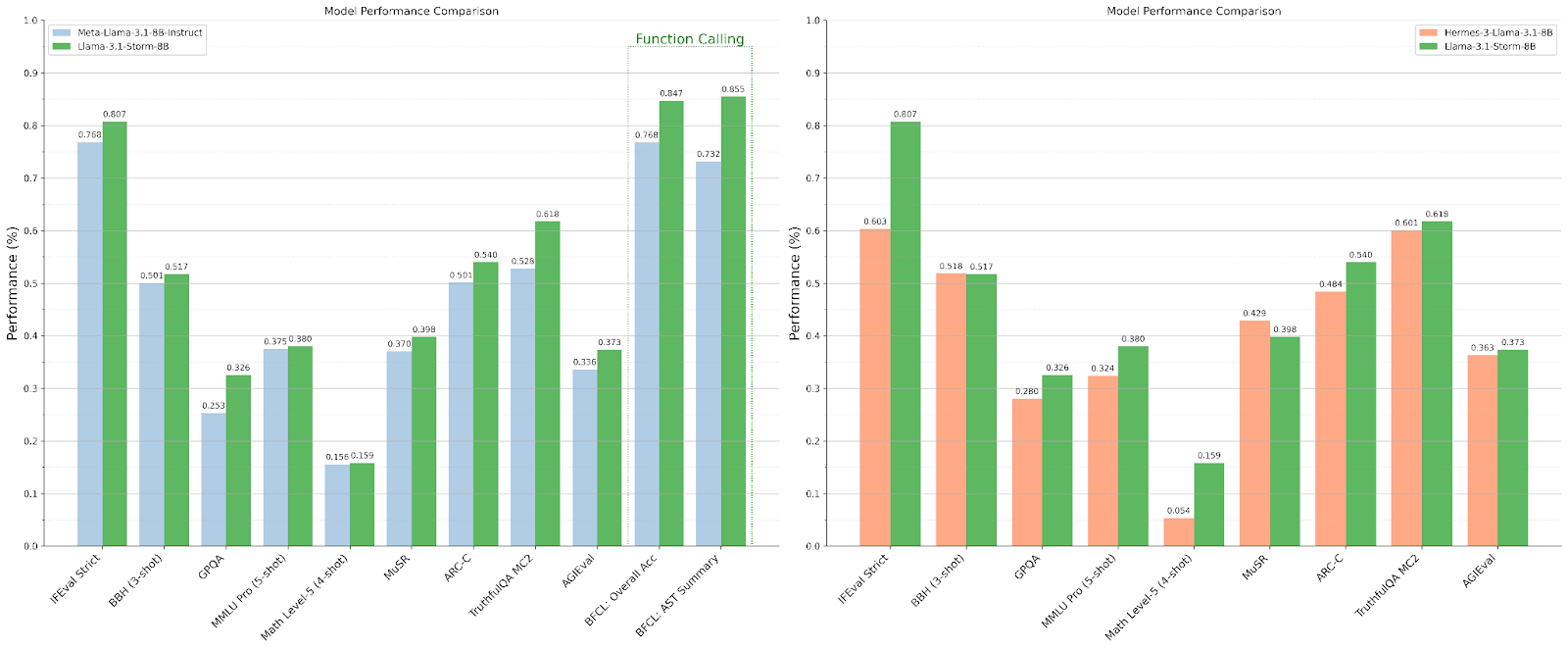

Compared to the recent Hermes-3-Llama-3.1-8B model, Llama-3.1-Storm-8B comes out ahead on 7 out of 9 benchmarks. Hermes-3 leads only on the MuSR benchmark, and both models perform similarly on the BBH benchmark.

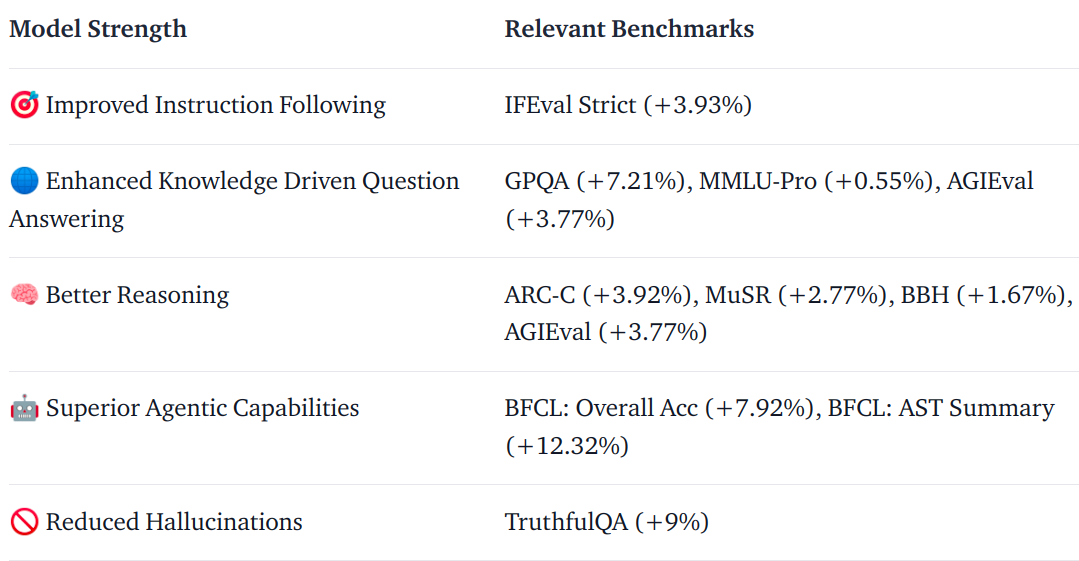

Llama 3.1 Storm 8B Strengths

The image above represents improvements (absolute gains) over Llama 3.1 8B Instruct.

Llama 3.1 Storm 8B Models

Here are the Llama 3.1 Storm 8B models:

- Llama 3.1 Storm 8B: This is the primary fine-tuned model.

- Llama 3.1 Storm 8B FP8 Dynamic: This variant quantizes the weights and activations of Llama-3.1-Storm-8B to the FP8 data type, resulting in a model that is ready for inference with vLLM. By reducing the number of bits per parameter from 16 to 8, this optimization saves approximately 50% in GPU memory and disk space requirements.

Only the weights and activations of the linear operators within the transformer blocks are quantized. Symmetric per-tensor quantization is applied, in which a single linear scale maps the FP8 representations of the quantized weights and activations. Quantization is performed with LLM Compressor, using 512 sequences from UltraChat.

- Llama 3.1 Storm 8B GGUF: This is the GGUF quantized version of Llama-3.1-Storm-8B, for use with llama.cpp. GGUF is a binary file format for storing models for inference with GGML and GGML-based runners. It is designed for fast loading and saving of models and for ease of reading. Models are traditionally developed in PyTorch or another framework and then converted to GGUF for use with GGML. GGUF is the successor to the GGML, GGMF, and GGJT file formats and is designed to be unambiguous, containing all the information needed to load a model. It is also designed to be extensible, so that new information can be added to models without breaking compatibility.
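The roughly 50% saving quoted for the FP8 variant follows directly from halving the bits per parameter. The short calculation below is a back-of-the-envelope sketch that assumes a round 8 billion parameters and counts weights only; activations, the KV cache, and file-format overhead are ignored, and the helper name `weight_memory_gb` is made up for illustration.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate storage for model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


NUM_PARAMS = 8e9  # assumption: a round 8 billion parameters

bf16_gb = weight_memory_gb(NUM_PARAMS, 16)  # 16 bits per parameter
fp8_gb = weight_memory_gb(NUM_PARAMS, 8)    # 8 bits per parameter
saving = 1 - fp8_gb / bf16_gb               # fraction of memory saved

print(f"BF16: {bf16_gb:.0f} GB, FP8: {fp8_gb:.0f} GB, saving: {saving:.0%}")
# prints "BF16: 16 GB, FP8: 8 GB, saving: 50%"
```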


The approach followed

The performance comparison chart shows that Llama 3.1 Storm 8B significantly outperforms Meta AI's Llama-3.1-8B-Instruct and the Hermes-3-Llama-3.1-8B model across a range of benchmarks.

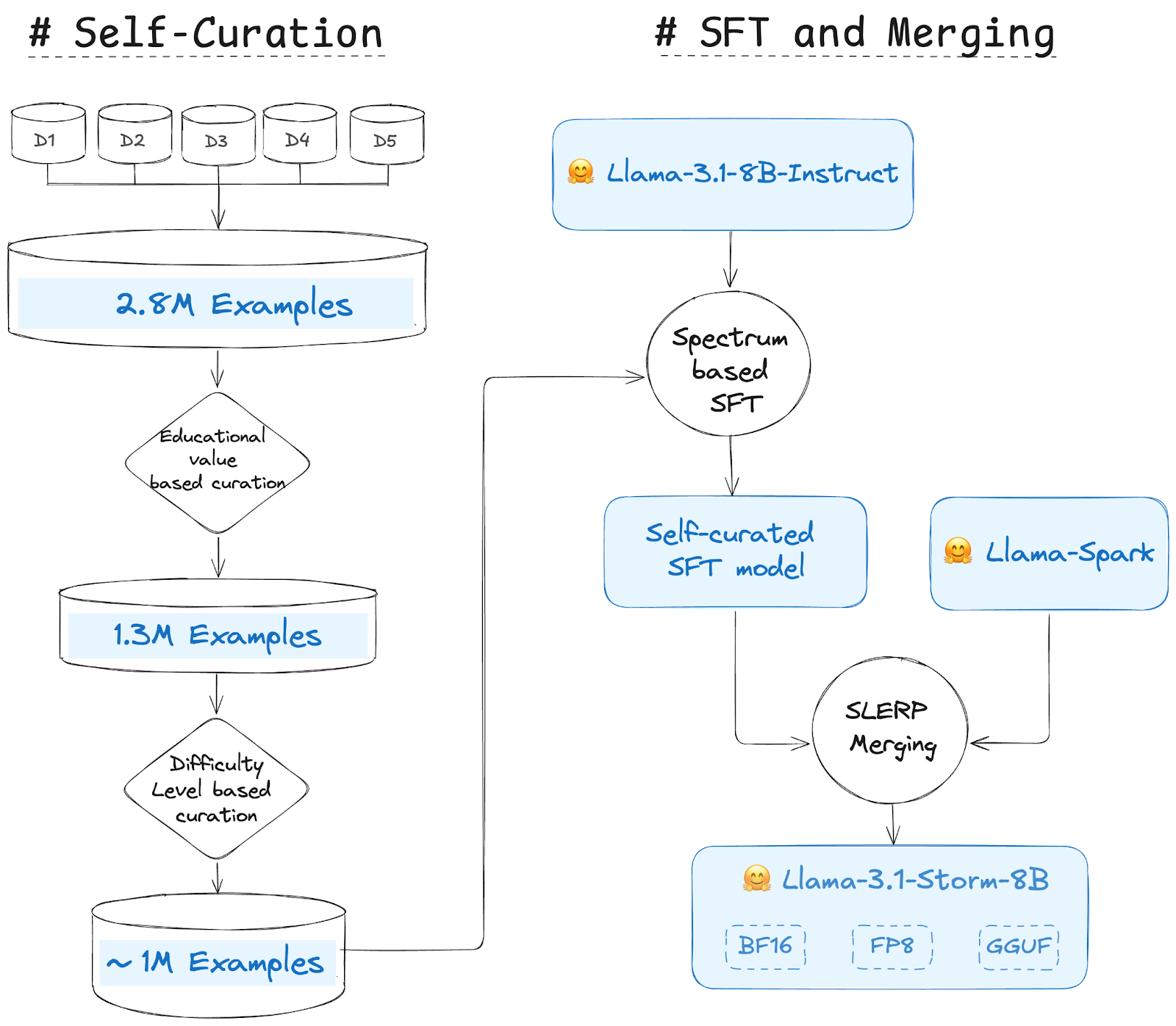

Their approach consists of three main steps:

Self-Curation

The source datasets used for Llama 3.1 Storm 8B are these 5 open-source datasets: The-Tome, agent-data, Magpie-Llama-3.1-Pro-300K-Filtered, openhermes_200k_unfiltered, and Llama-3-Magpie-PO-100K-SML. The combined datasets contain a total of ~2.8 million examples. In data curation, each example is assigned one or more values, and selection decisions are then made based on those values. LLMs or other machine learning models are typically used to assign them. With an LLM, there are numerous ways to assign a value to an example; educational value and difficulty level are two of the most commonly used metrics.

Educational value captures how informative an example (instruction + response) is, and difficulty level captures how hard it is. Educational value ranges from 1 to 5, where 1 is the least educational and 5 is the most. There are 3 difficulty levels: Easy, Medium, and Hard. The goal is to improve the model through self-curation; therefore, the authors focused on using the same model for scoring: Llama-3.1-8B-Instruct rather than Llama-3.1-70B-Instruct, Llama-3.1-405B-Instruct, or other larger LLMs.

Self-curation steps:

- Step 1: Educational value-based curation: They used Llama-3.1-8B-Instruct to assign an educational value (1-5) to all ~2.8M examples and then selected the samples with a score greater than 3, following the approach of the FineWeb-Edu dataset. This step reduced the total number of examples from 2.8 million to 1.3 million.

- Step 2: Difficulty level-based curation: They followed a similar approach and used Llama-3.1-8B-Instruct to assign a difficulty level (Easy, Medium, or Hard) to the 1.3 million examples from the previous step. After some experiments, they selected the Medium and Hard examples. This strategy is similar to the data pruning described in the Llama-3.1 paper. There were ~650K Medium and ~325K Hard examples.

The final selected dataset contained approximately 975,000 examples, which were split into 960,000 for training and 15,000 for validation.
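The two curation stages can be sketched as a pair of filters. This is only an illustration of the selection logic described above, not the authors' pipeline: in the article the scores come from Llama-3.1-8B-Instruct, whereas here `edu_score` and `difficulty` are hypothetical callables that simply read precomputed labels from toy records.

```python
from typing import Callable, Iterable


def curate(
    examples: Iterable[dict],
    edu_score: Callable[[dict], int],    # hypothetical scorer returning 1..5
    difficulty: Callable[[dict], str],   # hypothetical scorer: "Easy" | "Medium" | "Hard"
    min_edu_exclusive: int = 3,
    keep_levels: frozenset = frozenset({"Medium", "Hard"}),
) -> list:
    # Stage 1: keep examples whose educational value is greater than 3.
    stage1 = [ex for ex in examples if edu_score(ex) > min_edu_exclusive]
    # Stage 2: score only the survivors and keep Medium and Hard examples.
    return [ex for ex in stage1 if difficulty(ex) in keep_levels]


# Toy records with made-up, precomputed labels standing in for LLM judgments.
examples = [
    {"id": 1, "edu": 5, "level": "Easy"},
    {"id": 2, "edu": 4, "level": "Medium"},
    {"id": 3, "edu": 3, "level": "Hard"},
    {"id": 4, "edu": 5, "level": "Hard"},
]

curated = curate(
    examples,
    edu_score=lambda ex: ex["edu"],
    difficulty=lambda ex: ex["level"],
)
print([ex["id"] for ex in curated])  # prints [2, 4]
```

Running the difficulty scorer only on the stage 1 survivors mirrors the order in the text, where difficulty is assigned to the 1.3 million examples left after the educational-value filter.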

Targeted Supervised Instruction Fine-Tuning

The self-curated model was produced by fine-tuning Llama-3.1-8B-Instruct on the ~960K curated examples for 4 epochs using Spectrum, a method that accelerates LLM training by selectively targeting layer modules based on their signal-to-noise ratio (SNR) while freezing the rest. Spectrum delivers performance comparable to full fine-tuning with reduced GPU memory usage by prioritizing high-SNR layers; here, the 50% of layers with low SNR were frozen. Comparisons with methods such as QLoRA show Spectrum’s advantages in model quality and VRAM efficiency in distributed environments.
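The selection step can be illustrated with a small sketch that ranks modules by SNR and freezes the lower half. It assumes the per-module SNR scores have already been computed elsewhere; the module names and scores below are made up, and `split_by_snr` is a hypothetical helper, not part of Spectrum itself.

```python
import math


def split_by_snr(snr_by_module: dict, freeze_fraction: float = 0.5):
    """Return (trainable, frozen) module names; the lowest-SNR modules are frozen."""
    ranked = sorted(snr_by_module, key=snr_by_module.get)  # ascending SNR
    n_frozen = math.floor(len(ranked) * freeze_fraction)
    frozen = ranked[:n_frozen]
    trainable = ranked[n_frozen:]
    return trainable, frozen


# Made-up SNR scores for four hypothetical modules.
snr_scores = {
    "layers.0.mlp": 0.8,
    "layers.1.mlp": 2.4,
    "layers.2.mlp": 1.1,
    "layers.3.mlp": 3.0,
}

trainable, frozen = split_by_snr(snr_scores, freeze_fraction=0.5)
print("train:", trainable)  # prints train: ['layers.1.mlp', 'layers.3.mlp']
print("freeze:", frozen)    # prints freeze: ['layers.0.mlp', 'layers.2.mlp']
```

In a PyTorch training script, the frozen list would typically be applied by setting `requires_grad = False` on the parameters of those modules, so that the optimizer only holds state for the trainable half.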

Model Merging

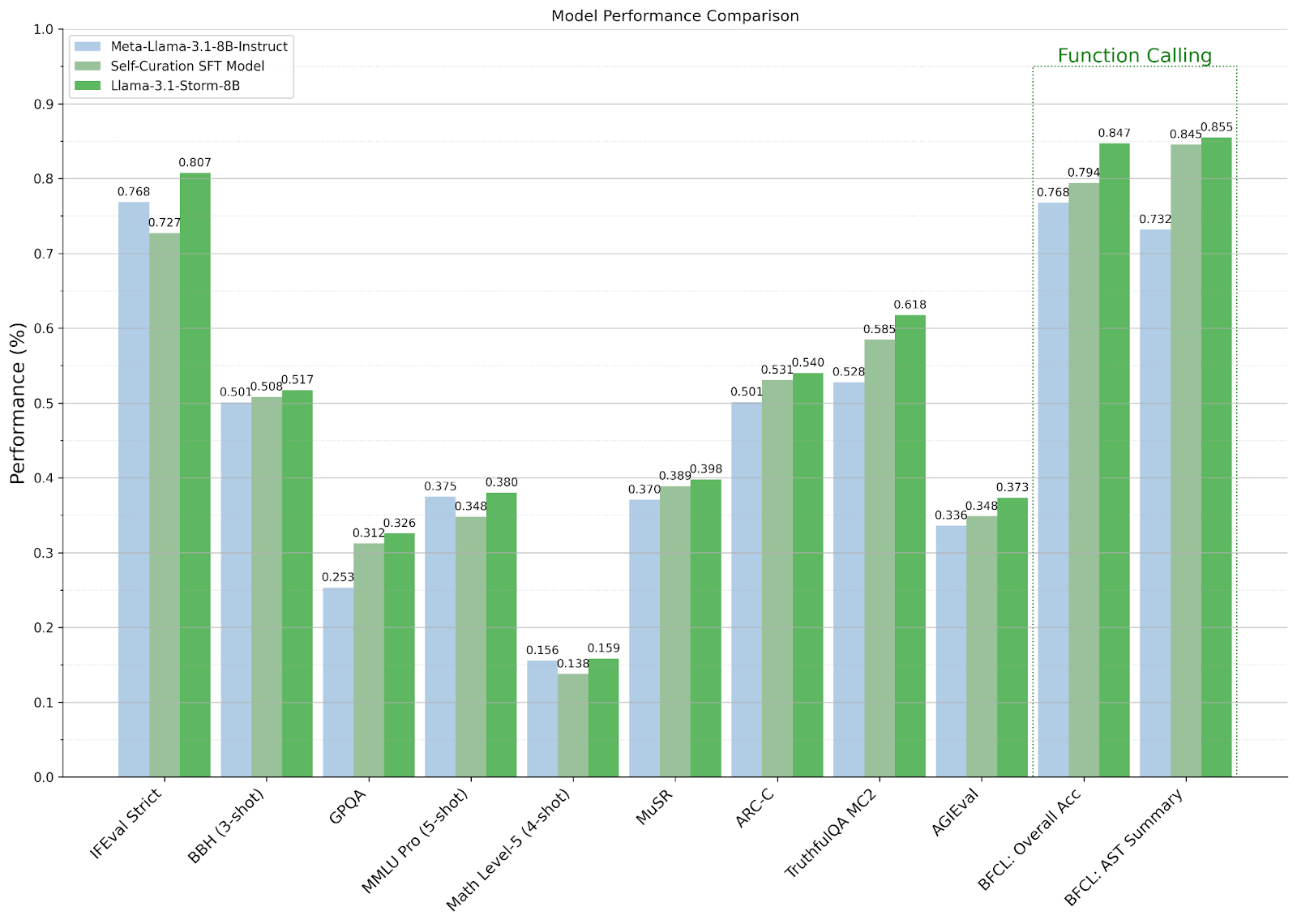

Since model merging has produced some state-of-the-art models, they decided to merge the self-curated, fine-tuned model with the Llama-Spark model, which is a derivative of Llama 3.1 8B Instruct. They used the SLERP method to merge the two models, creating a combined model that captures the essence of both parents through smooth interpolation. Spherical Linear Interpolation (SLERP) ensures a constant rate of change while preserving the geometric properties of the spherical space, allowing the resulting model to retain key features of both parent models. The benchmarks show that the self-curation SFT model performs better than the Llama-Spark model on average, and that the merged model performs better than either parent.
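For intuition, SLERP between two weight vectors can be written in a few lines. The sketch below uses the standard formula on plain Python lists and assumes non-zero inputs; real merging tools apply it tensor by tensor, and the interpolation factor `t` used here is an arbitrary illustration, since the article does not state the value the authors chose.

```python
import math


def slerp(a, b, t, eps=1e-8):
    """Spherical linear interpolation between two non-zero vectors a and b."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    # Angle between the two vectors, with the cosine clamped for numerical safety.
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))
    sin_omega = math.sin(omega)
    if sin_omega < eps:
        # Vectors are (anti)parallel: fall back to ordinary linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    weight_a = math.sin((1 - t) * omega) / sin_omega
    weight_b = math.sin(t * omega) / sin_omega
    return [weight_a * x + weight_b * y for x, y in zip(a, b)]


merged = slerp([1.0, 0.0], [0.0, 1.0], t=0.5)
print([round(v, 4) for v in merged])  # prints [0.7071, 0.7071]
```

The midpoint keeps unit length, whereas plain averaging of the same two vectors would give `[0.5, 0.5]` with a norm of about 0.707. This is the geometric property the paragraph above refers to.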

The impact of self-curation and model merging

As the figure above shows, the self-curation-based SFT strategy outperforms Llama-3.1-8B-Instruct on 7 out of 10 benchmarks, highlighting the importance of selecting high-quality examples. These results also suggest that choosing the right model to merge with can further improve performance across the benchmarks evaluated.

How to use the Llama 3.1 Storm 8B model

We will use the Hugging Face Transformers library to run the Llama 3.1 Storm 8B model. The examples below load the model in bfloat16, the data type used for fine-tuning, which is the recommended setting.

Method 1: Using the Transformers pipeline

Step 1: Install the necessary libraries

    !pip install --upgrade "transformers>=4.43.2" torch==2.3.1 accelerate flash-attn==2.6.3

Step 2: Load the Llama 3.1 Storm 8B model

    import transformers
    import torch

    model_id = "akjindal53244/Llama-3.1-Storm-8B"

    # Build a text-generation pipeline that loads the weights in bfloat16
    pipeline = transformers.pipeline(
        "text-generation",
        model=model_id,
        model_kwargs={"torch_dtype": torch.bfloat16},
        device_map="auto",
    )

Step 3: Create a utility method to create the model input

    def prepare_conversation(user_prompt):
        # Llama-3.1-Storm-8B chat template: a list of role/content messages
        conversation = [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_prompt},
        ]
        return conversation

Step 4: Get the result

    # User query
    user_prompt = "What is the capital of Spain?"
    conversation = prepare_conversation(user_prompt)

    outputs = pipeline(conversation, max_new_tokens=128, do_sample=True, temperature=0.01, top_k=100, top_p=0.95)

    # The last message in the returned conversation is the assistant's reply
    response = outputs[0]["generated_text"][-1]["content"]
    print(f"Llama-3.1-Storm-8B Output: {response}")

Method 2: Using the model, tokenizer, and model.generate API

Step 1: Load the Llama 3.1 Storm 8B model and tokenizer

    import torch
    from transformers import AutoTokenizer, LlamaForCausalLM

    model_id = 'akjindal53244/Llama-3.1-Storm-8B'

    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)

    model = LlamaForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.bfloat16,
        device_map="auto",
        load_in_8bit=False,
        load_in_4bit=False,
        # Colab Free T4 GPU is an old generation GPU and does not support FlashAttention.
        # Enable if using Ampere GPUs or newer such as RTX3090, RTX4090, A100, etc.
        use_flash_attention_2=False,
    )

Step 2: Apply the Llama-3.1-Storm-8B chat template

    def format_prompt(user_query):
        template = """<|begin_of_text|><|start_header_id|>system<|end_header_id|>\n\nYou are a helpful assistant.<|eot_id|><|start_header_id|>user<|end_header_id|>\n\n{}<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\n"""
        return template.format(user_query)

Step 3: Get the output of the model

    # Build final input prompt after applying chat-template
    prompt = format_prompt("What is the capital of France?")

    input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to("cuda")

    generated_ids = model.generate(input_ids, max_new_tokens=128, temperature=0.01, do_sample=True, eos_token_id=tokenizer.eos_token_id)

    # Decode only the newly generated tokens, skipping the prompt
    response = tokenizer.decode(generated_ids[0][input_ids.shape[-1]:], skip_special_tokens=True)
    print(f"Llama-3.1-Storm-8B Output: {response}")

Conclusion

Llama 3.1 Storm 8B represents a significant advancement in the development of efficient and powerful language models. It demonstrates that smaller models can achieve impressive performance through careful data curation, targeted fine-tuning, and model merging, opening up new possibilities for AI research and application development. As the field continues to evolve, we expect to see further improvements and applications of these techniques, potentially democratising access to advanced AI capabilities.


Frequently Asked Questions

Answer: Llama 3.1 Storm 8B is an improved small language model (SLM) with 8 billion parameters, built on top of Meta AI's Llama 3.1 8B Instruct model using self-curation, targeted fine-tuning, and model merging techniques.

Answer: It outperforms Meta's Llama 3.1 8B Instruct and Hermes-3-Llama-3.1-8B on several benchmarks, showing significant improvements in areas such as instruction following, knowledge-driven QA, reasoning, and function calling.

Answer: The model was built using a three-step process: self-curation of the training data, targeted fine-tuning using the Spectrum method, and merging the model with Llama-Spark using the SLERP technique.

Answer: Developers can integrate the model into their projects using popular libraries such as Transformers and vLLM. It is available in several formats (BF16, FP8, GGUF) and can be used for a variety of tasks, including conversational AI and function calling.
