Automated software engineering (ASE) has emerged as a transformative field, integrating artificial intelligence with software development processes to tackle debugging, feature improvement, and maintenance challenges. ASE tools increasingly employ large language models (LLMs) to assist developers, improve efficiency, and cope with the growing complexity of software systems. However, most next-generation tools rely on proprietary, closed-source models, which limits their accessibility and flexibility, particularly for organizations with strict privacy requirements or resource constraints. Despite recent advances, ASE still struggles to deliver scalable, real-world solutions that can dynamically address the nuanced needs of software engineering.

A major limitation of existing approaches is their over-reliance on static data for training. While effective at generating feature-level solutions, models like GPT-4 and Claude 3.5 struggle with tasks that require a deep contextual understanding of project-wide dependencies or the iterative nature of real-world software development. These models are primarily trained on static codebases and fail to capture the dynamic problem-solving workflows developers follow when interacting with complex software systems. The absence of process-level knowledge hinders their ability to locate faults effectively and propose meaningful solutions. Additionally, closed-source models introduce data privacy concerns, especially for organizations working with confidential or proprietary codebases.

Researchers at Alibaba Group's Tongyi Lab developed the Lingma SWE-GPT series, a set of open-source LLMs optimized for software improvement. The series includes two models, Lingma SWE-GPT 7B and 72B, designed to simulate real-world software development processes. Unlike their closed-source counterparts, these models are accessible, customizable, and designed to capture the dynamic aspects of software engineering. By integrating insights from real-world code submission activities and iterative problem-solving workflows, Lingma SWE-GPT aims to close the performance gap between open- and closed-source models while maintaining accessibility.

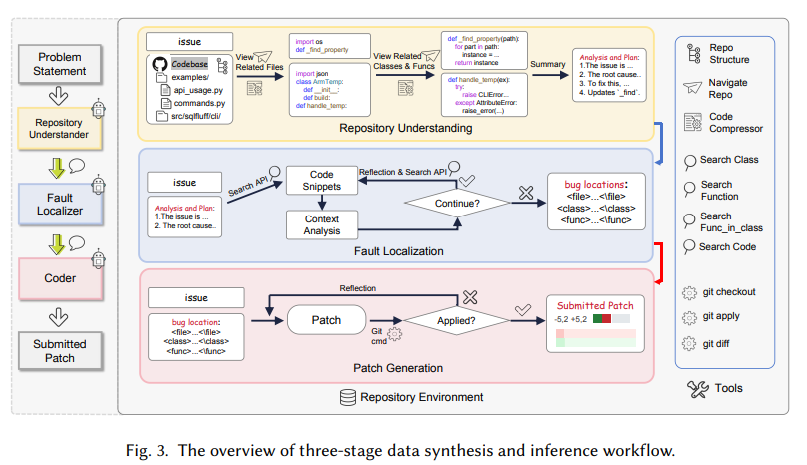

Lingma SWE-GPT follows a structured three-stage methodology: repository understanding, fault localization, and patch generation. In the first stage, the model analyzes a project's repository hierarchy, extracting key structural information from directories, classes, and functions to identify relevant files. During the fault localization stage, the model uses iterative reasoning and specialized APIs to pinpoint problematic code fragments. Finally, the patch generation stage focuses on creating and validating fixes, using git operations to ensure code integrity. The training process emphasizes process-oriented data synthesis, employing rejection sampling and curriculum learning to iteratively refine the model and handle progressively more complex tasks.
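To make the three stages concrete, here is a minimal Python sketch on a toy in-memory repository. It is an illustration under loud assumptions, not the Lingma SWE-GPT implementation: the repository contents, the issue text, and all function names are hypothetical; keyword overlap stands in for the model's iterative reasoning and specialized APIs; and `difflib` plus a syntax check stands in for the git operations the article mentions.

```python
import ast
import difflib
import re

# Hypothetical toy repository (path -> source text) and issue description.
REPO = {
    "shop/cart.py": (
        "class Cart:\n"
        "    def total(self, prices):\n"
        "        return sum(prices) - 1\n"
    ),
    "shop/users.py": (
        "class User:\n"
        "    def greet(self, name):\n"
        "        return 'hi ' + name\n"
    ),
}
ISSUE = "Cart total is off by one when summing prices"


def tokens(text):
    """Lowercase alphabetic words in a string, as a set."""
    return set(re.findall(r"[a-z]+", text.lower()))


def repo_skeleton(repo):
    """Stage 1: list the classes and functions each file defines."""
    skeleton = {}
    for path, src in repo.items():
        skeleton[path] = [
            node.name
            for node in ast.walk(ast.parse(src))
            if isinstance(node, (ast.ClassDef, ast.FunctionDef))
        ]
    return skeleton


def localize(repo, issue):
    """Stage 2 (stand-in): pick the file and function whose names
    share the most words with the issue text."""
    words = tokens(issue)
    skeleton = repo_skeleton(repo)

    def score(path):
        return len(words & tokens(path + " " + " ".join(skeleton[path])))

    best_file = max(skeleton, key=score)
    funcs = [
        node
        for node in ast.walk(ast.parse(repo[best_file]))
        if isinstance(node, ast.FunctionDef)
    ]
    best_func = max(funcs, key=lambda f: len(words & tokens(f.name)))
    return best_file, best_func.name, best_func.lineno


def make_patch(path, old_src, new_src):
    """Stage 3 (stand-in): check that the candidate parses, then emit a unified diff."""
    ast.parse(new_src)  # raises SyntaxError if the candidate fix is not valid Python
    diff = difflib.unified_diff(
        old_src.splitlines(keepends=True),
        new_src.splitlines(keepends=True),
        fromfile="a/" + path,
        tofile="b/" + path,
    )
    return "".join(diff)


path, func, line = localize(REPO, ISSUE)
# In a real system the candidate fix would come from the model; here it is hard-coded.
candidate = REPO[path].replace("sum(prices) - 1", "sum(prices)")
patch = make_patch(path, REPO[path], candidate)
print(path, func, line)
print(patch)
```

The point of the sketch is the data flow: structural information from stage 1 narrows the search in stage 2, and stage 3 only emits a patch that passes a validity check.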

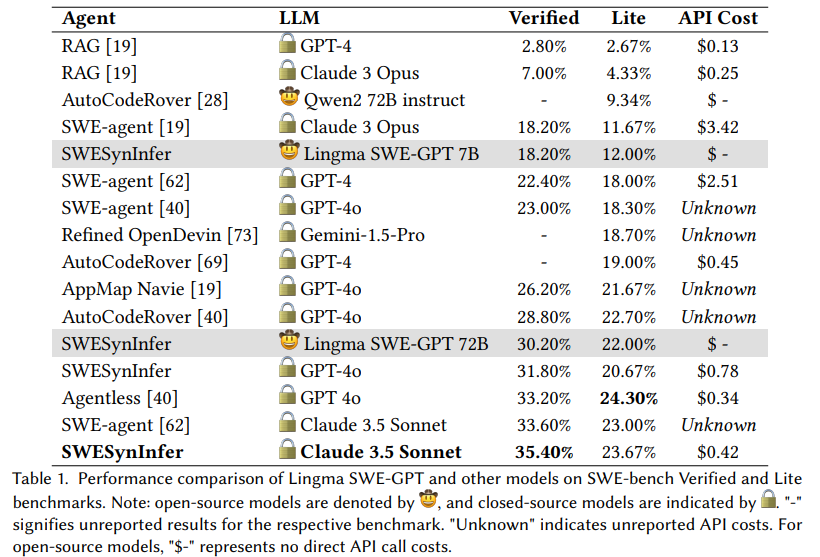

Performance evaluations demonstrate the effectiveness of Lingma SWE-GPT on benchmarks such as SWE-bench Verified and SWE-bench Lite, which are drawn from real-world GitHub issues. The Lingma SWE-GPT 72B model solved 30.20% of the problems in the SWE-bench Verified dataset, a significant achievement for an open-source model. This is close to GPT-4o, which solved 31.80% of the problems, and represents a 22.76% improvement over the open-source Llama 3.1 405B model. Meanwhile, the smaller Lingma SWE-GPT 7B achieved a success rate of 18.20% on SWE-bench Verified, surpassing the 17.20% of Llama 3.1 70B. These results highlight the potential of open-source models to close performance gaps while remaining cost-effective.
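For a sense of scale, the percentages above can be converted into issue counts. The short sketch below assumes the 500-task size of SWE-bench Verified cited elsewhere in this article; the variable names are illustrative only.

```python
# Resolve rates on SWE-bench Verified as reported in the article (percent).
RESOLVE_RATES = {
    "GPT-4o": 31.80,
    "Lingma SWE-GPT 72B": 30.20,
    "Lingma SWE-GPT 7B": 18.20,
    "Llama 3.1 70B": 17.20,
}
TOTAL_TASKS = 500  # size of SWE-bench Verified, as cited in the article

# Convert each percentage into a whole number of resolved issues.
resolved = {
    model: round(TOTAL_TASKS * rate / 100)
    for model, rate in RESOLVE_RATES.items()
}

for model, count in resolved.items():
    print(f"{model}: {count} of {TOTAL_TASKS} issues")

# Gap between the 72B model and GPT-4o, in issues.
gap_72b = resolved["GPT-4o"] - resolved["Lingma SWE-GPT 72B"]
print("Gap to GPT-4o:", gap_72b, "issues")
```

Read this way, the 1.6-point gap between the 72B model and GPT-4o corresponds to a handful of issues out of 500.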

SWE-bench evaluations also revealed the robustness of Lingma SWE-GPT across multiple repositories. For example, in repositories such as Django and Matplotlib, the 72B model consistently outperformed its competitors, including major open- and closed-source models. Furthermore, the smaller 7B variant proved efficient in resource-constrained scenarios, demonstrating the scalability of the Lingma SWE-GPT architecture. The cost advantage of open-source models further strengthens their appeal, as they eliminate the high API costs associated with closed-source alternatives. For example, solving the 500 tasks in the SWE-bench Verified dataset using GPT-4o would cost approximately $390, while Lingma SWE-GPT incurs no direct API costs.
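The cost figure can be broken down per task and per resolved issue. This is simple arithmetic on the numbers quoted in this article (about $390 for 500 tasks, and GPT-4o's 31.80% resolve rate); the variable names are illustrative.

```python
TASKS = 500                   # tasks in SWE-bench Verified, per the article
GPT4O_TOTAL_COST_USD = 390.0  # approximate API cost quoted in the article
GPT4O_RESOLVE_RATE = 31.80    # percent of tasks GPT-4o resolved, per the article

# Average API spend per attempted task.
cost_per_task = GPT4O_TOTAL_COST_USD / TASKS

# Number of resolved issues, and API spend per resolved issue.
resolved = round(TASKS * GPT4O_RESOLVE_RATE / 100)
cost_per_resolved = GPT4O_TOTAL_COST_USD / resolved

print(f"Cost per task: ${cost_per_task:.2f}")
print(f"Cost per resolved issue: ${cost_per_resolved:.2f}")
```

Because unresolved attempts are also billed, the cost per resolved issue is roughly three times the cost per attempted task.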

The research also highlights several key findings that illustrate the broader implications of the development of Lingma SWE-GPT:

- Open Source Accessibility: Lingma SWE-GPT models democratize the advanced capabilities of ASE, making them accessible to various developers and organizations.

- Performance Parity: The 72B model achieves performance comparable to state-of-the-art closed-source models, solving 30.20% of the issues in SWE-bench Verified.

- Scalability: The 7B model demonstrates strong performance in constrained environments and offers a cost-effective solution for organizations with limited resources.

- Dynamic Understanding: By incorporating process-oriented training, Lingma SWE-GPT captures the iterative and interactive nature of software development, closing the gaps left by static data training.

- Improved Fault Localization: The model's ability to identify specific fault locations using iterative reasoning and specialized APIs supports high accuracy and efficiency.
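The process-oriented training mentioned in the findings above relies, according to the article, on rejection sampling and curriculum learning. The sketch below shows the general shape of those two ideas on made-up data. Everything in it is an assumption for illustration: the task list, the `difficulty` scores, and the `sample_trajectory` stand-in, which replaces a real model rollout with a random draw. It does not reproduce the researchers' training pipeline.

```python
import random

# Hypothetical tasks with made-up difficulty scores in (0, 1).
TASKS = [
    {"id": "fix-typo", "difficulty": 0.2},
    {"id": "refactor-module", "difficulty": 0.8},
    {"id": "off-by-one", "difficulty": 0.5},
]


def sample_trajectory(task, rng):
    """Stand-in for a model rollout: harder tasks succeed less often."""
    solved = rng.random() > task["difficulty"]
    return {"task": task["id"], "solved": solved}


def rejection_sample(tasks, n_samples, rng):
    """Draw several trajectories per task and keep only the successful ones."""
    kept = []
    for task in tasks:
        for _ in range(n_samples):
            trajectory = sample_trajectory(task, rng)
            if trajectory["solved"]:
                kept.append(trajectory)
    return kept


def curriculum(tasks):
    """Order tasks from easiest to hardest for staged training."""
    return sorted(tasks, key=lambda t: t["difficulty"])


rng = random.Random(0)  # fixed seed so the run is repeatable
ordered = curriculum(TASKS)
kept = rejection_sample(ordered, n_samples=4, rng=rng)
print([t["id"] for t in ordered])
print(len(kept), "trajectories kept for training")
```

In a real setup the acceptance test would check something meaningful, such as whether a generated patch resolves the issue, rather than a random draw.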

In conclusion, Lingma SWE-GPT represents an important step forward in ASE, addressing the critical limitations of static data training and closed-source dependency. Its methodology and competitive performance make it a compelling alternative for organizations seeking scalable, open-source solutions. By combining process-oriented insights with high accessibility, Lingma SWE-GPT paves the way for broader adoption of AI-assisted tools in software development, making advanced capabilities more inclusive and cost-effective.

Check out the Paper. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has more than 2 million monthly visits, which illustrates its popularity among the public.
