Today, building a large-scale dataset is usually a prerequisite for solving a task with deep learning. Sometimes, however, the task is so niche that it would be too expensive, or even impossible, to build a dataset large enough to train a complete model from scratch. Do we really need to train a model from scratch in every case?

Let’s imagine that we would like to detect a certain animal, say an otter, in images. First, we need to collect many images of otters and build a training dataset. Next, we need to train a model on those images. Now imagine that we want our model to learn to detect koalas as well. What do we do? Once again, we collect many images of koalas and build a dataset with them. Do we need to retrain our model from scratch on the combined dataset of otters and koalas? We already have a model trained on otter images, so why waste it? It has already learned features for detecting animals that might also be useful for detecting koalas. Can we use this pretrained model to make things faster and simpler?

Yes, we can, and that’s called transfer learning. It is a machine learning technique in which a model trained on one task is used as the starting point for another, related task. Rather than starting from scratch, we reuse what the model has already learned, which leads to faster, more efficient training and, in most cases, better performance on the new task.

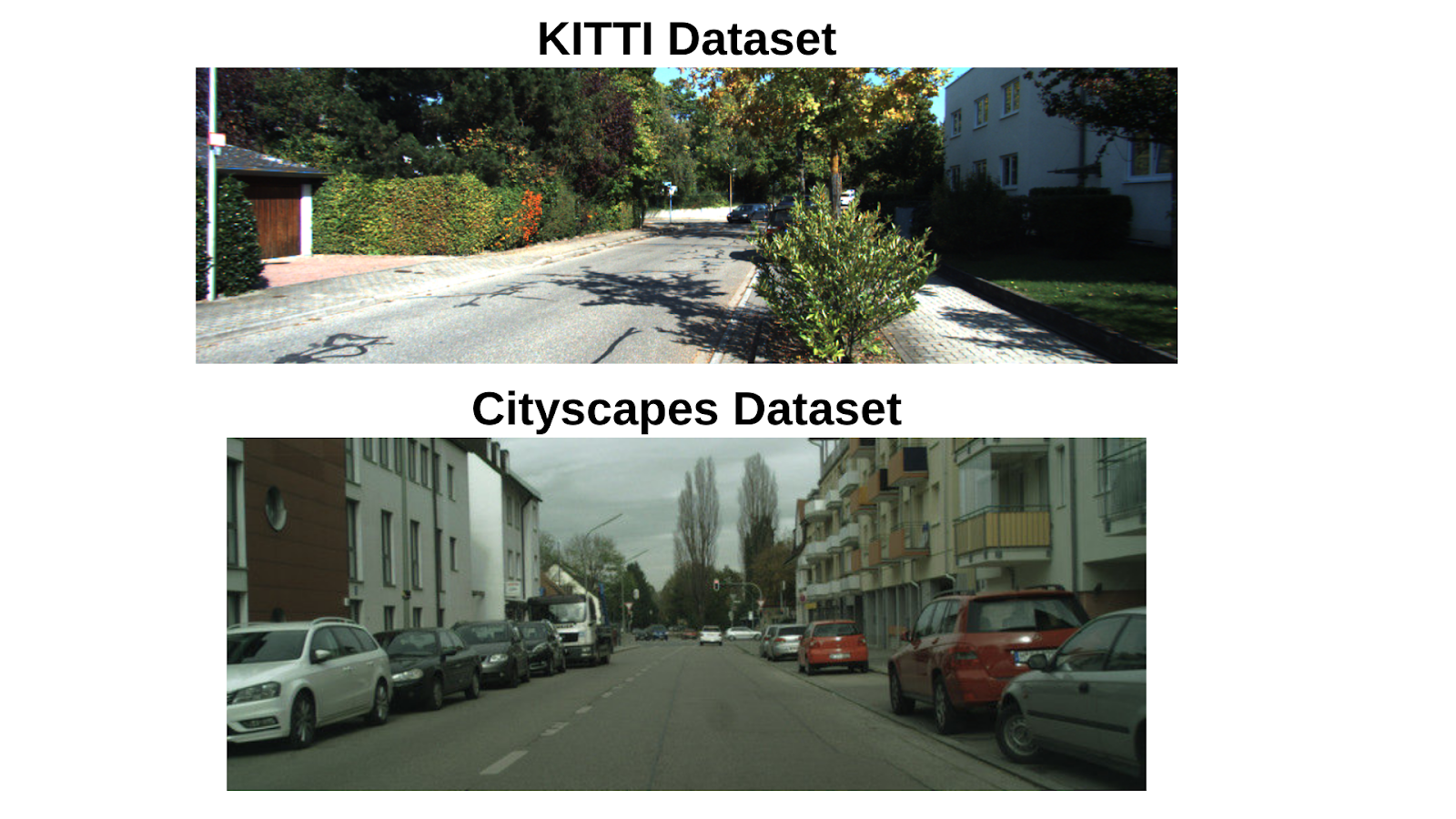

So all we have to do is find an existing model and use it as a starting point for our new training. Is it really that simple, though? What happens if we switch to a more complicated problem, such as segmenting objects on the road for autonomous driving? We can’t just take a pretrained model and use it as it is. If the model was trained on urban roads, it may not perform well when applied to rural roads. Just look at the difference!

One of the biggest, if not the biggest, challenges in transfer learning is adapting the model to the difference between the source and target datasets. We use the term domain gap to refer to a significant difference between the distributions of features in the source and target datasets. This difference causes problems for the pretrained model, because it makes it difficult to transfer knowledge from the source domain to the target domain. Therefore, identifying and reducing domain gaps is crucial when we plan to use transfer learning. Domain gaps can occur in any field, but they are particularly important in safety-critical fields, where the cost of failure is too high.
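To make "a difference between feature distributions" a bit more concrete, here is a minimal sketch that puts a number on it using the Maximum Mean Discrepancy (MMD) with a Gaussian kernel. MMD is our choice of measure, not something the article prescribes. The sketch assumes you already have one feature vector per image for each domain (for example, pooled activations from the pretrained model); the toy data and the kernel width `gamma` are illustrative assumptions.

```python
import math
import random
from typing import List, Sequence

Vector = Sequence[float]


def rbf_kernel(a: Vector, b: Vector, gamma: float = 1.0) -> float:
    """Gaussian (RBF) kernel: exp(-gamma * ||a - b||^2)."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-gamma * sq_dist)


def mmd_squared(source: List[Vector], target: List[Vector], gamma: float = 1.0) -> float:
    """Biased estimate of the squared Maximum Mean Discrepancy (MMD^2).

    Close to 0 when both samples come from the same distribution; grows as
    the feature distributions of the two domains drift apart.
    """
    def mean_kernel(xs: List[Vector], ys: List[Vector]) -> float:
        total = sum(rbf_kernel(x, y, gamma) for x in xs for y in ys)
        return total / (len(xs) * len(ys))

    return (mean_kernel(source, source)
            + mean_kernel(target, target)
            - 2.0 * mean_kernel(source, target))


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy 2-D "features"; the last set is shifted to mimic a domain gap.
    source = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(200)]
    same_domain = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(200)]
    shifted = [[rng.gauss(2.0, 1.0), rng.gauss(2.0, 1.0)] for _ in range(200)]
    print(f"MMD^2, same domain:    {mmd_squared(source, same_domain):.4f}")
    print(f"MMD^2, shifted domain: {mmd_squared(source, shifted):.4f}")
```

The pairwise loops make this quadratic in the number of samples, which is fine for a few hundred feature vectors; for whole datasets you would subsample or use a vectorized library.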

However, identifying domain gaps is not a simple task. We need to carry out several assessments to identify the domain gap between datasets:

- Analyze statistical properties, such as class and feature distributions, to identify any significant differences.

- Visualize the data in a low-dimensional space, preferably the model’s latent space, to see whether the two datasets form distinct clusters, and compare their distributions.

- Evaluate the pretrained model on the target dataset to assess its initial performance. If the model performs poorly, that could indicate a domain gap.

- Perform ablation studies by removing certain components from the pretrained model. This way, we can learn which components transfer and which do not.

- Apply domain adaptation techniques, such as domain-adversarial training or fine-tuning.
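The first assessment, comparing statistical properties, can be sketched for segmentation data by comparing how often each class appears in the source and target label maps. The sketch below uses the Jensen-Shannon divergence for that comparison; this metric, the class ids, and the toy pixel counts are assumptions made for illustration, not part of the article.

```python
import math
from collections import Counter
from typing import Dict, Iterable, List


def class_distribution(labels: Iterable[int], classes: List[int]) -> Dict[int, float]:
    """Fraction of labelled pixels that belong to each class id in `classes`.

    Pixels whose id is not in `classes` (e.g. an "ignore" label) are skipped.
    """
    counts = Counter(labels)
    total = sum(counts[c] for c in classes)
    if total == 0:
        raise ValueError("no pixels with the given class ids")
    return {c: counts[c] / total for c in classes}


def js_divergence(p: Dict[int, float], q: Dict[int, float]) -> float:
    """Jensen-Shannon divergence in bits: 0 for identical, 1 for disjoint distributions."""
    def kl(a: Dict[int, float], b: Dict[int, float]) -> float:
        # Terms with a[k] == 0 contribute nothing; b[k] > 0 wherever a[k] > 0.
        return sum(a[k] * math.log2(a[k] / b[k]) for k in a if a[k] > 0)

    m = {k: 0.5 * (p[k] + q[k]) for k in p}
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


if __name__ == "__main__":
    classes = [0, 1, 2, 3]  # hypothetical ids: road, sky, vegetation, car
    # Made-up flattened label maps standing in for a city and a rural dataset.
    source_pixels = [0] * 50 + [1] * 10 + [2] * 15 + [3] * 25
    target_pixels = [0] * 30 + [1] * 25 + [2] * 40 + [3] * 5
    p = class_distribution(source_pixels, classes)
    q = class_distribution(target_pixels, classes)
    for c in classes:
        print(f"class {c}: source {p[c]:.2f}, target {q[c]:.2f}")
    print(f"JS divergence: {js_divergence(p, q):.3f} bits")
```

Printing the per-class fractions alongside the single divergence value helps, because the overall number tells you that the datasets differ while the per-class breakdown hints at where.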

These all sound good, but they are labor-intensive and time-consuming. Let’s walk through a concrete example that should make things clearer.

Suppose we have an image segmentation model, DeepLabV3+, trained on the Cityscapes dataset, which contains data from 50 European cities. For simplicity, let’s say we work with a subset of Cityscapes covering two cities, Aachen and Zurich. Next, we want to train our model on the KITTI dataset, which was captured while driving around a mid-sized city, rural areas, and highways. We must identify the domain gap between these datasets to adapt our model correctly and eliminate potential errors. How can we do that?

First, we need to find out whether we have a domain gap at all. To do that, we can run the pretrained model on both datasets: prepare both datasets for evaluation, measure the model’s error on each, and compare the results. If the error on the target dataset is much higher than on the source dataset, that indicates a domain gap we need to fix.
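The comparison step can be sketched as follows. The article speaks of comparing the error on each dataset; for segmentation, a common way to measure this is mean intersection-over-union (mIoU, where higher is better), so this sketch compares mIoU scores instead. The flattened label lists, the ignore id 255, and the `max_drop` tolerance of 0.10 are assumptions for illustration, and `has_domain_gap` is a hypothetical helper.

```python
from typing import Sequence


def mean_iou(preds: Sequence[int], labels: Sequence[int], num_classes: int,
             ignore_index: int = 255) -> float:
    """Mean IoU over the classes that occur in either the predictions or the labels."""
    inter = [0] * num_classes
    union = [0] * num_classes
    for p, t in zip(preds, labels):
        if t == ignore_index:  # unlabelled pixel, not scored
            continue
        if p == t:
            inter[t] += 1
            union[t] += 1
        else:
            # A wrong pixel belongs to the union of both the predicted and true class.
            union[p] += 1
            union[t] += 1
    ious = [i / u for i, u in zip(inter, union) if u > 0]
    return sum(ious) / len(ious) if ious else 0.0


def has_domain_gap(source_score: float, target_score: float, max_drop: float = 0.10) -> bool:
    """Flag a gap when the target score falls more than `max_drop` below the source score."""
    return (source_score - target_score) > max_drop


if __name__ == "__main__":
    # Tiny made-up flattened label maps; in practice these come from running
    # the pretrained model over each evaluation set.
    src_labels = [0, 0, 1, 1, 2, 2]
    src_preds = [0, 0, 1, 1, 2, 1]
    tgt_labels = [0, 0, 1, 1, 2, 2]
    tgt_preds = [0, 1, 2, 1, 0, 1]
    src = mean_iou(src_preds, src_labels, num_classes=3)
    tgt = mean_iou(tgt_preds, tgt_labels, num_classes=3)
    print(f"source mIoU={src:.3f}, target mIoU={tgt:.3f}, gap={has_domain_gap(src, tgt)}")
```

A fixed tolerance is the simplest possible rule; what counts as "too high" a drop depends on the application, so treat `max_drop` as a knob rather than a standard value.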

Now that we know we have a domain gap, how can we identify its root cause? We can start by finding the samples with the highest loss and comparing them to find their common characteristics. These could be color variations, differences in roadside objects or cars, the area covered by the sky, and so on. We then try to correct each of these differences, normalizing it so that it matches the characteristics of the source dataset, and re-evaluate our model to see whether the suspected cause was actually the root cause of the domain gap.
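A rough manual version of this root-cause hunt is sketched below: rank target images by loss, compare simple per-image attributes of the hardest images against the rest, and re-normalize a suspect attribute (here, one color channel) to source statistics. The attribute names such as `sky_fraction` and `mean_brightness` are hypothetical and assumed to be precomputed per image, and the mean/std matching is just one simple way to "normalize" a color difference, not Tensorleap’s method.

```python
from statistics import mean, pstdev
from typing import Dict, List, Sequence, Tuple

Sample = Dict[str, float]


def split_by_loss(samples: List[Sample], k: int) -> Tuple[List[Sample], List[Sample]]:
    """Split samples into the k highest-loss ("hard") ones and the rest."""
    if not 0 < k < len(samples):
        raise ValueError("k must be between 1 and len(samples) - 1")
    ranked = sorted(samples, key=lambda s: s["loss"], reverse=True)
    return ranked[:k], ranked[k:]


def attribute_shift(samples: List[Sample], k: int, attributes: Sequence[str]) -> Dict[str, float]:
    """Mean of each attribute over the hard samples minus its mean over the rest."""
    hard, rest = split_by_loss(samples, k)
    return {a: mean(s[a] for s in hard) - mean(s[a] for s in rest) for a in attributes}


def match_mean_std(values: Sequence[float], ref_mean: float, ref_std: float) -> List[float]:
    """Shift and rescale one channel so its mean/std match reference (source) statistics."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [ref_mean] * len(values)
    return [(v - mu) / sigma * ref_std + ref_mean for v in values]


if __name__ == "__main__":
    # Made-up per-image losses and precomputed attributes for target images.
    samples = [
        {"loss": 0.91, "sky_fraction": 0.42, "mean_brightness": 0.71},
        {"loss": 0.85, "sky_fraction": 0.38, "mean_brightness": 0.68},
        {"loss": 0.30, "sky_fraction": 0.15, "mean_brightness": 0.52},
        {"loss": 0.22, "sky_fraction": 0.12, "mean_brightness": 0.49},
        {"loss": 0.18, "sky_fraction": 0.10, "mean_brightness": 0.55},
    ]
    print(attribute_shift(samples, k=2, attributes=["sky_fraction", "mean_brightness"]))
    # Re-normalize a (toy) red channel of a target image to source statistics.
    print(match_mean_std([0.8, 0.7, 0.9, 0.6], ref_mean=0.5, ref_std=0.1))
```

Attributes with a large shift are only candidates. The check that matters is the one the text describes: apply the normalization, re-run the evaluation, and see whether the gap actually shrinks.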

What if we had a tool that could do all of this for us automatically, so we could focus on the real deal: solving the problem at hand? Fortunately, someone thought of that and came up with Tensorleap.

Tensorleap is a platform for improving the development of solutions based on deep neural networks. It offers an advanced set of tools that help data scientists refine and explain their models, providing valuable information about them and identifying their strengths and weaknesses. On top of that, its tools for error analysis, unit testing, and dataset architecture are extremely helpful for finding the root cause of a problem and making the final model effective and reliable.

You can read this blog post to learn how it can be used to solve the domain gap problem between the Cityscapes and KITTI datasets. In that example, Tensorleap’s automatic preparation of an optimal latent space, along with its analytical tools, dashboards, and insights, helped quickly detect and reduce three domain gaps, significantly improving model performance. Identifying and fixing those domain gaps would have taken months of manual labor, but with Tensorleap it can be done in a matter of hours.

Note: Thanks to the Tensorleap team for the thought leadership/educational article above. Tensorleap has endorsed this Content.

Ekrem Çetinkaya received his B.Sc. in 2018 and M.Sc. in 2019 from Ozyegin University, Istanbul, Türkiye. He wrote his M.Sc. thesis on image denoising using deep convolutional networks. He is currently pursuing a Ph.D. at the University of Klagenfurt, Austria, and working as a researcher on the ATHENA project. His research interests include deep learning, computer vision, and multimedia networks.
