It’s no secret that OpenAI’s ChatGPT has some amazing capabilities. For example, the chatbot can write poetry that resembles Shakespeare’s sonnets or debug code for a computer program. These abilities are made possible by the massive machine learning model on which ChatGPT is based. Researchers have found that when these kinds of models get big enough, extraordinary capabilities emerge.

But larger models also require more time and money to train. The training process involves showing hundreds of billions of examples to a model. Collecting so much data is a complicated process in itself. Then come the monetary and environmental costs of running many powerful computers for days or weeks to train a model that can have billions of parameters.

“It has been estimated that training models at the scale that ChatGPT is supposed to run on could cost millions of dollars, just for a single training run. Can we improve the efficiency of these training methods, so that we can still get good models in less time and for less money? We propose to do this by taking advantage of smaller language models that have been previously trained,” says Yoon Kim, an assistant professor in MIT’s Department of Electrical Engineering and Computer Science and a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL).

Instead of discarding an older version of a model, Kim and his collaborators use it as the basis for building a new model. Using machine learning, their method learns to “grow” a larger model from a smaller model in a way that encodes the knowledge that the smaller model has already acquired. This allows for faster training of the larger model.

Their technique saves about 50 percent of the computational cost required to train a large model, compared to methods that train a new model from scratch. In addition, models trained with the MIT method performed as well as or better than models trained with other techniques that also use smaller models to allow faster training of larger models.

Reducing the time it takes to train huge models could help researchers move faster with less expense, while reducing carbon emissions generated during the training process. It could also allow smaller research groups to work with these massive models, potentially opening the door to many new breakthroughs.

“As we look to democratize these types of technologies, it will become more important to make training faster and less expensive,” says Kim, senior author of a paper about this technique.

Kim and his graduate student Lucas Torroba Hennigen wrote the paper with lead author Peihao Wang, a graduate student at the University of Texas at Austin, as well as others at the MIT-IBM Watson AI Lab and Columbia University. The research will be presented at the International Conference on Learning Representations.

The bigger the better

Large language models like GPT-3, which is the core of ChatGPT, are built using a neural network architecture called a transformer. A neural network, loosely based on the human brain, is made up of layers of interconnected nodes, or “neurons.” Each neuron contains parameters, which are variables learned during the training process that the neuron uses to process data.

Transformer architectures are unique in that as these types of neural network models grow larger, they achieve much better results.

“This has led to an arms race of companies trying to train bigger and bigger transformers on bigger and bigger data sets. More than other architectures, it seems that transformer networks get much better with scaling. We’re just not exactly sure why that is,” says Kim.

These models typically have hundreds of millions or billions of parameters that can be learned. Training all of these parameters from scratch is expensive, so the researchers are looking to speed up the process.

One effective technique is known as model growth. Using the model growth method, researchers can increase the size of a transformer by copying neurons, or even entire layers, from an earlier version of the network, and then stacking them on top. They can widen a network by adding new neurons to a layer or deepen it by adding additional layers of neurons.
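The copy-based form of model growth described above can be sketched in a few lines of Python. This is only a toy illustration, not the researchers' code: the network is assumed to be a plain list of weight matrices, and the names `grow_depth` and `grow_width` are hypothetical. A real width expansion would also have to widen the inputs of the following layer, which is left out here for brevity.

```python
import copy

def grow_depth(layers):
    """Deepen a network by stacking a copy of its layers on top of the originals."""
    return layers + copy.deepcopy(layers)

def grow_width(weight, new_out):
    """Widen one layer by copying existing neurons (rows) cyclically."""
    old_out = len(weight)
    return [list(weight[i % old_out]) for i in range(new_out)]

# Hypothetical toy network: two layers, each with 2 neurons taking 2 inputs.
small = [
    [[0.1, 0.2], [0.3, 0.4]],
    [[0.5, 0.6], [0.7, 0.8]],
]

deeper = grow_depth(small)               # 4 layers: the originals plus their copies
wider_layer0 = grow_width(small[0], 4)   # 4 neurons: rows 0, 1, 0, 1 of the original

print(len(deeper))
print(wider_layer0)
```

In this sketch every new neuron or layer starts out as an exact duplicate of an existing one, so the larger network begins training with the smaller network's knowledge rather than with random values.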

Unlike previous approaches to model growth, the parameters associated with the new neurons in the expanded transformer are not just copies of the parameters in the smaller network, Kim explains. Rather, they are learned combinations of the parameters of the smaller model.

Learning to grow

Kim and his collaborators use machine learning to learn a linear mapping of the parameters of the smaller model. This linear map is a mathematical operation that transforms a set of input values, in this case the parameters of the smaller model, into a set of output values, in this case the parameters of the larger model.
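To make the idea of a linear map concrete, here is a minimal sketch that treats the smaller model's parameters as a short list of numbers and multiplies it by a matrix to produce a longer list. The function name `apply_linear_map` and all numeric values are assumptions chosen for illustration. In the actual method the matrix entries would be learned from data, whereas here they are simply written out by hand.

```python
def apply_linear_map(M, theta_small):
    """Map small-model parameters to large-model parameters: theta_large = M @ theta_small."""
    return [sum(m * t for m, t in zip(row, theta_small)) for row in M]

theta_small = [1.0, 2.0]   # hypothetical parameters of the smaller model

# Plain copying is one special case of a linear map: each row picks one parameter.
M_copy = [
    [1, 0],
    [0, 1],
    [1, 0],
    [0, 1],
]

# A more general map mixes parameters. These weights are made up for illustration.
M_mixed = [
    [1, 0],
    [0, 1],
    [0.5, 0.5],
    [0.25, 0.75],
]

theta_copy = apply_linear_map(M_copy, theta_small)
theta_mixed = apply_linear_map(M_mixed, theta_small)

print(theta_copy)
print(theta_mixed)
```

The first matrix reproduces copy-based growth, while the second shows how new parameters can be weighted combinations of the old ones.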

Their method, which they call the learned linear growth operator (LiGO), learns to expand the width and depth of a larger network from the parameters of a smaller network in a data-driven way.

But the smaller model can itself be quite large. It might have a hundred million parameters, and researchers might want to grow it into a model with a billion parameters. So the LiGO technique splits the linear map into smaller parts that a machine learning algorithm can handle.
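A rough calculation shows why the map has to be broken up. The sketch below counts the entries of a single dense map between the two parameter counts mentioned above, then shows one possible way to split a map into smaller parts: growing a weight matrix `W` by multiplying it with two small factor matrices, `B` and `A`. The article does not spell out the exact decomposition, so this particular factorization, the function names, and the toy values are all assumptions for illustration.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]

def transpose(X):
    """Swap rows and columns."""
    return [list(col) for col in zip(*X)]

def grow_weight(B, W, A):
    """Grow a d1 x d1 matrix W to d2 x d2 as B @ W @ A^T, using two small d2 x d1 factors."""
    return matmul(matmul(B, W), transpose(A))

# Figures from the text: about 100 million parameters grown to about 1 billion.
n_small = 100_000_000
n_large = 1_000_000_000
full_map_entries = n_small * n_large   # entries in one dense map between the two

# Toy layer: grow a 2 x 2 weight matrix to 3 x 3.
d1, d2 = 2, 3
dense_entries = (d1 * d1) * (d2 * d2)  # dense map acting on the flattened layer
factor_entries = 2 * d1 * d2           # entries in the two small factors combined

W = [[1, 2], [3, 4]]
B = [[1, 0], [0, 1], [1, 0]]           # hand-picked factors, not learned values
A = [[1, 0], [0, 1], [1, 0]]
grown = grow_weight(B, W, A)

print(full_map_entries, dense_entries, factor_entries)
print(grown)
```

Even in this tiny case the two factors need 12 entries instead of 36, and the gap widens rapidly as the layers get larger.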

LiGO also expands width and depth simultaneously, making it more efficient than other methods. A user can adjust how wide and deep they want the larger model to be when they input the smaller model and its parameters, Kim explains.

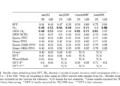

When they compared their technique to the process of training a new model from scratch, as well as to other model growth methods, it was faster than all the baselines. Their method saves about 50 percent of the computational cost required to train both vision and language models, while often improving performance.

The researchers also found that they could use LiGO to speed up transformer training even when they didn’t have access to a smaller pretrained model.

“I was surprised at how well all the methods, including ours, performed compared to the random-initialization, train-from-scratch baselines,” Kim says.

In the future, Kim and his collaborators hope to apply LiGO to even larger models.

The work was funded, in part, by the MIT-IBM Watson AI Lab, Amazon, the IBM Research AI Hardware Center, the Center for Computational Innovation at Rensselaer Polytechnic Institute, and the U.S. Army Research Office.