Theorem proving in mathematics faces mounting challenges as proofs grow more complex. Formal systems such as Lean, Isabelle, and Coq offer computer-verifiable proofs, but writing them demands substantial human effort. Large language models (LLMs) show promise for solving high school-level mathematical problems with proof assistants, but their performance is still held back by data scarcity. Writing in formal languages requires considerable expertise, so corpora remain small. Unlike conventional programming languages, formal proof languages contain hidden intermediate information (such as the proof state after each tactic), so raw source code alone is unsuitable for training. This scarcity persists even though valuable human-written corpora exist. Autoformalization efforts, while useful, cannot fully substitute for human-written data in quality and diversity.
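
As a minimal illustration of that hidden information (a toy Lean 4 example of our own, not taken from the paper), the source file below records only the two tactics. The goals shown in the comments exist only while Lean elaborates the proof, which is why extraction tools have to run the proof assistant to recover them.

```lean
example (a b : Nat) (h : a = b) : a + 0 = b := by
  -- hidden state:  a b : Nat, h : a = b ⊢ a + 0 = b
  rw [Nat.add_zero]
  -- hidden state:  a b : Nat, h : a = b ⊢ a = b
  exact h
```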

Attempts to address these challenges have evolved significantly. Modern proof assistants such as Coq, Isabelle, and Lean have expanded formal systems beyond first-order logic, increasing interest in automated theorem proving (ATP), and the recent integration of large language models has further advanced the field. Early ATP approaches used traditional methods such as KNN or GNN, and some employed reinforcement learning. More recent efforts use deep transformer-based methods that treat theorems as plain text. Many learning-based systems (e.g., GPT-f, PACT, Llemma) train language models on (proof state, next tactic) pairs and use tree search to prove theorems. Alternative approaches have LLMs generate full proofs, either independently or based on human-provided proofs. Data extraction tools are crucial for ATP because they capture intermediate states that are invisible in the code but visible at runtime. Such tools exist for various proof assistants, but Lean 4 tools struggle with bulk extraction across many projects because they are designed to handle a single project. Some methods also explore incorporating informal proofs into formal proving, broadening the scope of ATP research.
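
To make the (proof state, next tactic) setup concrete, here is a minimal sketch of how traced tactic steps might be turned into training examples for a step-level prover. The `TacticStep` record, the `GOAL ... PROOFSTEP` prompt layout, and the sample states are hypothetical illustrations, not the exact format used by GPT-f, PACT, Llemma, or LEAN-GitHub.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TacticStep:
    state_before: str  # pretty-printed goal(s) before the tactic runs (hypothetical field)
    tactic: str        # tactic text exactly as written in the source


def to_training_examples(steps: List[TacticStep]) -> List[Dict[str, str]]:
    """Turn traced steps into (prompt, target) pairs; the prompt layout is an assumption."""
    return [
        {"prompt": f"GOAL {s.state_before} PROOFSTEP", "target": s.tactic}
        for s in steps
    ]


# Hypothetical trace of a two-step Lean proof of `a + 0 = b` from `h : a = b`.
steps = [
    TacticStep("a b : Nat\nh : a = b\n⊢ a + 0 = b", "rw [Nat.add_zero]"),
    TacticStep("a b : Nat\nh : a = b\n⊢ a = b", "exact h"),
]
for ex in to_training_examples(steps):
    print(ex)
```

At search time, the model would receive only the current state as the prompt, and each tactic it samples becomes a candidate edge in the search tree.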

Researchers at the Chinese University of Hong Kong propose LEAN-GitHub, a large-scale Lean dataset that complements the widely used Mathlib dataset. The dataset is drawn from open-source Lean repositories on GitHub, significantly expanding the data available for training theorem-proving models. The researchers developed a scalable pipeline that improves mining efficiency and parallelism, making it possible to exploit valuable data from a Lean corpus that had previously gone uncompiled and unmined. They also provide a solution to the state duplication problem common in tree search proof methods.
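
The article does not describe the authors' fix for state duplication, so the toy below only illustrates the problem together with one generic remedy: a visited set keyed on the proof state inside a best-first search. Everything here is hypothetical: a "state" is an integer standing in for a Lean goal, 0 means "proved", the two tactics `dec` and `half` are made up, and the priority is simply the number of steps taken (real provers typically rank nodes by model scores). As in Lean, different tactic sequences can reach the same state.

```python
import heapq
from typing import Callable, Dict, List, Optional, Set, Tuple

# Hypothetical toy prover: each tactic returns a new state, or None if it fails.
TACTICS: Dict[str, Callable[[int], Optional[int]]] = {
    "dec": lambda n: n - 1 if n > 0 else None,
    "half": lambda n: n // 2 if n > 0 and n % 2 == 0 else None,
}


def best_first_search(start: int, dedup: bool,
                      max_expansions: int = 10_000) -> Tuple[Optional[List[str]], int]:
    """Uniform-cost best-first search; returns (tactic sequence or None, expansions)."""
    tie = 0  # insertion counter, so the heap never has to compare lists
    frontier: List[Tuple[int, int, int, List[str]]] = [(0, tie, start, [])]
    seen: Set[int] = set()
    expansions = 0
    while frontier and expansions < max_expansions:
        cost, _, state, proof = heapq.heappop(frontier)
        if state == 0:
            return proof, expansions
        if dedup:
            if state in seen:  # this goal was already expanded via another path
                continue
            seen.add(state)
        expansions += 1
        for name, tactic in TACTICS.items():
            nxt = tactic(state)
            if nxt is not None:
                tie += 1
                heapq.heappush(frontier, (cost + 1, tie, nxt, proof + [name]))
    return None, expansions


for dedup in (False, True):
    proof, expanded = best_first_search(12, dedup)
    print(dedup, proof, expanded)
```

Both runs find a proof of the same length, but the deduplicated search expands fewer nodes, because repeated goals and their entire subtrees are skipped.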

The process of building the LEAN-GitHub dataset involved several key steps and innovations:

- Repository selection: The researchers identified 237 Lean 4 repositories on GitHub (GitHub does not differentiate between Lean 3 and Lean 4), containing an estimated 48,091 theorems. After discarding 90 repositories with outdated Lean 4 versions, 147 remained. Only 61 of these could be compiled without modifications.

- Build challenges: The team developed automated scripts that find the closest official release for projects pinned to unofficial Lean 4 releases. They also handled isolated files within empty Lean projects.

- Source code compilation: Instead of using the Lake build tool, they called the Leanc compiler directly. This let them compile unsupported Lean projects and isolated files that Lake could not handle. They extended Lake's import graph and wrote a custom build script with higher parallelism.

- Extraction process: Building on LeanDojo, the team implemented data extraction for isolated files and restructured the implementation to increase parallelism, overcoming network connection bottlenecks and redundant computation.

- Results: Of 8,639 Lean source files, 6,352 files and roughly 42,000 theorems were successfully extracted. The final dataset includes 2,133 files and roughly 28,000 theorems with valid tactic information.
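
The build script itself is not published in the article. As a hypothetical sketch of how an import graph can drive parallel compilation, the code below groups modules into "waves" with Kahn's topological sort: each module depends only on earlier waves, so everything inside one wave can compile concurrently. The module names are made up, and `compile_one` is a caller-supplied placeholder (in practice it might invoke the compiler through a subprocess, which is not shown).

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Set


def build_waves(imports: Dict[str, Set[str]]) -> List[List[str]]:
    """Group modules into waves (Kahn's algorithm, level by level)."""
    nodes: Set[str] = set(imports)
    for deps in imports.values():
        nodes |= deps  # include imported modules that have no entry of their own
    indegree = {m: 0 for m in nodes}
    dependents: Dict[str, List[str]] = defaultdict(list)
    for mod, deps in imports.items():
        for dep in deps:
            indegree[mod] += 1
            dependents[dep].append(mod)

    waves: List[List[str]] = []
    wave = sorted(m for m in nodes if indegree[m] == 0)
    while wave:
        waves.append(wave)
        ready: List[str] = []
        for m in wave:
            for d in dependents[m]:
                indegree[d] -= 1
                if indegree[d] == 0:
                    ready.append(d)
        wave = sorted(ready)

    if sum(len(w) for w in waves) != len(nodes):
        raise ValueError("import cycle detected")
    return waves


def compile_all(imports: Dict[str, Set[str]],
                compile_one: Callable[[str], None],
                workers: int = 8) -> None:
    """Compile wave by wave; modules within a wave run in parallel."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for wave in build_waves(imports):
            # list() waits for the whole wave (and re-raises errors) before moving on.
            list(pool.map(compile_one, wave))


imports = {
    "Proj.Basic": set(),
    "Proj.Lemmas": {"Proj.Basic"},
    "Proj.Algebra": {"Proj.Basic"},
    "Proj.Main": {"Proj.Lemmas", "Proj.Algebra"},
    "Scratch": set(),  # an isolated file that no build target imports
}
print(build_waves(imports))
# [['Proj.Basic', 'Scratch'], ['Proj.Algebra', 'Proj.Lemmas'], ['Proj.Main']]
```

Waves are a simple design choice rather than a maximally parallel one: a module may wait for unrelated files in the previous wave to finish. A finer-grained scheduler would start each module as soon as its own imports are built.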

The resulting LEAN-GitHub dataset is diverse and spans several mathematical fields, including logic, first-order logic, matroid theory, and arithmetic. It covers cutting-edge mathematical topics, data structures, and Olympiad-level problems. Compared with existing datasets, LEAN-GitHub offers a distinctive combination of human-written content, intermediate states, and varying levels of difficulty, making it a valuable resource for advancing automated theorem proving and formal mathematics.

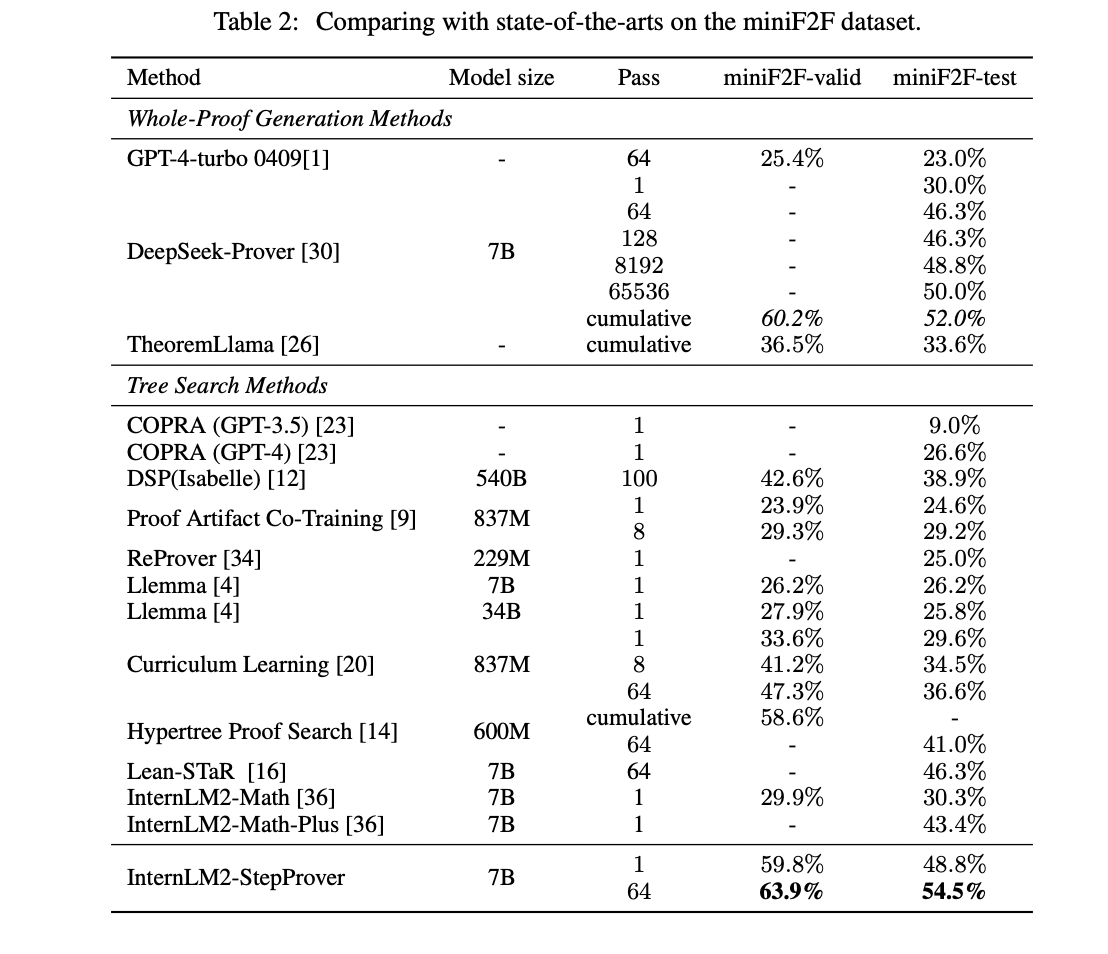

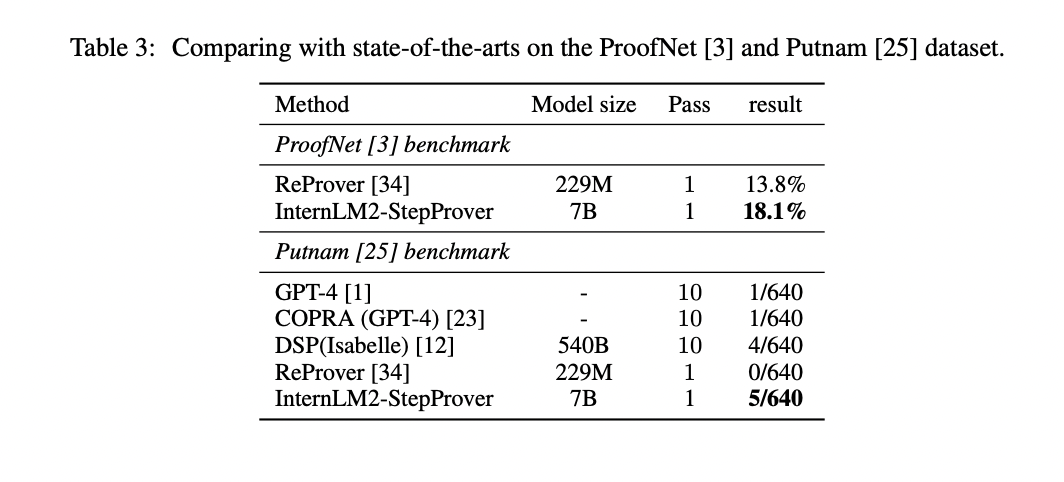

Trained on the diverse LEAN-GitHub dataset, InternLM2-StepProver shows strong formal reasoning on multiple benchmarks. It achieves state-of-the-art performance on miniF2F (63.9% on the validation split, 54.5% on the test split), outperforming previous models. On ProofNet, it reaches a Pass@1 rate of 18.1%, surpassing the previous leader. On PutnamBench, it solves 5 problems in a single pass, including Putnam 1988 B2, which had not been solved before. These results span high school to advanced undergraduate mathematics, demonstrating the versatility of InternLM2-StepProver and the effectiveness of the LEAN-GitHub dataset for training advanced theorem-proving models.
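
Pass@1 figures like these report the fraction of benchmark problems proved within a single attempt. The article does not say exactly how they were computed; as an assumption, the sketch below uses one common convention, the unbiased pass@k estimator popularized by OpenAI's Codex evaluation. With k = 1 it reduces to c/n for each problem.

```python
from math import comb
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased estimate of P(at least one of k attempts succeeds), given c successes in n attempts."""
    if not 0 < k <= n or not 0 <= c <= n:
        raise ValueError("need 0 < k <= n and 0 <= c <= n")
    if n - c < k:  # every size-k subset of attempts contains a success
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: List[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems; each entry is (attempts n, successes c)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Four hypothetical problems, one attempt each: two proved, two not.
print(benchmark_pass_at_k([(1, 1), (1, 0), (1, 0), (1, 1)], k=1))  # 0.5
```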

LEAN-GitHub, a large-scale dataset mined from open Lean 4 repositories, contains 28,597 theorems and 218,866 tactics. This diverse dataset was used to train InternLM2-StepProver, which achieves state-of-the-art performance in Lean 4 formal reasoning. Models trained on LEAN-GitHub show improved performance across multiple mathematical domains and difficulty levels, highlighting the dataset's effectiveness in improving reasoning capabilities. By releasing LEAN-GitHub as open source, the researchers aim to help the community better use underexploited information in raw corpora and advance mathematical reasoning. This contribution could significantly accelerate progress in automated theorem proving and formal mathematics.
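
A quick back-of-the-envelope figure from these two counts (our own arithmetic, not a statistic reported by the authors): the dataset holds roughly 7.65 tactics per theorem on average.

$$
\frac{218{,}866 \text{ tactics}}{28{,}597 \text{ theorems}} \approx 7.65 \text{ tactics per theorem}
$$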

Check out the Paper and Dataset. All credit for this research goes to the researchers of this project.

Asjad is a consulting intern at Marktechpost. He is pursuing a bachelor's degree in Mechanical Engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching applications of machine learning in healthcare.
