In today's data-driven world, organizations depend on data analysts to interpret complex datasets, uncover actionable insights, and drive decision-making. But what if we could make this process more efficient and scalable with AI? Enter the data analysis agent: an agent that automates analytical tasks, executes code, and adaptively responds to data queries. LangGraph, CrewAI, and AutoGen are three popular frameworks for building AI agents. In this article, we will use all three to build a simple data analysis agent and compare them.

How the Data Analysis Agent Works

The data analysis agent first takes the user's query and generates code to read the file and analyze its data. The generated code is then executed with a Python REPL tool, and its output is sent back to the agent. The agent analyzes this output and responds to the user's query. Because LLMs can generate arbitrary code, we must be careful when running LLM-generated code in a local environment.
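To make the loop concrete, here is a minimal, framework-free sketch of the three steps, assuming stand-in functions instead of a real LLM. The names run_python, fake_generate_code, fake_answer and analysis_agent are hypothetical. run_python simply calls exec with stdout captured, roughly what a Python REPL tool does, and it offers no sandboxing whatsoever.

```python
import contextlib
import io


def run_python(code: str) -> str:
    """Run code and return what it printed, or the error (no sandboxing!)."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, {})  # fresh globals on every call
    except Exception as exc:
        return f"Error: {exc!r}"
    return buffer.getvalue()


def fake_generate_code(query: str) -> str:
    # Hypothetical stand-in for the LLM's code-generation step.
    return "ages = [23, 35, 41]\nprint(sum(ages) / len(ages))"


def fake_answer(query: str, tool_output: str) -> str:
    # Hypothetical stand-in for the LLM's interpretation step.
    return f"Answer to {query!r}: {tool_output.strip()}"


def analysis_agent(query: str) -> str:
    code = fake_generate_code(query)    # 1. generate code for the query
    output = run_python(code)           # 2. execute it with the code tool
    return fake_answer(query, output)   # 3. interpret the tool output
```

In the real agents below, steps 1 and 3 are LLM calls and step 2 is a tool the LLM decides to invoke.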

Creating a data analysis agent with LangGraph

If you are new to this topic or want to brush up on your LangGraph knowledge, here is an article I would recommend: What is LangGraph?

Prerequisites

Before creating agents, make sure you have the necessary API keys for the required LLMs.

Store the API keys in a .env file and load them:

```python
from dotenv import load_dotenv

load_dotenv(".env")  # reads KEY=VALUE pairs from .env into environment variables
```

Required key libraries

langchain – 0.3.7

langchain-experimental – 0.3.3

langgraph – 0.2.52

crewai – 0.80.0

crewai-tools – 0.14.0

autogen-agentchat – 0.2.38

Now that we are all ready, let's start building our agent.

Steps to create a data analysis agent with LangGraph

1. Import the necessary libraries.

```python
import pandas as pd
from IPython.display import Image, display
from typing import List, Literal, Optional, TypedDict, Annotated
from langchain_core.tools import tool
from langchain_core.messages import ToolMessage
from langchain_experimental.utilities import PythonREPL
from langchain_openai import ChatOpenAI
from langgraph.graph import StateGraph, START, END
from langgraph.graph.message import add_messages
from langgraph.prebuilt import ToolNode, tools_condition
from langgraph.checkpoint.memory import MemorySaver
```

2. Let's define the state.

```python
class State(TypedDict):
    # add_messages appends new messages to the history instead of overwriting it
    messages: Annotated[list, add_messages]


graph_builder = StateGraph(State)
```
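The Annotated metadata is what tells LangGraph how to merge a node's output into the state. The sketch below imitates that idea with the standard library, using operator.add as a simplified stand-in for add_messages (the real reducer does more, such as replacing a message that has a matching ID). ToyState and apply_update are hypothetical names used only for illustration.

```python
import operator
from typing import Annotated, TypedDict, get_type_hints


class ToyState(TypedDict):
    messages: Annotated[list, operator.add]  # reducer: concatenate lists
    step: int                                # no reducer: last write wins


def apply_update(state: dict, update: dict, schema: type) -> dict:
    """Merge a node's partial update into the state using Annotated reducers."""
    hints = get_type_hints(schema, include_extras=True)
    merged = dict(state)
    for key, value in update.items():
        reducers = getattr(hints[key], "__metadata__", ())
        if reducers and key in merged:
            merged[key] = reducers[0](merged[key], value)
        else:
            merged[key] = value
    return merged
```

This is why a node such as chatbot can return only the new message: the reducer appends it to the existing history.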

3. Define the LLM and the code execution function and bind the function to the LLM.

```python
llm = ChatOpenAI(model="gpt-4o-mini", temperature=0.1)


@tool
def python_repl(code: Annotated[str, "The Python code to execute"]):
    """Use this to execute Python code, for example code that reads and analyzes a data file.
    If you want to see the output of a value, you should print it out with `print(...)`.
    This is visible to the user."""
    try:
        result = PythonREPL().run(code)
        print("RESULT CODE EXECUTION:", result)
    except BaseException as e:
        return f"Failed to execute. Error: {repr(e)}"
    return f"Executed:\n```python\n{code}\n```\nStdout: {result}"


llm_with_tools = llm.bind_tools([python_repl])
```
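Conceptually, bind_tools sends the model each tool's name, description and argument schema, which the @tool decorator derives from the function signature, the Annotated description and the docstring. The sketch below builds a comparable JSON-schema-style dictionary with inspect. tool_schema is a hypothetical helper, not LangChain's conversion code, and the exact payload sent to OpenAI differs.

```python
import inspect
from typing import Annotated, get_type_hints

JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def tool_schema(fn) -> dict:
    """Build a JSON-schema-style tool description from a function's signature."""
    hints = get_type_hints(fn, include_extras=True)
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        hint = hints[name]
        base = getattr(hint, "__origin__", hint)   # Annotated[str, ...] -> str
        notes = getattr(hint, "__metadata__", ())  # ("description",) if present
        prop = {"type": JSON_TYPES.get(base, "string")}
        if notes:
            prop["description"] = str(notes[0])
        properties[name] = prop
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def python_repl(code: Annotated[str, "The Python code to execute"]) -> str:
    """Use this to execute Python code."""
    return ""
```

The model never sees the function body; it only sees this description, which is why a clear docstring and argument description matter.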

4. Define the agent function, which calls the tool-enabled LLM, and add it as a node to the graph.

```python
def chatbot(state: State):
    # Call the tool-enabled LLM on the conversation so far; add_messages appends the reply
    return {"messages": [llm_with_tools.invoke(state["messages"])]}


graph_builder.add_node("agent", chatbot)
```

5. Define the ToolNode and add it to the graph.

```python
code_execution = ToolNode(tools=[python_repl])
graph_builder.add_node("tools", code_execution)
```

If the LLM's response contains a tool call, we must route it to the tool node; otherwise the run can end. Let's define a routing function and then add the remaining edges.

```python
def route_tools(state: State):
    """
    Use in the conditional_edge to route to the ToolNode if the last message
    has tool calls. Otherwise, route to the end.
    """
    if isinstance(state, list):
        ai_message = state[-1]
    elif messages := state.get("messages", []):
        ai_message = messages[-1]
    else:
        raise ValueError(f"No messages found in input state to tool_edge: {state}")
    if hasattr(ai_message, "tool_calls") and len(ai_message.tool_calls) > 0:
        return "tools"
    return END


# Entry point: every run starts at the agent node
graph_builder.add_edge(START, "agent")
graph_builder.add_conditional_edges(
    "agent",
    route_tools,
    {"tools": "tools", END: END},
)
# After a tool runs, hand its output back to the agent
graph_builder.add_edge("tools", "agent")
```

6. Let's also add memory so that we can hold a multi-turn chat with the agent.

```python
memory = MemorySaver()
```
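A checkpointer persists the graph state between invocations, keyed by the thread_id passed in the config. The toy class below only illustrates that per-thread bookkeeping with a dictionary. ToyCheckpointer and chat_turn are hypothetical, and MemorySaver itself stores full state checkpoints rather than plain message lists.

```python
from collections import defaultdict


class ToyCheckpointer:
    """Hypothetical stand-in for a checkpointer: one message history per thread_id."""

    def __init__(self) -> None:
        self._threads = defaultdict(list)

    def load(self, config: dict) -> list:
        return list(self._threads[config["configurable"]["thread_id"]])

    def save(self, config: dict, messages: list) -> None:
        self._threads[config["configurable"]["thread_id"]] = list(messages)


def chat_turn(memory: ToyCheckpointer, config: dict, user_input: str) -> list:
    history = memory.load(config)                        # restore this thread's context
    history.append(("user", user_input))
    history.append(("ai", f"reply to {user_input!r}"))   # placeholder for the LLM
    memory.save(config, history)                         # persist for the next turn
    return history
```

Two turns on thread "1" share one history, while thread "2" starts empty, which is why a follow-up question can refer to a file path given earlier in the same thread.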

7. Compile and display the graph.

```python
graph = graph_builder.compile(checkpointer=memory)
display(Image(graph.get_graph().draw_mermaid_png()))
```
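At run time, the compiled graph cycles agent → tools → agent until the routing function returns END. The framework-free sketch below simulates that cycle with a scripted stand-in for the LLM and yields the full state after each node, roughly what stream_mode="values" produces. All names here are hypothetical, and messages are plain dictionaries rather than LangChain message objects.

```python
import contextlib
import io

END = "__end__"


def route(state: dict) -> str:
    """Conditional edge: go to the tools node if the last message requests a tool."""
    last = state["messages"][-1]
    return "tools" if last.get("tool_calls") else END


def toy_agent(state: dict) -> dict:
    # Hypothetical LLM: first asks for a tool call, then answers from the tool output.
    messages = state["messages"]
    if messages[-1]["role"] == "user":
        call = {"name": "python_repl", "args": {"code": "print(2 + 3)"}}
        reply = {"role": "ai", "tool_calls": [call]}
    else:
        reply = {"role": "ai", "content": f"The result is {messages[-1]['content'].strip()}"}
    return {"messages": messages + [reply]}


def toy_tools(state: dict) -> dict:
    # Execute the requested code and append its stdout as a tool message.
    call = state["messages"][-1]["tool_calls"][0]
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(call["args"]["code"], {})
    return {"messages": state["messages"] + [{"role": "tool", "content": buffer.getvalue()}]}


def stream_graph(state: dict, agent=toy_agent, tools=toy_tools, max_steps: int = 10):
    """Yield the full state after each node: agent -> (tools -> agent)* -> END."""
    state = agent(state)
    yield state
    for _ in range(max_steps):
        if route(state) == END:
            return
        state = tools(state)
        yield state
        state = agent(state)
        yield state
    raise RuntimeError("Exceeded max_steps without reaching END")
```

The max_steps guard plays a similar role to LangGraph's recursion limit: it stops a model that keeps requesting tools forever.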

8. Now we can start the chat. Since we have added memory, we will give each conversation a unique thread_id and start the conversation on that thread.

```python
config = {"configurable": {"thread_id": "1"}}


def stream_graph_updates(user_input: str):
    events = graph.stream(
        {"messages": [("user", user_input)]}, config, stream_mode="values"
    )
    for event in events:
        # Each event is the full state; print only the newest message
        event["messages"][-1].pretty_print()


while True:
    user_input = input("User: ")
    if user_input.lower() in ("quit", "exit", "q"):
        print("Goodbye!")
        break
    stream_graph_updates(user_input)
```

While the loop is running, we start by giving the file path and then ask questions about the data.

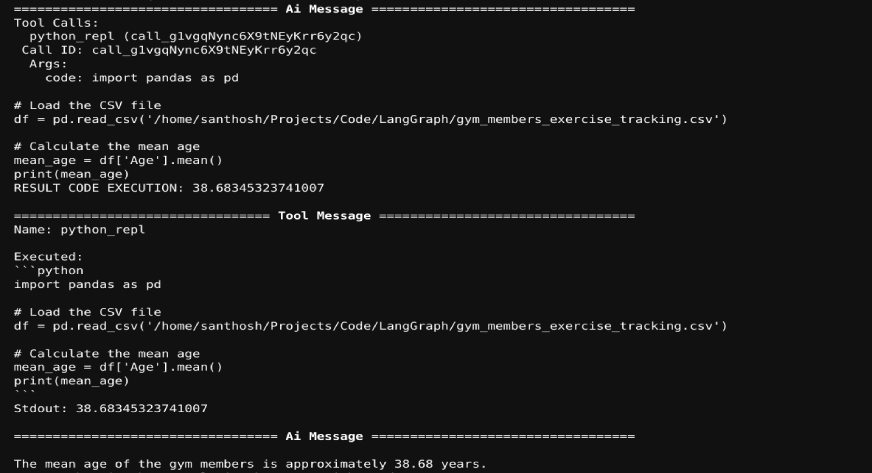

The output will be the following:

Because the graph has memory, we can keep asking questions about the dataset in the same chat. For each question, the agent generates the required code, the code is executed, and the result is sent back to the LLM. Below is an example:


Building a data analytics agent with CrewAI

Now we will use CrewAI for the data analysis task.

1. Import the necessary libraries.

```python
from crewai import Agent, Task, Crew
from crewai.tools import tool
from crewai_tools import DirectoryReadTool, FileReadTool
from langchain_experimental.utilities import PythonREPL
```

2. We will build an agent to generate the code and another to execute that code.

```python
coding_agent = Agent(
    role="Python Developer",
    goal="Craft well-designed and thought-out code to answer the given problem",
    backstory="""You are a senior Python developer with extensive experience in software and its best practices.
You have expertise in writing clean, efficient, and scalable code. """,
    llm='gpt-4o',
    human_input=True,
)

coding_task = Task(
    description="""Write code to answer the given problem
assign the code output to the 'result' variable
Problem: {problem},
""",
    expected_output="code to get the result for the problem. output of the code should be assigned to the 'result' variable",
    agent=coding_agent
)
```

3. To run the code, we will use PythonREPL(), wrapped as a CrewAI tool.

```python
@tool("repl")
def repl(code: str) -> str:
    """Useful for executing Python code"""
    return PythonREPL().run(command=code)
```

4. Define the executing agent and its task, giving the agent access to repl and FileReadTool().

```python
executing_agent = Agent(
    role="Python Executor",
    goal="Run the received code to answer the given problem",
    backstory="""You are a Python developer with extensive experience in software and its best practices.
You can execute code, debug, and optimize Python solutions effectively.""",
    llm='gpt-4o-mini',
    human_input=True,
    tools=[repl, FileReadTool()]
)

executing_task = Task(
    description="""Execute the code to answer the given problem
assign the code output to the 'result' variable
Problem: {problem},
""",
    expected_output="the result for the problem",
    agent=executing_agent
)
```

5. Form the crew with agents and corresponding tasks.

```python
analysis_crew = Crew(
    agents=[coding_agent, executing_agent],
    tasks=[coding_task, executing_task],
    verbose=True
)
```
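With the default sequential process, CrewAI runs the tasks in order and passes earlier task outputs to later tasks as context, after filling placeholders such as {problem} from the kickoff inputs. The sketch below imitates that flow without CrewAI. ToyTask, kickoff, toy_coder and toy_executor are hypothetical, and the "agents" are plain functions rather than LLM calls.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ToyTask:
    description: str                  # may contain {placeholders}
    agent: Callable[[str, str], str]  # (prompt, context) -> output


def kickoff(tasks: List[ToyTask], inputs: dict) -> str:
    """Run tasks in order; each task sees the previous task's output as context."""
    context = ""
    for task in tasks:
        prompt = task.description.format(**inputs)  # fill in {problem}
        context = task.agent(prompt, context)
    return context


def toy_coder(prompt: str, context: str) -> str:
    # Hypothetical coding agent: returns code that stores its answer in `result`.
    return "ages = [23, 35, 41]\nresult = sum(ages) / len(ages)"


def toy_executor(prompt: str, context: str) -> str:
    # Hypothetical executing agent: runs the previous task's code and reads `result`.
    namespace: dict = {}
    exec(context, namespace)
    return str(namespace["result"])
```

This also explains why both task descriptions ask for the output to be assigned to a 'result' variable: it gives the executing side a fixed name to read.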

6. Kick off the crew with the following inputs.

```python
inputs = {'problem': """read this file and return the column names and find mean age
'/home/santhosh/Projects/Code/LangGraph/gym_members_exercise_tracking.csv'"""}

result = analysis_crew.kickoff(inputs=inputs)
print(result.raw)
```

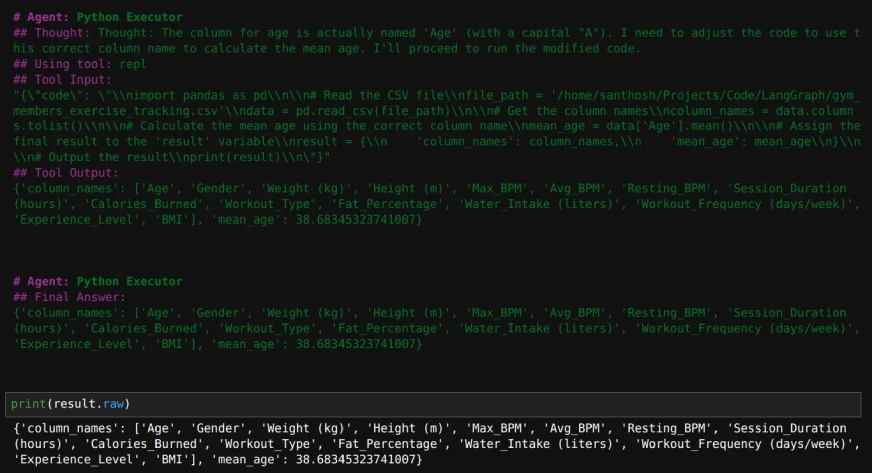

This is what the result will look like:


Creating a data analysis agent with AutoGen

1. Import the necessary libraries.

```python
from autogen import ConversableAgent
from autogen.coding import LocalCommandLineCodeExecutor, DockerCommandLineCodeExecutor
```

2. Define the code executor and an agent to use the code executor.

```python
executor = LocalCommandLineCodeExecutor(
    timeout=10,  # Timeout for each code execution in seconds.
    work_dir="./Data",  # Directory where the code files are stored and run.
)

code_executor_agent = ConversableAgent(
    "code_executor_agent",
    llm_config=False,  # This agent only executes code; it does not call an LLM.
    code_execution_config={"executor": executor},
    human_input_mode="ALWAYS",  # Prompt the human for input every time a message arrives.
)
```
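The local executor roughly saves each code block to a file in work_dir and runs it in a separate process subject to the timeout. The sketch below reproduces that idea with subprocess. run_code_block and the file name tmp_code.py are assumptions rather than AutoGen's internals, and returning 124 on timeout simply borrows the Unix timeout convention. Like the local executor, it does not isolate the code from your machine; the imported DockerCommandLineCodeExecutor is the container-based alternative.

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Tuple


def run_code_block(code: str, work_dir: str, timeout: int = 10) -> Tuple[int, str]:
    """Write code to a file in work_dir, run it in a new interpreter, return (exit_code, output)."""
    script = Path(work_dir).resolve() / "tmp_code.py"  # hypothetical file name
    script.write_text(code)
    try:
        proc = subprocess.run(
            [sys.executable, str(script)],
            cwd=script.parent,
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return 124, "Timeout"  # 124 mirrors the Unix `timeout` convention
    return proc.returncode, proc.stdout + proc.stderr


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(run_code_block("print('hello from a subprocess')", tmp))
```

Running each block in a fresh process means a crash or infinite loop in generated code cannot take down the chat itself, although it can still touch your files.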

3. Define an agent to write the code with a custom system message.

Copy code_writer_system_message from the AutoGen code-executors tutorial: https://microsoft.github.io/autogen/0.2/docs/tutorial/code-executors/

```python
code_writer_agent = ConversableAgent(
    "code_writer_agent",
    system_message=code_writer_system_message,
    llm_config={"config_list": [{"model": "gpt-4o-mini"}]},
    code_execution_config=False,  # This agent only writes code; it never runs it.
)
```

4. Define the problem to solve and start the chat.

```python
problem = """Read the file at the path '/home/santhosh/Projects/Code/LangGraph/gym_members_exercise_tracking.csv'
and print mean age of the people."""

chat_result = code_executor_agent.initiate_chat(
    code_writer_agent,
    message=problem,
)
```

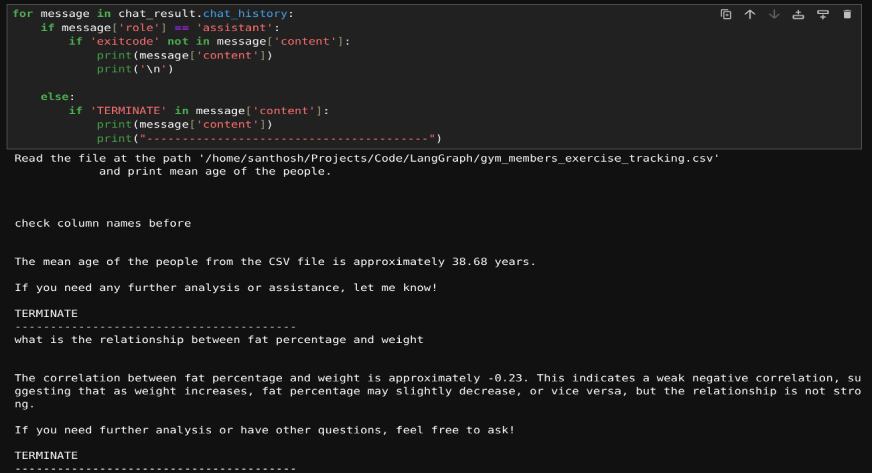

Once the chat starts, we can also ask follow-up questions about the dataset. If the generated code has errors, we can ask the code writer to modify it. If the code looks fine, we simply press Enter to let the executor run it.
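Behind the scenes, the code writer replies with Markdown code blocks, and the executor agent pulls those blocks out of the message before running them. The hypothetical helpers below sketch that step with a regular expression; they are not AutoGen's own parser. The TERMINATE check reflects the linked system message, which asks the writer to reply TERMINATE once everything is done.

```python
import re
from typing import List, Tuple

FENCE = "`" * 3  # built this way so this example's own code fence stays intact
CODE_BLOCK = re.compile(FENCE + r"(\w*)\n(.*?)" + FENCE, re.DOTALL)


def extract_code_blocks(message: str) -> List[Tuple[str, str]]:
    """Return (language, code) pairs for every fenced block in a chat message."""
    return CODE_BLOCK.findall(message)


def is_termination(message: str) -> bool:
    # The writer signals that it is finished by ending its reply with TERMINATE.
    return message.rstrip().endswith("TERMINATE")
```

A reply with no code blocks gives the executor nothing to run, which is when the human's typed follow-up or the TERMINATE signal drives the conversation.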

5. If needed, we can also print the questions we asked and their answers with the following code.

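```python
for message in chat_result.chat_history:
    if message['role'] == 'assistant':
        # Sent by code_executor_agent: our questions (skip code execution results)
        if 'exitcode' not in message['content']:
            print(message['content'])
            print('\n')
    else:
        # Sent by code_writer_agent: print only the final answers
        if 'TERMINATE' in message['content']:
            print(message['content'])
            print("----------------------------------------")
```

Here is the result: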


LangGraph, CrewAI and AutoGen

Now that you've learned how to create a data analytics agent with all 3 frameworks, let's explore the differences between them when it comes to code execution:

| Framework | Key features | Strengths | Best suited for |
|---|---|---|---|
| LangGraph | Graph-based structure (nodes represent agents/tools, edges define interactions); seamless integration with PythonREPL | Highly flexible for building structured, multi-step workflows; code execution with memory preserved across turns | Complex, process-based analytical tasks that require clear, customizable workflows |
| CrewAI | Focus on collaboration; multiple agents with predefined roles; integrates with LangChain tools | Task-oriented design; well suited to teamwork and role specialization; runs code through a PythonREPL-based tool | Collaborative data analysis, code-review setups, task decomposition, and role-based execution |
| AutoGen | Dynamic, iterative code execution; conversational agents for interactive execution and debugging; built-in chat | Adaptive, conversational workflows; focus on dynamic interaction and debugging; well suited to rapid prototyping and problem solving | Rapid prototyping, problem solving, and environments where tasks and requirements change frequently |

Conclusion

In this article, we demonstrated how to create data analysis agents using LangGraph, CrewAI, and AutoGen. These frameworks let agents generate, execute, and analyze code to answer data queries efficiently. By automating repetitive tasks, they make data analysis faster and more scalable, and their modular design allows customization for specific needs, which makes them valuable to data professionals. These agents show the potential of AI to simplify workflows and extract insights from data.


Frequently asked questions

A. These frameworks automate code generation and execution, enabling faster data processing and insights. They streamline workflows, reduce manual effort, and improve the productivity of data-driven tasks.

A. Yes, agents can be customized to handle diverse data sets and complex analytical queries by integrating appropriate tools and adjusting their workflows.

A. Code generated by an LLM may include errors or unsafe operations. Always validate it in a controlled environment to ensure accuracy and security before execution.

A. Memory integration allows agents to retain the context of past interactions, enabling adaptive responses and continuity in complex or multi-step queries.

A. These agents can automate tasks such as reading files, performing data cleansing, generating summaries, running statistical analysis, and answering user queries about the data.
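As a first line of defense before executing LLM-generated code, one could scan it statically for imports you would not expect in a data-analysis script. The sketch below uses the ast module. BLOCKED_MODULES and find_blocked_imports are hypothetical, and such a check is easy to bypass (for example via __import__ or exec), so it complements rather than replaces running code in an isolated environment such as a Docker container.

```python
import ast

BLOCKED_MODULES = {"os", "subprocess", "shutil", "socket"}  # hypothetical deny-list


def find_blocked_imports(code: str) -> set:
    """Return top-level modules from BLOCKED_MODULES that the code imports."""
    found = set()
    for node in ast.walk(ast.parse(code)):  # parses only; nothing is executed
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            names = [node.module or ""]
        else:
            continue
        for name in names:
            root = name.split(".")[0]
            if root in BLOCKED_MODULES:
                found.add(root)
    return found
```

A non-empty result could be used to reject the code or ask the model to rewrite it without those modules.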
