artificial intelligence has made significant advances in recent years, but integrating the interaction of real -time speech with visual content remains a complex challenge. Traditional systems often depend on separate components for the detection of voice activities, voice recognition, textual dialogue and text synthesis to voice. This segmented approach can introduce delays and not capture the nuances of human conversation, such as emotions or voiceless sounds. These limitations are particularly evident in applications designed to help people with visual disabilities, where the appropriate and precise descriptions of visual scenes are essential.

Address these challenges, Kyutai has introduced Moshivis, a model of open source vision speech (VSM) that allows natural speech interactions in real time. On the basis of his previous work with Moshi, a voice text base model designed for real -time dialogue, Moshivis extends these capabilities to include visual entries. This improvement allows users to participate in fluid conversations about visual content, marking a notable advance in the development of ai.

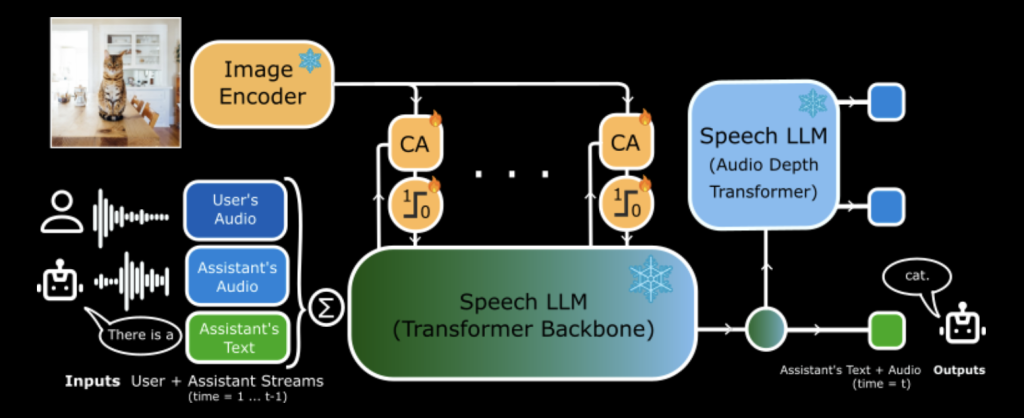

Technically, Moshivis increases MOSHI by integrating light crossing modules that infuse visual information of an existing visual encoder in Moshi's voice token current. This design ensures that Moshi's original conversation skills remain intact by introducing the ability to process and discuss visual entries. An activation mechanism within the cross -care modules allows the model to be selectively involved with visual data, maintaining efficiency and response capacity. In particular, Moshivis adds approximately 7 milliseconds of latency due to inference step in devices of degree of consumption, such as a Mac Mini with a M4 Pro chip, resulting in a total of 55 milliseconds per step of inference. This action is maintained well below the 80 millisecond threshold for latency in real time, ensuring soft and natural interactions.

In practical applications, Moshivis demonstrates its ability to provide detailed descriptions of visual scenes through natural speech. For example, when an image is presented that represents structures of green metals surrounded by trees and a building with a light brown exterior, moshivis articulates:

“I see two green metal structures with a mesh lid, and are surrounded by large trees. In the background, you can see a building with a light brown exterior and a black roof, which seems to be made of stone.”

This capacity opens new paths for applications, such as providing audio descriptions for visual disabilities accessibility, improving accessibility and allowing more natural interactions with visual information. By publishing Moshivis as an open source project, Kyutai invites the research community and developers to explore and expand this technology, promoting innovation in vision voice models. The availability of the pesos of the model, the inference code and the visual voice reference points further support the collaboration efforts to refine and diversify Moshivis applications.

In conclusion, Moshivis represents a significant advance in ai, merging visual understanding with the interaction of speech in real time. Its open source nature encourages generalized adoption and development, racing the way for more accessible and natural interactions with technology. As ai continues to evolve, innovations such as Moshivis bring us closer to the perfect integration of multimodal understanding, improving user experiences in several domains.

Verify he Technical detail and Try it here. All credit for this investigation goes to the researchers of this project. In addition, feel free to follow us <a target="_blank" href="https://x.com/intent/follow?screen_name=marktechpost” target=”_blank” rel=”noreferrer noopener”>twitter And don't forget to join our 80k+ ml subject.

NEWSLETTER

NEWSLETTER