Text embedding models have become fundamental to natural language processing (NLP). These models convert text into high-dimensional vectors that capture semantic relationships, enabling tasks such as document retrieval, classification, and clustering. Embeddings are especially critical in retrieval-augmented generation (RAG) systems, where they support the retrieval of relevant documents. With the growing need to handle multiple languages and long text sequences, transformer-based models have changed how embeddings are generated. However, despite their advanced capabilities, these models face limitations in real-world applications, particularly when handling extensive multilingual data and long-context documents.

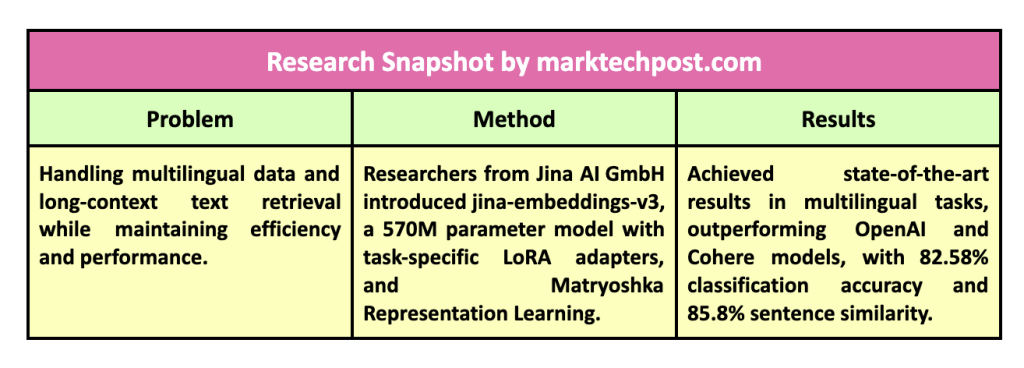

Text embedding models have faced several challenges in recent years. A key problem is that many models, although advertised as general-purpose, require task-specific tuning to perform well across different tasks. These models frequently struggle to balance performance across languages and to handle long text sequences. In multilingual applications, embedding models must deal with the complexity of encoding relationships across different languages, each with its own linguistic structures. The difficulty increases with tasks that require processing long text sequences, which often exceed the capacity of most current models. Furthermore, deploying these models at scale, often with billions of parameters, presents significant scalability and computational cost challenges, especially when marginal improvements do not justify the resource consumption.

Previous attempts to solve these challenges have relied heavily on large language models (LLMs), which can exceed 7 billion parameters. These models have demonstrated their ability to handle diverse tasks across different languages, from text classification to document retrieval. However, despite their size, they offer only marginal performance improvements over encoder-only models such as XLM-RoBERTa and mBERT. Their complexity also makes them impractical for many real-world applications where resources are limited. Efforts to make embeddings more efficient have included innovations such as instruction tuning and positional encoding methods such as Rotary Position Embeddings (RoPE), which help models process longer text sequences. However, even with these advances, models often fail to meet the demands of real-world multilingual retrieval tasks with the desired efficiency.
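To make the RoPE idea concrete, here is a minimal pure-Python sketch of a rotary position embedding applied to a single vector. It is an illustration under assumptions, not the implementation used by any particular model: the helper names `rope_rotate` and `dot`, the interleaved pairing of dimensions, and the base of 10000 are choices made for this example.

```python
import math


def rope_rotate(vec, position, base=10000.0):
    """Rotate consecutive pairs of `vec` by position-dependent angles.

    Illustrative helper (hypothetical name). Pairing layout and `base`
    are assumptions; real implementations may differ.
    """
    dim = len(vec)
    if dim % 2 != 0:
        raise ValueError("vector length must be even")
    out = []
    for i in range(0, dim, 2):
        # The angle grows with the token position and shrinks for
        # later pairs, giving each pair its own rotation frequency.
        theta = position * base ** (-i / dim)
        x, y = vec[i], vec[i + 1]
        out.append(x * math.cos(theta) - y * math.sin(theta))
        out.append(x * math.sin(theta) + y * math.cos(theta))
    return out


def dot(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


# Toy query and key vectors (made-up values).
query = [1.0, 2.0, 3.0, 4.0]
key = [0.5, -1.0, 2.0, 0.0]

# Both scores use a position offset of 2, so they agree up to rounding.
score_near = dot(rope_rotate(query, 3), rope_rotate(key, 1))
score_far = dot(rope_rotate(query, 10), rope_rotate(key, 8))
```

Because each pair is only rotated, the attention score between a query and a key depends on their relative offset rather than their absolute positions, which is one reason the method is used for longer sequences.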

Researchers at Jina AI GmbH have introduced a new model, jina-embeddings-v3, specifically designed to address the inefficiencies of previous embedding models. The model has 570 million parameters and delivers optimized performance across multiple tasks while supporting long-context documents of up to 8192 tokens. It incorporates a key innovation: task-specific Low-Rank Adaptation (LoRA) adapters. These adapters enable the model to efficiently generate high-quality embeddings for various tasks, including query-document retrieval, classification, clustering, and text matching. This task-specific optimization allows jina-embeddings-v3 to handle multilingual data, long documents, and complex retrieval scenarios more effectively while balancing performance and scalability.
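The general LoRA mechanism can be sketched in a few lines of pure Python: a frozen weight matrix is shared by all tasks, and each task adds its own small low-rank update. This is a toy illustration under assumptions, not the model's actual code. The class `LoRALinear`, the task names, the scaling factor `alpha`, and the layer size and rank used in the parameter count are all hypothetical.

```python
def matvec(matrix, vec):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


class LoRALinear:
    """Frozen linear layer with one low-rank adapter per task (sketch)."""

    def __init__(self, weight, adapters, alpha=1.0):
        self.weight = weight      # frozen base weights, d_out x d_in
        self.adapters = adapters  # task -> (A: r x d_in, B: d_out x r)
        self.alpha = alpha        # assumed scaling hyperparameter

    def forward(self, x, task):
        base = matvec(self.weight, x)
        a, b = self.adapters[task]
        rank = len(a)
        # Low-rank update: project down to `rank` dims, then back up.
        update = matvec(b, matvec(a, x))
        scale = self.alpha / rank
        return [y + scale * u for y, u in zip(base, update)]


def lora_param_count(d_in, d_out, rank):
    """Trainable parameters in one adapter: A (r x d_in) + B (d_out x r)."""
    return rank * (d_in + d_out)


# Toy 2x2 layer with two hypothetical task adapters of rank 1.
layer = LoRALinear(
    weight=[[1.0, 0.0], [0.0, 1.0]],
    adapters={
        "retrieval": ([[1.0, 0.0]], [[0.0], [1.0]]),
        "classification": ([[0.0, 0.0]], [[0.0], [0.0]]),  # no-op adapter
    },
)
retrieval_out = layer.forward([2.0, 3.0], task="retrieval")
classification_out = layer.forward([2.0, 3.0], task="classification")

# Hypothetical sizes: a 1024 x 1024 layer with a rank-4 adapter.
adapter_params = lora_param_count(1024, 1024, 4)
full_params = 1024 * 1024
```

With these assumed sizes, the adapter holds 8,192 trainable values against 1,048,576 in the full matrix, which shows why switching adapters per task is far cheaper than keeping a separately fine-tuned model for each one.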

The jina-embeddings-v3 architecture is based on the widely used XLM-RoBERTa model, with several critical improvements. It uses FlashAttention 2 to improve computational efficiency and integrates RoPE positional embeddings to handle long-context tasks of up to 8192 tokens. One of the most innovative features of the model is Matryoshka representation learning, which allows users to truncate embeddings without compromising performance. This method provides the flexibility to choose different embedding sizes, such as reducing a 1024-dimensional embedding to just 16 or 32 dimensions, so users can trade off storage efficiency against task performance. With the addition of task-specific LoRA adapters, which account for less than 3% of the total parameters, the model can dynamically adapt to different tasks, such as classification and retrieval. By freezing the weights of the original model, the researchers have ensured that training these adapters remains highly efficient, using only a fraction of the memory required by traditional models. This efficiency makes the model practical to deploy in real-world environments.
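Truncating a Matryoshka-style embedding usually amounts to keeping the leading dimensions and re-normalizing, as in the pure-Python sketch below. The helper names (`truncate_embedding`, `cosine`, `storage_bytes`), the toy vector, and the assumption of 4-byte floats are illustrative choices, not part of the model's API. Truncation of this kind is only meaningful for models trained with Matryoshka representation learning.

```python
import math


def truncate_embedding(vec, dim):
    """Keep the first `dim` values and rescale to unit length."""
    if not 0 < dim <= len(vec):
        raise ValueError("dim must be between 1 and len(vec)")
    head = vec[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        return head  # nothing to rescale
    return [x / norm for x in head]


def cosine(a, b):
    """Cosine similarity of two equal-length, non-zero vectors."""
    num = sum(x * y for x, y in zip(a, b))
    den = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return num / den


def storage_bytes(num_vectors, dim, bytes_per_value=4):
    """Raw index size, assuming one 4-byte float per dimension."""
    return num_vectors * dim * bytes_per_value


# Toy 3-dimensional "embedding" truncated to 2 dimensions.
short = truncate_embedding([3.0, 4.0, 12.0], 2)

# Storage for one million vectors at full size versus 32 dimensions.
full_size = storage_bytes(1_000_000, 1024)
small_size = storage_bytes(1_000_000, 32)
```

Under these assumptions, going from 1024 to 32 dimensions shrinks the raw index by a factor of 32, which is the space side of the trade-off described above; the accuracy side has to be measured on the task at hand.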

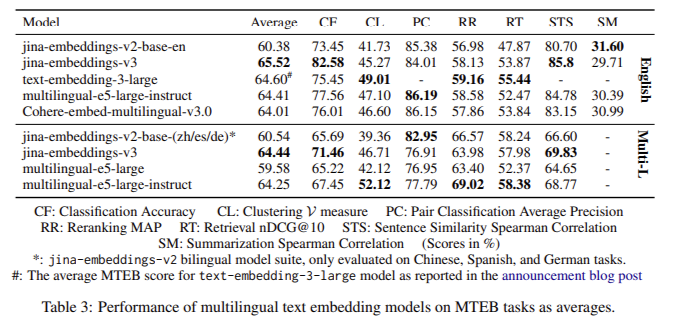

The jina-embeddings-v3 model has shown notable performance improvements across multiple benchmarks. It outperformed competitors such as OpenAI's proprietary models and Cohere's multilingual embeddings, particularly on English tasks. On the MTEB benchmark, jina-embeddings-v3 demonstrated superior results in classification accuracy (82.58%) and sentence similarity (85.8%), competing with much larger models such as e5-mistral-7b-instruct, which has over 7 billion parameters but shows only a marginal 1% improvement on certain tasks. It also achieved excellent results on multilingual tasks, outperforming multilingual-e5-large-instruct on all tasks despite its significantly smaller size. Its ability to perform well on multilingual and long-context retrieval tasks while requiring fewer computational resources makes it highly efficient and cost-effective, especially for fast, edge-computing applications.

In conclusion, jina-embeddings-v3 offers a scalable and efficient solution to the long-standing challenges faced by text embedding models in multilingual and long-context tasks. The integration of LoRA adapters, Matryoshka representation learning, and other advanced techniques ensures that the model can handle a variety of tasks without the excessive computational burden seen in models with billions of parameters. The researchers have created a practical, high-performance model that outperforms many larger models and sets a new standard for embedding efficiency. These innovations provide a clear path forward for future advances in multilingual and long-context retrieval, making jina-embeddings-v3 a valuable tool in NLP.

Check out the Paper and Model Card on Hugging Face. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary engineer and entrepreneur, Asif is committed to harnessing the potential of AI for social good. His most recent initiative is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has over 2 million monthly views, illustrating its popularity among the public.
