In recent years, large multimodal models (LMMs) have expanded rapidly, using CLIP as a foundational vision encoder for robust visual representations and LLMs as versatile tools for reasoning across modalities. However, while LLMs have grown to more than 100 billion parameters, the vision models they rely on have not scaled at the same pace, which limits their potential. Scaling up contrastive language-image pre-training (CLIP) is essential to improve both vision and multimodal models, closing this gap and enabling more effective handling of diverse types of data.

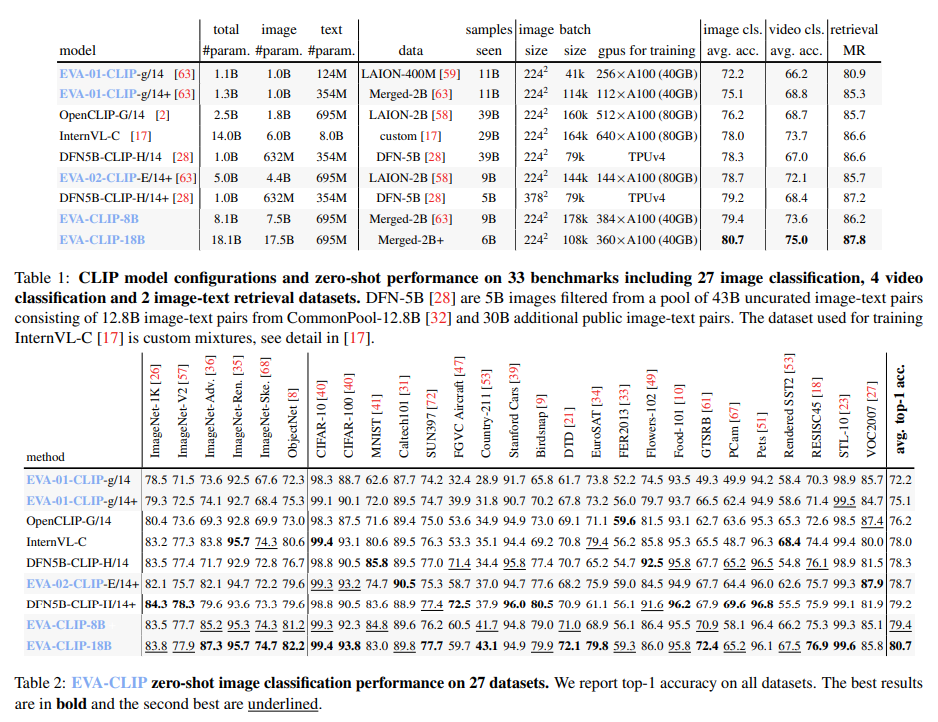

Researchers from the Beijing Academy of Artificial Intelligence and Tsinghua University have unveiled EVA-CLIP-18B, the largest open-source CLIP model to date, with 18 billion parameters. Despite seeing only 6 billion training samples, it achieves a zero-shot top-1 accuracy of 80.7% averaged across 27 image classification benchmarks, outperforming previous models such as EVA-CLIP. Notably, this is achieved with a modest dataset of 2 billion image-text pairs, which is openly available and smaller than the datasets used for other models. EVA-CLIP-18B demonstrates the potential of EVA-style weak-to-strong scaling of vision models, with the hope of encouraging further research on vision and multimodal foundation models.

EVA-CLIP-18B is the largest and most powerful open-source CLIP model, with 18 billion parameters. It outperforms its predecessor EVA-CLIP (5 billion parameters) and other open-source CLIP models by a wide margin in zero-shot top-1 accuracy across 27 image classification benchmarks. The principles of EVA and EVA-CLIP guide the scaling procedure of EVA-CLIP-18B. The EVA philosophy follows a weak-to-strong paradigm, in which a smaller EVA-CLIP model provides the vision encoder initialization for a larger EVA-CLIP model. This iterative scaling process stabilizes and accelerates the training of larger models.

EVA-CLIP-18B, an 18-billion-parameter CLIP model, is trained on a dataset of 2 billion image-text pairs drawn from LAION-2B and COYO-700M. Following the principles of EVA and EVA-CLIP, it employs a weak-to-strong paradigm, in which a smaller EVA-CLIP model initializes a larger one, stabilizing and accelerating training. Evaluation on 33 datasets, covering image classification, video classification, and image-text retrieval, demonstrates its effectiveness. The scaling process involves distilling knowledge from a smaller EVA-CLIP model into a larger one, with the training dataset kept largely fixed to isolate the effect of the scaling philosophy. Notably, the approach produces sustained performance gains, illustrating the effectiveness of weak-to-strong scaling.
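To make the distillation idea above more concrete, here is a minimal sketch of a feature-matching objective in which a larger "student" is pushed to reproduce the features of a smaller "teacher". The article does not state which loss the authors use; the cosine-distance loss, the function names, and the toy vectors below are all assumptions for illustration only.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def distillation_loss(student_feats, teacher_feats):
    """Mean cosine distance (1 - cos) over paired feature vectors.

    Assumed objective: 0 when every student feature points in the same
    direction as its teacher feature, larger as they diverge.
    """
    distances = [
        1.0 - cosine_similarity(s, t)
        for s, t in zip(student_feats, teacher_feats)
    ]
    return sum(distances) / len(distances)


# Toy features (hypothetical): the teacher is the smaller model.
teacher = [[1.0, 0.0], [0.0, 1.0]]
aligned_student = [[2.0, 0.0], [0.0, 3.0]]      # same directions, different scale
misaligned_student = [[0.0, 1.0], [1.0, 0.0]]   # orthogonal to the teacher

loss_aligned = distillation_loss(aligned_student, teacher)
loss_misaligned = distillation_loss(misaligned_student, teacher)
```

In this toy setup the aligned student incurs no loss because cosine distance ignores scale, while the orthogonal student is penalized. In a weak-to-strong setup, a vision encoder trained this way would then serve as the initialization for the larger CLIP model.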

EVA-CLIP-18B performs strongly across a range of image-related tasks. It achieves a zero-shot top-1 accuracy of 80.7% averaged across 27 image classification benchmarks, outperforming its predecessor and other CLIP models by a significant margin. With linear probing on ImageNet-1K, it reaches an average accuracy of 88.9, ahead of competitors such as InternVL-C. Zero-shot image and text retrieval on the Flickr30K and COCO datasets yields an average recall of 87.8, again ahead of competing models. EVA-CLIP-18B is also robust across different ImageNet variants, and its results across 33 widely used datasets demonstrate its versatility.
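For readers unfamiliar with how these metrics are computed, the following is a minimal sketch of zero-shot top-1 accuracy and recall@K over precomputed embeddings. It is not the authors' evaluation code: the function names, the tiny two-dimensional embeddings, and the one-to-one pairing of queries and candidates in the retrieval example are assumptions made for illustration.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def zero_shot_predict(image_emb, class_text_embs):
    """Return the index of the class whose text embedding is most similar."""
    img = l2_normalize(image_emb)
    sims = [
        sum(a * b for a, b in zip(img, l2_normalize(txt)))
        for txt in class_text_embs
    ]
    return max(range(len(sims)), key=sims.__getitem__)


def top1_accuracy(image_embs, labels, class_text_embs):
    """Fraction of images whose most similar class prompt is the true label."""
    correct = sum(
        zero_shot_predict(emb, class_text_embs) == label
        for emb, label in zip(image_embs, labels)
    )
    return correct / len(labels)


def recall_at_k(sim_matrix, k):
    """Fraction of queries whose true match (same index) ranks in the top k.

    sim_matrix[i][j] is the similarity of query i to candidate j.
    Assumes exactly one correct candidate per query, at index i.
    """
    hits = 0
    for i, row in enumerate(sim_matrix):
        ranked = sorted(range(len(row)), key=row.__getitem__, reverse=True)
        if i in ranked[:k]:
            hits += 1
    return hits / len(sim_matrix)


# Toy classification data (hypothetical): two classes, three images.
class_text_embs = [[1.0, 0.0], [0.0, 1.0]]
image_embs = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]]
labels = [0, 1, 1]
accuracy = top1_accuracy(image_embs, labels, class_text_embs)

# Toy retrieval similarities (hypothetical): three queries, three candidates.
sims = [
    [0.9, 0.1, 0.3],
    [0.2, 0.4, 0.8],
    [0.1, 0.2, 0.7],
]
recall_1 = recall_at_k(sims, 1)
recall_2 = recall_at_k(sims, 2)
```

In the toy data, the third image is closer to the wrong class prompt, so two of three predictions are correct. The second retrieval query finds its match only at rank 2, so recall improves from R@1 to R@2. Real benchmarks differ in detail; for example, a retrieval set may pair several captions with each image.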

In conclusion, EVA-CLIP-18B is the largest and highest-performing open-source CLIP model, with 18 billion parameters. Applying EVA's weak-to-strong vision scaling principle yields strong zero-shot accuracy across 27 image classification benchmarks. This scaling approach consistently improves performance without showing signs of saturation, pushing the limits of vision model capabilities. Notably, EVA-CLIP-18B produces robust visual representations, maintaining performance across several ImageNet variants, including adversarial ones. Its versatility and effectiveness are demonstrated on multiple datasets spanning image classification, image-text retrieval, and video classification, marking a significant advance in the capabilities of CLIP models.

All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and a dual-degree student at IIT Madras, is passionate about applying technology and artificial intelligence to address real-world challenges. With a strong interest in solving practical problems, she brings a fresh perspective to the intersection of AI and real-life solutions.
