Multimodal large language models (MLLM) integrate visual and text data processing to improve the way artificial intelligence understands and interacts with the world. This area of research focuses on creating systems that can understand and respond to a combination of visual cues and linguistic information, more closely mimicking human-like interactions.

The challenge often lies in the limited capabilities of open source models compared to their commercial counterparts. Open source models often suffer from deficiencies in processing complex visual inputs and supporting multiple languages, which can restrict their practical applications and effectiveness in various scenarios.

Historically, most open source MLLMs have been trained at fixed resolutions, primarily using data sets limited to the English language. This approach significantly hinders their functionality when encountering high-resolution images or content in other languages, making it difficult for these models to perform well on tasks that require detailed visual understanding or multilingual capabilities.

Research from Shanghai ai Lab, SenseTime Research, Tsinghua University, Nanjing University, Fudan University and Chinese University of Hong Kong presents InternVL 1.5, an open source MLLM designed to significantly improve the capabilities of open source systems in multimodal understanding. This model incorporates three major improvements to close the performance gap between open source and proprietary commercial models. The three main components are:

- First, a powerful vision encoder, InternViT-6B, has been optimized through a continuous learning strategy, improving its visual understanding capabilities.

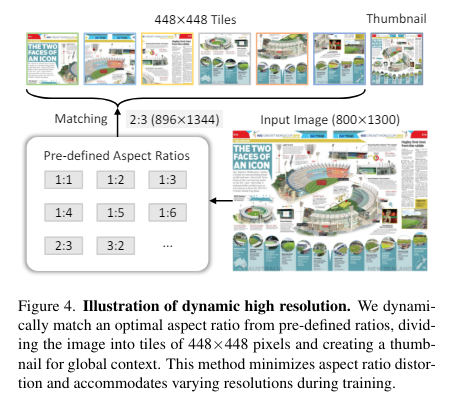

- Second, a high-resolution dynamic approach allows the model to handle images with up to 4K resolution by dynamically adjusting image tiles based on the aspect ratio and resolution of the input.

- Finally, a high-quality bilingual dataset has been meticulously assembled covering common scenes and document images annotated with question-answer pairs in English and Chinese.

The three steps significantly increase the model's performance in OCR and Chinese language-related tasks. These improvements allow InternVL 1.5 to compete strongly in various benchmarks and benchmark studies, demonstrating its improved effectiveness in multimodal tasks. InternVL 1.5 employs a segmented approach to image handling, allowing it to process images in resolutions up to 4K by dividing them into tiles ranging from 448×448 pixels, dynamically adapting based on image aspect ratio and resolution. This method improves image understanding and makes it easier to understand detailed scenes and documents. The model's enhanced linguistic capabilities arise from its training on a diverse dataset comprising both English and Chinese, covering a variety of scenes and document types, boosting its performance on OCR and text-based tasks across languages. .

The model's performance is evident in its results across multiple benchmarks, where it particularly excels on datasets related to OCR and bilingual scene understanding. InternVL 1.5 demonstrates state-of-the-art results, showing marked improvements over previous versions and outperforming some proprietary models in specific tests. For example, text-based visual question answering achieves an accuracy of 80.6% and document-based question answering achieves an impressive 90.9%. In multimodal benchmarks that evaluate models in terms of visual and textual understanding, InternVL 1.5 consistently delivers competitive results, often outperforming other open source models and rivaling commercial models.

In conclusion, InternVL 1.5 addresses the important challenges facing open source large multimodal language models, particularly in processing high-resolution images and supporting multilingual capabilities. This model significantly reduces the performance gap with its commercial counterparts by implementing a robust vision encoder, dynamic resolution adaptation, and a comprehensive bilingual dataset. InternVL 1.5's enhanced capabilities are demonstrated through its superior performance in OCR-related tasks and bilingual scene understanding, establishing it as a formidable contender in advanced artificial intelligence systems.

Review the Paper and GitHub page. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on twitter.com/Marktechpost”>twitter. Join our Telegram channel, Discord channeland LinkedIn Grabove.

If you like our work, you will love our Newsletter..

Don't forget to join our SubReddit over 40,000 ml

![]()

Sana Hassan, a consulting intern at Marktechpost and a dual degree student at IIT Madras, is passionate about applying technology and artificial intelligence to address real-world challenges. With a strong interest in solving practical problems, she brings a new perspective to the intersection of ai and real-life solutions.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>

NEWSLETTER

NEWSLETTER