The development of graphical user interface (GUI) agents faces two key challenges that limit their effectiveness. First, existing agents lack strong reasoning capabilities: they mainly rely on single-step operations and do not incorporate reflective learning mechanisms, which often leads to repeated errors when executing complex, multi-step tasks. Second, most current systems rely heavily on textual annotations of GUI data, such as accessibility trees. This causes information loss and computational inefficiency, and it also introduces inconsistencies across platforms that reduce flexibility in real deployment scenarios.

Modern approaches to GUI automation use multimodal large language models combined with vision encoders to understand and interact with GUI environments. Efforts such as ILuvUI, CogAgent, and Ferret-UI-anyres have advanced the field by improving GUI understanding, using high-resolution vision encoders, and employing resolution-independent techniques. However, these methods have notable drawbacks, including high computational costs, limited reliance on visual data relative to textual representations, and inadequate reasoning capabilities. These shortcomings constrain real-time performance and the execution of complex action sequences. Without a robust mechanism for hierarchical and reflective reasoning, such systems are also severely limited in their ability to adapt dynamically and correct errors during operation.

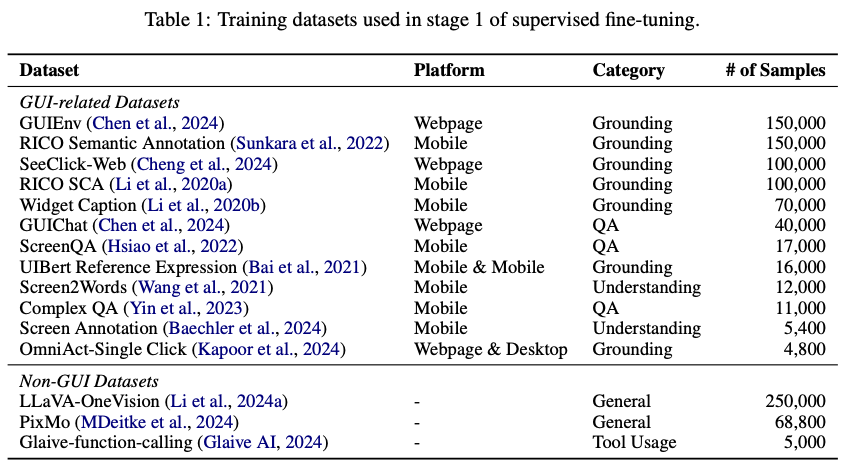

Researchers from Zhejiang University, Dalian University of Technology, Reallm Labs, ByteDance Inc., and Hong Kong Polytechnic University present InfiGUIAgent, a multimodal GUI agent designed to address these limitations. The method builds native reasoning capabilities through a two-stage supervised fine-tuning framework aimed at adaptability and effectiveness. The first stage develops core capabilities using diverse datasets that improve GUI understanding, grounding, and task adaptability. These datasets, including Screen2Words, GUIEnv, and RICO SCA, cover tasks such as semantic interpretation, user interaction modeling, and question answering, giving the agent broad functional knowledge.
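To make the idea of a first-stage training mixture concrete, here is a minimal sketch of sampling examples from several task-specific datasets by weight. Only the dataset names come from the article; the `Sample` fields, the toy records, the task labels, and the mixing weights are hypothetical placeholders, not the paper's actual data or recipe.

```python
import random
from dataclasses import dataclass


@dataclass
class Sample:
    source: str  # dataset the example was drawn from
    task: str    # capability it targets (hypothetical labels)
    prompt: str
    target: str


# Hypothetical toy pools standing in for the real datasets.
POOLS = {
    "Screen2Words": [Sample("Screen2Words", "understanding", "Summarize this screen.", "A login page")],
    "GUIEnv": [Sample("GUIEnv", "grounding", "Where is the Submit button?", "(412, 880)")],
    "RICO SCA": [Sample("RICO SCA", "interaction", "Tap the settings icon.", "click(34, 60)")],
}

# Hypothetical mixing weights; not the proportions used in the paper.
WEIGHTS = {"Screen2Words": 0.3, "GUIEnv": 0.4, "RICO SCA": 0.3}


def sample_mixture(n, seed=0):
    """Draw n training samples, choosing each sample's source dataset by weight."""
    rng = random.Random(seed)
    names = list(WEIGHTS)
    weights = [WEIGHTS[name] for name in names]
    batch = []
    for _ in range(n):
        name = rng.choices(names, weights=weights, k=1)[0]
        batch.append(rng.choice(POOLS[name]))
    return batch


for sample in sample_mixture(5):
    print(sample.source, "->", sample.prompt)
```

Weighted sampling at the dataset level keeps a small but important capability (for example, grounding) from being drowned out by a larger dataset; a fixed seed makes the mixture reproducible.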

In the second stage, advanced reasoning capabilities are incorporated through synthesized trajectory data, supporting hierarchical reasoning and expectation-reflection reasoning. The hierarchical reasoning framework has a two-level architecture: a strategic component focused on task decomposition and a tactical component focused on precise action selection. Expectation-reflection reasoning lets the agent adjust and self-correct by comparing what it expected to happen with what actually happened, improving performance in varied and dynamic contexts. Together, the two stages let the system handle multi-step tasks natively, without textual augmentations, which improves robustness and computational efficiency.
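The interplay between the strategic layer, the tactical layer, and expectation reflection can be illustrated with a toy control loop. This is a minimal sketch under stated assumptions, not InfiGUIAgent's implementation: in the real agent a single model produces the plan, the action, and the reflection as generated text, whereas here `strategic_plan`, `tactical_act`, `toy_env`, and `reflect` are hypothetical stand-ins written as plain functions.

```python
from dataclasses import dataclass


@dataclass
class Step:
    subgoal: str
    action: str
    expectation: str  # outcome the agent predicts before acting


def strategic_plan(task):
    """Strategic layer (toy): split a task into ordered subgoals."""
    return [part.strip() for part in task.split(" then ")]


def tactical_act(subgoal, feedback=None):
    """Tactical layer (toy): choose a concrete action and state its expected result.

    If reflection reported a mismatch, fall back to an alternative action.
    """
    kind = "tap" if feedback is None else "scroll_then_tap"
    return Step(subgoal, f"{kind}:{subgoal}", expectation=f"{subgoal} done")


def toy_env(action, offscreen):
    """Hypothetical environment: a plain tap fails on off-screen targets."""
    kind, target = action.split(":", 1)
    if kind == "tap" and target in offscreen:
        return "screen unchanged"
    return f"{target} done"


def reflect(step, observation):
    """Expectation reflection: did the observed outcome match the prediction?"""
    return observation == step.expectation


def run(task, offscreen, max_attempts=2):
    log = []
    for subgoal in strategic_plan(task):
        feedback = None
        for _ in range(max_attempts):
            step = tactical_act(subgoal, feedback)
            observation = toy_env(step.action, offscreen)
            matched = reflect(step, observation)
            log.append((step.action, matched))
            if matched:
                break
            # Carry the expected-vs-observed gap into the next tactical decision.
            feedback = f"expected {step.expectation!r}, saw {observation!r}"
    return log


print(run("open settings then enable wifi", offscreen={"enable wifi"}))
```

Running it prints `[('tap:open settings', True), ('tap:enable wifi', False), ('scroll_then_tap:enable wifi', True)]`: the first tap on the off-screen toggle fails, the mismatch is detected by comparing expectation with observation, and the next attempt self-corrects.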

InfiGUIAgent was implemented by fine-tuning Qwen2-VL-2B, using ZeRO0 for efficient GPU resource management. A reference-augmented annotation format was used to standardize the datasets and improve their quality, so that GUI elements could be referenced accurately by their spatial location. The curated datasets strengthen GUI understanding, grounding, and question-answering (QA) capabilities for tasks such as semantic interpretation and interaction modeling. Synthesized data was then used to train reasoning, with trajectory-based annotations resembling real-world GUI interactions ensuring broad task coverage. A modular action space design lets the agent respond dynamically across multiple platforms, giving it greater flexibility and applicability.
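A reference-augmented annotation embeds each mentioned GUI element's location directly in the text. The sketch below shows one way such a format could round-trip between annotated and plain text. The `<ref box="x1,y1,x2,y2">label</ref>` syntax and the `ElementRef` type are hypothetical illustrations, not the exact format defined in the paper.

```python
import re
from dataclasses import dataclass

# Hypothetical inline syntax: <ref box="x1,y1,x2,y2">label</ref>
REF_PATTERN = re.compile(r'<ref box="(\d+),(\d+),(\d+),(\d+)">(.*?)</ref>')


@dataclass(frozen=True)
class ElementRef:
    label: str
    box: tuple  # (x1, y1, x2, y2) in screen pixels


def annotate(segments):
    """Join plain-text segments and ElementRefs into one annotated string."""
    parts = []
    for seg in segments:
        if isinstance(seg, ElementRef):
            x1, y1, x2, y2 = seg.box
            parts.append(f'<ref box="{x1},{y1},{x2},{y2}">{seg.label}</ref>')
        else:
            parts.append(seg)
    return "".join(parts)


def parse(annotated):
    """Recover the plain text and the referenced elements, in order."""
    refs = [
        ElementRef(m.group(5), tuple(int(m.group(i)) for i in range(1, 5)))
        for m in REF_PATTERN.finditer(annotated)
    ]
    plain = REF_PATTERN.sub(lambda m: m.group(5), annotated)
    return plain, refs


text = annotate(["Tap ", ElementRef("Submit", (400, 860, 520, 900)), " to finish."])
print(text)
print(parse(text))
```

Keeping coordinates inline, rather than in a separate element list, lets a model learn to emit a description and its grounding in a single pass; the regex parser assumes labels contain no newlines or nested `<ref>` tags.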

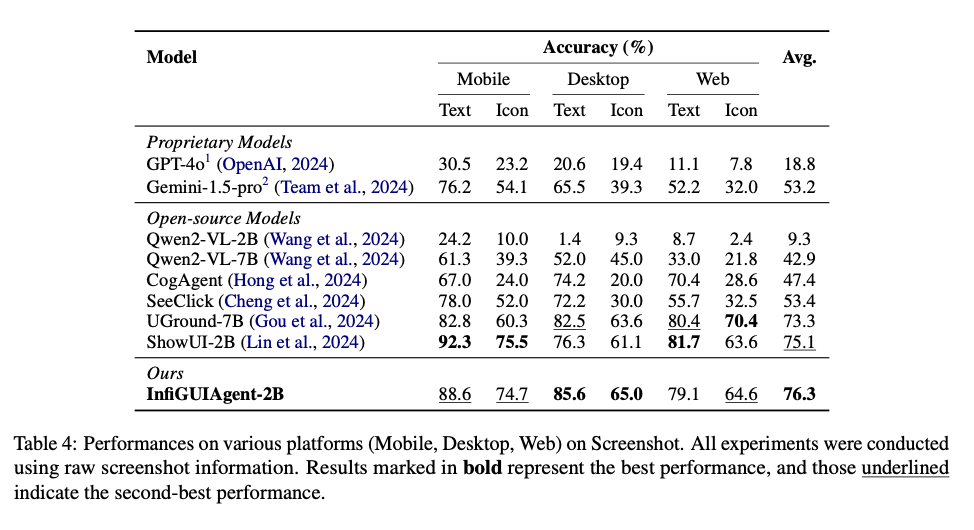

InfiGUIAgent achieved strong results in benchmark tests, outperforming several existing models in both accuracy and adaptability. It reached 76.3% accuracy on the ScreenSpot benchmark, demonstrating strong GUI grounding across mobile, desktop, and web platforms. In the dynamic AndroidWorld environment, the agent achieved a success rate of 0.09, higher than comparable models, including some with larger parameter counts. The results indicate that the system can carry out complex multi-step tasks with precision and adaptability, and they underscore the effectiveness of its hierarchical and reflective reasoning mechanisms.
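For readers unfamiliar with these metrics, the sketch below shows how figures like the ones above are typically computed. As we understand ScreenSpot-style grounding evaluation, a prediction counts as correct when the predicted click point falls inside the target element's bounding box; a success rate such as AndroidWorld's 0.09 is the fraction of tasks completed (about 9%). The function names and sample numbers are hypothetical illustrations, not benchmark data.

```python
def point_in_box(point, box):
    """True if an (x, y) click falls inside an (x1, y1, x2, y2) box, edges included."""
    x, y = point
    x1, y1, x2, y2 = box
    return x1 <= x <= x2 and y1 <= y <= y2


def grounding_accuracy(predicted_points, target_boxes):
    """Fraction of predicted clicks that land inside their target element."""
    if len(predicted_points) != len(target_boxes):
        raise ValueError("need one prediction per target")
    hits = sum(point_in_box(p, b) for p, b in zip(predicted_points, target_boxes))
    return hits / len(target_boxes)


def success_rate(task_outcomes):
    """Fraction of episodes marked successful (1.0 means every task completed)."""
    return sum(task_outcomes) / len(task_outcomes)


preds = [(10, 10), (50, 50), (200, 5)]
boxes = [(0, 0, 20, 20), (0, 0, 40, 40), (150, 0, 250, 30)]
print(grounding_accuracy(preds, boxes))  # 2 of 3 clicks hit their box
print(success_rate([True, False, False, False]))
```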

InfiGUIAgent represents a significant advance in GUI automation, addressing the limitations in reasoning and adaptability that hold back existing tools. Its performance, achieved without any textual augmentation, stems from integrating hierarchical task decomposition and reflective learning into a multimodal framework. These results set a new reference point for developing next-generation GUI agents that can be integrated into real applications for efficient and robust task execution.

Aswin AK is a Consulting Intern at MarkTechPost. He is pursuing a dual degree at the Indian Institute of Technology Kharagpur. He is passionate about data science and machine learning, and brings a strong academic background and practical experience solving real-life interdisciplinary challenges.