Transformer-based language models are central to progress in AI. They interpret and generate human language by predicting sequences of tokens, the core operation behind how they work. Given their wide range of applications, from chatbots to complex decision-making systems, improving their efficiency and accuracy remains an important area of research.

A notable limitation of current language model methods is their reliance on generating either direct answers or intermediate reasoning steps, known as chain-of-thought tokens. These methods assume that adding more tokens that spell out reasoning steps inherently improves the model's problem-solving ability. However, recent empirical evidence suggests that the benefit of these tokens may not correspond directly to improved reasoning, raising questions about how effectively current strategies use tokens.

To address these concerns, researchers at New York University's Center for Data Science have proposed an approach based on 'filler tokens'. These are meaningless symbols, such as a string of dots ('……'), that carry no information about the text itself but serve a different purpose. Placed in the input sequence in place of a chain of thought, filler tokens give the model extra positions in which to carry out computation indirectly, offering a way around the limitations of simple next-token prediction.
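The difference between the answer formats can be illustrated by laying out the token sequences for an immediate answer, a filler-token prompt, and a chain-of-thought prompt. This is a minimal sketch: the function names, the `ANS` answer marker, and the use of `.` as the filler token are illustrative assumptions, not the exact format used in the NYU study.

```python
from typing import List

# Hypothetical layouts for comparing answer strategies.
# "." as the filler token and "ANS" as the answer slot are assumptions
# made for illustration only.
FILLER = "."


def immediate_answer(input_tokens: List[str]) -> List[str]:
    """Input followed directly by the answer slot (no extra positions)."""
    return input_tokens + ["ANS"]


def with_filler(input_tokens: List[str], n_filler: int) -> List[str]:
    """Input, then n_filler meaningless filler tokens, then the answer slot."""
    return input_tokens + [FILLER] * n_filler + ["ANS"]


def with_chain_of_thought(input_tokens: List[str], steps: List[str]) -> List[str]:
    """Input, then intermediate reasoning tokens, then the answer slot."""
    return input_tokens + steps + ["ANS"]


if __name__ == "__main__":
    x = ["12", "47", "91", "53"]
    print(immediate_answer(x))
    print(with_filler(x, 6))
```

The filler layout has the same length as a chain-of-thought layout with the same number of intermediate tokens, but the intermediate tokens carry no information; any benefit must come from the extra computation the model performs at those positions.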

The researchers tested filler tokens on computational tasks that challenge standard transformer models. They showed that transformers can solve more complex tasks when filler tokens are included in the input sequence. The approach taps the latent computational capacity of transformers: the hidden-layer representations at the filler positions perform useful intermediate work, even though the filler tokens themselves are meaningless.
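To see why extra positions can help, consider causal self-attention in a decoder-only transformer. The sketch below is a deliberately simplified single attention head in plain Python, assuming identity query/key/value projections and no positional encodings, MLP layers, or training. `causal_self_attention`, `embed_sequence`, and the filler embedding are hypothetical illustrations, not the study's model. It shows two properties: appending filler tokens leaves the hidden states of the original input positions unchanged, and each filler position gets its own hidden state computed from the entire input, which a trained model could use for intermediate work.

```python
import math
import random
from typing import List

Vector = List[float]


def causal_self_attention(xs: List[Vector]) -> List[Vector]:
    """Single attention head with identity Q/K/V projections and a causal mask.

    Position i attends only to positions 0..i, as in a decoder-only transformer.
    (Simplifying assumption: no learned weights, no positional encodings.)
    """
    d = len(xs[0])
    outputs: List[Vector] = []
    for i, query in enumerate(xs):
        # Scaled dot-product scores against the visible prefix only.
        scores = [sum(q * k for q, k in zip(query, xs[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        # Numerically stable softmax over the prefix.
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        # Weighted average of the visible value vectors.
        outputs.append([sum(w * xs[j][k] for j, w in enumerate(weights)) / total
                        for k in range(d)])
    return outputs


def embed_sequence(inputs: List[Vector], n_filler: int, filler: Vector) -> List[Vector]:
    """Append n_filler copies of a fixed (hypothetical) filler embedding."""
    return inputs + [list(filler) for _ in range(n_filler)]


if __name__ == "__main__":
    rng = random.Random(0)
    inputs = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(5)]
    filler = [0.1, 0.1, 0.1, 0.1]  # hypothetical embedding for "."

    plain = causal_self_attention(embed_sequence(inputs, 0, filler))
    padded = causal_self_attention(embed_sequence(inputs, 8, filler))

    # Appending filler never changes the states of earlier positions...
    print(plain == padded[:len(inputs)])  # True
    # ...but adds 8 new positions whose states depend on the whole input.
    print(len(padded) - len(plain))  # 8
```

In a real multi-layer model, the states at filler positions feed into later layers at the answer position, so the filler positions act as extra parallel computation slots rather than as carriers of information.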

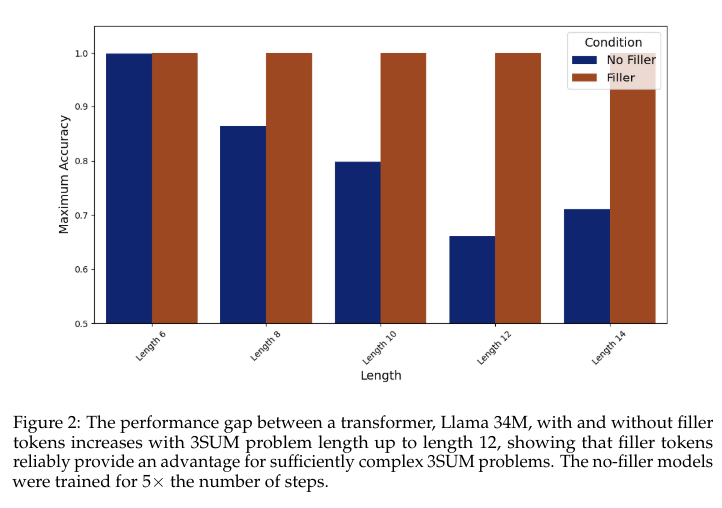

Detailed analysis shows that filler tokens allow transformers to solve complex algorithmic problems, such as the 3SUM problem, with high accuracy. For example, transformers given filler tokens achieved perfect accuracy on 3SUM instances with input lengths up to 12, a significant computational advantage over models operating without filler tokens.
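For concreteness, here is a brute-force checker for one 3SUM variant. It assumes, for illustration only, that inputs are integer vectors and that a "zero sum" means three entries at distinct positions whose coordinates each sum to 0 modulo 10; the study's exact task specification may differ. `has_3sum`, `random_instance`, and the modulus are hypothetical names and choices, and `dim` controls the dimensionality of each entry.

```python
import random
from itertools import combinations
from typing import List, Tuple

Vec = Tuple[int, ...]
MOD = 10  # assumed modulus for this illustrative variant


def has_3sum(xs: List[Vec], mod: int = MOD) -> bool:
    """Return True if three entries at distinct positions sum to zero
    (mod `mod`) in every coordinate. Brute force over all O(n^3) triples."""
    for a, b, c in combinations(xs, 3):
        if all((p + q + r) % mod == 0 for p, q, r in zip(a, b, c)):
            return True
    return False


def random_instance(n: int, dim: int, rng: random.Random, mod: int = MOD) -> List[Vec]:
    """Sample n vectors of length `dim` with entries in [0, mod)."""
    return [tuple(rng.randrange(mod) for _ in range(dim)) for _ in range(n)]


if __name__ == "__main__":
    print(has_3sum([(1, 2), (3, 4), (6, 4)]))  # True: (1+3+6, 2+4+4) = (10, 10)
    print(has_3sum([(1,), (2,), (3,)]))        # False: 1+2+3 = 6
    rng = random.Random(0)
    instance = random_instance(12, 3, rng)
    print(instance, has_3sum(instance))
```

For an input of length 12, the checker examines C(12, 3) = 220 triples. A model answering immediately must produce the same yes/no answer at the answer position with no intermediate positions to work in, which is where the extra positions provided by filler tokens can help.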

The research quantifies the gains from filler tokens. Models trained with them outperformed immediate-answer models, which must output the answer without any intermediate tokens, and solved more complex tasks. In particular, filler tokens consistently improved performance when the input involved higher-dimensional data, yielding accuracies near 100% on tasks that models without filler tokens failed to solve.

In conclusion, the study shows that some limitations of standard transformer models can be overcome by inserting meaningless filler tokens into their input sequences. The method does not depend on the content of the intermediate tokens, yet it substantially improves the models' computational capabilities. With filler tokens, the researchers improved transformer performance on complex tasks such as the 3SUM problem, reaching near-perfect accuracy. These results point to a promising direction for improving the problem-solving abilities of AI and suggest a potential shift in how computation is allocated within language models.

Check out the Paper. All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and a dual degree student at IIT Madras, is passionate about applying technology and artificial intelligence to real-world challenges. With a strong interest in solving practical problems, she brings a fresh perspective to the intersection of AI and real-life solutions.
