Amazon Rekognition makes it easy to add image and video analysis to your applications. It's based on the same proven, highly scalable deep learning technology developed by Amazon's computer vision scientists to analyze billions of images and videos daily. It requires no machine learning (ML) expertise to use, and we continually add new computer vision features to the service. Amazon Rekognition includes a simple, easy-to-use API that can quickly analyze any image or video file stored in Amazon Simple Storage Service (Amazon S3).

Customers in industries such as advertising and marketing technology, gaming, media, and retail and e-commerce rely on images uploaded by their end users (user-generated content, or UGC) as a critical component for driving engagement on their platforms. They use Amazon Rekognition Content Moderation to detect inappropriate, unwanted, and offensive content, protecting their brand reputation and fostering safe user communities.

In this post, we will discuss the following:

- Content moderation model version 7.0 and capabilities

- How bulk analysis for Amazon Rekognition Content Moderation works

- How to improve content moderation predictions with bulk analysis and custom moderation

Content moderation model version 7.0 and capabilities

Amazon Rekognition Content Moderation version 7.0 adds 26 new moderation labels and expands the moderation label taxonomy from two levels to three. The new labels and expanded taxonomy let customers detect fine-grained concepts in the content they want to moderate. Additionally, the updated model can identify two new content types, animated and illustrated content. This allows customers to create granular rules that include or exclude these content types from their moderation workflow. With these updates, customers can moderate content according to their content policy more accurately.

Let's look at an example of moderation label detection for the following image.

The following tables show the moderation labels, content type, and confidence scores returned in the API response.

| Moderation labels | Taxonomy level | Confidence score |
| --- | --- | --- |
| Violence | L1 | 92.6% |
| Graphic Violence | L2 | 92.6% |
| Explosions and Blasts | L3 | 92.6% |

| Content types | Confidence score |
| --- | --- |
| Illustrated | 93.9% |

For the complete taxonomy for content moderation version 7.0, visit our developer guide.
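To illustrate how the three-level taxonomy and content types could feed a granular rule, here is a minimal Python sketch. It assumes a response shaped like the `DetectModerationLabels` output (`ModerationLabels` and `ContentTypes` lists); the `should_flag` helper, the blocklist, and the 80% threshold are hypothetical policy choices, not part of the service.

```python
def should_flag(response: dict,
                blocked_labels: set,
                min_confidence: float = 80.0,
                ignore_content_types: frozenset = frozenset()) -> bool:
    """Return True when an image should be flagged under this hypothetical policy."""
    # Skip images whose detected content type is excluded from moderation.
    for content_type in response.get("ContentTypes", []):
        if (content_type["Name"] in ignore_content_types
                and content_type["Confidence"] >= min_confidence):
            return False
    # Flag when any label, at any taxonomy level, is on the blocklist.
    return any(
        label["Name"] in blocked_labels and label["Confidence"] >= min_confidence
        for label in response.get("ModerationLabels", [])
    )


# Response trimmed to the values shown in the example tables.
example_response = {
    "ModerationLabels": [
        {"Name": "Violence", "ParentName": "",
         "TaxonomyLevel": 1, "Confidence": 92.6},
        {"Name": "Graphic Violence", "ParentName": "Violence",
         "TaxonomyLevel": 2, "Confidence": 92.6},
        {"Name": "Explosions and Blasts", "ParentName": "Graphic Violence",
         "TaxonomyLevel": 3, "Confidence": 92.6},
    ],
    "ContentTypes": [{"Name": "Illustrated", "Confidence": 93.9}],
}

flag_all = should_flag(example_response, {"Graphic Violence"})
flag_skip_illustrated = should_flag(
    example_response, {"Graphic Violence"},
    ignore_content_types=frozenset({"Illustrated"}),
)
```

Blocking at L2 (`Graphic Violence`) rather than L1 lets a policy allow other kinds of violence while still catching this category, and the content type check shows how illustrated content could be routed differently from photographs.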

Bulk analysis for content moderation

In addition to real-time moderation, Amazon Rekognition Content Moderation provides batch image moderation through Amazon Rekognition Bulk Analysis. It lets you analyze large image collections asynchronously to detect inappropriate content and get insights into the moderation categories assigned to the images. It also removes the need for customers to build their own batch image moderation solution.

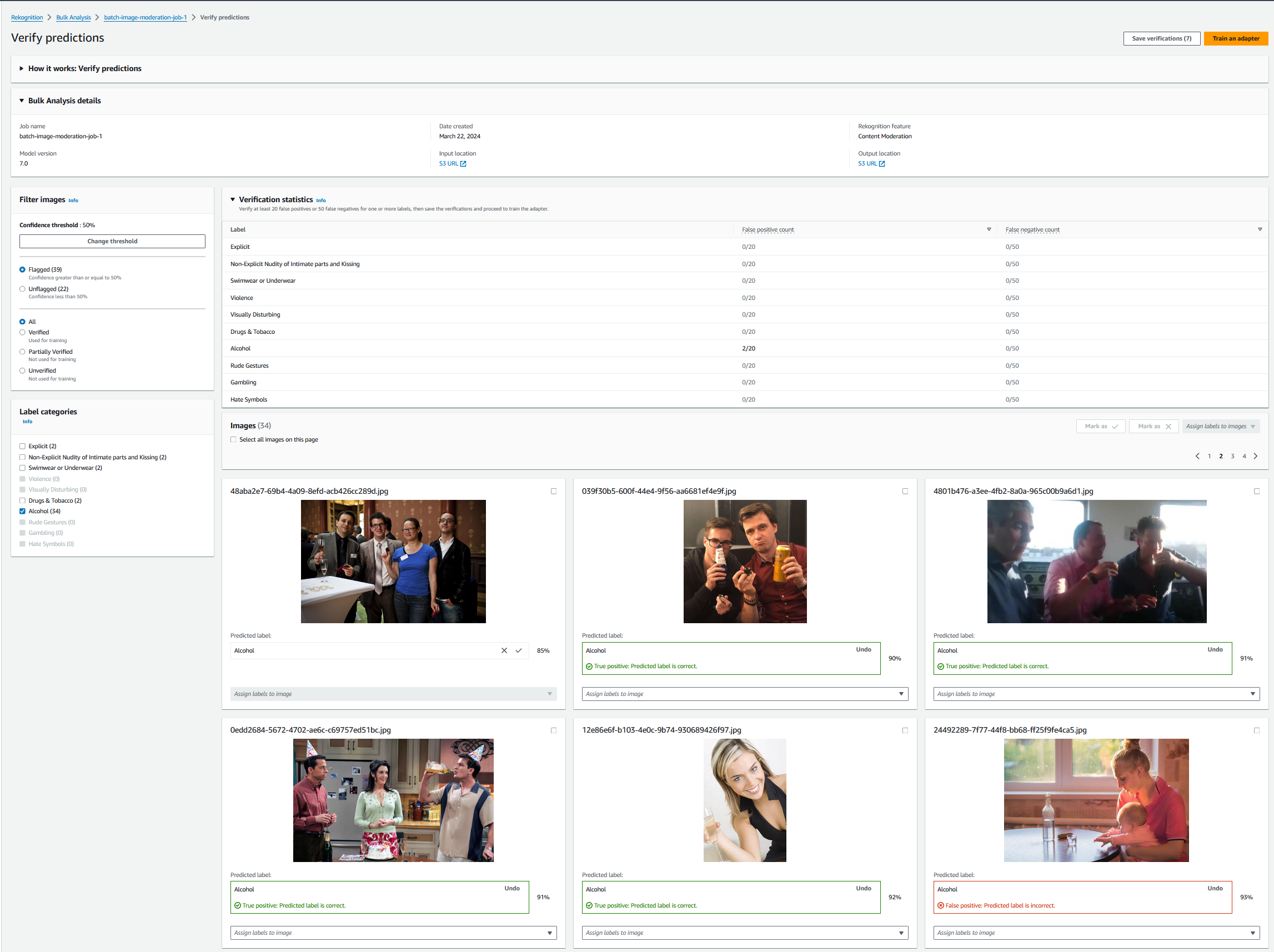

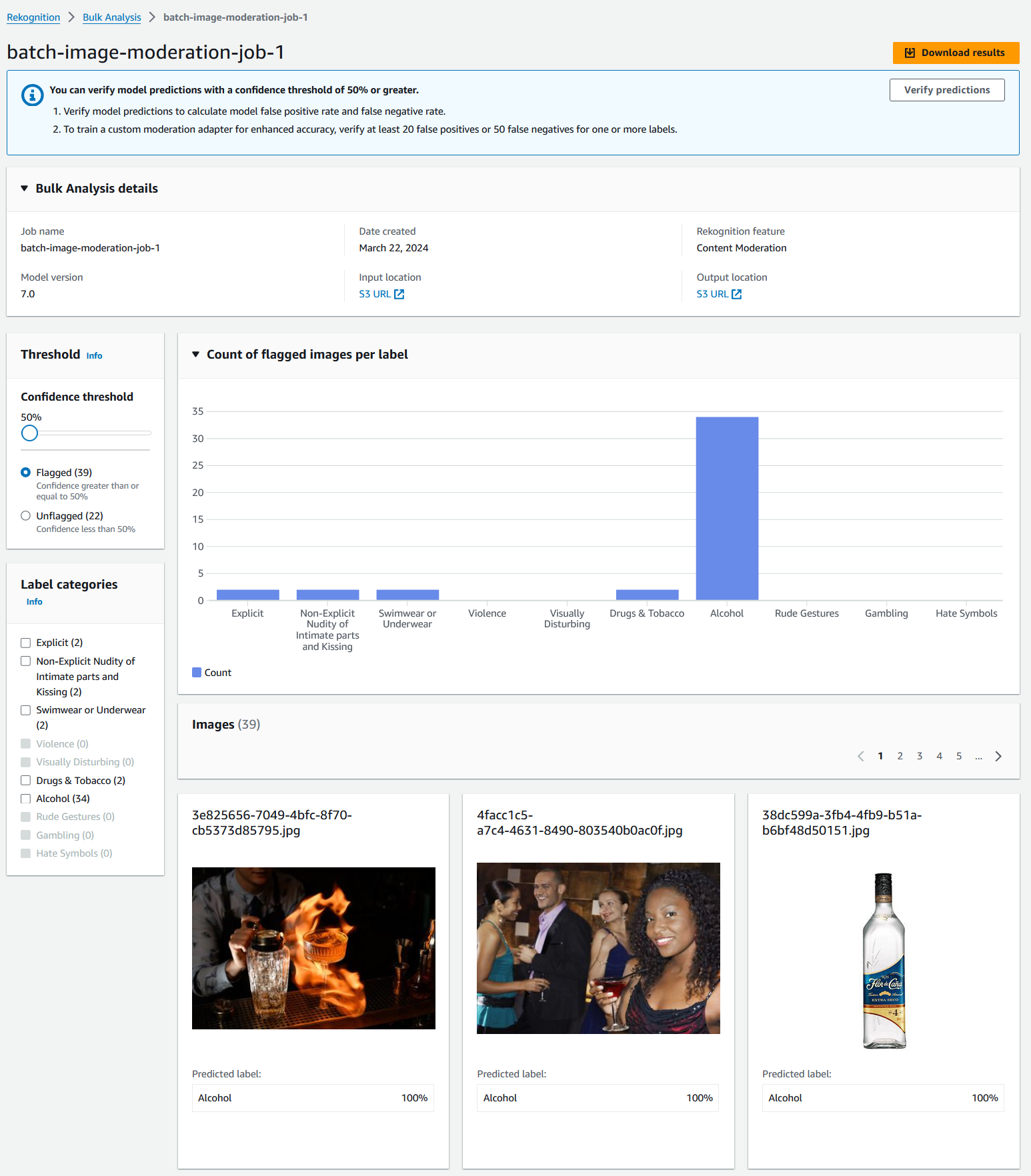

You can access the bulk analysis feature through the Amazon Rekognition console or by calling the APIs directly using the AWS CLI and AWS SDKs. On the Amazon Rekognition console, you can upload the images you want to analyze and get results with a few clicks. When the bulk analysis job is complete, you can identify and view the moderation label predictions, such as Explicit, Non-Explicit Nudity of Intimate parts and Kissing, Violence, Drugs & Tobacco, and more. You also receive a confidence score for each label category.

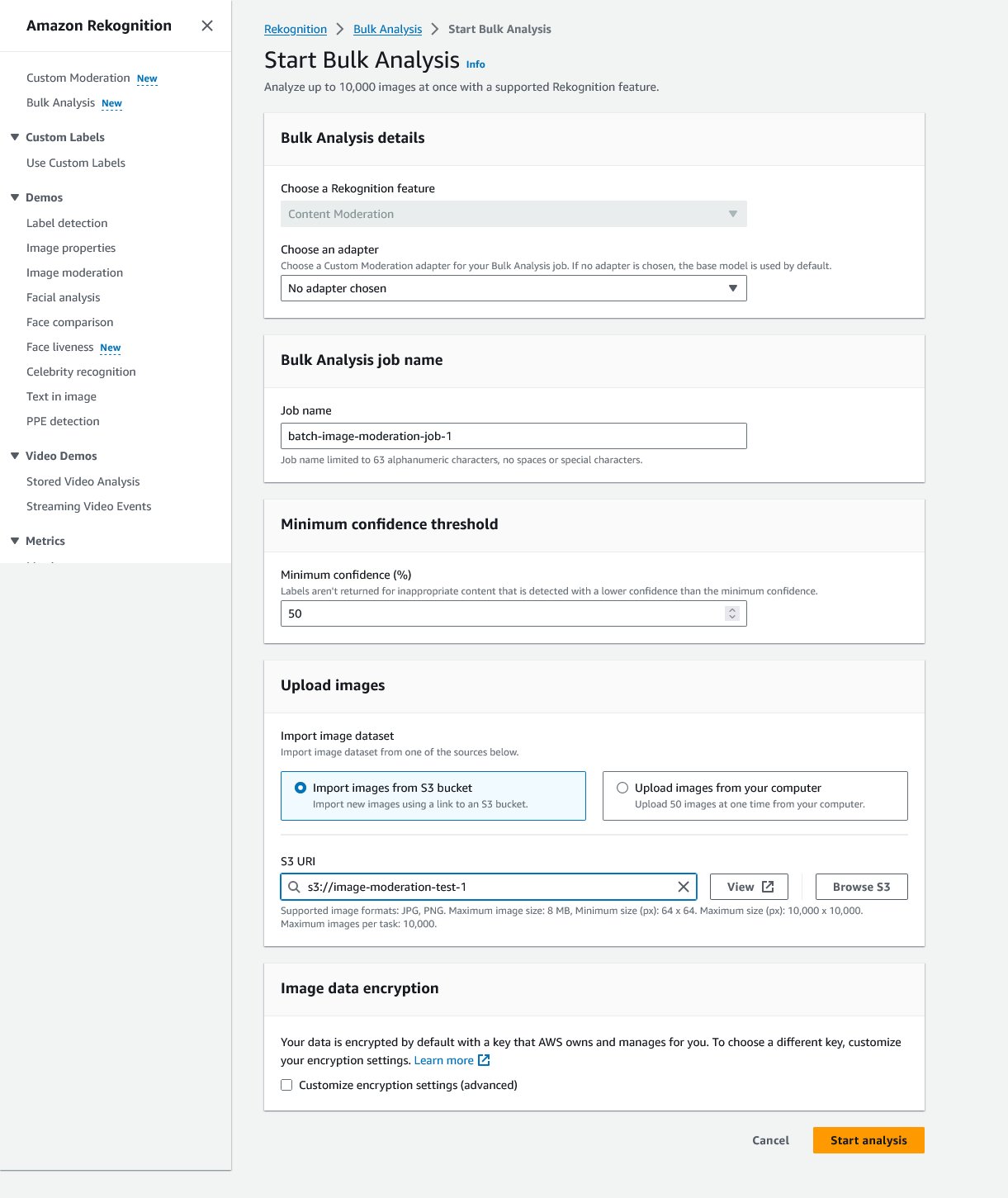

Create a bulk analysis job on the Amazon Rekognition console

Complete the following steps to try Amazon Rekognition bulk analysis:

- On the Amazon Rekognition console, choose Bulk analysis in the navigation pane.

- Choose Start bulk analysis.

- Enter a job name and specify images to analyze, either by entering an S3 bucket location or by uploading images from your computer.

- Optionally, select an adapter to analyze the images with a custom adapter that you trained using Custom Moderation.

- Choose Start analysis to run the job.

When the process is complete, you can see the results on the Amazon Rekognition console. Additionally, a JSON copy of the analysis results is stored in the Amazon S3 output location.

Amazon Rekognition Bulk Analysis API request

In this section, we guide you through creating a bulk analysis job for image moderation programmatically. If your image files aren't already in an S3 bucket, upload them so that Amazon Rekognition can access them. As when you create a bulk analysis job on the Amazon Rekognition console, when you invoke the StartMediaAnalysisJob API, you must provide the following parameters:

- OperationsConfig – These are the configuration options for the media analysis job to be created:

  - MinConfidence – The minimum confidence level, with a valid range of 0 to 100, for the moderation labels to return. Amazon Rekognition doesn't return any labels with a confidence level lower than this value.

- Input – This includes the following:

  - S3Object – The S3 object information for the input manifest file, including the bucket and the name of the file. The input file includes one JSON line for each image stored in the S3 bucket. For example:

{"source-ref": "s3://MY-INPUT-BUCKET/1.jpg"}

- OutputConfig – This includes the following:

  - S3Bucket – The name of the S3 bucket for the output files.

  - S3KeyPrefix – The key prefix for the output files.
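The input manifest described above can be generated programmatically. The following minimal Python sketch builds one JSON line per image in the `source-ref` format shown earlier; the `build_manifest` helper and the second image key are hypothetical.

```python
import json


def build_manifest(bucket: str, keys: list) -> str:
    """Return manifest text with one JSON line per image key."""
    lines = [json.dumps({"source-ref": f"s3://{bucket}/{key}"}) for key in keys]
    return "\n".join(lines) + "\n"


# Placeholder bucket from the example above; "2.jpg" is a hypothetical key.
manifest_text = build_manifest("MY-INPUT-BUCKET", ["1.jpg", "2.jpg"])
print(manifest_text, end="")
```

The resulting text would then need to be uploaded to Amazon S3 (for example, with the SDK's `put_object` call) so that the job's Input parameter can point at it.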

See the following code:
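The following is a minimal Python sketch of such a request, not a definitive implementation. It assumes the boto3 SDK with configured credentials; the job name, manifest key, output bucket, key prefix, and Region are hypothetical placeholders, and the two helper functions are illustrative.

```python
def build_job_request(job_name, manifest_bucket, manifest_key,
                      output_bucket, output_prefix, min_confidence=50.0):
    """Assemble the StartMediaAnalysisJob parameters described above."""
    if not 0 <= min_confidence <= 100:
        raise ValueError("min_confidence must be between 0 and 100")
    return {
        "JobName": job_name,
        "OperationsConfig": {
            "DetectModerationLabels": {"MinConfidence": min_confidence}
        },
        "Input": {
            "S3Object": {"Bucket": manifest_bucket, "Name": manifest_key}
        },
        "OutputConfig": {
            "S3Bucket": output_bucket,
            "S3KeyPrefix": output_prefix,
        },
    }


def start_job(request, region_name="us-east-1"):
    """Submit the request and return the job ID (needs boto3 and credentials)."""
    import boto3  # imported lazily so the builder works without the SDK

    client = boto3.client("rekognition", region_name=region_name)
    response = client.start_media_analysis_job(**request)
    return response["JobId"]


# All values below are placeholders for illustration.
request = build_job_request(
    job_name="bulk-moderation-demo",
    manifest_bucket="MY-INPUT-BUCKET",
    manifest_key="input.jsonl",
    output_bucket="MY-OUTPUT-BUCKET",
    output_prefix="moderation-results/",
)
# job_id = start_job(request)  # uncomment to call the service
```

Keeping the parameter assembly separate from the service call makes the request easy to inspect or log before any job is started.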

You can also start the same media analysis job from the AWS CLI with the start-media-analysis-job command.

Amazon Rekognition Bulk Analysis API results

To get a list of bulk analysis jobs, you can use ListMediaAnalysisJobs. The response includes all the details about the analysis job's input and output files and the status of the job.
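As a rough illustration of working with that response, the following Python sketch reduces each job to its ID, status, and results location. It assumes the boto3 SDK and a response with a `MediaAnalysisJobs` list whose entries carry `JobId`, `Status`, and `Results.S3Object`; the sample values and both helper functions are hypothetical.

```python
def summarize_jobs(response: dict) -> list:
    """Reduce a ListMediaAnalysisJobs response page to a few key fields."""
    summaries = []
    for job in response.get("MediaAnalysisJobs", []):
        results = job.get("Results", {}).get("S3Object", {})
        summaries.append({
            "job_id": job["JobId"],
            "status": job["Status"],
            # Results are only present once a job has produced output.
            "results_uri": (f"s3://{results['Bucket']}/{results['Name']}"
                            if results else None),
        })
    return summaries


def list_jobs(region_name="us-east-1"):
    """Fetch all pages from the service (needs boto3 and credentials)."""
    import boto3  # imported lazily so summarize_jobs works without the SDK

    client = boto3.client("rekognition", region_name=region_name)
    jobs, token = [], None
    while True:
        kwargs = {"NextToken": token} if token else {}
        page = client.list_media_analysis_jobs(**kwargs)
        jobs.extend(summarize_jobs(page))
        token = page.get("NextToken")
        if not token:
            return jobs


# Hypothetical response page, trimmed to the fields used above.
sample_page = {
    "MediaAnalysisJobs": [
        {"JobId": "job-1", "Status": "SUCCEEDED",
         "Results": {"S3Object": {"Bucket": "MY-OUTPUT-BUCKET",
                                  "Name": "moderation-results/results.json"}}},
        {"JobId": "job-2", "Status": "IN_PROGRESS"},
    ]
}
job_summaries = summarize_jobs(sample_page)
```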

You can also invoke the list-media-analysis-jobs command via the AWS CLI.

Amazon Rekognition Bulk Analysis generates two output files in the output bucket. The first file is manifest-summary.json, which includes bulk analysis job statistics and a list of errors.

The second file is results.json, which includes one JSON line for each analyzed image. Each result includes the top-level category (L1) of a detected label and the label's second-level category (L2), with a confidence score between 1 and 100. Some Taxonomy Level 2 labels may have Taxonomy Level 3 labels (L3). This allows for hierarchical classification of the content.
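To show how the per-image results might be consumed, here is a minimal Python sketch that collects the L1 categories for each image. The record layout is an assumption: it presumes each JSON line carries a `source-ref` key plus a `detect-moderation-labels` object containing a `ModerationLabels` list with `Name`, `TaxonomyLevel`, and `Confidence` fields. The sample lines and the `top_level_categories` helper are hypothetical.

```python
import json


def top_level_categories(results_text: str, min_confidence: float = 50.0) -> dict:
    """Map each image URI to the sorted names of its L1 moderation labels."""
    flagged = {}
    for line in results_text.splitlines():
        if not line.strip():
            continue  # tolerate blank lines
        record = json.loads(line)
        labels = (record.get("detect-moderation-labels", {})
                        .get("ModerationLabels", []))
        flagged[record["source-ref"]] = sorted(
            label["Name"] for label in labels
            if label["TaxonomyLevel"] == 1
            and label["Confidence"] >= min_confidence
        )
    return flagged


# Two hypothetical result lines in the assumed layout.
sample_results = "\n".join([
    json.dumps({
        "source-ref": "s3://MY-INPUT-BUCKET/1.jpg",
        "detect-moderation-labels": {"ModerationLabels": [
            {"Name": "Violence", "ParentName": "",
             "TaxonomyLevel": 1, "Confidence": 92.6},
            {"Name": "Graphic Violence", "ParentName": "Violence",
             "TaxonomyLevel": 2, "Confidence": 92.6},
        ]},
    }),
    json.dumps({
        "source-ref": "s3://MY-INPUT-BUCKET/2.jpg",
        "detect-moderation-labels": {"ModerationLabels": []},
    }),
])
categories = top_level_categories(sample_results)
```

Images that map to an empty list had no L1 label at or above the threshold, so a workflow could approve them automatically and send the rest to human review.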

You can later use a custom moderation adapter to analyze your images, either by selecting the adapter when you create a new bulk analysis job on the console or by passing the adapter's unique ID through the API.
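As a hedged sketch of the API route, the adapter can be referenced in the job's operations configuration. The example below assumes the adapter ID is supplied through the `ProjectVersion` field of the `DetectModerationLabels` settings; the `operations_config` helper and the `MY-ADAPTER-ID` value are hypothetical placeholders.

```python
def operations_config(min_confidence: float, adapter_id: str = None) -> dict:
    """Build OperationsConfig, optionally pointing at a custom adapter."""
    settings = {"MinConfidence": min_confidence}
    if adapter_id:
        # Assumption: the adapter is identified via ProjectVersion.
        settings["ProjectVersion"] = adapter_id
    return {"DetectModerationLabels": settings}


base_config = operations_config(50.0)
adapter_config = operations_config(50.0, adapter_id="MY-ADAPTER-ID")
```

Omitting the adapter leaves the configuration unchanged, so the same code path can serve both the base model and a custom adapter.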

Summary

In this post, we provided an overview of Content Moderation version 7.0, bulk analysis for content moderation, and how to improve content moderation predictions using bulk analysis and custom moderation. To try the new moderation labels and bulk analysis, sign in to your AWS account and check the Amazon Rekognition console for image moderation and bulk analysis.

About the authors

Mehdy Haghy is a Senior Solutions Architect on the AWS WWCS team, specializing in AI and ML at AWS. He works with enterprise customers, helping them migrate, modernize, and optimize their workloads for the AWS Cloud. In his free time, he likes to cook Persian food and tinker with electronics.

Shipra Kanoria is a Principal Product Manager at AWS. She is passionate about helping customers solve their most complex problems with the power of machine learning and artificial intelligence. Prior to joining AWS, Shipra spent over 4 years at Amazon Alexa, where she launched many productivity-related features in the Alexa voice assistant.

Maria Handoko is a Senior Product Manager at AWS. She focuses on helping customers solve their business challenges using machine learning and computer vision. In her free time, she enjoys hiking, listening to podcasts, and exploring different cuisines.
