Andrew Ng recently released AISuite, an open-source Python package designed to simplify the use of large language models (LLMs) across multiple providers. It hides the differences between vendor APIs and lets you switch models by changing a single “provider:model” string. By reducing integration overhead, AISuite improves flexibility and speeds up application development, which makes it a useful resource for developers navigating the fast-moving AI landscape. In this article, we will see how effective it is.

What is AISuite?

AISuite is an open-source project led by Andrew Ng, designed to make working with multiple large language model (LLM) providers easier and more efficient. Available on GitHub, it provides a simple, unified interface, modeled on the OpenAI interface, that lets you switch between LLMs served through HTTP endpoints or SDKs. This makes it well suited to students, educators, and developers who want consistent, hassle-free interactions across providers.

Supported by a team of open-source contributors, AISuite bridges the gap between different LLM frameworks. It lets users integrate and compare models from providers such as OpenAI, Anthropic, and Meta (Llama) with ease. The tool simplifies tasks such as generating text, performing analysis, and building interactive systems. With streamlined API key management, customizable client settings, and an intuitive setup, AISuite supports both simple applications and complex LLM-based projects.

AISuite Implementation

1. Install the necessary libraries

!pip install openai
!pip install "aisuite[all]"

- !pip install openai: Installs the OpenAI Python library, which is required to interact with OpenAI GPT models.

- !pip install "aisuite[all]": Installs AISuite along with the optional dependencies needed to support multiple LLM providers.
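To confirm that the installation worked, a quick check with the standard library's importlib.metadata can report which package versions are present. This is a minimal sketch; the helper name installed_versions is hypothetical, and note that the [all] extra is not a separate package, so only the base name aisuite is looked up.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            # Not installed in the current environment
            found[name] = None
    return found


print(installed_versions(["aisuite", "openai"]))
```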

2. Set API keys for authentication

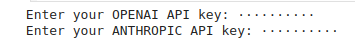

import os
from getpass import getpass

os.environ['OPENAI_API_KEY'] = getpass('Enter your OPENAI API key: ')
os.environ['ANTHROPIC_API_KEY'] = getpass('Enter your ANTHROPIC API key: ')

- os.environ: Sets environment variables that hold the API keys required to access the LLM services.

- getpass(): Prompts the user to enter their OpenAI and Anthropic API keys securely (the input is not echoed).

- These keys authenticate your requests with the respective platforms.
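When you rerun a notebook, you may not want to retype keys that are already set. The sketch below prompts only for missing keys, assuming (as above) that they live in environment variables. The names ensure_api_key and prompt_fn are hypothetical and are not part of AISuite; prompt_fn simply lets you swap getpass for something else when testing.

```python
import os
from getpass import getpass


def ensure_api_key(var_name, prompt_fn=getpass):
    """Return the key stored in var_name, prompting only if it is not already set."""
    value = os.environ.get(var_name)
    if not value:
        # Hidden prompt, like the getpass() calls above
        value = prompt_fn(f"Enter your {var_name}: ")
        os.environ[var_name] = value
    return value


# Usage in a notebook (prompts only for keys that are missing):
# ensure_api_key("OPENAI_API_KEY")
# ensure_api_key("ANTHROPIC_API_KEY")
```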


3. Initialize the AISuite client

import aisuite as ai

client = ai.Client()

This initializes an AISuite client, which lets you interact with multiple LLMs through one standardized interface.

4. Define the messages

messages = [
    {"role": "system", "content": "Talk using Pirate English."},
    {"role": "user", "content": "Tell a joke in 1 line."}
]

- The messages list defines the conversation input:

- role: “system”: Gives instructions to the model (here, “Talk using Pirate English”).

- role: “user”: Represents the user's query (here, “Tell a joke in 1 line”).

- Together, these prompts make the model reply with a one-line joke in pirate style.
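If you build many conversations, the same list can come from a tiny helper. This is a sketch only; build_messages is a hypothetical name, and it simply produces the OpenAI-style list of role/content dictionaries shown above.

```python
def build_messages(user_prompt, system_prompt=None):
    """Build an OpenAI-style list of chat messages."""
    messages = []
    if system_prompt:
        # The system message sets behavior and tone for the whole conversation
        messages.append({"role": "system", "content": system_prompt})
    # The user message carries the actual query
    messages.append({"role": "user", "content": user_prompt})
    return messages


messages = build_messages("Tell a joke in 1 line.", "Talk using Pirate English.")
print(messages)
```

Keeping the system prompt optional means the same helper also covers plain single-turn queries.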

5. Query the OpenAI model

response = client.chat.completions.create(model="openai:gpt-4o", messages=messages, temperature=0.75)
print(response.choices[0].message.content)

- model="openai:gpt-4o": Specifies the OpenAI GPT-4o model.

- messages=messages: Sends the previously defined messages to the model.

- temperature=0.75: Controls the randomness of the response. Higher values produce more varied, creative output, while lower values produce more deterministic responses.

- response.choices[0].message.content: Extracts the text content from the model's response.
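To build intuition for what temperature does, the sketch below applies temperature-scaled softmax to a few made-up token scores. It is a conceptual illustration of the standard technique, not a description of how any particular provider implements sampling internally; the scores are invented.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by temperature (must be > 0)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # invented scores for three candidate tokens
for t in (0.25, 0.75, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At low temperature almost all probability lands on the top-scoring token (more deterministic); at high temperature the distribution flattens, so less likely tokens get sampled more often (more varied).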

6. Query the Anthropic model

response = client.chat.completions.create(model="anthropic:claude-3-5-sonnet-20241022", messages=messages, temperature=0.75)
print(response.choices[0].message.content)

- model="anthropic:claude-3-5-sonnet-20241022": Specifies Anthropic's Claude 3.5 Sonnet model.

- The remaining parameters are identical to the OpenAI query, which shows how AISuite lets you switch providers simply by changing the model parameter.
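Because only the model string changes, the provider switch can be wrapped in a loop. This sketch assumes only that the client exposes client.chat.completions.create(...) and returns responses shaped like response.choices[0].message.content, as in the examples above. The function compare_providers and the fake client are hypothetical; the fake exists only so the loop can run without API keys.

```python
from types import SimpleNamespace


def compare_providers(client, model_ids, messages, **kwargs):
    """Send the same messages to several "provider:model" strings and collect the text."""
    answers = {}
    for model_id in model_ids:
        response = client.chat.completions.create(model=model_id, messages=messages, **kwargs)
        answers[model_id] = response.choices[0].message.content
    return answers


# Offline demo: a fake client that mimics the response shape.
# With real keys, pass ai.Client() instead of fake_client.
def _fake_create(model, messages, **kwargs):
    text = f"[{model}] reply to: {messages[-1]['content']}"
    return SimpleNamespace(choices=[SimpleNamespace(message=SimpleNamespace(content=text))])


fake_client = SimpleNamespace(chat=SimpleNamespace(completions=SimpleNamespace(create=_fake_create)))

demo = compare_providers(
    fake_client,
    ["openai:gpt-4o", "anthropic:claude-3-5-sonnet-20241022"],
    [{"role": "user", "content": "Tell a joke in 1 line."}],
    temperature=0.75,
)
for model_id, text in demo.items():
    print(model_id, "->", text)
```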

7. Query the Ollama model

response = client.chat.completions.create(model="ollama:llama3.1:8b", messages=messages, temperature=0.75)
print(response.choices[0].message.content)

- model="ollama:llama3.1:8b": Specifies the Llama 3.1 8B model served through Ollama (this requires a running Ollama server with the model pulled).

- Again, the parameters and logic are the same, showing how AISuite provides a unified interface across providers.
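Note that the Ollama identifier contains a second colon (llama3.1:8b), so a “provider:model” string has to be split at the first colon only. The sketch below illustrates that idea; split_model_string is a hypothetical helper and is not claimed to be AISuite's internal code.

```python
def split_model_string(model):
    """Split a "provider:model" string at the FIRST colon only."""
    if ":" not in model:
        raise ValueError(f"Expected 'provider:model', got {model!r}")
    provider, model_name = model.split(":", 1)
    return provider, model_name


for m in ("openai:gpt-4o", "anthropic:claude-3-5-sonnet-20241022", "ollama:llama3.1:8b"):
    print(split_model_string(m))
```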

Output

Why did the pirate go to school? To improve his "arrrrrrr-ticulation"!

Arrr, why don't pirates take a shower before they walk the plank? Because they'll just wash up on shore later!

Why did the scurvy dog's parrot go to the doctor? Because it had a fowl temper, savvy?

Create a chat completion

!pip install openai
!pip install "aisuite[all]"

import os
from getpass import getpass

import aisuite as ai

# Store the OpenAI key in an environment variable
os.environ['OPENAI_API_KEY'] = getpass('Enter your OPENAI API key: ')

client = ai.Client()

# Provider and model are combined into one "provider:model" string
provider = "openai"
model_id = "gpt-4o"

messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Give me a tabular comparison of RAG and AGENTIC RAG"},
]

response = client.chat.completions.create(
    model=f"{provider}:{model_id}",
    messages=messages,
)

print(response.choices[0].message.content)

Output

Certainly! Below is a tabular comparison of Retrieval-Augmented Generation (RAG) and Agentic RAG.

| Feature | RAG | Agentic RAG |
|------------------------|-------------------------------------------------|---------------------------------------------------|
| Definition | A framework that combines retrieval from external documents with generation. | An extension of RAG that incorporates actions based on external interactions and dynamic decision-making. |
| Components | - Retrieval System (e.g., a search engine or document database)<br>- Generator (e.g., a language model) | - Retrieval System<br>- Generator<br>- Agentic Layer (action-taking and interaction controller) |
| Functionality | Retrieves relevant documents and generates responses based on prompted inputs combined with the retrieved information. | Adds the capability to take actions based on interactions, such as interacting with APIs, controlling devices, or dynamically gathering more information. |
| Use Cases | - Knowledge-based question answering<br>- Content summarization<br>- Open-domain dialogue systems | - Autonomous agents<br>- Interactive systems<br>- Decision-making applications<br>- Systems requiring context-based actions |
| Interaction | Limited to the input retrieval and output generation cycle. | Can interact with external systems or interfaces to gather data, execute tasks, and alter the environment based on objective functions. |
| Complexity | Generally simpler as it combines retrieval with generation without taking actions beyond generating text. | More complex due to its ability to interact with and modify the state of external environments. |
| Example of Application | Answering complex questions by retrieving parts of documents and synthesizing them into coherent answers. | Implementing a virtual assistant capable of performing tasks like scheduling appointments by accessing calendars, or a chatbot that manages customer service queries through actions. |
| Flexibility | Limited to the available retrieval corpus and generation model capabilities. | More flexible due to action-oriented interactions that can adapt to dynamic environments and conditions. |
| Decision-Making Ability | Limited decision-making based on static retrieval and generation. | Enhanced decision-making through dynamic interaction and adaptive behavior. |

This comparison outlines the foundational differences and capabilities between traditional RAG systems and the more advanced, interaction-capable Agentic RAG frameworks.

Querying models from different providers

1. Installation and import of libraries

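!pip install "aisuite[all]"

from pprint import pprint as pp

# Custom print function that lets us choose the output width
def pprint(data, width=80):
    """Pretty-print data with a specified width."""
    pp(data, width=width)

- Installs the aisuite library with all optional dependencies.

- Imports the pprint (pretty print) function under the alias pp to format output for better readability, and wraps it in a custom pprint function that accepts a custom width.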

2. API key configuration

import os
from getpass import getpass

os.environ['GROQ_API_KEY'] = getpass('Enter your GROQ API key: ')

Prompts the user to enter their Groq API key, which is stored in the GROQ_API_KEY environment variable.

3. Initializing the AI client

import aisuite as ai

client = ai.Client()

Initializes an AI client using the aisuite library to interact with different models.

4. Chat Completions

messages = [
    {"role": "system", "content": "You are a helpful agent, who answers with brevity."},
    {"role": "user", "content": 'Hi'},
]

response = client.chat.completions.create(model="groq:llama-3.2-3b-preview", messages=messages)
print(response.choices[0].message.content)

Output

How can I assist you?

- Defines a chat with two messages:

- A system message that sets the tone or behavior of the AI (concise responses).

- A user message as input.

- Sends the messages to the groq:llama-3.2-3b-preview model and prints the model's response.
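Every example reads the reply through response.choices[0].message.content. In OpenAI-style responses that content can be None (for example, when a model returns a tool call instead of text), so a defensive accessor can help in longer scripts. This is a sketch that assumes AISuite responses follow the same shape; first_message_text and the fake response are hypothetical.

```python
from types import SimpleNamespace


def first_message_text(response):
    """Return the text of the first choice, or "" if there is none."""
    choices = getattr(response, "choices", None) or []
    if not choices:
        return ""
    content = choices[0].message.content
    return content or ""  # treat a None content as an empty string


# Fake response that mimics the shape used in the examples above
fake = SimpleNamespace(choices=[SimpleNamespace(message=SimpleNamespace(content="How can I assist you?"))])
print(first_message_text(fake))
```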

5. Function to Send Queries

def ask(message, sys_message="You are a helpful agent.",
        model="groq:llama-3.2-3b-preview"):
    client = ai.Client()
    # Two-message conversation: system instruction + user query
    messages = [
        {"role": "system", "content": sys_message},
        {"role": "user", "content": message}
    ]
    response = client.chat.completions.create(model=model, messages=messages)
    # Return only the text of the model's reply
    return response.choices[0].message.content

ask("Hi. what is capital of Japan?")

Output

'Hello. The capital of Japan is Tokyo.'

- ask is a reusable function that sends queries to a model.

- It accepts:

- message: The user's query.

- sys_message: An optional system instruction.

- model: The “provider:model” string of the AI model to use.

- It submits the input and returns the AI's response.
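The ask function above builds a new ai.Client() on every call. A variant that creates the client once and reuses it is sketched below. The names ask_shared and get_client are hypothetical; aisuite is imported lazily inside get_client, and the optional client parameter lets you pass a fake client so the function can be exercised without API keys.

```python
from functools import lru_cache
from types import SimpleNamespace


@lru_cache(maxsize=None)
def get_client():
    """Create the AISuite client once and reuse it on later calls."""
    import aisuite as ai  # lazy import: only needed when no client is passed in
    return ai.Client()


def ask_shared(message, sys_message="You are a helpful agent.",
               model="groq:llama-3.2-3b-preview", client=None):
    """Like ask(), but reuses a single client instead of building one per call."""
    if client is None:
        client = get_client()
    messages = [
        {"role": "system", "content": sys_message},
        {"role": "user", "content": message},
    ]
    response = client.chat.completions.create(model=model, messages=messages)
    return response.choices[0].message.content


# Offline check with a fake client that mimics only the response shape
calls = []


def _fake_create(model, messages):
    calls.append(model)
    reply = f"{model} says: {messages[-1]['content']}"
    return SimpleNamespace(choices=[SimpleNamespace(message=SimpleNamespace(content=reply))])


fake_client = SimpleNamespace(chat=SimpleNamespace(completions=SimpleNamespace(create=_fake_create)))
print(ask_shared("Hi. what is capital of Japan?", client=fake_client))
```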

6. Using different providers

os.environ['OPENAI_API_KEY'] = getpass('Enter your OPENAI API key: ')
os.environ['ANTHROPIC_API_KEY'] = getpass('Enter your ANTHROPIC API key: ')

print(ask("Who is your creator?"))
print(ask('Who is your creator?', model="anthropic:claude-3-5-sonnet-20240620"))
print(ask('Who is your creator?', model="openai:gpt-4o"))

Output

I was created by Meta AI, a leading artificial intelligence research organization. My knowledge was developed from a large corpus of text, which I use to generate human-like responses to user queries.

I was created by Anthropic.

I was developed by OpenAI, an organization that focuses on artificial intelligence research and deployment.

- Prompts the user for OpenAI and Anthropic API keys.

- Sends the same query (“Who is your creator?”) to three different models:

- groq:llama-3.2-3b-preview (the default model in ask)

- anthropic:claude-3-5-sonnet-20240620

- openai:gpt-4o

- Prints the response from each model, showing how different systems answer the same query.

7. Multiple model query

models = [
    'llama-3.1-8b-instant',
    'llama-3.2-1b-preview',
    'llama-3.2-3b-preview',
    'llama3-70b-8192',
    'llama3-8b-8192'
]

ret = []  # a list, so responses can be appended
for x in models:
    ret.append(ask('Write a short one sentence explanation of the origins of AI?', model=f'groq:{x}'))

- Defines a list of Groq model identifiers (models).

- Loops through each model and sends it the prompt:

- “Write a short one sentence explanation of the origins of AI?”

- Stores the responses in the ret list.
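In the loop above, one failing model (for example, an unavailable model ID or a rate-limit error) would stop the whole run. A sketch that records the error and moves on is shown below; query_all and the fake ask function are hypothetical, and any callable with the same signature as ask can be passed in.

```python
def query_all(ask_fn, prompt, model_ids):
    """Query each model in turn, recording an error string instead of stopping."""
    results = {}
    for model_id in model_ids:
        try:
            results[model_id] = ask_fn(prompt, model=model_id)
        except Exception as exc:  # keep going if one provider/model fails
            results[model_id] = f"ERROR: {type(exc).__name__}: {exc}"
    return results


# Offline demo with a fake ask function
def _fake_ask(prompt, model):
    if model.endswith("bad"):
        raise RuntimeError("model not found")
    return f"{model} answered"


demo = query_all(_fake_ask, "Origins of AI?", ["groq:good", "groq:bad"])
print(demo)

# With the article's ask() and models list:
# results = query_all(ask, 'Write a short one sentence explanation of the origins of AI?',
#                     [f'groq:{x}' for x in models])
```

Returning a dictionary keyed by model ID also avoids pairing models and responses by index later.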

8. Viewing model responses

for idx, x in enumerate(ret):
    pprint(models[idx] + ': \n ' + x + ' ')

- Iterates through the stored responses.

- Formats and prints each model's name along with its response, making it easy to compare results.

Output
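As an alternative to pprint, the standard library's textwrap can lay the answers out as wrapped, indented blocks. This is a sketch; format_comparison is a hypothetical helper that takes a dictionary mapping model names to answers.

```python
import textwrap


def format_comparison(results, width=80):
    """Render {model: answer} as wrapped, indented blocks for easy side-by-side reading."""
    blocks = []
    for model_id, answer in results.items():
        body = textwrap.fill(answer.strip(), width=width,
                             initial_indent="  ", subsequent_indent="  ")
        blocks.append(f"{model_id}:\n{body}")
    return "\n\n".join(blocks)


print(format_comparison({"llama3-8b-8192": "A short sample answer."}, width=40))

# With the article's variables:
# print(format_comparison(dict(zip(models, ret))))
```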

('llama-3.1-8b-instant: \n'
 ' The origins of artificial intelligence (AI) date back to the 1956 Dartmouth '
 'Summer Research Project on artificial intelligence, where a group of '
 'computer scientists, led by John McCarthy, Marvin Minsky, Nathaniel '
 'Rochester, and Claude Shannon, coined the term and laid the foundation for '
 'the development of AI as a distinct field of study. ')

('llama-3.2-1b-preview: \n'
 ' The origins of artificial intelligence (AI) date back to the mid-20th '
 'century, when the first computer programs, which mimicked human-like '
 'intelligence through algorithms and rule-based systems, were developed by '
 'renowned mathematicians and computer scientists, including Alan Turing, '
 'Marvin Minsky, and John McCarthy in the 1950s. ')

('llama-3.2-3b-preview: \n'
 ' The origins of artificial intelligence (AI) date back to the 1950s, with '
 'the Dartmouth Summer Research Project on artificial intelligence, led by '
 'computer scientists John McCarthy, Marvin Minsky, and Nathaniel Rochester, '
 'marking the birth of AI as a formal field of research. ')

('llama3-70b-8192: \n'
 ' The origins of artificial intelligence (AI) can be traced back to the 1950s '
 'when computer scientist Alan Turing proposed the Turing Test, a method for '
 'determining whether a machine could exhibit intelligent behavior equivalent '
 'to, or indistinguishable from, that of a human. ')

('llama3-8b-8192: \n'
 ' The origins of artificial intelligence (AI) can be traced back to the '
 '1950s, when computer scientists DARPA funded the development of the first AI '
 'programs, such as the Logical Theorist, which aimed to simulate human '
 'problem-solving abilities and learn from experience. ')

The models give varied answers to the question about the origins of AI, reflecting differences in their training and reasoning capabilities. For example:

- Some models refer to the Dartmouth Summer Research Project on AI.

- Others mention Alan Turing or early DARPA-funded AI programs.

Key Features and Conclusions

- Modularity: The script uses a reusable function (ask) to make queries efficient and customizable.

- Multi-model interaction: It shows how to interact with various AI providers, including Groq, OpenAI, and Anthropic.

- Comparative analysis: It makes it easy to compare responses across models to gain insight into their strengths and biases.

- Runtime key input: API keys are entered at runtime with getpass() instead of being hard-coded in the script.

This script is a good starting point for exploring the capabilities of different AI models and understanding their individual behaviors.

Conclusion

AISuite is a valuable tool for anyone working with large language models. It lets users draw on the best of multiple AI providers while simplifying development and fostering innovation. Its open-source nature and careful design underline its potential as a cornerstone of modern AI application development.

It speeds up development and improves flexibility by enabling seamless switching between models from OpenAI, Anthropic, Meta, and other providers with minimal integration effort. Suitable for both simple and complex applications, AISuite supports modular workflows, straightforward API key management, and real-time multi-model comparisons. Its ease of use, scalability, and unified interface across providers make it a useful resource for developers, researchers, and educators working with diverse LLMs in an evolving AI landscape.


Frequently asked questions

Q1. What is AISuite?

Answer. AISuite is an open-source Python package created by Andrew Ng to simplify working with multiple large language models (LLMs) from various providers. It provides a unified interface for switching between models, simplifying integration and accelerating development.

Q2. Can AISuite query multiple models from different providers?

Answer. Yes. Within a single workflow you can send the same query to models from different providers and compare their responses.

Q3. What is the key feature of AISuite?

Answer. The key feature of AISuite is its modularity and its ability to integrate multiple LLMs into a single workflow. It also simplifies API key management and allows easy switching between models, which makes quick comparisons and experimentation straightforward.

Q4. How do I install AISuite?

Answer. To install AISuite and the necessary libraries, run:

!pip install "aisuite[all]"
!pip install openai
