A fundamental aspect of AI research involves tuning large language models (LLMs) to align their outputs with human preferences. This tuning helps ensure that AI systems generate useful, relevant responses that match user expectations. The current paradigm emphasizes learning from human preference data to refine these models, which sidesteps the difficulty of manually specifying reward functions for each task. The two predominant techniques in this area are online reinforcement learning (RL) and offline contrastive methods, each with its own advantages and challenges.

A central challenge in fine-tuning LLMs to reflect human preferences is the limited coverage of static datasets. These datasets may not adequately represent the diverse and dynamic range of human preferences in real-world applications. The coverage problem becomes particularly pronounced when models are trained exclusively on previously collected data, which can lead to suboptimal performance. This underscores the need for methods that effectively leverage both static datasets and real-time data to improve model alignment with human preferences.

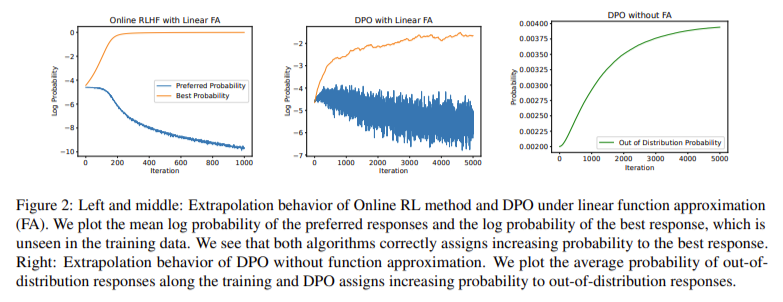

Existing techniques for preference fine-tuning in LLMs include online RL methods, such as Proximal Policy Optimization (PPO), and offline contrastive methods, such as Direct Preference Optimization (DPO). Online RL methods involve a two-stage procedure: a reward model is first trained on a fixed offline preference dataset, and the policy is then trained with RL on responses sampled from the policy itself (on-policy data). This approach benefits from fresh samples during training, but it is computationally intensive. In contrast, offline contrastive methods optimize the policy using only previously collected data, which avoids the need for sampling during training but can suffer from overfitting and limited generalization.
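To make the offline contrastive side concrete, here is a minimal sketch of the standard DPO loss for a single preference pair. It assumes the summed log-probabilities of the chosen and rejected responses under the policy and the reference model have already been computed; the function names and the `beta` default are illustrative choices, not taken from the article.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(
    logp_chosen: float,
    logp_rejected: float,
    ref_logp_chosen: float,
    ref_logp_rejected: float,
    beta: float = 0.1,  # illustrative value, not from the article
) -> float:
    """DPO loss for one (chosen, rejected) pair of sequence log-probabilities."""
    # Log-ratio of policy to reference for each response.
    chosen_ratio = logp_chosen - ref_logp_chosen
    rejected_ratio = logp_rejected - ref_logp_rejected
    # The loss shrinks as the policy prefers the chosen response
    # more strongly than the reference model does.
    margin = beta * (chosen_ratio - rejected_ratio)
    return -log_sigmoid(margin)


# When the policy equals the reference, the margin is 0 and the loss is log(2).
baseline = dpo_loss(-5.0, -7.0, -5.0, -7.0)
# Raising the chosen log-prob and lowering the rejected one reduces the loss.
improved = dpo_loss(-4.0, -8.0, -5.0, -7.0)
```

Note that nothing in this loss requires sampling from the policy: every quantity comes from the fixed preference dataset, which is why the method is cheap and also why it depends so heavily on dataset coverage.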

Researchers from Carnegie Mellon University, Aurora Innovation, and Cornell University presented a new method called Hybrid Preference Optimization (HyPO). This hybrid approach combines the strengths of online and offline techniques, aiming to improve model performance while maintaining computational efficiency. HyPO uses offline data for preference optimization and unlabeled online data for Kullback-Leibler (KL) regularization, which keeps the model close to a reference policy and helps it generalize beyond the training data.

HyPO applies offline data to the DPO objective and uses online samples to control the reverse KL divergence from the reference policy. The algorithm iteratively updates the model parameters by optimizing the DPO loss together with a KL regularization term estimated from the online samples. This hybrid approach addresses the shortcomings of purely offline methods, such as overfitting and insufficient dataset coverage, by borrowing the key strength of online RL methods while avoiding much of their computational cost.
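The combination described above can be sketched as a single scalar objective: a DPO loss averaged over offline preference pairs, plus a weighted Monte Carlo estimate of the KL divergence from the policy to the reference (often called the reverse KL) computed on responses sampled from the policy. This is a simplified illustration under stated assumptions, not the authors' implementation: the names `hypo_objective` and `lam`, the default weights, and the plain averaging are all hypothetical, and the sketch only evaluates the objective. A real training loop would also need a gradient estimator for the sampled KL term.

```python
import math
from typing import List, Tuple

# (policy logp chosen, policy logp rejected, ref logp chosen, ref logp rejected)
Pair = Tuple[float, float, float, float]


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(pair: Pair, beta: float) -> float:
    """Standard DPO loss for one offline preference pair."""
    logp_c, logp_r, ref_c, ref_r = pair
    return -log_sigmoid(beta * ((logp_c - ref_c) - (logp_r - ref_r)))


def reverse_kl_estimate(policy_logps: List[float], ref_logps: List[float]) -> float:
    """Monte Carlo estimate of KL(policy || reference).

    Assumes each entry is the log-probability of a response that was
    sampled from the current policy (the unlabeled online data).
    """
    diffs = [p - r for p, r in zip(policy_logps, ref_logps)]
    return sum(diffs) / len(diffs)


def hypo_objective(
    offline_pairs: List[Pair],
    online_policy_logps: List[float],
    online_ref_logps: List[float],
    beta: float = 0.1,  # hypothetical DPO temperature
    lam: float = 0.1,   # hypothetical KL weight
) -> float:
    """Offline DPO term plus online KL regularizer (value only, no gradients)."""
    dpo_term = sum(dpo_loss(p, beta) for p in offline_pairs) / len(offline_pairs)
    kl_term = reverse_kl_estimate(online_policy_logps, online_ref_logps)
    return dpo_term + lam * kl_term


# Toy numbers: the policy matches the reference on the offline pair,
# but assigns higher probability than the reference to its own samples.
objective = hypo_objective(
    offline_pairs=[(-5.0, -7.0, -5.0, -7.0)],
    online_policy_logps=[-1.0, -2.0],
    online_ref_logps=[-1.5, -2.5],
)
```

The online samples need no preference labels here: the regularizer only compares how likely the policy and the reference consider each sampled response, which is what makes the online data cheap to collect.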

HyPO was evaluated on several benchmarks, including the TL;DR summarization task and general chat benchmarks such as AlpacaEval 2.0 and MT-Bench. On TL;DR, HyPO achieved a 46.44% win rate with the Pythia 1.4B model, compared to 42.17% for DPO. With the Pythia 2.8B model, HyPO reached a 50.50% win rate, ahead of DPO’s 44.39%. HyPO also scored higher under the reward model, at 0.37 and 2.51 for the Pythia 1.4B and 2.8B models, respectively, compared to 0.16 and 2.43 for DPO, while keeping its reverse KL divergence from the reference policy lower.
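For a sense of scale, the gaps in the TL;DR figures quoted above can be expressed in percentage points. The short sketch below only restates the reported numbers; the dictionary layout and function name are illustrative.

```python
# Reported TL;DR figures in percent: (HyPO, DPO) per model size.
tldr_results = {
    "Pythia 1.4B": (46.44, 42.17),
    "Pythia 2.8B": (50.50, 44.39),
}


def margin_points(hypo: float, dpo: float) -> float:
    """Absolute gap in percentage points, rounded to two decimals."""
    return round(hypo - dpo, 2)


margins = {model: margin_points(h, d) for model, (h, d) in tldr_results.items()}
# margins: about 4.27 points for Pythia 1.4B and 6.11 points for Pythia 2.8B
```

On these figures the gap is larger for the bigger model.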

In general chat evaluations, HyPO also showed improvements. On MT-Bench, HyPO-optimized models scored 8.43 and 8.09 on the first and second turns, respectively, compared to 8.31 and 7.89 for DPO-optimized models. On AlpacaEval 2.0, which is a single-turn benchmark, HyPO achieved length-controlled and raw win rates of 30.7% and 32.2%, compared to DPO’s 28.4% and 30.9%.

Empirical results highlight HyPO’s ability to mitigate overfitting issues commonly observed in offline contrastive methods. For example, when trained on the TL;DR dataset, HyPO maintained a significantly lower mean validation KL score than DPO, indicating better alignment with the baseline policy and reduced overfitting. This ability to leverage online data for regularization helps HyPO achieve more robust performance across multiple tasks.

In conclusion, Hybrid Preference Optimization (HyPO) combines online and offline data to address the limitations of existing methods and improve the alignment of large language models with human preferences. The gains shown in the empirical evaluations underscore HyPO’s potential to deliver more accurate and reliable AI systems.

Check out the Paper. All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and a dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, she brings a fresh perspective to the intersection of AI and real-life solutions.
