Introduction

Recent advances in software and hardware have made it possible to run large language models (LLMs) on personal computers. LM Studio is one tool that makes this easier. In this article, we’ll take an in-depth look at how to run an LLM locally using LM Studio. We’ll go through the essential steps, explore potential challenges, and highlight the benefits of having an LLM on your own machine. Whether you’re a tech enthusiast or just curious about the latest AI, this guide offers practical tips. Let’s get started!

Overview

- Understand the basic requirements for running an LLM locally.

- Set up LM Studio on your computer.

- Run and interact with an LLM using LM Studio.

- Recognize the benefits and limitations of local LLM implementation.

What is LM Studio?

LM Studio streamlines the task of running and managing LLMs on personal computers. Its features are designed to be accessible to users at any level. With LM Studio, downloading, configuring, and deploying different LLMs is straightforward, so you can use their capabilities without relying on cloud services.

Key Features of LM Studio

These are the key features of LM Studio:

- User-friendly interface: Manage models, datasets, and configurations from a single interface.

- Model Management: Easily download and switch between different LLMs.

- Custom Settings: Adjust settings to optimize performance based on your hardware capabilities.

- Interactive console: Interact with the LLM in real time through an integrated console.

- Offline capabilities: Run models without an Internet connection, ensuring privacy and control over your data.

LM Studio Setup

Here's how you can set up LM Studio:

System Requirements

Before installing LM Studio, make sure your computer meets the following minimum requirements:

- CPU Requirements: A processor with 4 or more cores.

- Operating System Compatibility: Windows 10, Windows 11, macOS 10.15 or newer, or a modern Linux distribution.

- RAM: At least 16 GB.

- Disk space: An SSD with at least 50 GB available.

- Graphics card: An NVIDIA GPU with CUDA support (optional, for better performance).
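The checklist above can be turned into a quick pre-install check. The following is a minimal sketch, not an official LM Studio tool: the function names are hypothetical, the thresholds are simply the numbers from the list, and RAM is passed in by hand because the Python standard library has no portable way to read it.

```python
import os
import shutil

# Thresholds copied from the requirements list above.
MIN_CPU_CORES = 4
MIN_RAM_GB = 16
MIN_FREE_DISK_GB = 50


def check_requirements(cpu_cores, ram_gb, free_disk_gb):
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    if cpu_cores < MIN_CPU_CORES:
        problems.append(f"CPU: {cpu_cores} cores found, at least {MIN_CPU_CORES} needed")
    if ram_gb < MIN_RAM_GB:
        problems.append(f"RAM: {ram_gb} GB found, at least {MIN_RAM_GB} GB needed")
    if free_disk_gb < MIN_FREE_DISK_GB:
        problems.append(
            f"Disk: {free_disk_gb:.0f} GB free, at least {MIN_FREE_DISK_GB} GB needed"
        )
    return problems


def detect_cpu_and_disk(path="."):
    """Read the core count and free disk space using only the standard library."""
    # os.cpu_count() reports logical cores, which may exceed physical cores.
    cores = os.cpu_count() or 1
    free_gb = shutil.disk_usage(path).free / 1024**3
    return cores, free_gb
```

To use it, call `detect_cpu_and_disk()`, look up your installed RAM in your operating system's settings, and pass all three values to `check_requirements`. The sketch does not detect a GPU, since that component is optional.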

Installation steps

- Download LM Studio: Visit the official LM Studio website and download the installer for your operating system.

- Install LM Studio: Follow the on-screen instructions to install the software on your computer.

- Launch LM Studio: After installation, open it and follow the initial setup wizard to configure basic settings.

Download and configure a model

Here's how you can download and configure a model:

- Choose a model: Go to the “Models” section in the LM Studio interface and browse the available language models. Select one that meets your requirements and click “Download”.

- Adjust model settings: After downloading, modify model settings such as batch size, memory usage, and computational power. These settings must match your hardware specifications.

- Initialize the model: Once the settings are configured, start the model by clicking “Load Model”. This may take a few minutes depending on the model size and your hardware.
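When matching a model to your hardware, a rough memory estimate helps before you download anything. The sketch below uses only the arithmetic that weights take about (number of parameters × bits per weight ÷ 8) bytes. The function names and the `overhead_factor` of 1.2 are illustrative assumptions, not values from LM Studio, and real memory use also depends on context length and runtime.

```python
def estimate_weights_gb(params_billions, bits_per_weight):
    """Approximate size of the model weights alone, in gigabytes (10**9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits_in_memory(params_billions, bits_per_weight, available_gb, overhead_factor=1.2):
    """Check whether weights plus an assumed overhead fit in a memory budget.

    overhead_factor is a hypothetical allowance for the context cache and
    runtime buffers; it is a guess, not a measured value.
    """
    needed_gb = estimate_weights_gb(params_billions, bits_per_weight) * overhead_factor
    return needed_gb <= available_gb
```

For example, a model with 2 billion parameters stored at 16 bits per weight needs about 4 GB for its weights, while the same model quantized to 4 bits needs about 1 GB. That difference is why smaller or quantized models are the practical choice on a machine with 16 GB of RAM.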

Execution and interaction with the LLM

Using the interactive console

LM Studio provides an interactive console where you can enter text and receive responses from the loaded LLM. This console is ideal for testing the model's capabilities and experimenting with different prompts.

- Open the console: In the LM Studio interface, navigate to the “Console” section.

- Input text: Type your message or question in the input field and press “Enter”.

- Receive response: The LLM will process your input and generate a response, which will be displayed in the console.

Integration with applications

LM Studio also supports API integration, allowing you to incorporate an LLM into your own applications. This is particularly useful for developing chatbots, content generation tools, or any other application that benefits from natural language understanding and generation.
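As an illustration of what such an integration might look like, here is a minimal sketch that assumes LM Studio's local server is running and exposes an OpenAI-style chat completions endpoint. The base URL (`http://localhost:1234/v1`), the `local-model` name, and both function names are assumptions for this example. Check the server address and model identifier shown in your own LM Studio installation before relying on them.

```python
import json
import urllib.request

# Assumed address of the local server; confirm it in your LM Studio install.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model",
                       system="You are a helpful assistant", temperature=0.7):
    """Build an OpenAI-style chat completion payload as a plain dict."""
    return {
        "model": model,  # placeholder identifier, not a real model name
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,
    }


def send_chat_request(payload, base_url=BASE_URL, timeout=60):
    """POST the payload to the local server and return the reply text."""
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # Assumes the OpenAI-style response shape: choices -> message -> content.
    return body["choices"][0]["message"]["content"]
```

With a model loaded and the server started, `send_chat_request(build_chat_request("Summarize this paragraph."))` would return the model's reply as a string. Keeping payload construction separate from the network call makes the request easy to inspect and test without a running server.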

LM Studio Demo with Gemma 2B from Google

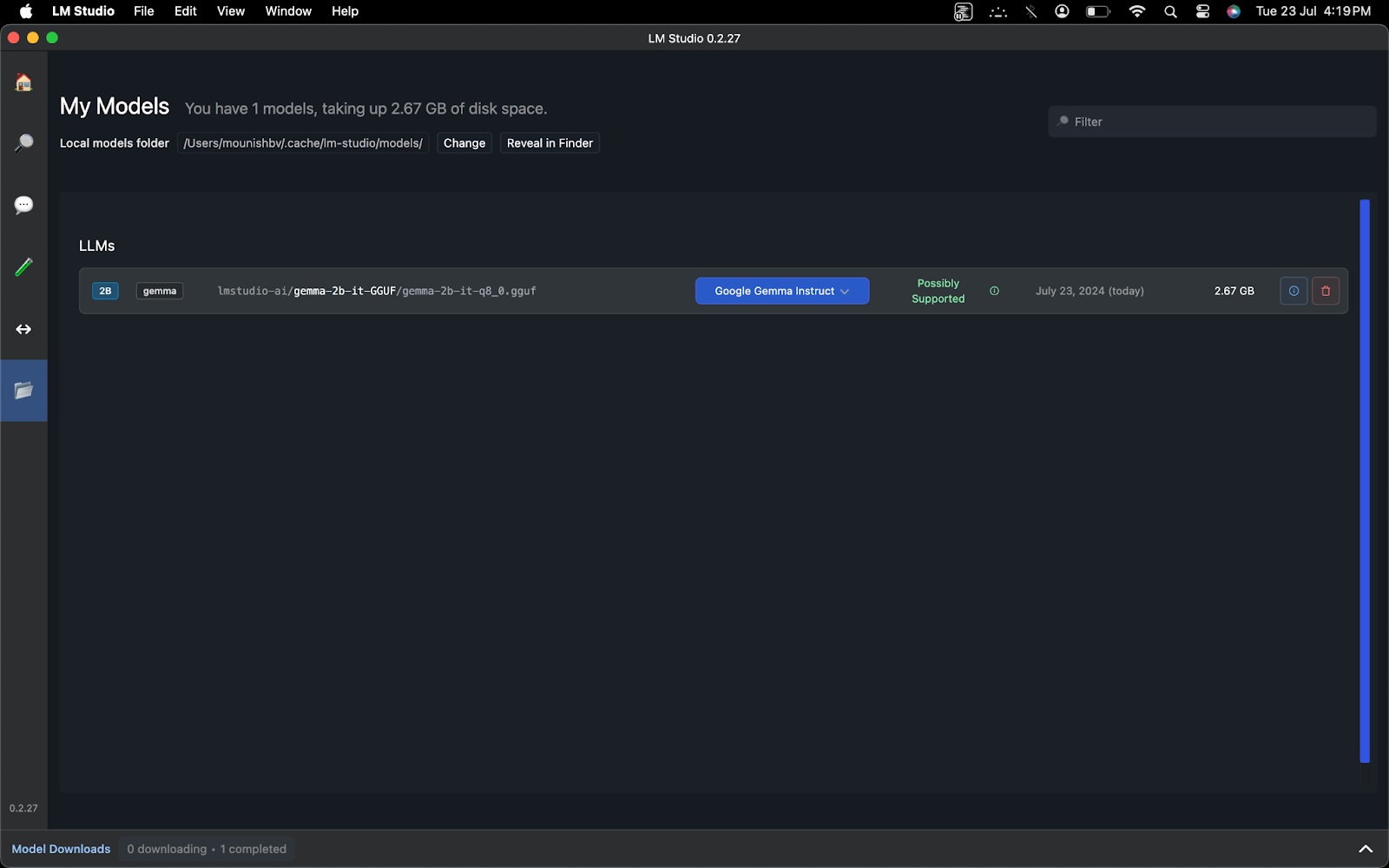

I downloaded Google's Gemma 2B Instruct, a small and fast LLM, from the home page. You can download any suggested model from the home page or search for a particular model. You can view downloaded models in “My Models”.

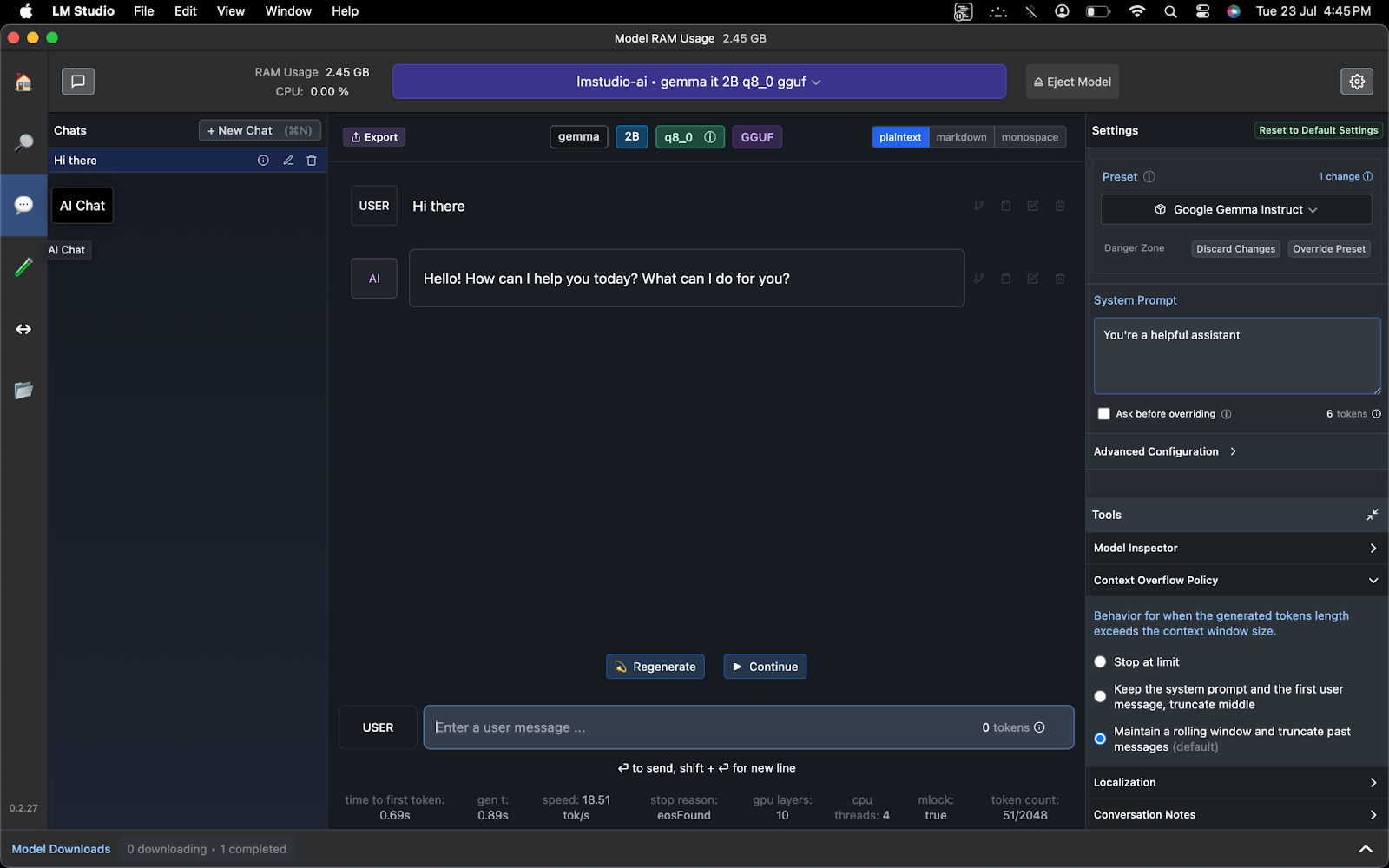

Go to the AI Chat option on the left and choose your model at the top. I'm using the Gemma 2B Instruct model here. Notice that you can see the RAM usage at the top.

I set the system message to “You are a helpful assistant” on the right. This is optional; you can leave it as default or configure it as per your requirements.

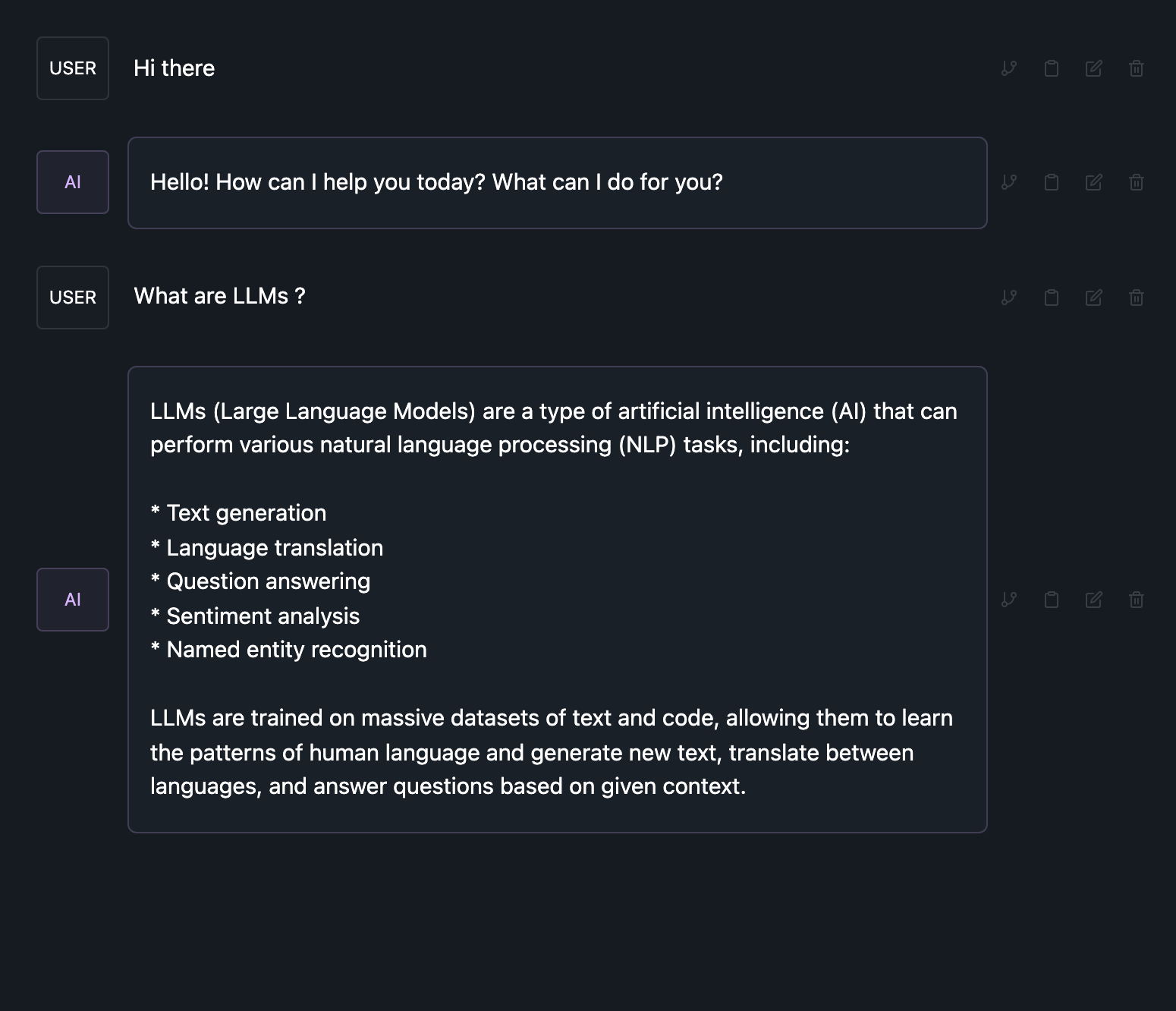

We can see that the LLM responds to my prompts and answers my questions. You can now explore and experiment with various LLMs locally.

Benefits of running an LLM locally

These are the benefits:

- Data Privacy: Running an LLM locally ensures that your data remains private and secure as it does not need to be transmitted to external servers.

- Cost savings: Use your existing hardware to avoid the recurring costs associated with cloud-based LLM services.

- Personalization: Customize the model and its configuration to best suit your specific requirements and hardware capabilities.

- Offline access: Use the model without an Internet connection, ensuring accessibility even in remote or restricted environments.

Limitations and challenges

Below are the limitations and challenges of running an LLM locally:

- Hardware requirements: Running an LLM locally is computationally intensive, particularly for larger models.

- Configuration complexity: Initial installation and configuration may be complex for users with limited technical knowledge.

- Performance: On-premises deployment may not match the performance and scalability of cloud-based solutions, especially for real-time applications.

Conclusion

Using LM Studio to run an LLM on a personal computer offers several advantages, including increased data security, lower costs, and greater customization capabilities. While there are hurdles related to hardware demands and the setup process, the benefits make it an attractive option for those looking to work with large language models.

Frequently asked questions

Question: What is LM Studio?

Answer: LM Studio makes it easy to deploy and manage large language models locally, offering an easy-to-use interface and robust features.

Question: Can I run models without an Internet connection using LM Studio?

Answer: Yes, LM Studio allows you to run models offline, ensuring data privacy and accessibility in remote environments.

Question: What are the benefits of running an LLM locally?

Answer: Data privacy, cost savings, personalization, and offline access.

Question: What challenges might I face when running an LLM locally?

Answer: Challenges include high hardware requirements, complex configuration processes, and potential performance limitations compared to cloud-based solutions.