Imagine trying to bake a cake without a recipe. You may remember bits and pieces, but chances are you'll miss something crucial. This is similar to how traditional large language models (LLMs) work: they are brilliant but sometimes lack specific, up-to-date information.

The Naive RAG paradigm represents the earliest methodology, which gained prominence shortly after ChatGPT was widely adopted. This approach follows a traditional process of indexing, retrieval, and generation, often called a “retrieve-read” framework.

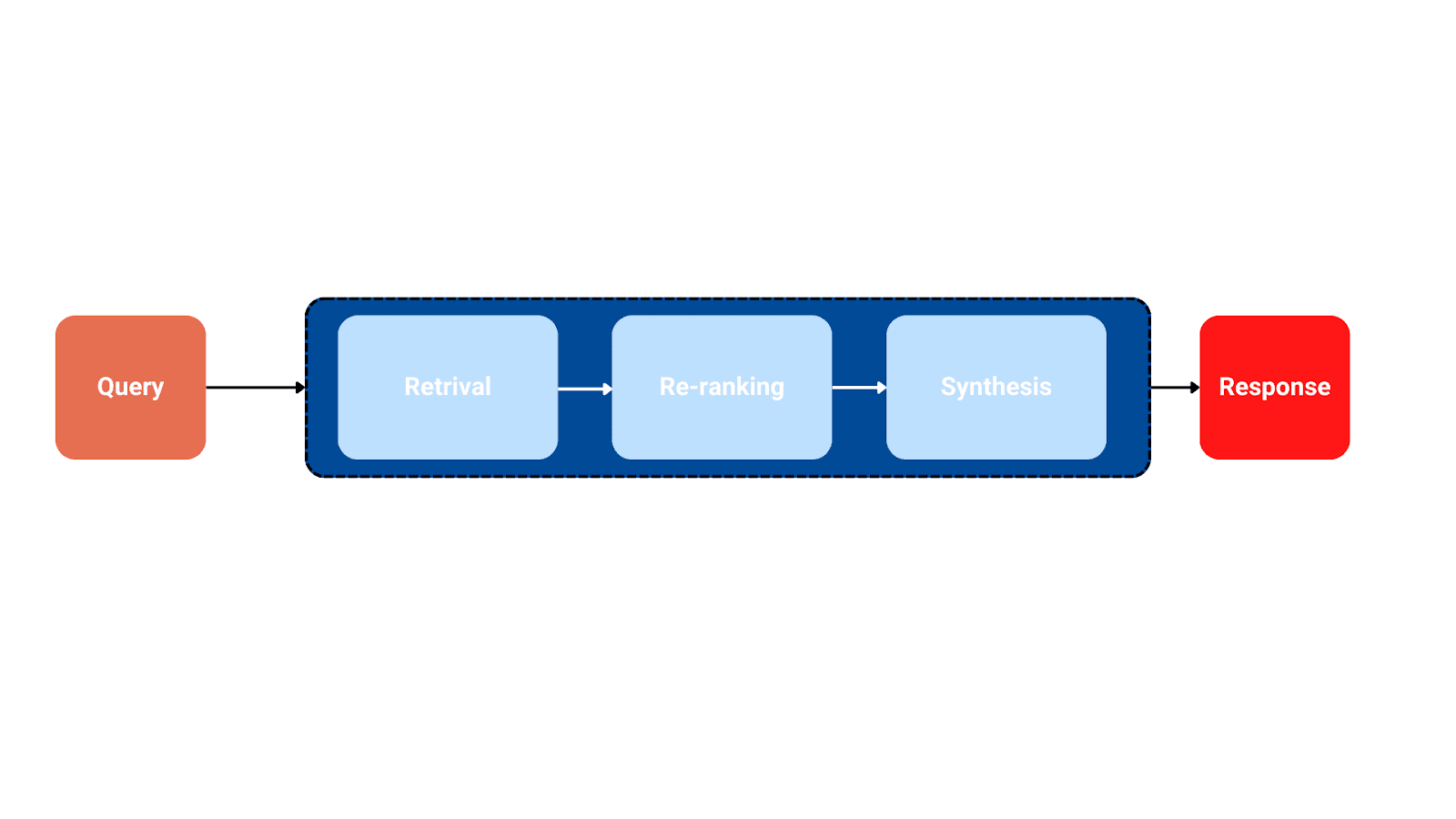

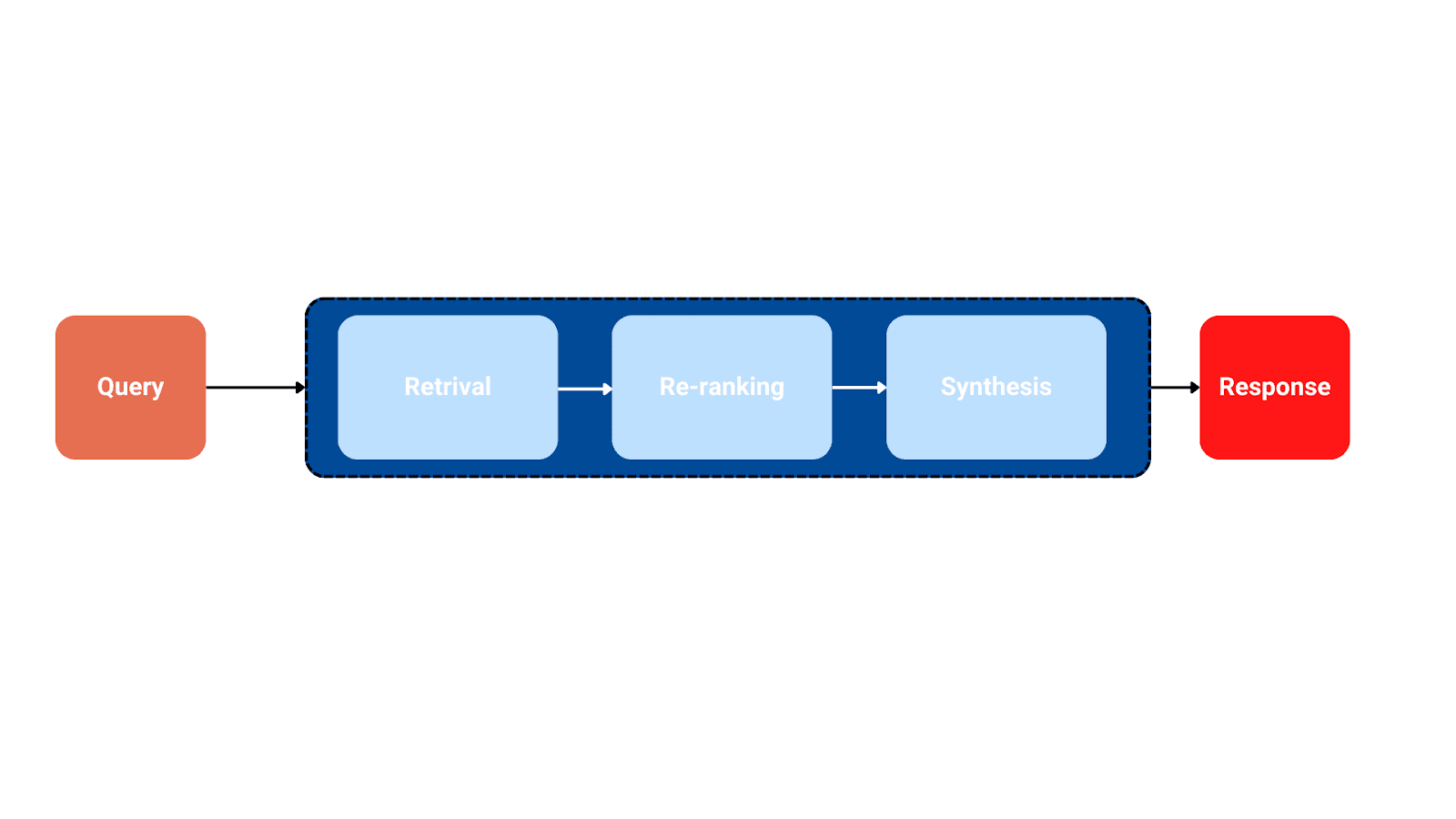

The following image illustrates a Naive RAG pipeline:

This image shows the Naive RAG pipeline, from query through retrieval to response. Image by author.
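To make the indexing, retrieval, and generation steps concrete, here is a minimal, dependency-free Python sketch of a naive RAG pipeline. It is an illustration under simplifying assumptions, not the article's implementation: a bag-of-words term-frequency vector stands in for a real embedding model, a plain list stands in for a vector store, and `generate` is a hypothetical callable you would replace with an actual LLM call. All function names are illustrative.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a term-frequency vector."""
    return Counter(tokenize(text))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Step 1, indexing: precompute a vector for every chunk.
def build_index(chunks: list[str]) -> list[tuple[str, Counter]]:
    return [(chunk, embed(chunk)) for chunk in chunks]

# Step 2, retrieval: rank chunks by similarity to the query and keep the top k.
def retrieve(index: list[tuple[str, Counter]], query: str, k: int = 2) -> list[str]:
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

# Step 3, generation: put the retrieved context into a prompt for the model.
def build_prompt(query: str, context: list[str]) -> str:
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"

def naive_rag(chunks: list[str], query: str, generate, k: int = 2) -> str:
    """One fixed pass: index, retrieve once, generate once."""
    index = build_index(chunks)
    context = retrieve(index, query, k)
    return generate(build_prompt(query, context))

docs = [
    "Paris is the capital of France.",
    "Bananas are rich in potassium.",
    "The Eiffel Tower is in Paris.",
]
# An identity "model" just echoes the prompt, so we can inspect what the LLM would see.
print(naive_rag(docs, "What is the capital of France?", generate=lambda p: p, k=1))
```

Note that the pipeline runs exactly once per query: whatever `retrieve` returns is passed to the model unchanged, with no check on whether it actually answers the question. That single fixed pass is the limitation agentic approaches aim to address.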

Implementing Agentic RAG using LangChain takes this a step further. Unlike the naive approach to RAG, Agentic RAG introduces the concept of an “agent” that can actively interact with the retrieval system to improve the quality of the generated result.
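One way to picture an agent that actively interacts with the retrieval system is a loop in which the agent retrieves, judges whether the context is sufficient, and reformulates the query if it is not. The sketch below is plain Python, not LangChain code: `is_sufficient` and `rewrite_query` are hypothetical hooks that an LLM would normally implement, and the keyword retriever and synonym rule in the demo are toy stand-ins.

```python
from typing import Callable

# Hypothetical hook types; in a real system an LLM would implement the last two.
Retriever = Callable[[str], list[str]]
Judge = Callable[[str, list[str]], bool]    # (question, context) -> good enough?
Rewriter = Callable[[str, list[str]], str]  # (last query, context) -> new query

def agentic_retrieve(question: str, retrieve: Retriever, is_sufficient: Judge,
                     rewrite_query: Rewriter, max_steps: int = 3) -> tuple[list[str], list[str]]:
    """Retrieve, judge, and reformulate until the context looks sufficient
    or the step budget runs out. Returns (context, queries_tried)."""
    queries_tried: list[str] = []
    context: list[str] = []
    query = question
    for _ in range(max_steps):
        queries_tried.append(query)
        context = retrieve(query)
        if is_sufficient(question, context):
            break
        # Not good enough: the agent decides what to ask the retriever next.
        query = rewrite_query(query, context)
    return context, queries_tried

# Toy stand-ins so the sketch runs without an LLM or a vector store.
DOCS = {
    "refund policy": "Refunds are issued within 14 days of purchase.",
    "shipping times": "Orders ship within 2 business days.",
}

def keyword_retrieve(query: str) -> list[str]:
    return [text for key, text in DOCS.items() if key in query.lower()]

def has_context(question: str, context: list[str]) -> bool:
    return len(context) > 0

def add_synonyms(query: str, context: list[str]) -> str:
    # Hypothetical rewrite rule standing in for an LLM-generated reformulation.
    return query.lower().replace("money back", "refund policy")

context, tried = agentic_retrieve("How do I get my money back?",
                                  keyword_retrieve, has_context, add_synonyms)
print(tried)
print(context)
```

The first query finds nothing, so the agent rewrites it and the second attempt retrieves the refund document. The `max_steps` budget matters: without it, an agent that never finds good context would loop indefinitely.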

To start, let's first define what Agentic RAG is.

What is Agentic RAG?

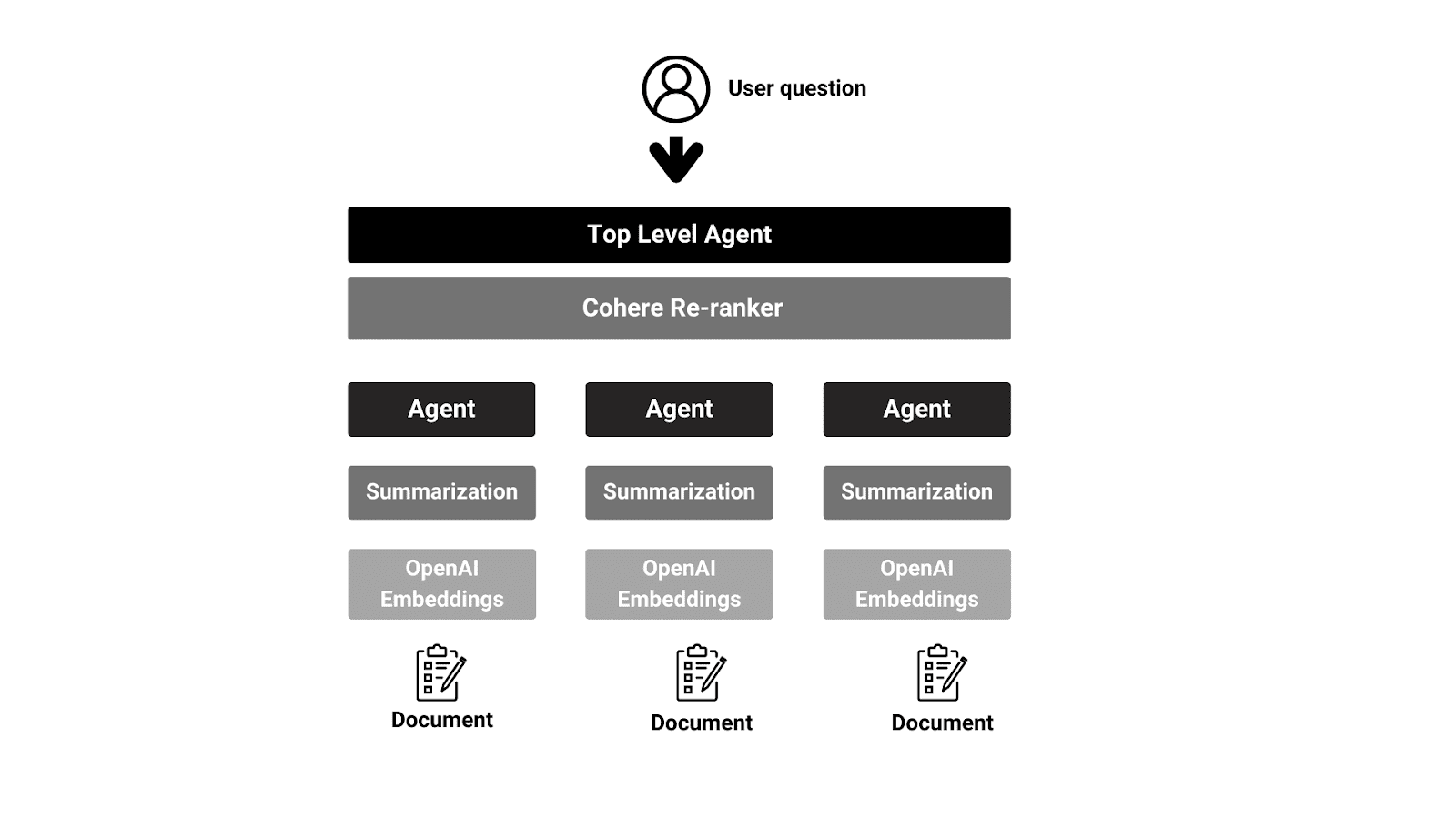

Agentic RAG (Agent-Based Retrieval-Augmented Generation) is an innovative approach to answering questions across multiple documents. Unlike traditional methods that rely solely on large language models, Agentic RAG uses intelligent agents that can plan, reason, and learn over time.

These agents are responsible for comparing documents, summarizing specific documents, and evaluating summaries. This provides a more flexible and dynamic framework for answering questions, as agents collaborate to perform complex tasks.

The key components of Agentic RAG are:

- Document agents: Responsible for answering questions and summarizing content within their designated documents.

- Meta-agent: The high-level agent that supervises the document agents and coordinates their efforts.

This hierarchical structure allows Agentic RAG to leverage the strengths of both the individual document agents and the meta-agent, improving performance on tasks that require strategic planning and nuanced decision-making.
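The document-agent and meta-agent hierarchy can be sketched as two small classes. This is a hypothetical illustration, not the LlamaIndex or LangChain API: each `DocumentAgent` owns one document and uses simple heuristics where a real system would call an LLM over that document's index, while the `MetaAgent` routes a question by checking whether a document's name appears in it.

```python
import re
from dataclasses import dataclass

def sentences(text: str) -> list[str]:
    """Split text into sentences on terminal punctuation."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

@dataclass
class DocumentAgent:
    """Hypothetical agent responsible for exactly one document."""
    name: str
    text: str

    def summarize(self) -> str:
        # Stand-in for an LLM summary: the document's first sentence.
        return sentences(self.text)[0]

    def answer(self, question: str) -> str:
        # Stand-in for RAG over this document: the sentence sharing the most words with the question.
        q = words(question)
        return max(sentences(self.text), key=lambda s: len(q & words(s)))

class MetaAgent:
    """Hypothetical top-level agent that delegates work to document agents."""

    def __init__(self, agents: list[DocumentAgent]):
        self.agents = {a.name.lower(): a for a in agents}

    def route(self, question: str) -> list[DocumentAgent]:
        # Pick the agents whose document is named in the question; fall back to all of them.
        q = question.lower()
        chosen = [agent for name, agent in self.agents.items() if name in q]
        return chosen or list(self.agents.values())

    def handle(self, question: str) -> dict[str, str]:
        # Summarization requests go to summarize(); everything else to answer().
        wants_summary = "summar" in question.lower()
        return {
            agent.name: agent.summarize() if wants_summary else agent.answer(question)
            for agent in self.route(question)
        }

meta = MetaAgent([
    DocumentAgent("Python", "Python was created by Guido van Rossum. It emphasizes readability."),
    DocumentAgent("Rust", "Rust was started at Mozilla. It focuses on memory safety."),
])
print(meta.handle("Compare who created Python and Rust"))  # both agents consulted
print(meta.handle("Summarize the Rust document"))          # only the Rust agent
```

Substring routing is deliberately crude (a document named "Rust" would also match "trust"); a production meta-agent would more likely be an LLM deciding which document agents to call and how to combine their answers.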

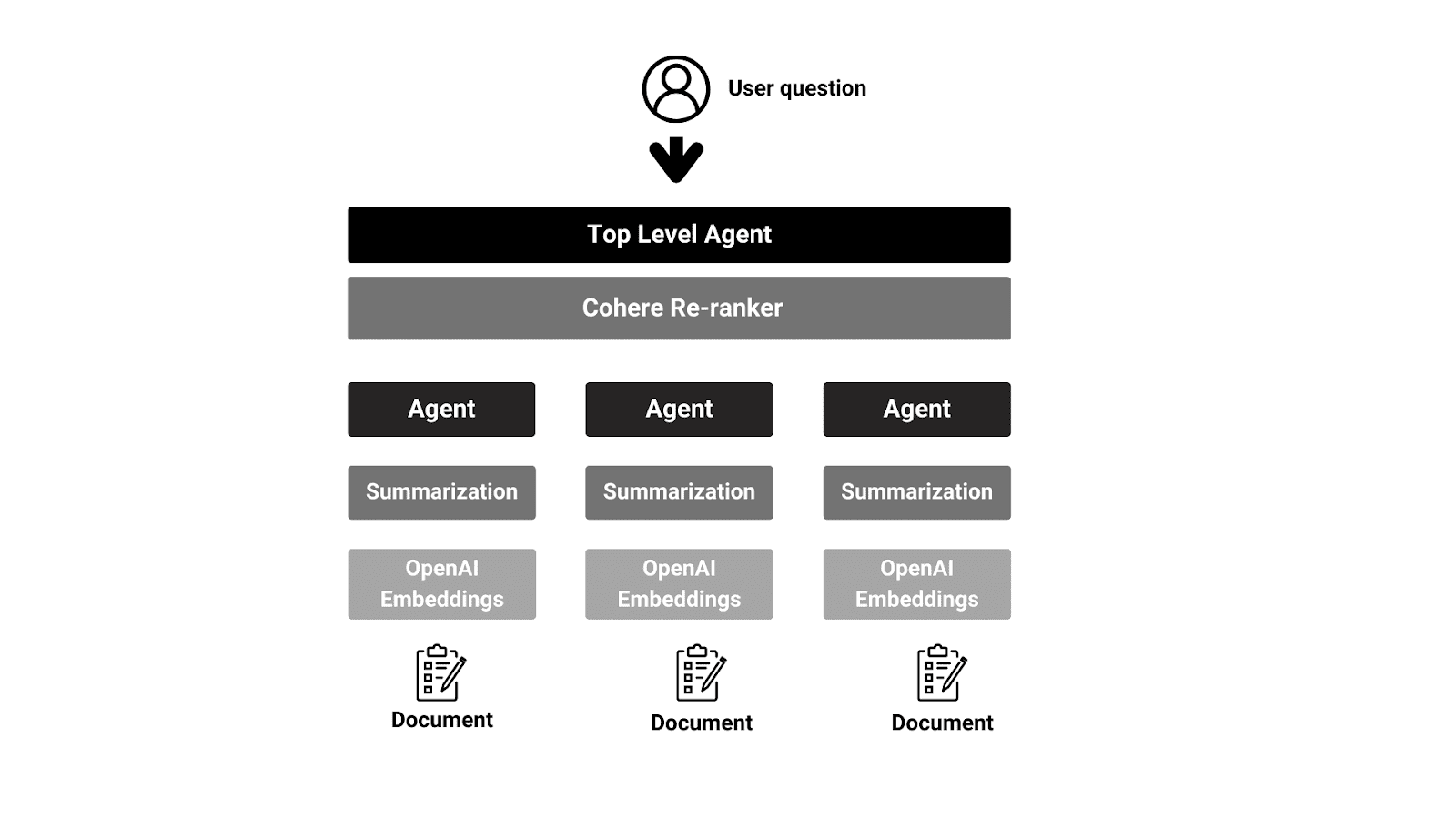

This image illustrates the different layers of agents, from the top-level agent to the subordinate document agents. Source: LlamaIndex

Benefits of using Agentic RAG

Using an agent-based implementation in retrieval-augmented generation (RAG) offers several benefits, including task specialization, parallel processing, scalability, flexibility, and fault tolerance. Each is explained below:

- Task specialization: Agent-based RAG allows tasks to be divided among different agents. Each agent can focus on a specific aspect of the task, such as retrieving documents, summarizing, or answering questions. This specialization improves efficiency and accuracy by ensuring that each agent is well suited to its assigned role.

- Parallel processing: Agents in an agent-based RAG system can work in parallel, handling different aspects of the task simultaneously. When those subtasks are independent, this can shorten response times and improve overall performance, especially with large datasets or complex tasks.

- Scalability: Agent-based RAG architectures are inherently scalable. New agents can be added to the system as needed, allowing it to handle increasing workloads or accommodate additional functionality without significant changes to the overall architecture. This scalability ensures that the system can grow and adapt to changing requirements over time.

- Flexibility: These systems offer flexibility in task assignment and resource management. Agents can be dynamically assigned to tasks based on workload, priority, or specific requirements, enabling efficient resource utilization and adaptability to different workloads or user demands.

- Fault tolerance: Agent-based RAG architectures lend themselves to fault tolerance. If one agent fails or becomes unavailable, the other agents can continue their tasks independently, reducing the risk of system downtime or data loss. This improves the reliability and robustness of the system, allowing it to keep serving requests even when individual components fail.
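Parallel processing and fault tolerance can be illustrated together: a coordinating agent sends a question to every document agent concurrently and records a failing agent's error instead of letting it abort the whole request. This sketch uses Python's standard `concurrent.futures.ThreadPoolExecutor`; the agents are hypothetical callables that map a question to an answer string, and `fan_out` is an illustrative name, not a library function.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical agent interface: takes a question, returns an answer string.
AgentFn = Callable[[str], str]

def fan_out(question: str, agents: dict[str, AgentFn]) -> tuple[dict[str, str], dict[str, str]]:
    """Query every agent concurrently.

    Returns (answers, failures): answers maps agent name to its reply,
    failures maps agent name to a description of the error it raised.
    """
    answers: dict[str, str] = {}
    failures: dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max(1, len(agents))) as pool:
        # Submit all calls up front so they run at the same time.
        futures = {name: pool.submit(fn, question) for name, fn in agents.items()}
        for name, future in futures.items():
            try:
                # result() re-raises any exception the agent raised in its thread.
                answers[name] = future.result()
            except Exception as exc:  # isolate the failure to this one agent
                failures[name] = f"{type(exc).__name__}: {exc}"
    return answers, failures

def healthy_agent(question: str) -> str:
    return f"Summary for: {question}"

def flaky_agent(question: str) -> str:
    # Simulates a document agent whose backing store is down.
    raise ConnectionError("vector store unavailable")

answers, failures = fan_out("What changed in v2?",
                            {"docs_a": healthy_agent, "docs_b": flaky_agent})
print(answers)
print(failures)
```

Threads suit this workload because agent calls are typically I/O-bound (network requests to an LLM or a vector store). The speed-up only materializes when subtasks are independent; an agent that needs another agent's output still has to wait for it.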

Now that we know what Agentic RAG is, in the next part we will implement an agentic RAG system.

Olumide Shittu is a software engineer and technical writer passionate about leveraging cutting-edge technologies to craft compelling narratives, with a keen eye for detail and a knack for simplifying complex concepts. You can also find Shittu on Twitter (twitter.com/Shittu_Olumide_).
