MobileNet is an open-source model created to support the rise of smartphones. It uses a CNN architecture to perform computer vision tasks such as image classification and object detection. Models built on this architecture usually demand substantial computation and hardware resources, but MobileNet was designed to run on mobile and embedded devices.

Over the years, this model has been used in several real-world applications. It also has notable capabilities, such as reducing the number of parameters through depthwise separable convolution. This technique helps the model run within the limited hardware resources of mobile devices.

We will discuss how this model efficiently classifies images using a pretrained image classifier built on depthwise separable convolutions.

Learning objectives

- Learn about MobileNet and its working principle.

- Gain insight into MobileNet's architecture.

- Run MobileNet inference to perform image classification.

- Explore MobileNet's real-life applications.

This article was published as a part of the Data Science Blogathon.

MobileNet working principle

MobileNet's working principle is one of the most important parts of the model's structure. It describes the techniques and methods used to build the model and make it adaptable to mobile and embedded devices. The design leverages the convolutional neural network (CNN) architecture so that the model can run on mobile devices.

However, a crucial part of this architecture is the use of depthwise separable convolution to reduce the number of parameters. This method uses two operations: depthwise convolution and pointwise convolution.

Standard convolution

A standard convolution starts with a filter (kernel), which detects image features such as edges, textures, or patterns. The filter slides across the width and height of the image, and each step involves an element-wise multiplication followed by a summation. The sum yields a single number, and together these numbers form a feature map that represents the presence and strength of the features the filter detects.

However, this comes with a high computational cost and a higher parameter count, hence the need for depthwise and pointwise convolution.
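The sliding multiply-and-sum described above can be sketched in a few lines of plain Python. This is a minimal illustration for a single channel with no padding and a stride of 1; the toy image, the kernel values, and the function name `conv2d_single_channel` are assumptions made for the example and are not part of MobileNet.

```python
def conv2d_single_channel(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    As in deep learning frameworks, the kernel is not flipped.
    """
    img_h, img_w = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])
    out_h, out_w = img_h - k_h + 1, img_w - k_w + 1

    feature_map = []
    for top in range(out_h):
        row = []
        for left in range(out_w):
            # Element-wise multiply the kernel with the patch under it, then sum.
            total = 0
            for i in range(k_h):
                for j in range(k_w):
                    total += image[top + i][left + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Toy 4x4 image with a vertical edge between columns 1 and 2 (illustrative values).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Simple 2x2 vertical-edge kernel (illustrative, not taken from MobileNet).
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d_single_channel(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The non-zero column in the result marks where the kernel sits on the edge, which is what "presence and strength" of a feature means in practice.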

How do depthwise and pointwise convolution work?

Depthwise convolution applies a single filter to each input channel, while pointwise convolution combines the outputs of the depthwise convolution to create new features from the image.

So the difference here is that, because depthwise convolution applies only one filter per channel, the number of multiplications is reduced, and the output has the same number of channels as the input. That output then feeds the pointwise convolution.

Pointwise convolution uses a 1 × 1 filter that combines or expands features. This helps the model learn different patterns by mixing information across channels to create a new feature map. It also lets pointwise convolution increase or decrease the number of channels in the output feature map.
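To make the saving concrete, here is a rough cost comparison between a standard convolution and a depthwise convolution followed by a pointwise one. It is a back-of-the-envelope sketch that ignores biases; the layer shape (3 × 3 kernel, 32 input channels, 64 output channels, 112 × 112 output) and the function names are assumptions chosen for illustration.

```python
def standard_conv_cost(in_ch, out_ch, kernel, out_h, out_w):
    """Parameters and multiplications for a standard k x k convolution (no bias)."""
    params = kernel * kernel * in_ch * out_ch
    mults = params * out_h * out_w  # every weight is used once per output position
    return params, mults


def depthwise_separable_cost(in_ch, out_ch, kernel, out_h, out_w):
    """Parameters and multiplications for depthwise + pointwise convolution (no bias)."""
    depthwise_params = kernel * kernel * in_ch  # one k x k filter per input channel
    pointwise_params = in_ch * out_ch           # 1 x 1 filters that mix channels
    params = depthwise_params + pointwise_params
    mults = params * out_h * out_w
    return params, mults


# Illustrative layer shape, not a specific MobileNet layer.
std_params, std_mults = standard_conv_cost(32, 64, 3, 112, 112)
sep_params, sep_mults = depthwise_separable_cost(32, 64, 3, 112, 112)

print("standard parameters: ", std_params)   # 18432
print("separable parameters:", sep_params)   # 2336
print(f"ratio: {sep_params / std_params:.3f}")  # 0.127
```

For a k × k kernel and N output channels, the ratio works out to 1/N + 1/k², so with 3 × 3 kernels the separable form needs roughly one eighth to one ninth of the work.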

MobileNet architecture

This computer vision model is based on the CNN architecture and performs image classification and object detection tasks. Depthwise separable convolution adapts the model to mobile and embedded devices, since it allows lightweight neural networks to be built.

This mechanism reduces parameter counts and latency to meet resource constraints. The architecture supports both efficiency and accuracy in the model's output.

The second version of this model (MobileNetV2) was built with an improvement. MobileNetV2 introduced a special type of building block called inverted residuals with bottlenecks. These blocks help reduce the number of processed channels, which makes the model more efficient. It also includes shortcut connections between bottleneck layers to improve information flow. Instead of using a standard activation function (ReLU) in the last layer of each block, it uses a linear activation, which works better because the data is low-dimensional at that stage and a nonlinearity would discard information.
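The structure of one inverted residual block can be traced with a small helper. This sketch only tracks channel counts and activations rather than running any convolution. The expansion factor of 6 and the use of ReLU6 follow the defaults described in the MobileNetV2 paper, not this article, and the function name `inverted_residual_plan` is hypothetical.

```python
def inverted_residual_plan(in_ch, out_ch, stride=1, expand_ratio=6):
    """Describe the three convolutions of one inverted residual block.

    Returns (layers, use_shortcut), where each layer is a tuple of
    (name, input channels, output channels, activation).
    """
    hidden_ch = in_ch * expand_ratio  # assumed expansion factor (6 in the paper)
    layers = [
        ("expand 1x1", in_ch, hidden_ch, "ReLU6"),         # widen the narrow input
        ("depthwise 3x3", hidden_ch, hidden_ch, "ReLU6"),  # filter each channel on its own
        ("project 1x1", hidden_ch, out_ch, "linear"),      # linear bottleneck, no ReLU
    ]
    # The shortcut is only valid when input and output shapes match.
    use_shortcut = stride == 1 and in_ch == out_ch
    return layers, use_shortcut


layers, use_shortcut = inverted_residual_plan(in_ch=24, out_ch=24)
for name, c_in, c_out, activation in layers:
    print(f"{name:<14} {c_in:>4} -> {c_out:<4} {activation}")
print("shortcut:", use_shortcut)
```

The block is called "inverted" because the shortcut connects the narrow bottleneck ends, while the wide layers sit in the middle.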

How to run this model?

Using this model for image classification takes a few steps. The model receives an input image and classifies it using its built-in prediction classes. Let's dive into the steps for running MobileNet:

Import necessary libraries for image classification

You need to import a few essential modules to run this model. Start by importing the image processor and the image classification module from the transformers library. They help preprocess images and load a pretrained model, respectively. In addition, PIL is used to manipulate images, while 'requests' lets you fetch images from the web.

from transformers import AutoImageProcessor, AutoModelForImageClassification

from PIL import Image

import requests

Load input image

image = Image.open('/content/imagef-ishfromunsplash-ezgif.com-webp-to-jpg-converter.jpg')

The 'Image.open' function from the PIL library loads the image from a file path, which in this case points to a file on our local device. An alternative would be to fetch the image using its URL.
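The URL alternative mentioned above could look like the following sketch, which accepts either a local path or a web address. The helper names `is_url` and `load_image` are hypothetical, and the URL in the usage comment is a placeholder rather than a real image.

```python
from io import BytesIO
from urllib.parse import urlparse


def is_url(source):
    """Return True when `source` looks like an http(s) URL rather than a file path."""
    return urlparse(source).scheme in ("http", "https")


def load_image(source):
    """Open an image from a URL or a local path and return it as an RGB PIL image."""
    # Imported here so the URL check above stays usable without these packages.
    from PIL import Image
    import requests

    if is_url(source):
        response = requests.get(source, timeout=10)
        response.raise_for_status()  # fail early on 4xx/5xx responses
        return Image.open(BytesIO(response.content)).convert("RGB")
    return Image.open(source).convert("RGB")


# Usage (placeholder URL, replace with a real image address):
# image = load_image("https://example.com/fish.jpg")
```

Converting to RGB is a defensive choice for this sketch, since images with an alpha channel or a single grayscale channel would otherwise reach the preprocessor in a different mode.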

Loading the pretrained model for image classification

The following code initializes 'AutoImageProcessor' from the pretrained MobileNetV2 model. This part handles image preprocessing before the image is fed to the model. The second line then loads the corresponding MobileNetV2 model for image classification.

preprocessor = AutoImageProcessor.from_pretrained("google/mobilenet_v2_1.0_224")

model = AutoModelForImageClassification.from_pretrained("google/mobilenet_v2_1.0_224")

Input processing

In this step, the image is preprocessed into a format suitable for PyTorch. It is then passed through the model to generate output logits, which can be converted into probabilities using softmax.

inputs = preprocessor(images=image, return_tensors="pt")

outputs = model(**inputs)

logits = outputs.logits

Output

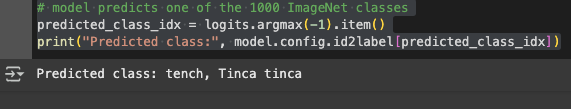

# model predicts one of the 1000 ImageNet classes

predicted_class_idx = logits.argmax(-1).item()

print("Predicted class:", model.config.id2label[predicted_class_idx])

This code finds the class with the highest prediction score in the model output (logits) and retrieves its corresponding label from the model configuration. The predicted class label is then printed.

Here is the output:

Here is a link to the Colab file.
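The input-processing step mentions softmax, while the prediction snippet only takes the argmax of the logits. As a minimal sketch of the missing step, the code below turns a list of logits into probabilities and ranks the classes. The logit values and the four labels are made up for illustration, and the function names are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(probabilities, labels, k=3):
    """Return the k most likely (label, probability) pairs, best first."""
    order = sorted(range(len(probabilities)), key=lambda i: probabilities[i], reverse=True)
    return [(labels[i], probabilities[i]) for i in order[:k]]


# Hypothetical logits and labels, standing in for the model's 1000-class output.
logits = [2.0, 0.5, -1.0, 4.0]
labels = ["tench", "goldfish", "great white shark", "tiger shark"]

probabilities = softmax(logits)
for label, probability in top_k(probabilities, labels):
    print(f"{label}: {probability:.3f}")
```

With the PyTorch tensor produced by the model, `torch.softmax(logits, dim=-1)` computes the same probabilities. Softmax does not change which class ranks first, so the argmax prediction stays the same; it only makes the scores readable as confidences.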

Applications of this model

MobileNet has found applications in several real-life use cases and has been used in several fields, including healthcare. These are some of the applications of this model:

- During the COVID pandemic, MobileNet was used to classify chest X-rays into three categories: normal, COVID, and viral pneumonia. The results also showed very high accuracy.

- MobileNetV2 was also efficient at detecting two major forms of skin cancer. This innovation was significant because healthcare providers in areas that could not afford stable internet connectivity were able to rely on this model.

- In agriculture, this model was also crucial for detecting leaf diseases in tomato crops. With a mobile application, this model can help detect 10 common leaf diseases.

You can also read more about the model here: [Link](https://viso.ai/deep-learning/mobilenet-efficient-deep-learning-for-mobile-vision/)

Conclusion

MobileNet is the result of a masterclass by Google researchers in bringing models with high computational costs to mobile devices without compromising their efficiency. The model is built on an architecture that enables image classification and detection directly from mobile applications. Use cases in healthcare and agriculture are evidence of this model's capabilities.

Key takeaways

There are a few talking points about how this model works, from its architecture to its applications. Here are some highlights of this article:

- Improved architecture: the second version, MobileNetV2, introduced inverted residuals and bottleneck layers. This development improved efficiency and accuracy while keeping the model lightweight.

- Efficient mobile optimization: the model's design for mobile and embedded devices means it runs on limited computational resources while still delivering effective performance.

- Real-world applications: MobileNet has been used successfully in healthcare (e.g., COVID-19 and skin cancer detection) and agriculture (e.g., detecting leaf diseases in crops).

The media shown in this article is not owned by Analytics Vidhya and is used at the author's discretion.

Frequently asked questions

Ans. MobileNetV2 uses depthwise separable convolution and inverted residuals, which makes it more efficient for mobile and embedded systems than traditional CNNs.

Ans. MobileNetV2 is optimized for low-latency, real-time image classification tasks, which makes it suitable for mobile and edge devices.

Ans. While MobileNetV2 is optimized for efficiency, it maintains accuracy close to that of larger models, which makes it a solid option for mobile applications.
