OpenAI's Agents SDK has taken things up a notch with the launch of its voice agent feature, which lets you build intelligent, real-time, speech-based applications. Whether you are building a language tutor, a virtual assistant, or a support bot, this new capability provides a whole new level of interaction: natural, dynamic, and human. Let's break down what it is, how it works, and how you can build a multilingual voice agent yourself.

What is a voice agent?

A voice agent is a system that listens to your voice, understands what you are saying, thinks of a response, and then replies out loud. The magic comes from a combination of speech-to-text, language models, and text-to-speech technologies.

The OpenAI Agents SDK makes this very accessible through something called a VoicePipeline, a structured 3-step process:

- Speech-to-text (STT): Captures your spoken words and converts them into text.

- Agent logic: This is your code (or your agent), which works out the appropriate response.

- Text-to-speech (TTS): Converts the agent's text response into audio that is spoken out loud.
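
The three steps above can be sketched as a simple chain of functions. This is only a conceptual illustration: `fake_stt`, `fake_agent`, `fake_tts`, and `run_voice_turn` are hypothetical stand-ins invented for this sketch, not part of the SDK, and the "audio" here is just UTF-8 bytes.

```python
from typing import Callable


# Hypothetical stand-ins for the three stages; a real pipeline would call
# actual speech-to-text, LLM, and text-to-speech models here.
def fake_stt(audio: bytes) -> str:
    """Pretend transcription: decode the bytes as UTF-8 text."""
    return audio.decode("utf-8")


def fake_agent(transcript: str) -> str:
    """Pretend agent logic: produce a canned reply to the transcript."""
    return f"You said: {transcript}"


def fake_tts(text: str) -> bytes:
    """Pretend synthesis: encode the reply text back into bytes."""
    return text.encode("utf-8")


def run_voice_turn(
    audio: bytes,
    stt: Callable[[bytes], str] = fake_stt,
    agent: Callable[[str], str] = fake_agent,
    tts: Callable[[str], bytes] = fake_tts,
) -> bytes:
    """Chain the three stages: audio in -> text -> reply text -> audio out."""
    transcript = stt(audio)
    reply = agent(transcript)
    return tts(reply)


if __name__ == "__main__":
    print(run_voice_turn(b"hello"))
```

Because each stage is just a callable, any one of them can be swapped out without touching the other two, which is the main appeal of a staged design.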

Choose the right architecture

Depending on your use case, you will want to choose one of the two main architectures supported by OpenAI:

1. Speech-to-speech (multimodal) architecture

This is the real-time audio approach that uses models such as gpt-4o-realtime-preview. Instead of transcribing to text behind the scenes, the model processes and generates speech directly.

Why use this?

- Low-latency, real-time interaction

- Understanding of emotion and vocal tone

- Natural, smooth conversation flow

Perfect for:

- Language tutoring

- Live conversational agents

- Interactive storytelling or learning applications

| Strengths | Best for |
|---|---|
| Low latency | Interactive, unstructured dialogue |
| Multimodal understanding (voice, tone, pauses) | Real-time engagement |
| Emotion-aware responses | Customer service, virtual companions |

This approach makes conversations feel fluid and human, but it may need more attention in edge cases such as logging or exact transcripts.

2. Chained architecture

The chained method is more traditional: speech is converted to text, the LLM processes that text, and then the response is converted back to speech. The recommended models here are:

- gpt-4o-transcribe (for STT)

- gpt-4o (for logic)

- gpt-4o-mini-tts (for TTS)

Why use this?

- You need transcripts for auditing or logging

- You have structured workflows such as customer service or lead qualification

- You want predictable, controllable behavior

Perfect for:

- Support bots

- Sales agents

- Task-specific assistants

| Strengths | Best for |
|---|---|
| High control and transparency | Structured workflows |
| Reliable text-based processing | Applications that need transcripts |
| Predictable outputs | Customer-facing scripted flows |

This approach is easier to debug and a great starting point if you are new to voice agents.
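
As a rough way to summarize the trade-offs between the two architectures, here is a small decision helper. The `Requirements` fields and the `choose_architecture` function are hypothetical names made up for this sketch, and the rules simply encode the guidance in this section rather than any official recommendation logic.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    needs_transcripts: bool    # e.g. auditing or logging
    structured_workflow: bool  # e.g. scripted support or sales flows
    needs_low_latency: bool    # e.g. live tutoring or companions


def choose_architecture(req: Requirements) -> str:
    """Return 'chained' or 'speech-to-speech' using the trade-offs above."""
    # Transcripts and structured flows favor the text-based chained approach.
    if req.needs_transcripts or req.structured_workflow:
        return "chained"
    # Otherwise, latency-sensitive free-form dialogue favors multimodal.
    if req.needs_low_latency:
        return "speech-to-speech"
    # Default to chained, described above as the easier starting point.
    return "chained"
```

For example, a support bot that must keep transcripts would land on the chained option even if low latency is also desirable.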

How does the voice agent work?

We set up a VoicePipeline with a custom workflow. This workflow runs an agent, but it can also trigger special responses if you say a secret word.

This is what happens when you talk:

- Audio goes into the VoicePipeline as you speak.

- When you stop talking, the pipeline kicks into action.

- The pipeline then:

  - Transcribes your speech to text.

  - Sends the transcription to the workflow, which runs the agent's logic.

  - Streams the agent's response to a text-to-speech (TTS) model.

  - Plays the generated audio back to you.

It is real-time, interactive, and smart enough to react differently if you slip in a hidden phrase.
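
The secret-word behavior can be sketched as a workflow function that inspects the transcription before deciding whether to call the agent. This sketch deliberately avoids the SDK: `SECRET_WORD`, `echo_agent`, `workflow`, and `collect` are hypothetical names, and the agent call is replaced by a stub so the example runs on its own.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable, List

SECRET_WORD = "dog"  # hypothetical trigger phrase


async def echo_agent(transcription: str) -> str:
    """Stand-in for the real agent call."""
    return f"Here is my answer to: {transcription}"


async def workflow(
    transcription: str,
    agent: Callable[[str], Awaitable[str]] = echo_agent,
) -> AsyncIterator[str]:
    """Yield the text to be spoken for one transcribed turn."""
    # Short-circuit: the secret word skips the agent entirely.
    if SECRET_WORD in transcription.lower():
        yield "You guessed the secret word!"
        return
    # Normal path: hand the transcript to the agent and speak its reply.
    yield await agent(transcription)


async def collect(transcription: str) -> List[str]:
    """Gather everything the workflow yields for one turn."""
    return [chunk async for chunk in workflow(transcription)]


if __name__ == "__main__":
    print(asyncio.run(collect("Do you like my dog?")))
    print(asyncio.run(collect("What is the capital of France?")))
```

Yielding text instead of returning it keeps the door open for streaming partial replies to the TTS step as they become available.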

Configuring a pipeline

When setting up a voice pipeline, there are a few key components you can customize:

- Workflow: This is the logic that runs every time new audio is transcribed. It defines how the agent processes and responds.

- STT and TTS models: Choose which speech-to-text and text-to-speech models your pipeline will use.

- Config settings: This is where you adjust how your pipeline behaves:

  - Model provider: A mapping system that links model names to actual model instances.

  - Tracing options: Control whether tracing is enabled, whether audio files can be uploaded, and assign workflow names, trace IDs, and more.

  - Model-specific settings: Customize prompts, language preferences, and supported data types for the TTS and STT models.
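
To make the "model provider" idea more concrete, here is a minimal sketch of a registry that maps model names to model instances, bundled with a few of the other settings listed above. Everything here (`FakeModel`, `ModelRegistry`, `PipelineSettings`, and their fields) is a hypothetical stand-in for illustration and does not mirror the SDK's actual configuration classes.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class FakeModel:
    """Stand-in for an STT or TTS model instance."""
    name: str


class ModelRegistry:
    """Hypothetical provider that maps model names to model instances."""

    def __init__(self) -> None:
        self._factories: Dict[str, Callable[[], FakeModel]] = {}

    def register(self, name: str, factory: Callable[[], FakeModel]) -> None:
        self._factories[name] = factory

    def get(self, name: str) -> FakeModel:
        if name not in self._factories:
            raise KeyError(f"No model registered under {name!r}")
        return self._factories[name]()


@dataclass
class PipelineSettings:
    """Hypothetical bundle of the settings described above."""
    provider: ModelRegistry
    stt_model: str
    tts_model: str
    tracing_enabled: bool = True
    workflow_name: str = "Voice Agent"
    language: str = "en"  # model-specific preference for the STT step


registry = ModelRegistry()
registry.register("gpt-4o-transcribe", lambda: FakeModel("gpt-4o-transcribe"))
registry.register("gpt-4o-mini-tts", lambda: FakeModel("gpt-4o-mini-tts"))

settings = PipelineSettings(
    provider=registry,
    stt_model="gpt-4o-transcribe",
    tts_model="gpt-4o-mini-tts",
    tracing_enabled=False,
    language="hi",
)
```

Looking models up by name keeps the rest of the pipeline code independent of which concrete model classes are in use.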

Running a voice pipeline

To start a voice pipeline, you use the run() method. It accepts audio input in one of two forms, depending on how you are handling speech:

- AudioInput is ideal when you already have a complete audio clip or transcript. It is perfect for cases where you know when the speaker is done, such as pre-recorded audio or push-to-talk setups. There is no need for live activity detection here.

- StreamedAudioInput is designed for real-time, dynamic input. You feed in audio chunks as they are captured, and the voice pipeline automatically works out when to trigger the agent logic using something called activity detection. This is very useful for open microphones or hands-free interaction where it is not obvious when the speaker has finished.
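
To give a feel for what activity detection has to do with streamed chunks, here is a deliberately simple energy-threshold sketch. It is not the SDK's detection method: the thresholds, `mean_amplitude`, `split_turns`, and `pcm` are all assumptions made up for this illustration, and the input is assumed to be 16-bit little-endian mono PCM.

```python
import struct
from typing import Iterable, Iterator, List

SILENCE_THRESHOLD = 500   # hypothetical mean-amplitude cutoff for "silence"
SILENT_CHUNKS_TO_END = 2  # hypothetical: this many quiet chunks end a turn


def mean_amplitude(chunk: bytes) -> float:
    """Average absolute value of 16-bit little-endian PCM samples."""
    count = len(chunk) // 2
    if count == 0:
        return 0.0
    samples = struct.unpack(f"<{count}h", chunk[: count * 2])
    return sum(abs(s) for s in samples) / count


def split_turns(chunks: Iterable[bytes]) -> Iterator[bytes]:
    """Group loud chunks into turns; a turn ends after sustained silence."""
    buffered: List[bytes] = []
    quiet_run = 0
    for chunk in chunks:
        if mean_amplitude(chunk) >= SILENCE_THRESHOLD:
            buffered.append(chunk)
            quiet_run = 0
        elif buffered:
            quiet_run += 1
            if quiet_run >= SILENT_CHUNKS_TO_END:
                yield b"".join(buffered)  # speaker finished: emit the turn
                buffered, quiet_run = [], 0
    if buffered:
        yield b"".join(buffered)  # flush speech still pending at stream end


def pcm(value: int, n: int = 4) -> bytes:
    """Build a chunk of n identical 16-bit samples (demo helper)."""
    return struct.pack(f"<{n}h", *([value] * n))


if __name__ == "__main__":
    loud, quiet = pcm(1000), pcm(0)
    turns = list(split_turns([quiet, loud, loud, quiet, quiet, loud, quiet]))
    print(len(turns))
```

With a complete, pre-recorded clip none of this is needed, which is why the simpler input type suits push-to-talk setups.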

Understanding the results

Once your pipeline is running, it returns a StreamedAudioResult, which lets you stream events in real time as the interaction unfolds. These events come in a few flavors:

- VoiceStreamEventAudio – Contains chunks of audio output (that is, what the agent says).

- VoiceStreamEventLifecycle – Marks important lifecycle events, such as the start or end of a conversation turn.

- VoiceStreamEventError – Signals that something went wrong.
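
A consumer of such a stream typically branches on the event kind. The sketch below imitates that with hypothetical stand-in classes (`AudioEvent`, `LifecycleEvent`, `ErrorEvent`) and a fake stream, so it runs without the SDK. The audio and lifecycle type tags match the strings used in the example code later in this article; the error tag and the lifecycle names are assumptions chosen by analogy.

```python
import asyncio
from dataclasses import dataclass
from typing import AsyncIterator, List, Union


# Hypothetical stand-ins that mimic the three event kinds by their type tag.
@dataclass
class AudioEvent:
    data: bytes
    type: str = "voice_stream_event_audio"


@dataclass
class LifecycleEvent:
    event: str
    type: str = "voice_stream_event_lifecycle"


@dataclass
class ErrorEvent:
    error: Exception
    type: str = "voice_stream_event_error"  # assumed tag, by analogy


Event = Union[AudioEvent, LifecycleEvent, ErrorEvent]


async def fake_stream() -> AsyncIterator[Event]:
    """Simulate the events of one conversation turn."""
    yield LifecycleEvent("turn_started")
    yield AudioEvent(b"\x01\x02")
    yield AudioEvent(b"\x03\x04")
    yield LifecycleEvent("turn_ended")


async def consume(stream: AsyncIterator[Event]) -> bytes:
    """Collect audio, log lifecycle markers, and stop on the first error."""
    audio: List[bytes] = []
    async for event in stream:
        if event.type == "voice_stream_event_audio":
            audio.append(event.data)
        elif event.type == "voice_stream_event_lifecycle":
            print(f"lifecycle: {event.event}")
        elif event.type == "voice_stream_event_error":
            raise RuntimeError("voice stream failed") from event.error
    return b"".join(audio)


if __name__ == "__main__":
    print(asyncio.run(consume(fake_stream())))
```

Handling the error branch explicitly avoids silently dropping a failed turn, which is easy to miss when only the audio branch is implemented.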

Hands-on: building a voice agent with the OpenAI Agents SDK


1. Set up your project directory

mkdir my_project

cd my_project

2. Create and activate a virtual environment

Create the environment:

python -m venv .venv

Activate it:

source .venv/bin/activate

3. Install the OpenAI Agents SDK

pip install "openai-agents[voice]"

4. Set an OpenAI API key

export OPENAI_API_KEY=sk-...

5. Clone the example repository

git clone https://github.com/openai/openai-agents-python.git

6. Modify the example code to add a Hindi agent and audio saving

Navigate to the example directory:

cd openai-agents-python/examples/voice/static

Now, edit main.py.

You will do two key things:

- Add a Hindi agent

- Enable audio saving after playback

Replace the entire contents of main.py with this final code:

    import asyncio
    import os
    import random
    import time
    import wave

    from agents import Agent, function_tool
    from agents.extensions.handoff_prompt import prompt_with_handoff_instructions
    from agents.voice import (
        AudioInput,
        SingleAgentVoiceWorkflow,
        SingleAgentWorkflowCallbacks,
        VoicePipeline,
    )

    from .util import AudioPlayer, record_audio


    @function_tool
    def get_weather(city: str) -> str:
        """Return a randomly chosen weather report for the given city."""
        print(f"(debug) get_weather called with city: {city}")
        choices = ["sunny", "cloudy", "rainy", "snowy"]
        return f"The weather in {city} is {random.choice(choices)}."


    spanish_agent = Agent(
        name="Spanish",
        handoff_description="A spanish speaking agent.",
        instructions=prompt_with_handoff_instructions(
            "You're speaking to a human, so be polite and concise. Speak in Spanish.",
        ),
        model="gpt-4o-mini",
    )

    # New: a Hindi-speaking agent, mirroring the Spanish one.
    hindi_agent = Agent(
        name="Hindi",
        handoff_description="A hindi speaking agent.",
        instructions=prompt_with_handoff_instructions(
            "You're speaking to a human, so be polite and concise. Speak in Hindi.",
        ),
        model="gpt-4o-mini",
    )

    # The entry-point agent hands off to a language-specific agent when needed.
    agent = Agent(
        name="Assistant",
        instructions=prompt_with_handoff_instructions(
            "You're speaking to a human, so be polite and concise. If the user speaks in Spanish, handoff to the spanish agent. If the user speaks in Hindi, handoff to the hindi agent.",
        ),
        model="gpt-4o-mini",
        handoffs=[spanish_agent, hindi_agent],
        tools=[get_weather],
    )


    class WorkflowCallbacks(SingleAgentWorkflowCallbacks):
        def on_run(self, workflow: SingleAgentVoiceWorkflow, transcription: str) -> None:
            print(f"(debug) on_run called with transcription: {transcription}")


    async def main():
        pipeline = VoicePipeline(
            workflow=SingleAgentVoiceWorkflow(agent, callbacks=WorkflowCallbacks())
        )

        # Record a complete clip first, then run the pipeline on it.
        audio_input = AudioInput(buffer=record_audio())
        result = await pipeline.run(audio_input)

        # Create a list to store all audio chunks
        all_audio_chunks = []

        with AudioPlayer() as player:
            async for event in result.stream():
                if event.type == "voice_stream_event_audio":
                    audio_data = event.data
                    player.add_audio(audio_data)
                    all_audio_chunks.append(audio_data)
                    print("Received audio")
                elif event.type == "voice_stream_event_lifecycle":
                    print(f"Received lifecycle event: {event.event}")

        # Save the combined audio to a file
        if all_audio_chunks:
            os.makedirs("output", exist_ok=True)
            filename = f"output/response_{int(time.time())}.wav"

            with wave.open(filename, "wb") as wf:
                wf.setnchannels(1)  # mono
                wf.setsampwidth(2)  # 16-bit samples
                # If the saved file plays back too slow or too fast, change this
                # to match the sample rate of the audio the pipeline produces.
                wf.setframerate(16000)
                wf.writeframes(b"".join(all_audio_chunks))

            print(f"Audio saved to {filename}")


    if __name__ == "__main__":
        asyncio.run(main())
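
The audio-saving step can also be pulled out into a small helper that is easy to test on its own. This is a sketch using only the standard library: `save_pcm16_wav` and `wav_duration_seconds` are hypothetical helper names, and the input is assumed to be mono 16-bit PCM chunks. The sample rate is a parameter because a WAV header with the wrong rate makes playback run too slow or too fast.

```python
import os
import tempfile
import wave
from typing import Iterable


def save_pcm16_wav(chunks: Iterable[bytes], path: str, sample_rate: int) -> int:
    """Write mono 16-bit PCM chunks to a WAV file; return frames written."""
    data = b"".join(chunks)
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)  # mono
        wf.setsampwidth(2)  # 2 bytes per sample = 16-bit
        wf.setframerate(sample_rate)
        wf.writeframes(data)
    return len(data) // 2


def wav_duration_seconds(path: str) -> float:
    """Read the file back and compute its duration from the header."""
    with wave.open(path, "rb") as wf:
        return wf.getnframes() / wf.getframerate()


if __name__ == "__main__":
    # Demo: half a second of silence at 16 kHz, written to a temp directory.
    demo_path = os.path.join(tempfile.mkdtemp(), "demo.wav")
    save_pcm16_wav([b"\x00\x00" * 8000], demo_path, 16000)
    print(wav_duration_seconds(demo_path))
```

Checking the duration after saving is a quick sanity test: if it does not match how long the agent actually spoke, the sample rate passed in is probably wrong.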

6. Execute the voice agent

Be sure to be in the right board:

cd openai-agents-pythonThen, spear:

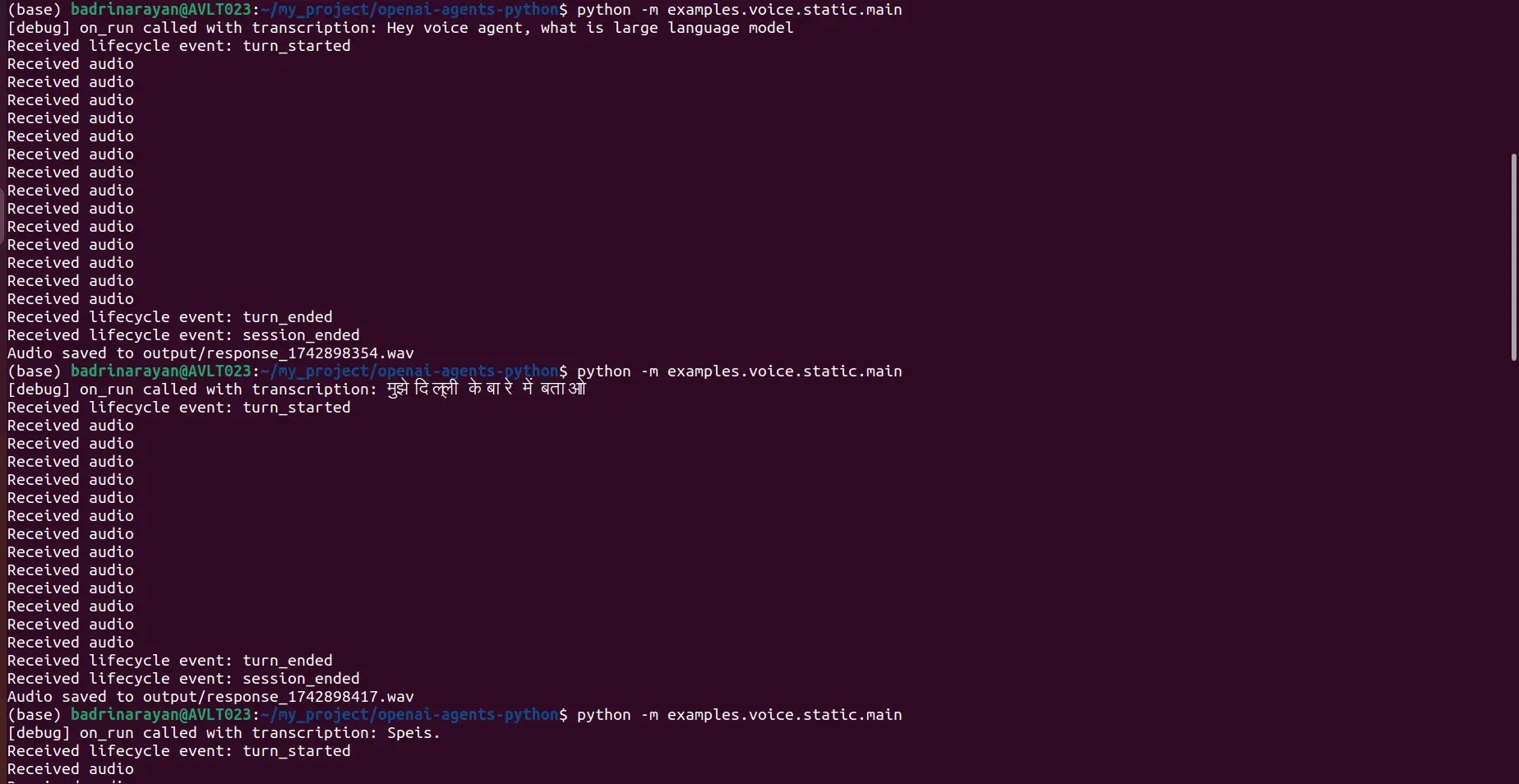

python -m examples.voice.static.mainI asked the agent two things, one in English and one in Hindi:

- Voice notice: Hello, voice agent, what is a large language model?

- Voice notice: “Tell me about Delhi

Here is the terminal output:

Output

English response:

Hindi response:

Additional resources

Want to dig deeper? Check these out:

Also read: OpenAI audio models: how to access, features, applications, and more

Conclusion

Building a voice agent with the OpenAI Agents SDK is much more accessible now: you no longer need to stitch together a ton of tools. Simply choose the right architecture, set up your VoicePipeline, and let the SDK do the heavy lifting.

If you are going for a high-quality conversational flow, go multimodal. If you want structure and control, go chained. Either way, this technology is powerful, and it will only get better. If you are building one, let me know in the comments section below.
