This post was co-written with Greg Benson, Chief Scientist; Aaron Kesler, Sr. Product Manager; and Rich Dill, Enterprise Solutions Architect from SnapLogic.

Many customers are building generative AI apps on Amazon Bedrock and Amazon CodeWhisperer to create code artifacts based on natural language. This use case highlights how large language models (LLMs) can act as translators between human languages (English, Spanish, Arabic, and more) and machine-interpretable languages (Python, Java, Scala, SQL, and so on), along with sophisticated internal reasoning. This emergent ability has compelled software developers to use LLMs as an automation and UX enhancement tool that transforms natural language into a domain-specific language (DSL): system instructions, API requests, code artifacts, and more. In this post, we show you how SnapLogic, an AWS customer, used Amazon Bedrock to power their SnapGPT product through automated creation of these complex DSL artifacts from human language.

When customers create DSL objects with LLMs, the resulting DSL is either an exact replica or a derivative of the existing interface data and schema that form the contract between the UI and the business logic in the backing service. This pattern is particularly popular with independent software vendors (ISVs) and software as a service (SaaS) ISVs because of their unique way of representing configurations through code and their desire to simplify the user experience for their customers. Example use cases include:

The most straightforward way to build and scale text-to-pipeline applications with LLMs on AWS is to use Amazon Bedrock, a fully managed service for building and scaling generative AI applications with foundation models (FMs). It offers access to a choice of high-performing FMs from leading AI companies through a single API, along with a broad set of capabilities you need to build generative AI applications with privacy and security. Anthropic, an AI safety and research lab that builds reliable, interpretable, and steerable AI systems, is one of the leading AI companies that offers access to its state-of-the-art LLM, Claude, on Amazon Bedrock. Claude excels at a wide range of tasks, including thoughtful dialogue, content creation, complex reasoning, creativity, and coding. Anthropic offers both Claude and Claude Instant models, all of which are available through Amazon Bedrock. Claude has quickly gained popularity in these text-to-pipeline applications because of its improved reasoning ability, which allows it to excel at ambiguous technical problem solving. Claude 2 on Amazon Bedrock supports a 100,000-token context window, which is equivalent to about 200 pages of English text. This is particularly important when building text-to-pipeline applications that require complex reasoning, detailed instructions, and comprehensive examples.

SnapLogic background

SnapLogic is an AWS customer on a mission to bring enterprise automation to the world. The SnapLogic Intelligent Integration Platform (IIP) enables organizations to realize enterprise-wide automation by connecting their entire ecosystem of applications, databases, big data, machines and devices, APIs, and more with pre-built, intelligent connectors called Snaps. SnapLogic recently released a feature called SnapGPT, which provides a text interface where you can describe the integration pipeline you want to create in simple human language. SnapGPT uses Anthropic’s Claude model through Amazon Bedrock to automate the creation of these integration pipelines as code, which are then used through SnapLogic’s flagship integration solution. However, SnapLogic’s journey to SnapGPT is the culmination of many years operating in the AI space.

SnapLogic’s AI journey

In the realm of integration platforms, SnapLogic has consistently been at the forefront, harnessing the transformative power of artificial intelligence. Over the years, the company’s commitment to innovating with AI has become evident, especially when we trace the journey from Iris to AutoLink.

The humble beginnings with Iris

In 2017, SnapLogic unveiled Iris, an industry-first AI-powered integration assistant. Iris was designed to use machine learning (ML) algorithms to predict the next steps in building a data pipeline. By analyzing millions of metadata elements and data flows, Iris could make intelligent suggestions to users, democratizing data integration and allowing even those without a deep technical background to create complex workflows.

AutoLink: Building momentum

Building on the success and learnings from Iris, SnapLogic introduced AutoLink, a feature aimed at further simplifying the data mapping process. The tedious task of manually mapping fields between source and target systems became a breeze with AutoLink. Using AI, AutoLink automatically identified and suggested potential matches. Integrations that once took hours could be run in mere minutes.

The generative leap with SnapGPT

SnapLogic’s latest foray into AI brings us SnapGPT, which aims to revolutionize integration even further. With SnapGPT, SnapLogic introduces the world’s first generative integration solution. This is not just about simplifying existing processes, but about entirely reimagining how integrations are designed. Generative AI can create entire integration pipelines from scratch, optimizing the workflow based on the desired outcome and data characteristics.

SnapGPT is extremely impactful to SnapLogic’s customers because they are able to drastically decrease the amount of time required to generate their first SnapLogic pipeline. Traditionally, SnapLogic customers would need to spend days or weeks configuring integration pipelines from scratch. Now, these customers are able to simply ask SnapGPT to, for example, “create a pipeline which will move all of my active SFDC customers to WorkDay.” A working first draft of a pipeline is automatically created for this customer, drastically cutting down the development time required for creation of the base of their integration pipeline. This allows the end customer to spend more time focusing on what has true business impact to them instead of working on configurations of an integration pipeline. The following example shows how a SnapLogic customer can enter a description into the SnapGPT feature to quickly generate a pipeline, using natural language.

AWS and SnapLogic have collaborated closely throughout this product build and have learned a lot along the way. The rest of this post will focus on the technical learnings AWS and SnapLogic have had around using LLMs for text-to-pipeline applications.

Solution overview

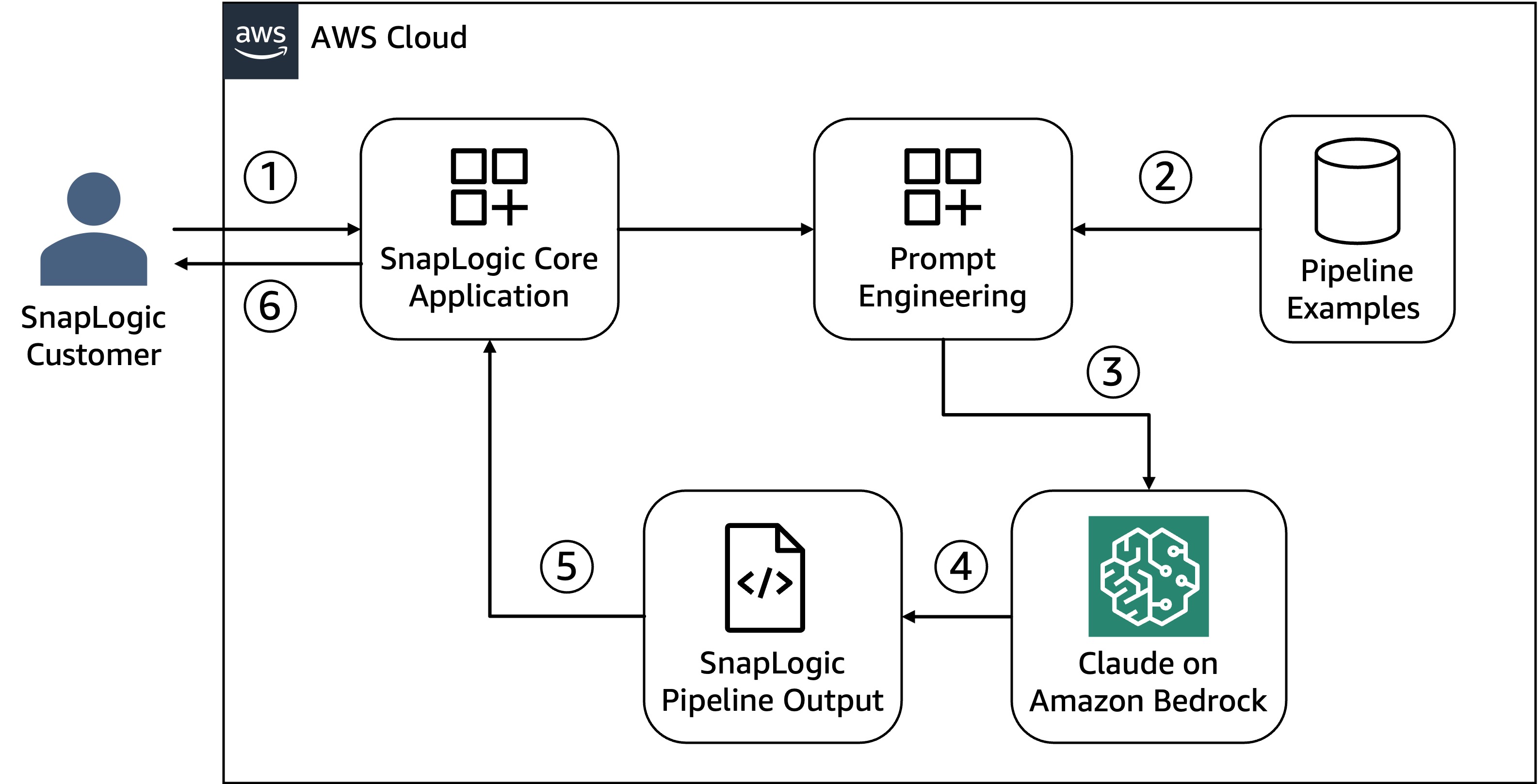

To solve this text-to-pipeline problem, AWS and SnapLogic designed a comprehensive solution shown in the following architecture.

A request to SnapGPT goes through the following workflow:

- A user submits a description to the application.

- SnapLogic uses a Retrieval Augmented Generation (RAG) approach to retrieve relevant examples of SnapLogic pipelines that are similar to the user’s request.

- These extracted relevant examples are combined with the user input and go through some text preprocessing before they’re sent to Claude on Amazon Bedrock.

- Claude produces a JSON artifact that represents a SnapLogic pipeline.

- The JSON artifact is integrated directly into the core SnapLogic integration platform.

- The SnapLogic pipeline is rendered to the user in a visually friendly manner.
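
The workflow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not SnapLogic's implementation: the word-overlap ranking is a toy stand-in for a real RAG retriever, and `retrieve_examples`, `build_prompt`, `generate_pipeline`, and the `complete` callable (any function that sends a prompt to Claude on Amazon Bedrock and returns the completion text) are hypothetical names.

```python
import json
from typing import Callable


def retrieve_examples(request: str, corpus: list[dict], k: int = 2) -> list[dict]:
    """Rank stored examples by word overlap with the request (toy stand-in for RAG)."""
    words = set(request.lower().split())

    def overlap(example: dict) -> int:
        return len(words & set(example["request"].lower().split()))

    return sorted(corpus, key=overlap, reverse=True)[:k]


def build_prompt(request: str, examples: list[dict]) -> str:
    """Combine the retrieved examples with the user input into one prompt."""
    shots = "\n\n".join(
        f"Request: {ex['request']}\nPipeline: {json.dumps(ex['pipeline'])}"
        for ex in examples
    )
    return (
        "\n\nHuman: Here are examples of requests and their pipelines:\n\n"
        f"{shots}\n\n"
        f"Here is your task: {request}"
        "\n\nAssistant:"
    )


def generate_pipeline(
    request: str, corpus: list[dict], complete: Callable[[str], str]
) -> dict:
    """Run retrieval, prompt assembly, generation, and JSON parsing in order."""
    prompt = build_prompt(request, retrieve_examples(request, corpus))
    return json.loads(complete(prompt))
```

Passing the model call in as `complete` keeps the retrieval and prompt-assembly steps testable without network access; in a real deployment that callable would wrap the Amazon Bedrock request.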

Through experimentation, AWS and SnapLogic have found the prompt engineering step of the solution diagram to be extremely important for generating high-quality text-to-pipeline outputs. The next section goes further into some specific techniques used with Claude in this space.

Prompt experimentation

Throughout the development phase of SnapGPT, AWS and SnapLogic found that rapid iteration on the prompts sent to Claude was critical to improving the accuracy and relevancy of SnapLogic’s text-to-pipeline outputs. By using Amazon SageMaker Studio interactive notebooks, the AWS and SnapLogic team was able to quickly work through different versions of prompts by using the Boto3 SDK connection to Amazon Bedrock. Notebook-based development allowed the teams to quickly create client-side connections to Amazon Bedrock, include text-based descriptions alongside Python code for sending prompts to Amazon Bedrock, and hold joint prompt engineering sessions where iterations were made quickly between multiple personas.

Anthropic Claude prompt engineering methods

In this section, we describe some of the iterative techniques we used to create a high-performing prompt based on an illustrative user request: “Make a pipeline which uses the ExampleCompany database which retrieves all active customers.” Note that this example is not the schema that SnapGPT is powered by, and is only used to illustrate a text-to-pipeline application.

To baseline our prompt engineering, we use the following original prompt:

Make a pipeline which uses the ExampleCompany database which retrieves all active customers

The expected output is as follows:

{ "database": "ExampleCompany", "query": "SELECT * FROM ec_prod.customers WHERE status = 'active'" }

Improvement #1: Using the Human and Assistant annotations

Claude’s training procedure teaches the FM to understand dialogue between a human and an assistant in its prompt structure. Claude users can take advantage of this structure by ending their prompt in Assistant:, which will trigger Claude to start generating the response to a query based on what the human has said. Note that because Claude will continue to generate text until it is told to stop, make sure you use a stop sequence of \n\nHuman: in the API request to Amazon Bedrock when using Claude. This will ensure you only receive the response from the assistant and not the predicted next response from the human in the dialogue chain. These \n\nHuman and \n\nAssistant indicators not only help in limiting Claude’s response to relevant information, but also help in providing clear demarcation between primary human inputs and assistant responses from other interactions. This especially helps in a few-shot prompt setting where you can have multiple queries and responses with queries tagged with \n\nHuman and previous responses tagged with \n\nAssistant. Let’s look at the improved prompt and associated illustrative API call to Amazon Bedrock via the Boto3 SDK.

We use the following prompt:

Human: Make a pipeline which uses the ExampleCompany database which retrieves all active customers

Assistant:
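
A minimal sketch of how this prompt could be sent through the Boto3 SDK follows. The helper names `build_request_body` and `ask_claude`, the `us-east-1` Region, and the 512-token limit are assumptions made for illustration; the model ID, the `\n\nHuman:` stop sequence, and the Human/Assistant framing come from this post.

```python
import json

STOP_SEQUENCE = "\n\nHuman:"


def build_request_body(user_request: str, max_tokens: int = 512) -> str:
    """Wrap the request in Human/Assistant turns and add the stop sequence."""
    prompt = f"\n\nHuman: {user_request}\n\nAssistant:"
    return json.dumps(
        {
            "prompt": prompt,
            # Required by Claude's text completion API; 512 is an illustrative value.
            "max_tokens_to_sample": max_tokens,
            "stop_sequences": [STOP_SEQUENCE],
        }
    )


def ask_claude(user_request: str, region: str = "us-east-1") -> str:
    """Send the prompt to Claude on Amazon Bedrock and return the completion text."""
    import boto3  # imported here so the body builder works without AWS dependencies

    bedrock = boto3.client("bedrock-runtime", region_name=region)
    response = bedrock.invoke_model(
        modelId="anthropic.claude-v2",
        contentType="application/json",
        accept="application/json",
        body=build_request_body(user_request),
    )
    return json.loads(response["body"].read())["completion"]
```

Calling `ask_claude("Make a pipeline which uses the ExampleCompany database which retrieves all active customers")` requires AWS credentials with access to the model.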

The output is as follows:

Here is a MongoDB pipeline to retrieve all active customers from the ExampleCompany database:

pipeline = [ { '$match': { 'database': 'ExampleCompany', 'status': 'active' } } ]

db.customers.aggregate(pipeline)

The key steps are:

– `$match` to filter the documents to only those in the ExampleCompany database and with status equal to “active”.

– `db.customers.aggregate()` runs the pipeline on the customers collection and returns the filtered results.

So this will return all customer documents from the ExampleCompany database where the status field is set to “active”.

Notice how in the output, Claude makes up an answer to our question without any context. The answer is potentially helpful, but it’s not specific to the problem we’re trying to solve because it is not provided with enough context. It’s also important to note that Anthropic requires this human and assistant format when using the Claude models; if you don’t include this step, Amazon Bedrock will return an error.

Improvement #2: Include hyper-specific context for Claude before it’s told what to do

Claude performs best when it’s given good context about what it needs to accomplish as well as how it is supposed to accomplish that task. Here are a few examples of things to include as context for Claude before you tell it the task:

- Describe the job function that you want Claude to fulfill. By doing this, you are making Claude think in the role of someone working in that job, or more like someone just being hired in that specific job role. This will help Claude formulate the answer from that specific job role’s perspective, reducing hallucination and randomness in the answer.

- Include technical details regarding the output format. Foundation models are generally good at following instructions, so asking Claude to generate the output in a specific format or schema with one or a few examples will help Claude provide the answer in the right format, reducing the need for filtering and postprocessing of the output. The example in the following prompt shows a fictitious schema that matches the expected output.

Let’s combine these suggestions to improve upon our original prompt:

Human: Your job is to act as an expert on ETL pipelines. Specifically, your job is to create a JSON representation of an ETL pipeline which will solve the user request provided to you.

The JSON output should follow the following format:

Here is your task: make a pipeline which uses the ExampleCompany database which retrieves all active customers

Assistant:

The output is as follows:

Here is a JSON representation of an ETL pipeline to retrieve all active customers from the ExampleCompany database:

This pipeline uses the ExampleCompany database and a SQL query to select all records from the customers table where the status is ‘active’.

With the addition of context, the model is now able to get most of the way to our answer. It now knows how to structure the output and it understands that it needs to write a SQL statement to solve the task. However, you will notice that the model doesn’t correctly use the ec_prod.customers nomenclature we are expecting. Room for improvement! Also note, in practice, you will need to include significantly more context than what we provided in this example for high-quality results in text-to-pipeline use cases.
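
The structure of this prompt (role first, output format second, task last) can be captured in a small helper. This is a sketch only: `build_context_prompt` is a hypothetical name, and `OUTPUT_FORMAT` is an assumed placeholder schema whose two keys simply mirror the expected output shown earlier in this post.

```python
import json

# Assumed placeholder schema; the keys mirror the expected output in this post.
OUTPUT_FORMAT = {
    "database": "name of the database to use",
    "query": "SQL statement that solves the task",
}


def build_context_prompt(task: str, role: str = "an expert on ETL pipelines") -> str:
    """Put the job description and output format before the task itself."""
    return (
        f"\n\nHuman: Your job is to act as {role}. "
        "Specifically, your job is to create a JSON representation of an ETL "
        "pipeline which will solve the user request provided to you.\n\n"
        "The JSON output should follow the following format:\n\n"
        f"{json.dumps(OUTPUT_FORMAT, indent=2)}\n\n"
        f"Here is your task: {task}"
        "\n\nAssistant:"
    )
```

Keeping the role and format as parameters makes it easy to vary one piece of context at a time while iterating on prompts.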

Improvement #3: Enclosing examples in XML tags

Few-shot learning is a popular way to increase accuracy with FMs when you have relevant examples to guide the FM in its task. This is particularly important in text-to-pipeline applications because these hyper-specific pipeline outputs are usually not included in any public dataset; they are specific to an individual customer’s data representation. When using Claude, you can take advantage of its innate knowledge of arbitrary XML tags to provide these examples in a few-shot format. In the following improved prompt, notice the addition of examples enclosed in <example> XML tags. You can also abbreviate human and assistant as H and A to provide context to Claude without making it think these were specific pieces of the dialogue that have already occurred.

We use the following prompt:

Human: Your job is to act as an expert on ETL pipelines. Specifically, your job is to create a JSON representation of an ETL pipeline which will solve the user request provided to you.

The JSON output should follow the following format:

Here are some examples of user requests and associated output JSON artifacts which correctly solve the task provided. Make sure to use the same nomenclature which is provided in the examples if you are using the same databases.

<example-1>

H: query the ExampleCompany database for any active orders for customer #1234

A:

The reasoning for this query is that the customer #1234 is filtered and we are searching the “orders” table for any existing records after the “active” filter.

</example-1>

<example-2>

H: remove all customers in the ExampleCompany database which have not been active in the last month

A:

The reasoning for this query is that the “ec_prod.customers” table is the only table in ExampleCompany database which contains customer records.

</example-2>

Here is your task: make a pipeline which uses the ExampleCompany database which retrieves all active customers.

Assistant:

The output is as follows:

Here is the JSON representation for an ETL pipeline to retrieve all active customers from the ExampleCompany database:

The reasoning is:

The ExampleCompany database is specified as the database to use

The ec_prod.customers table contains the customer data

The status field indicates if a customer is active so the query filters for only active customers

This should retrieve all active customer records from the ExampleCompany database. Let me know if you need any clarification or have additional requirements for the ETL pipeline!

The JSON output now has the correct database nomenclature, which Claude was able to use because of the examples that we provided. Coupling human and assistant indicators with XML tags makes it easier for Claude to understand what the task is and what the answer was in similar examples, with very clear separation between multiple few-shot examples. The easier it is for Claude to understand, the better and more relevant the answer will be, further reducing the chance that the model hallucinates and provides random, irrelevant answers.
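
When the examples come from a retrieval step rather than being typed by hand, the tagged few-shot section can be generated programmatically. The following is a minimal sketch; `format_examples` and the (request, pipeline, reasoning) tuple layout are assumptions for illustration, not part of SnapGPT.

```python
import json


def format_examples(examples: list[tuple[str, dict, str]]) -> str:
    """Render (request, pipeline, reasoning) triples as numbered <example-N> blocks."""
    blocks = []
    for i, (request, pipeline, reasoning) in enumerate(examples, start=1):
        blocks.append(
            f"<example-{i}>\n\n"
            f"H: {request}\n\n"        # abbreviated so it is not read as a real turn
            f"A: {json.dumps(pipeline)}\n\n"
            f"{reasoning}\n\n"
            f"</example-{i}>"
        )
    return "\n\n".join(blocks)
```

The returned string can be placed between the output-format description and the task line of the prompt.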

Improvement #4: Triggering Claude to begin JSON generation with XML tags

A small challenge with text-to-pipeline applications using FMs is the need to exactly parse an output from the resulting text so it can be interpreted as code in a downstream application. One way to solve this with Claude is to take advantage of its XML tag understanding and combine this with a custom stop sequence. In the following prompt, we have instructed Claude to enclose the output in <json></json> XML tags. Then, we have added the <json> tag to the end of the prompt. This ensures that the first text that comes out of Claude will be the start of the JSON output. If you don’t do this, Claude often responds with some conversational text, then the true code response. By instructing Claude to immediately start generating the output, you can easily stop generation when you see the closing </json> tag. This is shown in the updated Boto3 API call. The benefits of this technique are twofold. First, you are able to exactly parse the code response from Claude. Second, you are able to reduce cost on Amazon Bedrock, where you are charged for each output token an FM produces, because Claude generates only the code output and no extra text.

We use the following prompt:

Human: Your job is to act as an expert on ETL pipelines. Specifically, your job is to create a JSON representation of an ETL pipeline which will solve the user request provided to you.

The JSON output should follow the following format:

Here are some examples of user requests and associated output JSON artifacts which correctly solve the task provided. Make sure to use the same nomenclature which is provided in the examples if you are using the same databases.

<example-1>

H: query the ExampleCompany database for any active orders for customer #1234

A:

<json>

</json>

The reasoning for this query is that the customer #1234 is filtered and we are searching the “orders” table for any existing records after the “active” filter.

</example-1>

<example-2>

H: remove all customers in the ExampleCompany database which have not been active in the last month

A:

<json>

</json>

The reasoning for this query is that the “ec_prod.customers” table is the only table in ExampleCompany database which contains customer records.

</example-2>

Always remember to enclose your JSON outputs in <json></json> tags.

Here is your task: make a pipeline which uses the ExampleCompany database which retrieves all active customers.

Assistant:

<json>

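We use the following code:

import json

import boto3

bedrock = boto3.client("bedrock-runtime")  # Amazon Bedrock runtime client

body = json.dumps({"prompt": prompt, "max_tokens_to_sample": 1024, "stop_sequences": ["\n\nHuman:", "</json>"]})  # max_tokens_to_sample is required; 1024 is an illustrative value

response = bedrock.invoke_model(body=body, modelId="anthropic.claude-v2")

The output is as follows: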

{ "database": "ExampleCompany", "query": "SELECT * FROM ec_prod.customers WHERE status = 'active'" }

Now we have arrived at the expected output with only the JSON object returned! By using this method, we are able to generate an immediately usable technical artifact as well as reduce the cost of generation by reducing output tokens.
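
Because generation starts right after the <json> tag and stops before </json>, the completion can be handed straight to a JSON parser. The following sketch shows one way to do that defensively; `parse_pipeline` and `REQUIRED_KEYS` are hypothetical names, and the two required keys are those of the illustrative schema in this post rather than SnapLogic's real pipeline schema.

```python
import json

REQUIRED_KEYS = {"database", "query"}  # keys of the illustrative schema in this post


def parse_pipeline(completion: str) -> dict:
    """Parse a completion generated after a '<json>' prefill."""
    # Defensive: drop a closing tag and anything after it, in case the
    # '</json>' stop sequence was not applied to this request.
    text = completion.split("</json>")[0].strip()
    pipeline = json.loads(text)  # raises json.JSONDecodeError on malformed output
    if not isinstance(pipeline, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(pipeline)
    if missing:
        raise ValueError(f"pipeline is missing keys: {sorted(missing)}")
    return pipeline
```

Validating the keys before passing the artifact downstream gives the application a clear point at which to retry or report an error.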

Conclusion

To get started today with SnapGPT, request a free trial of SnapLogic or request a demo of the product. If you would like to use these concepts for building applications today, we recommend experimenting hands-on with the prompt engineering section in this post, using the same flow on a different DSL generation use case that suits your business, and diving deeper into the RAG features that are available through Amazon Bedrock.

SnapLogic and AWS have been able to partner effectively to build an advanced translator between human language and the complex schema of SnapLogic integration pipelines powered by Amazon Bedrock. Throughout this journey, we have seen how the output generated with Claude can be improved in text-to-pipeline applications using specific prompt engineering techniques. AWS and SnapLogic are excited to continue this partnership in generative AI and look forward to future collaboration and innovation in this fast-moving space.

About the Authors

Greg Benson is a Professor of Computer Science at the University of San Francisco and Chief Scientist at SnapLogic. He joined the USF Department of Computer Science in 1998 and has taught undergraduate and graduate courses including operating systems, computer architecture, programming languages, distributed systems, and introductory programming. Greg has published research in the areas of operating systems, parallel computing, and distributed systems. Since joining SnapLogic in 2010, Greg has helped design and implement several key platform features including cluster processing, big data processing, the cloud architecture, and machine learning. He is currently working on generative AI for data integration.

Aaron Kesler is the Senior Product Manager for AI products and services at SnapLogic. Aaron applies over ten years of product management expertise to pioneer AI/ML product development and evangelize services across the organization. He is the author of the upcoming book “What’s Your Problem?” aimed at guiding new product managers through the product management career. His entrepreneurial journey began with his college startup, STAK, which was later acquired by Carvertise, with Aaron contributing significantly to their recognition as Tech Startup of the Year 2015 in Delaware. Beyond his professional pursuits, Aaron finds joy in golfing with his father, exploring new cultures and foods on his travels, and practicing the ukulele.

Rich Dill is a Principal Solutions Architect with experience cutting broadly across multiple areas of specialization. A track record of success spanning multi-platform enterprise software and SaaS. Well known for turning customer advocacy (serving as the voice of the customer) into revenue-generating new features and products. Proven ability to drive cutting-edge products to market and projects to completion on schedule and under budget in fast-paced onshore and offshore environments. A simple way to describe me: the mind of a scientist, the heart of an explorer and the soul of an artist.

Clay Elmore is an AI/ML Specialist Solutions Architect at AWS. After spending many hours in a materials research lab, his background in chemical engineering was quickly left behind to pursue his interest in machine learning. He has worked on ML applications in many different industries ranging from energy trading to hospitality marketing. Clay’s current work at AWS centers around helping customers bring software development practices to ML and generative AI workloads, allowing customers to build repeatable, scalable solutions in these complex environments. In his spare time, Clay enjoys skiing, solving Rubik’s cubes, reading, and cooking.

Sina Sojoodi is a technology executive, systems engineer, product leader, ex-founder and startup advisor. He joined AWS in March 2021 as a Principal Solutions Architect. Sina is currently the US-West ISV area lead Solutions Architect. He works with SaaS and B2B software companies to build and grow their businesses on AWS. Previous to his role at Amazon, Sina was a technology executive at VMware, and Pivotal Software (IPO in 2018, VMware M&A in 2020) and served multiple leadership roles including founding engineer at Xtreme Labs (Pivotal acquisition in 2013). Sina has dedicated the past 15 years of his work experience to building software platforms and practices for enterprises, software businesses and the public sector. He is an industry leader with a passion for innovation. Sina holds a BA from the University of Waterloo where he studied Electrical Engineering and Psychology.

Sandeep Rohilla is a Senior Solutions Architect at AWS, supporting ISV customers in the US West region. He focuses on helping customers architect solutions leveraging containers and generative AI on the AWS cloud. Sandeep is passionate about understanding customers’ business problems and helping them achieve their goals through technology. He joined AWS after working more than a decade as a solutions architect, bringing his 17 years of experience to bear. Sandeep holds an MSc. in Software Engineering from the University of the West of England in Bristol, UK.

Dr. Farooq Sabir is a Senior Artificial Intelligence and Machine Learning Specialist Solutions Architect at AWS. He holds PhD and MS degrees in Electrical Engineering from the University of Texas at Austin and an MS in Computer Science from Georgia Institute of Technology. He has over 15 years of work experience and also likes to teach and mentor college students. At AWS, he helps customers formulate and solve their business problems in data science, machine learning, computer vision, artificial intelligence, numerical optimization, and related domains. Based in Dallas, Texas, he and his family love to travel and go on long road trips.
